“Deep inertial poser: learning to reconstruct human pose from sparse inertial measurements in real time”

Conference:

Type(s):

Title:

- Deep inertial poser: learning to reconstruct human pose from sparse inertial measurements in real time

Session/Category Title:

- How people look and move

Presenter(s)/Author(s):

Moderator(s):

Abstract:

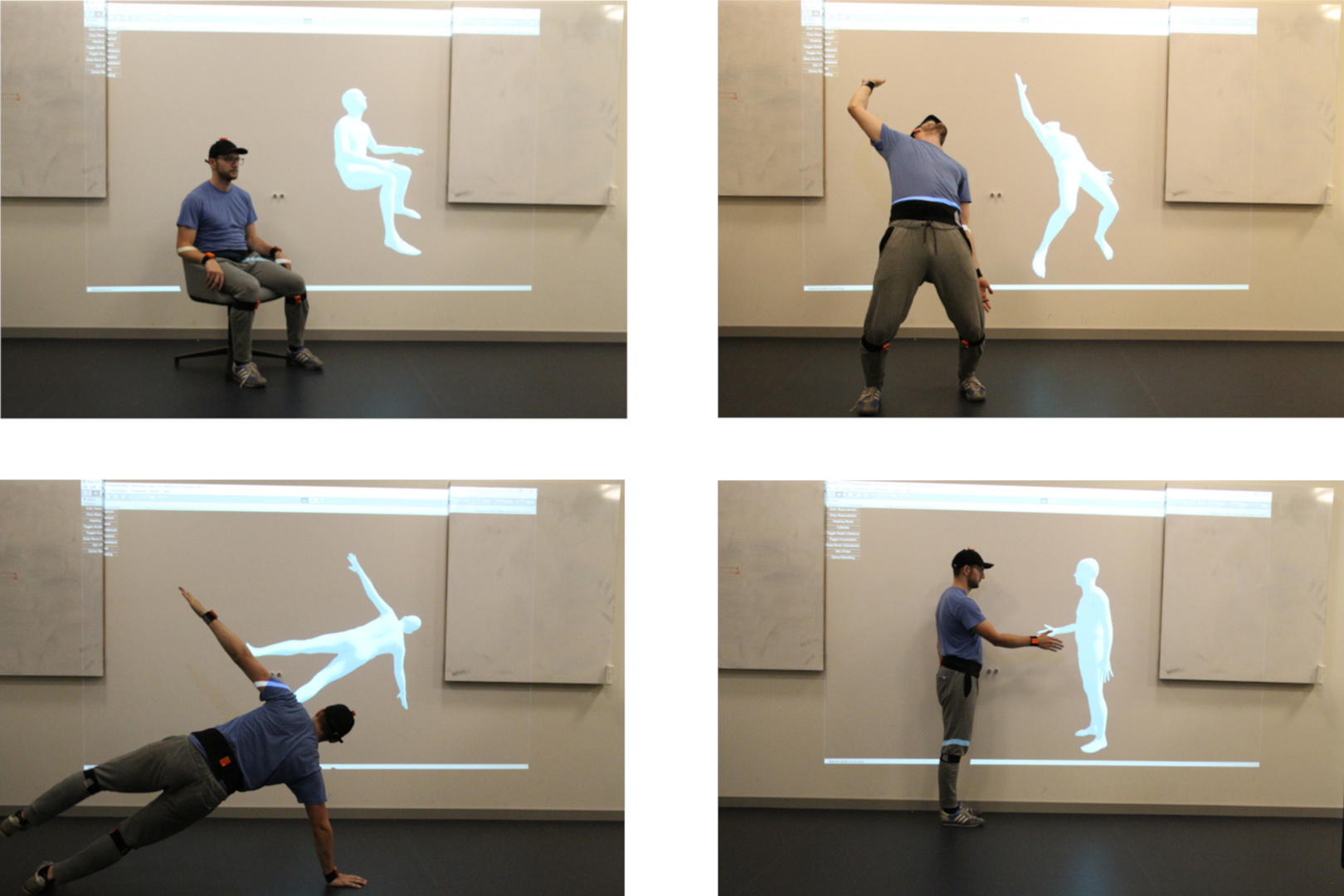

We demonstrate a novel deep neural network capable of reconstructing human full body pose in real-time from 6 Inertial Measurement Units (IMUs) worn on the user’s body. In doing so, we address several difficult challenges. First, the problem is severely under-constrained as multiple pose parameters produce the same IMU orientations. Second, capturing IMU data in conjunction with ground-truth poses is expensive and difficult to do in many target application scenarios (e.g., outdoors). Third, modeling temporal dependencies through non-linear optimization has proven effective in prior work but makes real-time prediction infeasible. To address this important limitation, we learn the temporal pose priors using deep learning. To learn from sufficient data, we synthesize IMU data from motion capture datasets. A bi-directional RNN architecture leverages past and future information that is available at training time. At test time, we deploy the network in a sliding window fashion, retaining real time capabilities. To evaluate our method, we recorded DIP-IMU, a dataset consisting of 10 subjects wearing 17 IMUs for validation in 64 sequences with 330 000 time instants; this constitutes the largest IMU dataset publicly available. We quantitatively evaluate our approach on multiple datasets and show results from a real-time implementation. DIP-IMU and the code are available for research purposes.1

References:

1. 2018. Unifying Motion Capture Datasets by Automatically Solving for Full-body Shape and Motion. In preparation (2018).Google Scholar

2. Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S. Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, Sanjay Ghemawat, Ian Goodfellow, Andrew Harp, Geoffrey Irving, Michael Isard, Yangqing Jia, Rafal Jozefowicz, Lukasz Kaiser, Manjunath Kudlur, Josh Levenberg, Dandelion Mané, Rajat Monga, Sherry Moore, Derek Murray, Chris Olah, Mike Schuster, Jonathon Shlens, Benoit Steiner, Ilya Sutskever, Kunal Talwar, Paul Tucker, Vincent Vanhoucke, Vijay Vasudevan, Fernanda Viégas, Oriol Vinyals, Pete Warden, Martin Wattenberg, Martin Wicke, Yuan Yu, and Xiaoqiang Zheng. 2015. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. (2015). https://www.tensorflow.org/Software available from tensorflow.org.Google Scholar

3. Ijaz Akhter and Michael J Black. 2015. Pose-conditioned joint angle limits for 3D human pose reconstruction. In Proceedings of the IEEE Conf. on Computer Vision and Pattern Recognition. IEEE, 1446–1455.Google ScholarCross Ref

4. Thiemo Alldieck, Marcus Magnor, Weipeng Xu, Christian Theobalt, and Gerard Pons-Moll. 2018. Video Based Reconstruction of 3D People Models. In IEEE Conference on Computer Vision and Pattern Recognition. CVPR Spotlight.Google ScholarCross Ref

5. Sheldon Andrews, Ivan Huerta, Taku Komura, Leonid Sigal, and Kenny Mitchell. 2016. Real-time Physics-based Motion Capture with Sparse Sensors. In Proceedings of the 13th European Conference on Visual Media Production (CVMP 2016). ACM, 5. Google ScholarDigital Library

6. Luca Ballan, Aparna Taneja, Jürgen Gall, Luc Van Gool, and Marc Pollefeys. 2012. Motion capture of hands in action using discriminative salient points. Computer Vision-ECCV 2012 (2012), 640–653. Google ScholarDigital Library

7. Federica Bogo, Angjoo Kanazawa, Christoph Lassner, Peter Gehler, Javier Romero, and Michael J. Black. 2016. Keep it SMPL: Automatic Estimation of 3D Human Pose and Shape from a Single Image. In Computer Vision – ECCV 2016 (Lecture Notes in Computer Science). Springer International Publishing, 561–578.Google Scholar

8. Christoph Bregler and Jitendra Malik. 1998. Tracking people with twists and exponential maps. In Computer Vision and Pattern Recognition, 1998. Proceedings. 1998 IEEE Computer Society Conference on. IEEE, 8–15. Google ScholarDigital Library

9. Zhe Cao, Tomas Simon, Shih-En Wei, and Yaser Sheikh. 2016. Realtime multi-person 2d pose estimation using part affinity fields. arXiv preprint arXiv:1611.08050 (2016).Google Scholar

10. Jinxiang Chai and Jessica K Hodgins. 2005. Performance animation from low-dimensional control signals. In ACM Transactions on Graphics (TOG), Vol. 24. ACM, 686–696. Google ScholarDigital Library

11. Xianjie Chen and Alan L Yuille. 2014. Articulated pose estimation by a graphical model with image dependent pairwise relations. In NIPS. 1736–1744. Google ScholarDigital Library

12. Alvaro Collet, Ming Chuang, Pat Sweeney, Don Gillett, Dennis Evseev, David Calabrese, Hugues Hoppe, Adam Kirk, and Steve Sullivan. 2015. High-quality streamable free-viewpoint video. ACM Transactions on Graphics 34, 4 (2015), 69. Google ScholarDigital Library

13. Edilson de Aguiar, Carsten Stoll, Christian Theobalt, Naveed Ahmed, Hans-Peter Seidel, and Sebastian Thrun. 2008. Performance Capture from Sparse Multi-view Video. In ACM SIGGRAPH 2008 Papers (SIGGRAPH ’08). ACM, New York, NY, USA, Article 98, 10 pages. Google ScholarDigital Library

14. Fernando De la Torre, Jessica Hodgins, Adam Bargteil, Xavier Martin, Justin Macey, Alex Collado, and Pep Beltran. 2008. Guide to the carnegie mellon university multimodal activity (cmu-mmac) database. Robotics Institute (2008), 135.Google Scholar

15. Mingsong Dou, Sameh Khamis, Yury Degtyarev, Philip Davidson, Sean Ryan Fanello, Adarsh Kowdle, Sergio Orts Escolano, Christoph Rhemann, David Kim, Jonathan Taylor, Pushmeet Kohli, Vladimir Tankovich, and Shahram Izadi. 2016. Fusion4D: Real-time Performance Capture of Challenging Scenes. ACM Trans. Graph. 35, 4, Article 114 (July 2016), 13 pages. Google ScholarDigital Library

16. Ahmed Elhayek, Edilson de Aguiar, Arjun Jain, J Thompson, Leonid Pishchulin, Mykhaylo Andriluka, Christoph Bregler, Bernt Schiele, and Christian Theobalt. 2017. MARCOnI-ConvNet-Based MARker-Less Motion Capture in Outdoor and Indoor Scenes. IEEE transactions on pattern analysis and machine intelligence 39, 3 (2017), 501–514. Google ScholarDigital Library

17. Katerina Fragkiadaki, Sergey Levine, Panna Felsen, and Jitendra Malik. 2015. Recurrent network models for human dynamics. In Computer Vision (ICCV), 2015 IEEE International Conference on. IEEE, 4346–4354. Google ScholarDigital Library

18. Varun Ganapathi, Christian Plagemann, Daphne Koller, and Sebastian Thrun. 2012. Real-time human pose tracking from range data. In European conference on computer vision. Springer, 738–751. Google ScholarDigital Library

19. Partha Ghosh, Jie Song, Emre Aksan, and Otmar Hilliges. 2017. Learning Human Motion Models for Long-term Predictions. arXiv preprint arXiv:1704.02827 (2017).Google Scholar

20. Julius Hannink, Thomas Kautz, Cristian Pasluosta, Karl-Gunter Gassmann, Jochen Klucken, and Bjoern Eskofier. 2016. Sensor-based Gait Parameter Extraction with Deep Convolutional Neural Networks. IEEE Journal of Biomedical and Health Informatics (2016), 85–93.Google Scholar

21. Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. 2017. Mask r-cnn. In Computer Vision (ICCV), 2017 IEEE International Conference on. IEEE, 2980–2988.Google ScholarCross Ref

22. Thomas Helten, Meinard Muller, Hans-Peter Seidel, and Christian Theobalt. 2013. Real-time body tracking with one depth camera and inertial sensors. In Proceedings of the IEEE International Conference on Computer Vision. 1105–1112. Google ScholarDigital Library

23. Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural computation 9, 8 (1997), 1735–1780. Google ScholarDigital Library

24. Daniel Holden, Taku Komura, and Jun Saito. 2017. Phase-functioned neural networks for character control. ACM Transactions on Graphics (TOG) 36, 4 (2017), 42. Google ScholarDigital Library

25. Yinghao Huang, Federica Bogo, Christoph Lassner, Angjoo Kanazawa, Peter V. Gehler, Javier Romero, Ijaz Akhter, and Michael J. Black. 2017. Towards Accurate Marker-less Human Shape and Pose Estimation over Time. In International Conference on 3D Vision (3DV). 421–430.Google Scholar

26. Catalin Ionescu, Dragos Papava, Vlad Olaru, and Cristian Sminchisescu. 2014. Human3.6M: Large Scale Datasets and Predictive Methods for 3D Human Sensing in Natural Environments. IEEE Transactions on Pattern Analysis and Machine Intelligence 36, 7 (jul 2014), 1325–1339. Google ScholarDigital Library

27. Angjoo Kanazawa, Michael J. Black, David W. Jacobs, and Jitendra Malik. 2018. End-to-end Recovery of Human Shape and Pose. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE Computer Society.Google Scholar

28. Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).Google Scholar

29. Huajun Liu, Xiaolin Wei, Jinxiang Chai, Inwoo Ha, and Taehyun Rhee. 2011. Realtime human motion control with a small number of inertial sensors. In Symposium on Interactive 3D Graphics and Games. ACM, 133–140. Google ScholarDigital Library

30. Matthew Loper, Naureen Mahmood, and Michael J Black. 2014. MoSh: Motion and shape capture from sparse markers. ACM Transactions on Graphics (TOG) 33, 6 (2014), 220. Google ScholarDigital Library

31. Matthew Loper, Naureen Mahmood, Javier Romero, Gerard Pons-Moll, and Michael J Black. 2015. SMPL: A skinned multi-person linear model. ACM Transactions on Graphics (TOG) 34, 6 (2015), 248. Google ScholarDigital Library

32. Charles Malleson, Marco Volino, Andrew Gilbert, Matthew Trumble, John Collomosse, and Adrian Hilton. 2017. Real-time Full-Body Motion Capture from Video and IMUs. In 2017 Fifth International Conference on 3D Vision (3DV). 449–457.Google ScholarCross Ref

33. Julieta Martinez, Michael J Black, and Javier Romero. 2017. On human motion prediction using recurrent neural networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 4674–4683.Google ScholarCross Ref

34. Dushyant Mehta, Oleksandr Sotnychenko, Franziska Mueller, Weipeng Xu, Srinath Sridhar, Gerard Pons-Moll, and Christian Theobalt. 2018. Single-Shot Multi-Person 3D Pose Estimation From Monocular RGB. In International Conference on 3D Vision (3DV).Google ScholarCross Ref

35. Dushyant Mehta, Srinath Sridhar, Oleksandr Sotnychenko, Helge Rhodin, Mohammad Shafiei, Hans-Peter Seidel, Weipeng Xu, Dan Casas, and Christian Theobalt. 2017. Vnect: Real-time 3d human pose estimation with a single rgb camera. ACM Transactions on Graphics (TOG) 36, 4 (2017), 44. Google ScholarDigital Library

36. Christos Mousas. 2017. Full-Body Locomotion Reconstruction of Virtual Characters Using a Single Inertial Measurement Unit. Sensors 17, 11 (2017), 2589.Google ScholarCross Ref

37. Richard A Newcombe, Dieter Fox, and Steven M Seitz. 2015. Dynamicfusion: Reconstruction and tracking of non-rigid scenes in real-time. In Proceedings of the IEEE conference on computer vision and pattern recognition. 343–352.Google ScholarCross Ref

38. Alejandro Newell, Kaiyu Yang, and Jia Deng. 2016. Stacked hourglass networks for human pose estimation. In ECCV. 483–499.Google Scholar

39. Iasonas Oikonomidis, Nikolaos Kyriazis, and Antonis A Argyros. 2012. Tracking the articulated motion of two strongly interacting hands. In Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on. IEEE, 1862–1869. Google ScholarDigital Library

40. Mohamed Omran, Christoph Lassner, Gerard Pons-Moll, Peter Gehler, and Bernt Schiele. 2018. Neural Body Fitting: Unifying Deep Learning and Model Based Human Pose and Shape Estimation. In International Conference on 3D Vision (3DV).Google Scholar

41. Xue Bin Peng, Glen Berseth, Kangkang Yin, and Michiel Van De Panne. 2017. DeepLoco: Dynamic Locomotion Skills Using Hierarchical Deep Reinforcement Learning. ACM Trans. Graph. 36, 4, Article 41 (July 2017), 13 pages. Google ScholarDigital Library

42. Gerard Pons-Moll, Andreas Baak, Juergen Gall, Laura Leal-Taixe, Meinard Mueller, Hans-Peter Seidel, and Bodo Rosenhahn. 2011. Outdoor Human Motion Capture using Inverse Kinematics and von Mises-Fisher Sampling. In IEEE International Conference on Computer Vision (ICCV). 1243–1250. Google ScholarDigital Library

43. Gerard Pons-Moll, Andreas Baak, Thomas Helten, Meinard Müller, Hans-Peter Seidel, and Bodo Rosenhahn. 2010. Multisensor-Fusion for 3D Full-Body Human Motion Capture. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

44. Gerard Pons-Moll, Sergi Pujades, Sonny Hu, and Michael Black. 2017. ClothCap: Seamless 4D Clothing Capture and Retargeting. ACM Transactions on Graphics 36, 4 (2017), 73:1–73:15. Google ScholarDigital Library

45. Gerard Pons-Moll, Javier Romero, Naureen Mahmood, and Michael J Black. 2015a. Dyna: A model of dynamic human shape in motion. ACM Transactions on Graphics (TOG) 34, 4 (2015), 120. Google ScholarDigital Library

46. Gerard Pons-Moll, Jonathan Taylor, Jamie Shotton, Aaron Hertzmann, and Andrew Fitzgibbon. 2015b. Metric Regression Forests for Correspondence Estimation. International Journal of Computer Vision (IJCV) (2015), 1–13. Google ScholarDigital Library

47. Helge Rhodin, Christian Richardt, Dan Casas, Eldar Insafutdinov, Mohammad Shafiei, Hans-Peter Seidel, Bernt Schiele, and Christian Theobalt. 2016. EgoCap: egocentric marker-less motion capture with two fisheye cameras. 35, 6 (2016), 162. Google ScholarDigital Library

48. Helge Rhodin, Nadia Robertini, Christian Richardt, Hans-Peter Seidel, and Christian Theobalt. 2015. A versatile scene model with differentiable visibility applied to generative pose estimation. In Proceedings of the IEEE International Conference on Computer Vision. 765–773. Google ScholarDigital Library

49. Daniel Roetenberg, Henk Luinge, and Per Slycke. 2007. Moven: Full 6dof human motion tracking using miniature inertial sensors. Xsen Technologies, December (2007).Google Scholar

50. Nikolaos Sarafianos, Bogdan Boteanu, Bogdan Ionescu, and Ioannis A Kakadiaris. 2016. 3d human pose estimation: A review of the literature and analysis of covariates. Computer Vision and Image Understanding 152 (2016), 1–20. Google ScholarDigital Library

51. Mike Schuster and Kuldip K Paliwal. 1997. Bidirectional recurrent neural networks. IEEE Transactions on Signal Processing 45, 11 (1997), 2673–2681. Google ScholarDigital Library

52. L. Schwarz, D. Mateus, and N. Navab. 2009. Discriminative Human Full-Body Pose Estimation from Wearable Inertial Sensor Data. Modelling the Physiological Human (2009), 159–172. Google ScholarDigital Library

53. Jamie Shotton, Toby Sharp, Alex Kipman, Andrew Fitzgibbon, Mark Finocchio, Andrew Blake, Mat Cook, and Richard Moore. 2013. Real-time human pose recognition in parts from single depth images. Commun. ACM 56, 1 (2013), 116–124. Google ScholarDigital Library

54. L. Sigal, A.O. Balan, and M.J. Black. 2010. Humaneva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. International Journal on Computer Vision (IJCV) 87, 1 (2010), 4–27. Google ScholarDigital Library

55. R. Slyper and J. Hodgins. 2008. Action capture with accelerometers. In Proceedings of the 2008 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’08). Eurographics Association, Aire-la-Ville, Switzerland, Switzerland, 193–199. http://dl.acm.org/citation.cfm?id=1632592.1632620 Google ScholarDigital Library

56. Jonathan Starck and Adrian Hilton. 2003. Model-based multiple view reconstruction of people. In null. IEEE, 915. Google ScholarDigital Library

57. Carsten Stoll, Nils Hasler, Juergen Gall, Hans-Peter Seidel, and Christian Theobalt. 2011. Fast articulated motion tracking using a sums of gaussians body model. In Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 951–958. Google ScholarDigital Library

58. Yu Tao, Zerong Zheng, Kaiwen Guo, Jianhui Zhao, Dai Quionhai, Hao Li, Gerard Pons-Moll, and Yebin Liu. 2018. DoubleFusion: Real-time Capture of Human Performance with Inner Body Shape from a Depth Sensor, In IEEE Conf. on Computer Vision and Pattern Recognition. IEEE Conf. on Computer Vision and Pattern Recognition. CVPR Oral.Google Scholar

59. Jochen Tautges, Arno Zinke, Björn Krüger, Jan Baumann, Andreas Weber, Thomas Helten, Meinard Müller, Hans-Peter Seidel, and Bernd Eberhardt. 2011. Motion reconstruction using sparse accelerometer data. ACM Transactions on Graphics (TOG) 30, 3 (2011), 18. Google ScholarDigital Library

60. Jonathan Taylor, Jamie Shotton, Toby Sharp, and Andrew Fitzgibbon. 2012. The vitruvian manifold: Inferring dense correspondences for one-shot human pose estimation. In Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on. IEEE, 103–110. Google ScholarDigital Library

61. Bugra Tekin, Pablo Márquez-Neila, Mathieu Salzmann, and Pascal Fua. 2016. Fusing 2D Uncertainty and 3D Cues for Monocular Body Pose Estimation. arXiv preprint arXiv:1611.05708 (2016).Google Scholar

62. Jonathan J Tompson, Arjun Jain, Yann LeCun, and Christoph Bregler. 2014. Joint training of a convolutional network and a graphical model for human pose estimation. In NIPS. 1799–1807. Google ScholarDigital Library

63. Alexander Toshev and Christian Szegedy. 2014. Deeppose: Human pose estimation via deep neural networks. In CVPR. 1653–1660. Google ScholarDigital Library

64. Matthew Trumble, Andrew Gilbert, Charles Malleson, Adrian Hilton, and John Collomosse. 2017. Total capture: 3d human pose estimation fusing video and inertial sensors. In Proceedings of 28th British Machine Vision Conference. 1–13.Google ScholarCross Ref

65. Aäron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew W. Senior, and Koray Kavukcuoglu. 2016. WaveNet: A Generative Model for Raw Audio. CoRR abs/1609.03499 (2016). arXiv:1609.03499 http://arxiv.org/abs/1609.03499Google Scholar

66. Daniel Vlasic, Rolf Adelsberger, Giovanni Vannucci, John Barnwell, Markus Gross, Wojciech Matusik, and Jovan Popović. 2007. Practical motion capture in everyday surroundings. ACM Transactions on Graphics (TOG) 26, 3, 35. Google ScholarDigital Library

67. Timo von Marcard, Roberto Henschel, Michael Black, Bodo Rosenhahn, and Gerard Pons-Moll. 2018. Recovering Accurate 3D Human Pose in The Wild Using IMUs and a Moving Camera. In European Conference on Computer Vision (ECCV).Google ScholarDigital Library

68. Timo von Marcard, Gerard Pons-Moll, and Bodo Rosenhahn. 2016. Human Pose Estimation from Video and IMUs. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 38, 8 (aug 2016), 1533–1547.Google Scholar

69. Timo von Marcard, Bodo Rosenhahn, Michael J Black, and Gerard Pons-Moll. 2017. Sparse inertial poser: Automatic 3D human pose estimation from sparse IMUs. In Computer Graphics Forum, Vol. 36. Wiley Online Library, 349–360. Google ScholarDigital Library

70. Jindong Wang, Yiqiang Chen, Shuji Hao, Xiaohui Peng, and Lisha Hu. 2017. Deep learning for sensor-based activity recognition: A survey. arXiv preprint arXiv:1707.03502 (2017).Google Scholar

71. Shih-En Wei, Varun Ramakrishna, Takeo Kanade, and Yaser Sheikh. 2016. Convolutional pose machines. In CVPR. 4724–4732.Google Scholar

72. Xiaolin Wei, Peizhao Zhang, and Jinxiang Chai. 2012. Accurate realtime full-body motion capture using a single depth camera. ACM Transactions on Graphics (TOG) 31, 6 (2012), 188. Google ScholarDigital Library

73. Xiaowei Zhou, Menglong Zhu, Spyridon Leonardos, Konstantinos G Derpanis, and Kostas Daniilidis. 2016. Sparseness meets deepness: 3D human pose estimation from monocular video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4966–4975.Google ScholarCross Ref

74. Michael Zollhöfer, Matthias Nießner, Shahram Izadi, Christoph Rehmann, Christopher Zach, Matthew Fisher, Chenglei Wu, Andrew Fitzgibbon, Charles Loop, Christian Theobalt, et al. 2014. Real-time non-rigid reconstruction using an RGB-D camera. ACM Transactions on Graphics (TOG) 33, 4 (2014), 156. Google ScholarDigital Library