“Color-Perception-Guided Display Power Reduction for Virtual Reality” by Duinkharjav, Chen, Tyagi, He, Zhu, et al. …

Title:

- Color-Perception-Guided Display Power Reduction for Virtual Reality

Session/Category Title:

- Perception in VR and AR

Abstract:

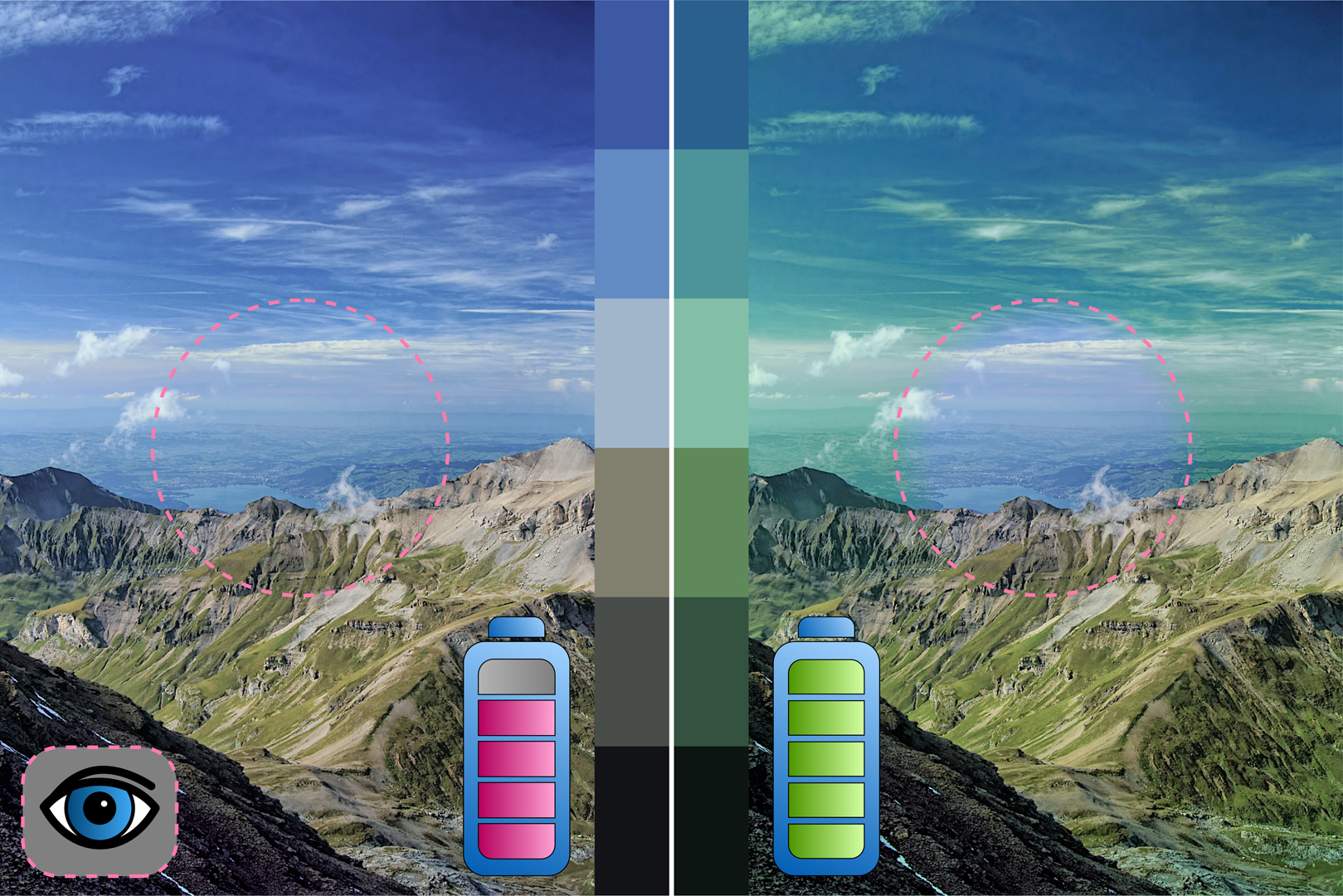

Battery life is an increasingly urgent challenge for today’s untethered VR and AR devices. However, the power efficiency of head-mounted displays is naturally at odds with growing computational requirements driven by higher resolution, refresh rate, and dynamic range, all of which reduce the sustained usage time of untethered AR/VR devices. For instance, the Oculus Quest 2 can sustain only 2 to 3 hours of operation on a full charge. Prior display power reduction techniques mostly target smartphone displays. Directly applying smartphone display power reduction techniques to AR/VR, however, degrades visual perception with noticeable artifacts. For instance, the “power-saving mode” on smartphones uniformly lowers pixel luminance across the display and, if directly applied to VR content, presents an overall darkened image to users.

Our key insight is that VR display power reduction must be cognizant of the gaze-contingent nature of high field-of-view VR displays. To that end, we present a gaze-contingent system that, without degrading luminance, minimizes display power consumption while preserving high visual fidelity when users actively view immersive video sequences. This is enabled by constructing 1) a gaze-contingent color discrimination model through psychophysical studies, and 2) a display power model (with respect to pixel color) through real-device measurements. Critically, due to the careful design decisions made in constructing the two models, our algorithm is cast as a constrained optimization problem with a closed-form solution, which can be implemented as a real-time, image-space shader. We evaluate our system using a series of psychophysical studies and large-scale analyses on natural images. Experiment results show that our system reduces display power by as much as 24% (14% on average) with little to no perceptual fidelity degradation.
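The abstract states that the two models turn display power minimization into a constrained optimization with a closed-form solution. The Python sketch below illustrates one problem of that shape under assumptions that are ours, not the paper's: pixel power is linear in linear-RGB intensity with made-up per-channel coefficients; the set of colors indistinguishable from the original at a given eccentricity is an axis-aligned ellipsoid in linear RGB whose size grows linearly with eccentricity (again with made-up constants); and luminance is held fixed using Rec. 709 weights. Minimizing a linear function over an ellipsoid intersected with a constant-luminance plane has an exact solution, so no iterative solver is needed. The names `pixel_power`, `discrimination_axes`, and `min_power_color` are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

# Rec. 709 luminance weights for *linear* (not gamma-encoded) RGB.
LUMA = np.array([0.2126, 0.7152, 0.0722])

# Hypothetical power model: made-up per-channel costs per unit of linear intensity
# plus a color-independent term. A real model would be fitted to panel measurements.
POWER_W = np.array([1.0, 1.0, 1.6])
POWER_STATIC = 0.2


def pixel_power(rgb: np.ndarray) -> float:
    """Power of one pixel under the hypothetical linear model (arbitrary units)."""
    return POWER_STATIC + float(POWER_W @ rgb)


def discrimination_axes(ecc_deg: float) -> np.ndarray:
    """Hypothetical shape matrix L of the indistinguishable region {x0 + L u : |u| <= 1}.

    Axis-aligned in linear RGB; semi-axes grow linearly with eccentricity (degrees)
    to mimic weaker peripheral color discrimination. Constants are illustrative only.
    """
    base = np.array([0.01, 0.01, 0.02])
    return np.diag(base * (1.0 + 0.1 * ecc_deg))


def min_power_color(x0: np.ndarray, L: np.ndarray) -> np.ndarray:
    """Closed-form solution of  min POWER_W.x  s.t.  x = x0 + L u, |u| <= 1, LUMA.x = LUMA.x0.

    The result is then pulled back toward x0 if needed so it stays inside [0, 1]^3.
    """
    g = L.T @ POWER_W                     # objective gradient in u-space
    a = L.T @ LUMA                        # luminance-constraint normal in u-space
    g_perp = g - (g @ a) / (a @ a) * a    # drop the part that would change luminance
    norm = np.linalg.norm(g_perp)
    if norm < 1e-12:
        return x0.copy()                  # no luminance-preserving move lowers power
    step = L @ (-g_perp / norm)           # optimal displacement, on the ellipsoid boundary

    # Largest t in [0, 1] keeping x0 + t * step in gamut. Scaling the step keeps
    # luminance fixed and never increases power, but may be suboptimal near the gamut edge.
    t = 1.0
    for xi, si in zip(x0, step):
        if si > 0:
            t = min(t, (1.0 - xi) / si)
        elif si < 0:
            t = min(t, xi / -si)
    return x0 + t * step


if __name__ == "__main__":
    x0 = np.array([0.5, 0.4, 0.6])        # linear RGB of one example pixel
    for ecc in (0.0, 20.0):
        x = min_power_color(x0, discrimination_axes(ecc))
        saving = 1.0 - pixel_power(x) / pixel_power(x0)
        print(f"ecc={ecc:5.1f} deg  rgb={np.round(x, 4)}  "
              f"luminance change={LUMA @ (x - x0):+.1e}  saving={saving:.2%}")
```

Because the minimizer is a per-pixel formula, it can be evaluated independently for every pixel given that pixel's eccentricity, which is what makes a single image-space shader pass plausible. In this sketch the larger ellipsoid at 20 degrees yields a larger saving than at the fovea, mirroring the gaze-contingent idea; the actual magnitudes depend entirely on the made-up constants.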
