“CLIPasso: semantically-aware object sketching” by Vinker, Pajouheshgar, Bo, Bachmann, Bermano, et al. …

Title:

- CLIPasso: semantically-aware object sketching

Abstract:

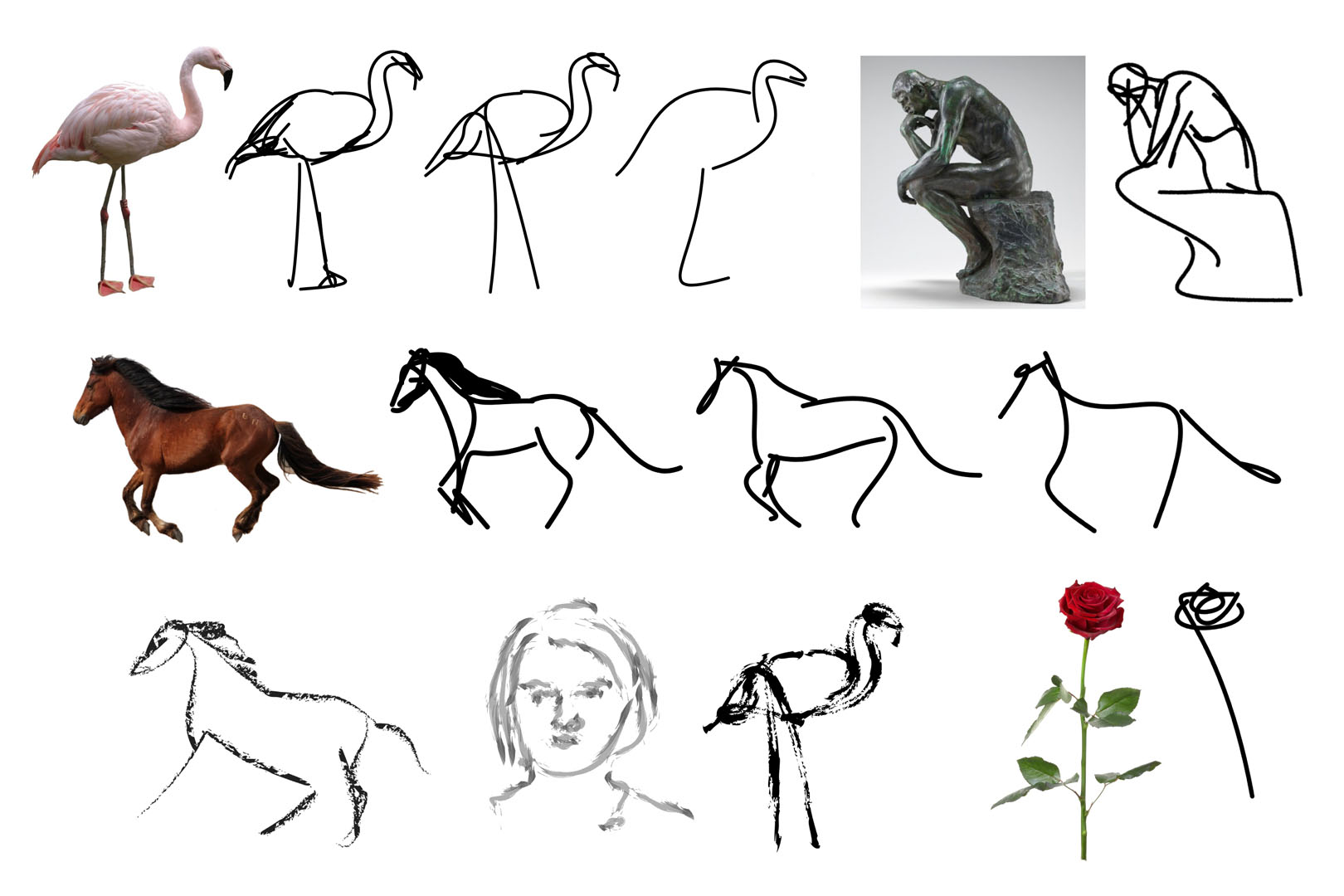

Abstraction is at the heart of sketching due to the simple and minimal nature of line drawings. Abstraction entails identifying the essential visual properties of an object or scene, which requires semantic understanding and prior knowledge of high-level concepts. Abstract depictions are therefore challenging for artists, and even more so for machines. We present CLIPasso, an object sketching method that can achieve different levels of abstraction, guided by geometric and semantic simplifications. While sketch generation methods often rely on explicit sketch datasets for training, we utilize the remarkable ability of CLIP (Contrastive Language-Image Pre-training) to distill semantic concepts from sketches and images alike. We define a sketch as a set of Bézier curves and use a differentiable rasterizer to optimize the parameters of the curves directly with respect to a CLIP-based perceptual loss. The abstraction degree is controlled by varying the number of strokes. The generated sketches demonstrate multiple levels of abstraction while maintaining recognizability, underlying structure, and essential visual components of the subject drawn.
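To make the optimization setup concrete, here is a minimal, self-contained sketch under loudly stated assumptions. It is not the CLIPasso implementation. Each stroke is assumed to be a cubic Bézier curve with four 2-D control points, so a sketch with `n` strokes has `8n` free parameters, and those control points are updated directly by gradient descent. The CLIP-based perceptual loss and the differentiable rasterizer are both swapped out for a hypothetical toy loss: the mean squared distance between points sampled on each stroke and points on a fixed circle (`target_point`). Because a Bézier point is linear in its control points, that loss has a simple analytic gradient. All function names (`init_sketch`, `target_point`, `loss_and_grad`, `optimize`) are illustrative, and plain gradient descent is used only for simplicity.

```python
import math
import random

# Curve parameters t in [0, 1] at which every stroke is sampled.
SAMPLES = [i / 15 for i in range(16)]


def bernstein3(t):
    """Cubic Bernstein basis weights (b0, b1, b2, b3) at t in [0, 1]."""
    s = 1.0 - t
    return (s ** 3, 3 * s * s * t, 3 * s * t * t, t ** 3)


def bezier_point(ctrl, t):
    """Evaluate a cubic Bezier stroke with four [x, y] control points at t."""
    w = bernstein3(t)
    x = sum(wk * p[0] for wk, p in zip(w, ctrl))
    y = sum(wk * p[1] for wk, p in zip(w, ctrl))
    return x, y


def init_sketch(num_strokes, seed=0):
    """ASSUMPTION: a sketch is a list of num_strokes strokes, each holding
    four mutable [x, y] control points initialised uniformly in [0, 1]^2."""
    rng = random.Random(seed)
    return [[[rng.random(), rng.random()] for _ in range(4)]
            for _ in range(num_strokes)]


def num_parameters(sketch):
    """Number of optimised scalars: 2 coordinates per control point."""
    return sum(2 * len(ctrl) for ctrl in sketch)


def target_point(i, n, t):
    """HYPOTHETICAL stand-in target: stroke i of n should trace the i-th
    of n equal arcs of a circle (centre (0.5, 0.5), radius 0.4)."""
    theta = 2 * math.pi * (i + t) / n
    return 0.5 + 0.4 * math.cos(theta), 0.5 + 0.4 * math.sin(theta)


def loss_and_grad(sketch):
    """Toy loss (NOT a CLIP loss, no rasterizer): per stroke, the mean
    squared distance between sampled curve points and target points,
    summed over strokes. A Bezier point is linear in its control points
    (dB/dP_k = b_k(t)), so the gradient is written out analytically."""
    n = len(sketch)
    m = len(SAMPLES)
    loss = 0.0
    grad = [[[0.0, 0.0] for _ in ctrl] for ctrl in sketch]
    for i, ctrl in enumerate(sketch):
        for t in SAMPLES:
            px, py = bezier_point(ctrl, t)
            tx, ty = target_point(i, n, t)
            dx, dy = px - tx, py - ty
            loss += (dx * dx + dy * dy) / m
            for k, wk in enumerate(bernstein3(t)):
                grad[i][k][0] += 2 * dx * wk / m
                grad[i][k][1] += 2 * dy * wk / m
    return loss, grad


def optimize(sketch, steps=400, lr=1.5):
    """Plain gradient descent directly on the control points, in place.
    Returns the final loss."""
    for _ in range(steps):
        _, grad = loss_and_grad(sketch)
        for ctrl, g in zip(sketch, grad):
            for p, gp in zip(ctrl, g):
                p[0] -= lr * gp[0]
                p[1] -= lr * gp[1]
    return loss_and_grad(sketch)[0]


if __name__ == "__main__":
    for strokes in (1, 2, 4):
        sketch = init_sketch(strokes)
        start, _ = loss_and_grad(sketch)
        end = optimize(sketch)
        print(f"{strokes} strokes, {num_parameters(sketch)} params: "
              f"loss {start:.4f} -> {end:.6f}")
```

In this toy, a single stroke cannot follow the whole circle, so its optimized loss stays clearly higher than with four strokes. This loosely mirrors how the stroke count acts as the abstraction knob described in the abstract. In the actual method, the loss would instead compare a rasterized sketch with the input image through CLIP.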
