“Camera keyframing with style and control” by Jiang, Christie, Wang, Liu, Wang, et al. …

Title:

- Camera keyframing with style and control

Session/Category Title:

- Physically-based Simulation and Motion Control

Abstract:

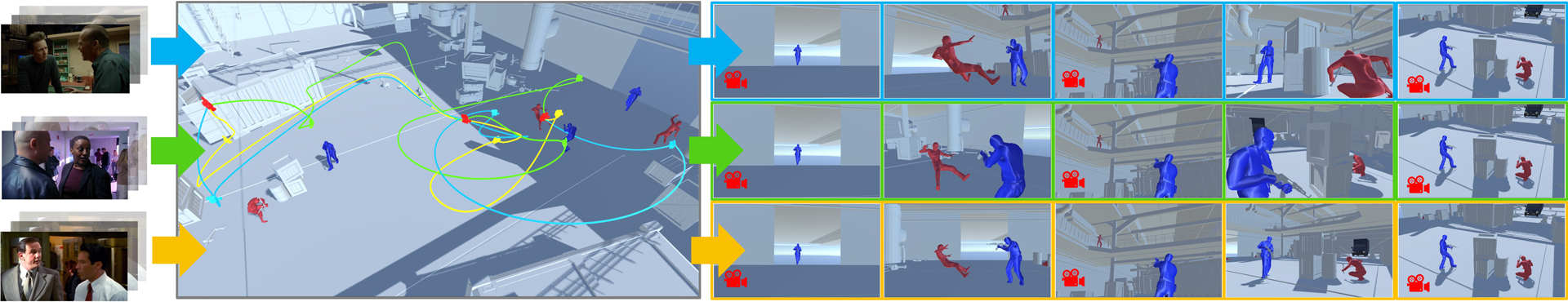

We present a novel technique that enables 3D artists to synthesize camera motions in virtual environments that follow a camera style while enforcing user-designed camera keyframes as constraints along the sequence. To solve this constrained motion in-betweening problem, we design and train a camera motion generator on a collection of temporal cinematic features (camera and actor motions), conditioned on target keyframes. We further condition the generator on a style code that controls how the interpolation between keyframes is performed. Style codes are produced by a second network, trained to encode different camera behaviors in a compact latent space: the camera style space. Camera behaviors are defined as temporal correlations between actor features and camera motions, and can be extracted from real or synthetic film clips. We also extend the system with fine-grained control of camera speed and direction via a hidden state mapping technique. We evaluate our method on two aspects: i) its capacity to synthesize style-aware camera trajectories with user-defined keyframes; and ii) its capacity to ensure that in-between motions still comply with the reference camera style while satisfying the keyframe constraints. Our system is the first style-aware keyframe in-betweening technique for camera control that balances style-driven automation with precise, interactive control of keyframes.
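The interface described above, in which a generator fills the frames between keyframes while being conditioned on the next keyframe and a style code, can be illustrated with a toy autoregressive loop. This is a minimal sketch under loud assumptions, not the authors' architecture. `Keyframe`, `toy_generator` and `rollout` are hypothetical names. The learned network is replaced by a hand-written rule, the "style code" is simply a per-axis offset vector, and only camera position is modelled (no orientation, no actor features). The sketch shows one idea: the style shapes the in-between frames, while each keyframe is still reached exactly.

```python
from dataclasses import dataclass
from typing import List, Sequence

Vec = List[float]


@dataclass
class Keyframe:
    frame: int     # frame index at which the camera must reach `position`
    position: Vec  # camera position only; orientation is omitted in this toy


def toy_generator(current: Vec, target: Vec, frames_left: int,
                  style: Sequence[float]) -> Vec:
    """Hypothetical stand-in for a learned, keyframe- and style-conditioned generator."""
    if frames_left == 1:
        # Last in-between step: land exactly on the keyframe (hard constraint).
        return list(target)
    # Style offset that fades out as the keyframe approaches.
    fade = (frames_left - 1) / frames_left
    # Cover an even share of the remaining distance, then add the styled offset.
    return [c + (t - c) / frames_left + s * fade
            for c, t, s in zip(current, target, style)]


def rollout(start: Vec, keyframes: List[Keyframe],
            style: Sequence[float]) -> List[Vec]:
    """Generate one camera position per frame, from frame 0 to the last keyframe."""
    frames = [list(start)]
    current, frame = list(start), 0
    for kf in sorted(keyframes, key=lambda k: k.frame):
        while frame < kf.frame:
            # Condition each step on the upcoming keyframe and the frames remaining.
            current = toy_generator(current, kf.position, kf.frame - frame, style)
            frames.append(current)
            frame += 1
    return frames


keys = [Keyframe(10, [1.0, 2.0, 3.0]), Keyframe(20, [0.0, 0.0, 5.0])]
plain = rollout([0.0, 0.0, 0.0], keys, style=[0.0, 0.0, 0.0])
styled = rollout([0.0, 0.0, 0.0], keys, style=[0.3, -0.2, 0.0])
print(len(plain), plain[10], styled[10])  # 21 [1.0, 2.0, 3.0] [1.0, 2.0, 3.0]
```

With a zero style vector the toy reduces to linear interpolation between keyframes. A non-zero style vector changes the in-between frames but not the keyframes themselves, which is the balance between style-driven automation and keyframe control that the abstract describes.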