“Burst photography for high dynamic range and low-light imaging on mobile cameras” by Hasinoff, Sharlet, Geiss, Adams, Barron, et al. …

Session/Category Title:

- Computational Photography

Abstract:

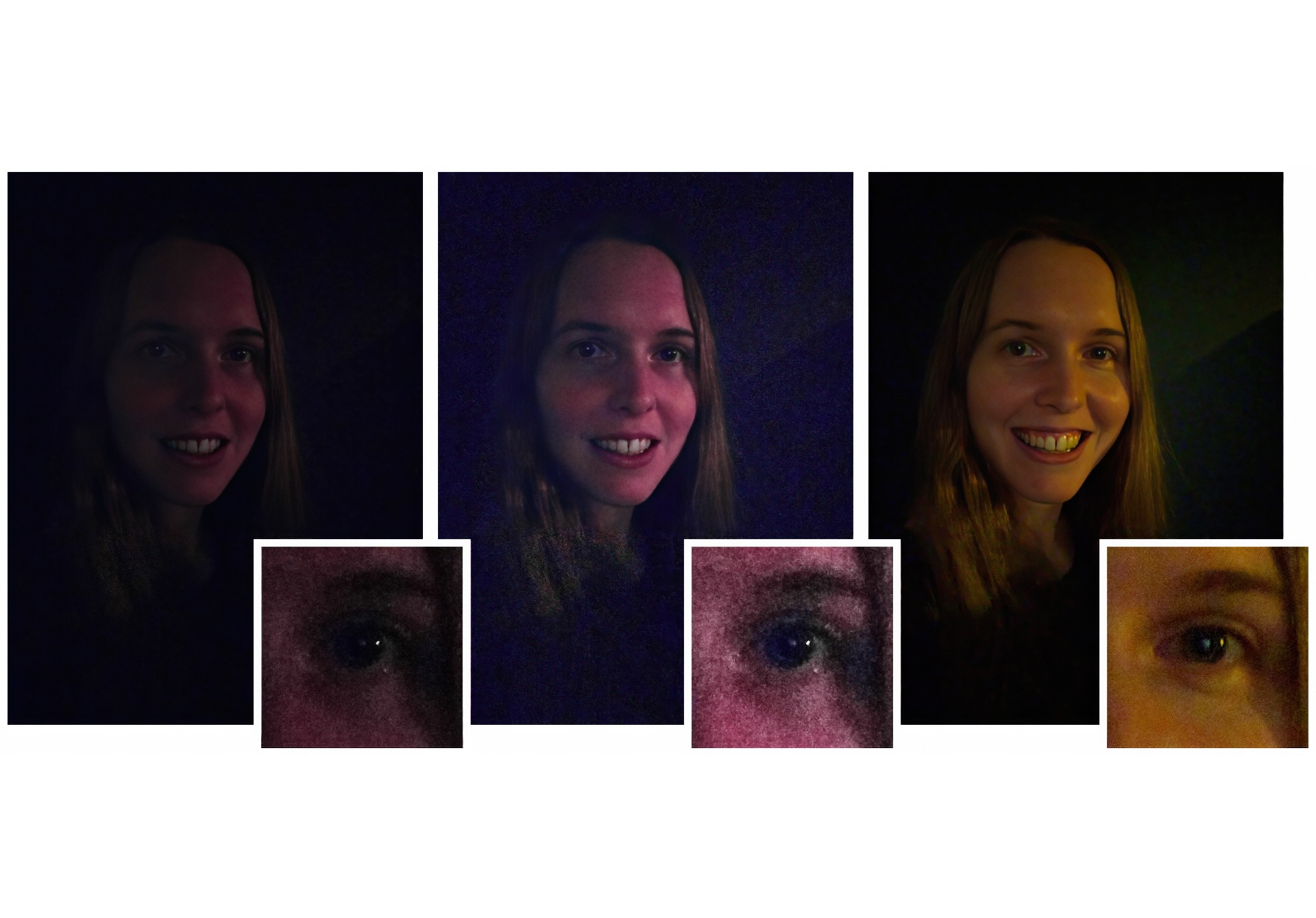

Cell phone cameras have small apertures, which limits the number of photons they can gather, leading to noisy images in low light. They also have small sensor pixels, which limits the number of electrons each pixel can store, leading to limited dynamic range. We describe a computational photography pipeline that captures, aligns, and merges a burst of frames to reduce noise and increase dynamic range. Our system has several key features that help make it robust and efficient. First, we do not use bracketed exposures. Instead, we capture frames of constant exposure, which makes alignment more robust, and we set this exposure low enough to avoid blowing out highlights. The resulting merged image has clean shadows and high bit depth, allowing us to apply standard HDR tone mapping methods. Second, we begin from Bayer raw frames rather than the demosaicked RGB (or YUV) frames produced by hardware Image Signal Processors (ISPs) common on mobile platforms. This gives us more bits per pixel and allows us to circumvent the ISP’s unwanted tone mapping and spatial denoising. Third, we use a novel FFT-based alignment algorithm and a hybrid 2D/3D Wiener filter to denoise and merge the frames in a burst. Our implementation is built atop Android’s Camera2 API, which provides per-frame camera control and access to raw imagery, and is written in the Halide domain-specific language (DSL). It runs in 4 seconds on device (for a 12 Mpix image), requires no user intervention, and ships on several mass-produced cell phones.
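The merge idea in the abstract can be illustrated with a small sketch. The abstract names a hybrid 2D/3D Wiener filter applied to aligned Bayer raw frames but gives no formulas, so the code below is not that filter: it is a simplified per-sample analogue that assumes the frames are already aligned, assumes Gaussian noise of known variance, and shrinks each alternate frame toward the reference in proportion to how far their difference exceeds the noise level. The function names (`simulate_burst`, `robust_merge`, `rmse`) and the tuning constant `c` are hypothetical and chosen only for illustration.

```python
import math
import random


def simulate_burst(signal, n_frames, sigma, seed=0):
    """Return n_frames noisy copies of `signal` (constant exposure, Gaussian noise assumed)."""
    rng = random.Random(seed)
    return [[s + rng.gauss(0.0, sigma) for s in signal] for _ in range(n_frames)]


def robust_merge(reference, alternates, noise_variance, c=8.0):
    """Merge aligned alternate frames into the reference frame.

    For each sample, an alternate frame is blended back toward the reference
    with weight a = d^2 / (d^2 + c * noise_variance), where d is the
    reference-minus-alternate difference. Small, noise-like differences give
    a close to 0 (the alternate is averaged in); large differences, such as
    those caused by motion or misalignment, give a close to 1 (the alternate
    is mostly ignored).
    """
    n = len(alternates) + 1
    merged = []
    for i, ref in enumerate(reference):
        total = ref
        for alt in alternates:
            d = ref - alt[i]
            a = (d * d) / (d * d + c * noise_variance)
            total += alt[i] + a * d
        merged.append(total / n)
    return merged


def rmse(estimate, truth):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


if __name__ == "__main__":
    truth = [100.0] * 2000
    frames = simulate_burst(truth, n_frames=8, sigma=5.0, seed=0)
    merged = robust_merge(frames[0], frames[1:], noise_variance=25.0)
    print("single-frame RMSE:", rmse(frames[0], truth))
    print("merged RMSE:      ", rmse(merged, truth))
```

On a static scene the merged result should show lower error than any single frame, while a sample that differs sharply from the reference (for example a moving object) contributes very little. This is the trade-off a robust merge aims for: noise reduction where frames agree, and fallback to the reference where they do not.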
