“BiDi screen: a thin, depth-sensing LCD for 3D interaction using light fields”

Conference:

Type(s):

Title:

- BiDi screen: a thin, depth-sensing LCD for 3D interaction using light fields

Session/Category Title:

- 3D is fun

Presenter(s)/Author(s):

Moderator(s):

Abstract:

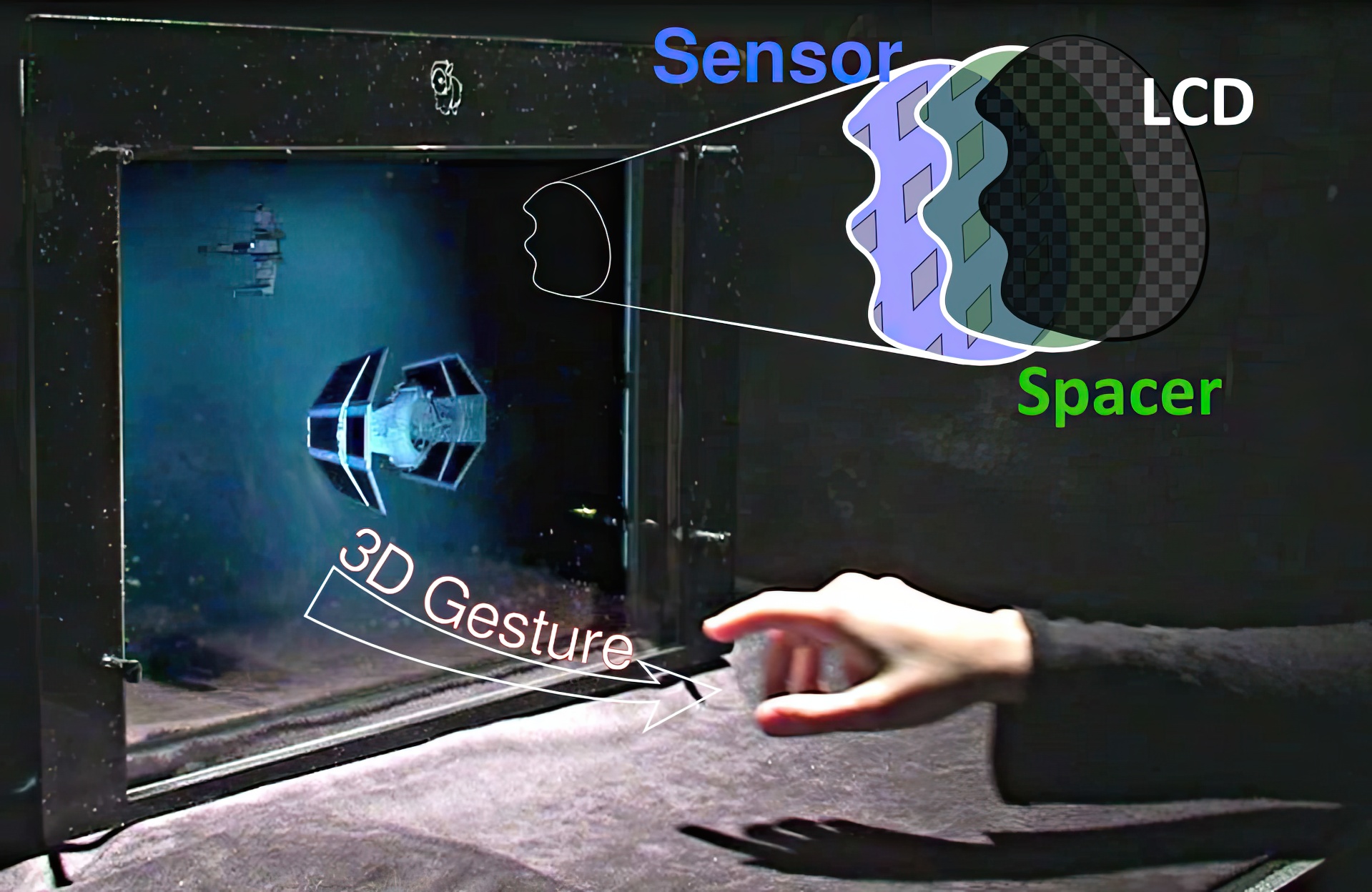

We transform an LCD into a display that supports both 2D multi-touch and unencumbered 3D gestures. Our BiDirectional (BiDi) screen, capable of both image capture and display, is inspired by emerging LCDs that use embedded optical sensors to detect multiple points of contact. Our key contribution is to exploit the spatial light modulation capability of LCDs to allow lensless imaging without interfering with display functionality. We switch between a display mode showing traditional graphics and a capture mode in which the backlight is disabled and the LCD displays a pinhole array or an equivalent tiled-broadband code. A large-format image sensor is placed slightly behind the liquid crystal layer. Together, the image sensor and LCD form a mask-based light field camera, capturing an array of images equivalent to that produced by a camera array spanning the display surface. The recovered multi-view orthographic imagery is used to passively estimate the depth of scene points. Two motivating applications are described: a hybrid touch-plus-gesture interaction and a light-gun mode for interacting with external light-emitting widgets. We show a working prototype that simulates the image sensor with a camera and diffuser, allowing interaction up to 50 cm in front of a modified 20.1-inch LCD.
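To make the pinhole-array capture mode more concrete, the following is a minimal sketch of how a sensor image recorded behind an ideal pinhole array could be rearranged into multi-view orthographic images. It is an illustration under stated assumptions, not the authors' implementation: the function name `demultiplex_views` and the `tile` parameter (sensor pixels under each pinhole along one axis) are hypothetical, the mask and sensor are assumed to be perfectly aligned, and the tiled-broadband code mentioned in the abstract would need a different decoding step that is not shown here.

```python
def demultiplex_views(sensor, tile):
    """Rearrange a pinhole-array sensor image into tile x tile views.

    sensor: 2D list (rows x cols) of intensities; both dimensions must be
            multiples of `tile`.
    tile:   sensor pixels under each pinhole along one axis (hypothetical
            parameter for this sketch; assumes ideal mask alignment).
    Returns views[v][u], each a 2D list holding one pixel per pinhole.
    """
    rows, cols = len(sensor), len(sensor[0])
    if rows % tile or cols % tile:
        raise ValueError("sensor size must be a multiple of the tile size")

    views = []
    for v in range(tile):          # vertical offset inside each tile
        row_of_views = []
        for u in range(tile):      # horizontal offset inside each tile
            # Pixel (v, u) under every pinhole sees the same ray direction,
            # so gathering it across all tiles gives one orthographic view.
            view = [[sensor[r][c] for c in range(u, cols, tile)]
                    for r in range(v, rows, tile)]
            row_of_views.append(view)
        views.append(row_of_views)
    return views


# Toy 4x4 sensor with 2x2 pixels under each pinhole. Each pixel is encoded
# as 10*row + column so the rearrangement is easy to follow.
sensor = [[10 * r + c for c in range(4)] for r in range(4)]
views = demultiplex_views(sensor, tile=2)
```

In this toy case each of the four views has one pixel per pinhole (2 x 2). Comparing how a scene point shifts between such views is the kind of cue a depth estimator could use, although the abstract does not specify the estimator and none is implemented here.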
