“Authoring directed gaze for full-body motion capture” by Pejsa, Rakita, Mutlu and Gleicher

Title:

- Authoring directed gaze for full-body motion capture

Session/Category Title: Human Motion

Abstract:

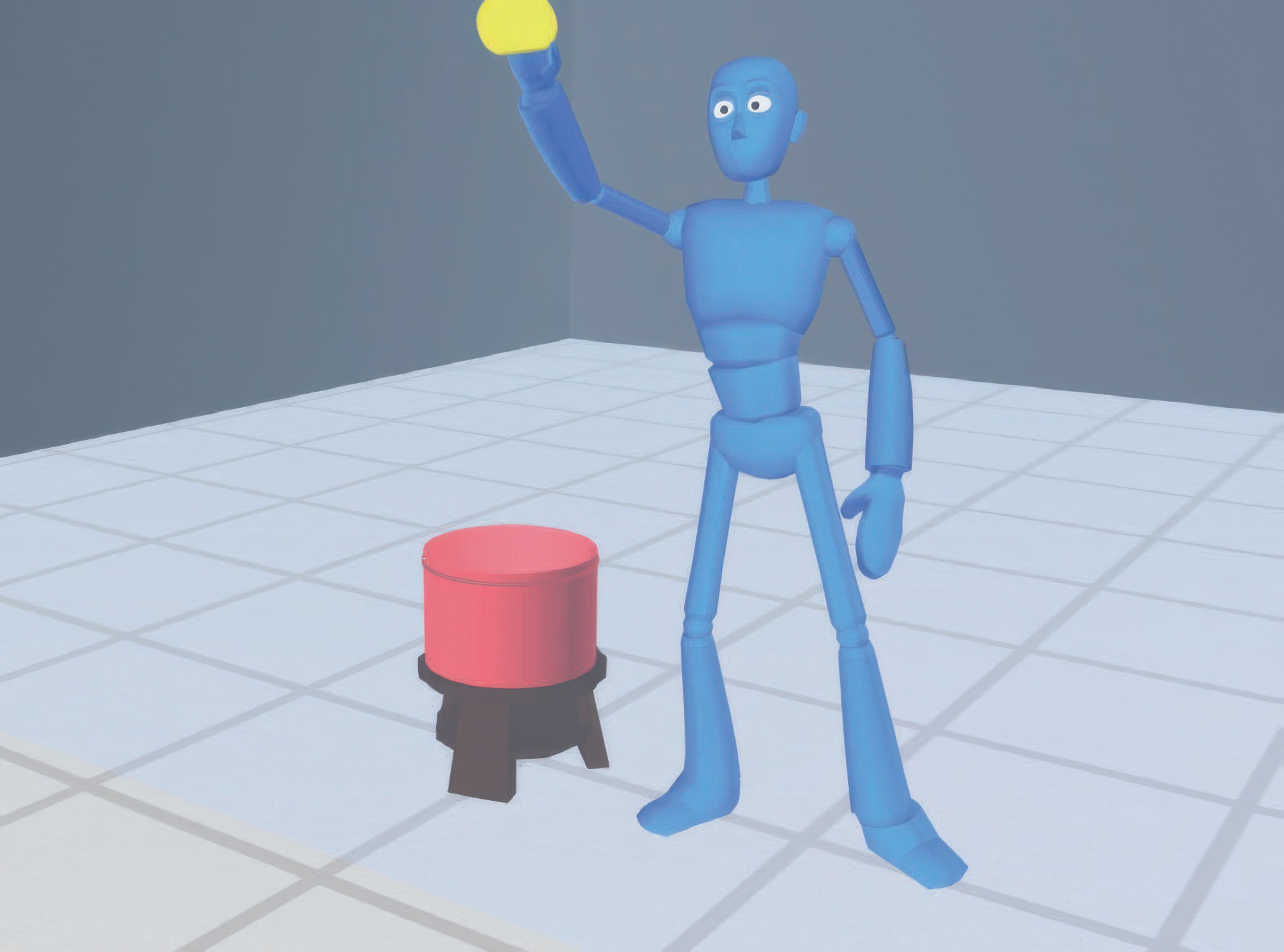

We present an approach for adding directed gaze movements to characters animated using full-body motion capture. Our approach provides a comprehensive authoring solution that automatically infers plausible directed gaze from the captured body motion, provides convenient controls for manual editing, and adds synthetic gaze movements onto the original motion. The foundation of the approach is an abstract representation of gaze behavior as a sequence of gaze shifts and fixations toward targets in the scene. We present methods for automatic inference of this representation by analyzing the head and torso kinematics and scene features. We introduce tools for convenient editing of the gaze sequence and target layout that allow an animator to adjust the gaze behavior without worrying about the details of pose and timing. A synthesis component translates the gaze sequence into coordinated movements of the eyes, head, and torso, and blends these with the original body motion. We evaluate the effectiveness of our inference methods, the efficiency of the authoring process, and the quality of the resulting animation.
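The abstract's core abstraction is a gaze sequence: shifts and fixations toward named targets in the scene. The Python below is a minimal illustrative sketch of such a data structure. It is not the authors' implementation. The class names `GazeInstance` and `GazeSequence`, the frame-based fields, the non-overlap rule, and the `move_target` helper are all hypothetical. The sketch shows why editing the target layout can be decoupled from timing: moving a target changes where the character looks, not when the shift happens.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]  # world-space position (hypothetical convention)


@dataclass(frozen=True)
class GazeInstance:
    """Hypothetical record: one gaze shift toward a target, then a fixation on it."""
    target: str          # name of a target in the scene layout
    shift_start: int     # frame at which the gaze shift begins
    fixation_start: int  # frame at which the shift lands and the fixation begins
    fixation_end: int    # last frame of the fixation


class GazeSequence:
    """Ordered, non-overlapping gaze instances plus a named target layout."""

    def __init__(self, targets: Dict[str, Vec3], instances: List[GazeInstance]):
        self.targets = dict(targets)
        self.instances = sorted(instances, key=lambda g: g.shift_start)
        prev_end = None
        for g in self.instances:
            if g.target not in self.targets:
                raise ValueError(f"unknown target {g.target!r}")
            if not g.shift_start <= g.fixation_start <= g.fixation_end:
                raise ValueError("expected shift_start <= fixation_start <= fixation_end")
            if prev_end is not None and g.shift_start < prev_end:
                raise ValueError("gaze instances overlap in time")
            prev_end = g.fixation_end
        # Start frames kept sorted so lookups can use binary search.
        self._starts = [g.shift_start for g in self.instances]

    def active(self, frame: int) -> Optional[GazeInstance]:
        """Return the instance whose shift or fixation covers `frame`, if any."""
        i = bisect_right(self._starts, frame) - 1
        if i >= 0 and frame <= self.instances[i].fixation_end:
            return self.instances[i]
        return None

    def target_position(self, frame: int) -> Optional[Vec3]:
        """Position the character should look toward at `frame`, if any."""
        g = self.active(frame)
        return None if g is None else self.targets[g.target]

    def move_target(self, name: str, position: Vec3) -> None:
        """Layout edit: changes where gaze lands and leaves shift timing untouched."""
        if name not in self.targets:
            raise KeyError(name)
        self.targets[name] = position


seq = GazeSequence(
    targets={"table": (0.0, 0.8, 1.0), "door": (2.0, 1.6, -1.0)},
    instances=[GazeInstance("table", 0, 6, 40), GazeInstance("door", 45, 55, 90)],
)
print(seq.active(20).target)     # table
print(seq.active(42))            # None (no gaze instance covers frame 42)
seq.move_target("door", (1.5, 1.6, -2.0))
print(seq.target_position(60))   # (1.5, 1.6, -2.0)
```

Returning `None` between instances is a simplifying assumption of this sketch. A fuller system might instead hold the previous fixation or defer to the captured head and torso motion for those frames.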
