“Analogist: Out-of-the-box Visual In-context Learning With Image Diffusion Model”

Conference:

Type(s):

Title:

- Analogist: Out-of-the-box Visual In-context Learning With Image Diffusion Model

Presenter(s)/Author(s):

Abstract:

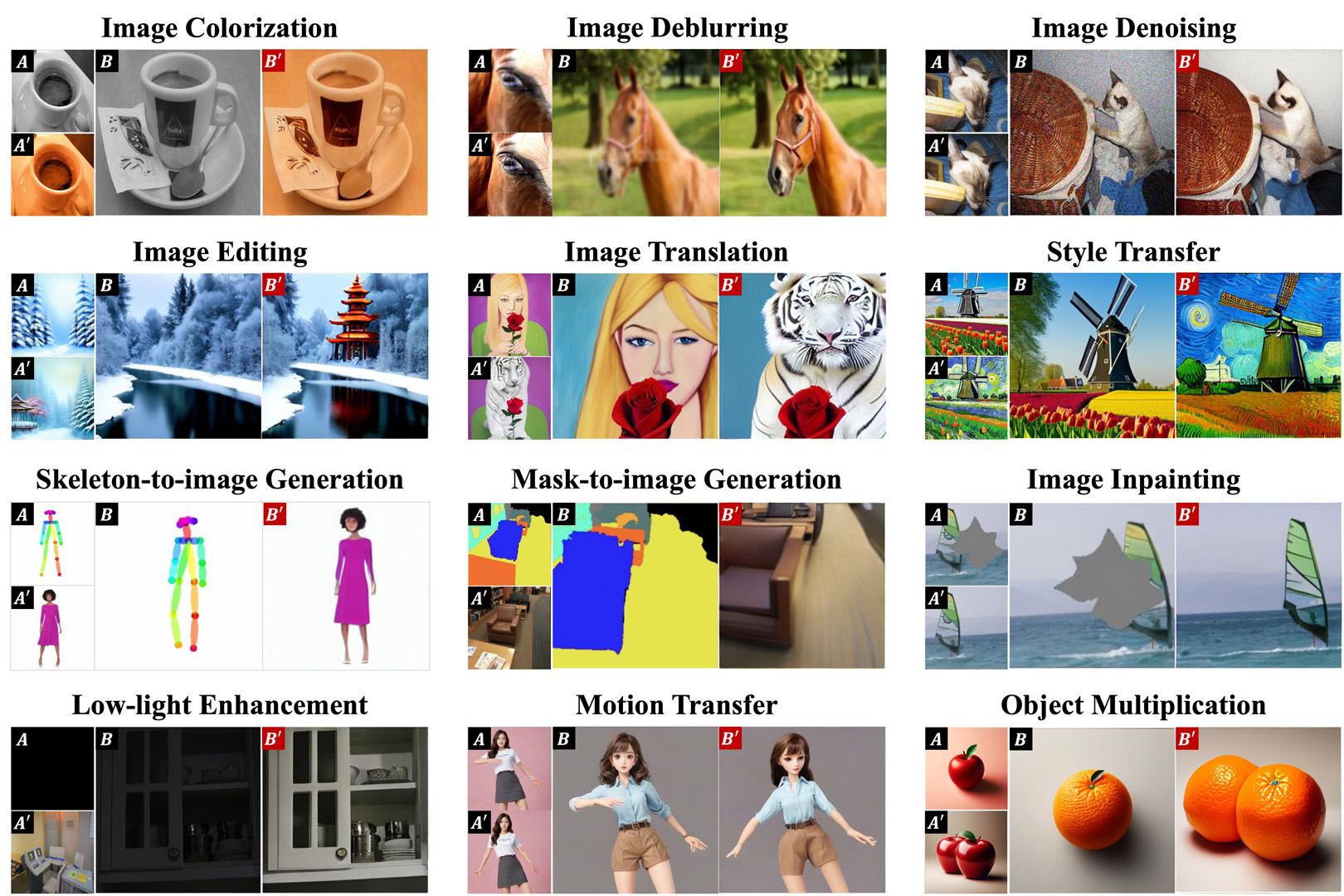

Analogist is a novel visual In-context Learning approach combining visual and textual prompts with a diffusion model. It introduces self-attention cloning and cross-attention masking to enhance analogy accuracy, offering a flexible, out-of-the-box solution that outperforms existing methods without needing fine-tuning or optimization.

References:

[1]

Yutong Bai, Xinyang Geng, Karttikeya Mangalam, Amir Bar, Alan Yuille, Trevor Darrell, Jitendra Malik, and Alexei A Efros. 2023. Sequential Modeling Enables Scalable Learning for Large Vision Models. arXiv preprint arXiv:2312.00785 (2023).

[2]

Amir Bar, Yossi Gandelsman, Trevor Darrell, Amir Globerson, and Alexei Efros. 2022. Visual prompting via image inpainting. Advances in Neural Information Processing Systems 35 (2022), 25005–25017.

[3]

Ahmet Canberk Baykal, Abdul Basit Anees, Duygu Ceylan, Erkut Erdem, Aykut Erdem, and Deniz Yuret. 2023. CLIP-guided StyleGAN Inversion for Text-driven Real Image Editing. ACM Transactions on Graphics 42, 5 (2023), 1–18.

[4]

James Betker, Gabriel Goh, Li Jing, Tim Brooks, Jianfeng Wang, Linjie Li, Long Ouyang, Juntang Zhuang, Joyce Lee, Yufei Guo, et al. 2023. Improving image generation with better captions. Computer Science. https://cdn.openai.com/papers/dall-e-3.pdf 2 (2023), 3.

[5]

Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2023. Instructpix2pix: Learning to follow image editing instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18392–18402.

[6]

Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. Advances in Neural Information Processing Systems 33 (2020), 1877–1901.

[7]

Mingdeng Cao, Xintao Wang, Zhongang Qi, Ying Shan, Xiaohu Qie, and Yinqiang Zheng. 2023. Masactrl: Tuning-free mutual self-attention control for consistent image synthesis and editing. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 22560–22570.

[8]

Di Chang, Yichun Shi, Quankai Gao, Jessica Fu, Hongyi Xu, Guoxian Song, Qing Yan, Xiao Yang, and Mohammad Soleymani. 2023. MagicDance: Realistic Human Dance Video Generation with Motions & Facial Expressions Transfer. arXiv preprint arXiv:2311.12052 (2023).

[9]

Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. 2020. DeepFace-Drawing: Deep generation of face images from sketches. ACM Transactions on Graphics 39, 4 (2020), 72–1.

[10]

Wei Chen, Wang Wenjing, Yang Wenhan, and Liu Jiaying. 2018. Deep Retinex Decomposition for Low-Light Enhancement. In British Machine Vision Conference. British Machine Vision Association.

[11]

Angela Dai, Angel X Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nie?ner. 2017. Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5828–5839.

[12]

Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition. Ieee, 248–255.

[13]

Olga Diamanti, Connelly Barnes, Sylvain Paris, Eli Shechtman, and Olga Sorkine-Hornung. 2015. Synthesis of Complex Image Appearance from Limited Exemplars. ACM Transactions on Graphics (Mar 2015), 1–14.

[14]

Qingxiu Dong, Lei Li, Damai Dai, Ce Zheng, Zhiyong Wu, Baobao Chang, Xu Sun, Jingjing Xu, and Zhifang Sui. 2022. A survey for in-context learning. arXiv preprint arXiv:2301.00234 (2022).

[15]

Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. 2020. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

[16]

Zheng Gu, Chuanqi Dong, Jing Huo, Wenbin Li, and Yang Gao. 2021. CariMe: Unpaired caricature generation with multiple exaggerations. IEEE Transactions on Multimedia 24 (2021), 2673–2686.

[17]

Jiabang He, Lei Wang, Yi Hu, Ning Liu, Hui Liu, Xing Xu, and Heng Tao Shen. 2023. ICL-D3IE: In-Context Learning with Diverse Demonstrations Updating for Document Information Extraction. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 19485–19494.

[18]

Aaron Hertzmann, Charles E. Jacobs, Nuria Oliver, Brian Curless, and David H. Salesin. 2001. Image analogies. In Proceedings of the 28th annual conference on Computer graphics and interactive techniques.

[19]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems 33 (2020), 6840–6851.

[20]

Ond?ej Jamri?ka, ??rka Sochorov?, Ond?ej Texler, Michal Luk??, Jakub Fi?er, Jingwan Lu, Eli Shechtman, and Daniel S?kora. 2019. Stylizing video by example. ACM Transactions on Graphics (Aug 2019), 1–11.

[21]

Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In International Conference on Machine Learning. PMLR, 12888–12900.

[22]

Jing Liao, Yuan Yao, Lu Yuan, Gang Hua, and Sing Bing Kang. 2017. Visual atribute transfer through deep image analogy. ACM Transactions on Graphics 36, 4 (2017), 120.

[23]

Ivona Najdenkoska, Animesh Sinha, Abhimanyu Dubey, Dhruv Mahajan, Vignesh Ramanathan, and Filip Radenovic. 2023. Context Diffusion: In-Context Aware Image Generation. arXiv preprint arXiv:2312.03584 (2023).

[24]

Thao Nguyen, Yuheng Li, Utkarsh Ojha, and Yong Jae Lee. 2023. Visual Instruction Inversion: Image Editing via Image Prompting. In Thirty-seventh Conference on Neural Information Processing Systems. https://openreview.net/forum?id=l9BsCh8ikK

[25]

Gaurav Parmar, Krishna Kumar Singh, Richard Zhang, Yijun Li, Jingwan Lu, and Jun-Yan Zhu. 2023. Zero-shot image-to-image translation. In ACM SIGGRAPH 2023 Conference Proceedings. 1–11.

[26]

Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. 2019. Pytorch: An imperative style, high-performance deep learning library. Advances in Neural Information Processing Systems 32 (2019).

[27]

Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2085–2094.

[28]

Federico Perazzi, Jordi Pont-Tuset, Brian McWilliams, Luc Van Gool, Markus Gross, and Alexander Sorkine-Hornung. 2016. A benchmark dataset and evaluation methodology for video object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 724–732.

[29]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning. PMLR, 8748–8763.

[30]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10684–10695.

[31]

Ad?la ?ubrtov?, Michal Luk??, Jan ?ech, David Futschik, Eli Shechtman, and Daniel S?kora. 2023. Diffusion Image Analogies. In ACM SIGGRAPH 2023 Conference Proceedings. 1–10.

[32]

Yasheng SUN, Yifan Yang, Houwen Peng, Yifei Shen, Yuqing Yang, Han Hu, Lili Qiu, and Hideki Koike. 2023. ImageBrush: Learning Visual In-Context Instructions for Exemplar-Based Image Manipulation. In Thirty-seventh Conference on Neural Information Processing Systems. https://openreview.net/forum?id=EmOIP3t9nk

[33]

Alex Trevithick, Matthew Chan, Towaki Takikawa, Umar Iqbal, Shalini De Mello, Manmohan Chandraker, Ravi Ramamoorthi, and Koki Nagano. 2024. What You See is What You GAN: Rendering Every Pixel for High-Fidelity Geometry in 3D GANs. arXiv preprint arXiv:2401.02411 (2024).

[34]

Xinlong Wang, Wen Wang, Yue Cao, Chunhua Shen, and Tiejun Huang. 2023b. Images speak in images: A generalist painter for in-context visual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6830–6839.

[35]

Xinlong Wang, Xiaosong Zhang, Yue Cao, Wen Wang, Chunhua Shen, and Tiejun Huang. 2023c. SegGPT: Towards Segmenting Everything in Context. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 1130–1140.

[36]

Zhendong Wang, Yifan Jiang, Yadong Lu, yelong shen, Pengcheng He, Weizhu Chen, Zhangyang Wang, and Mingyuan Zhou. 2023a. In-Context Learning Unlocked for Diffusion Models. In Thirty-seventh Conference on Neural Information Processing Systems. https://openreview.net/forum?id=6BZS2EAkns

[37]

Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems 35 (2022), 24824–24837.

[38]

Ronghuan Wu, Wanchao Su, Kede Ma, and Jing Liao. 2023. IconShop: Text-Guided Vector Icon Synthesis with Autoregressive Transformers. ACM Transactions on Graphics 42, 6 (2023), 1–14.

[39]

Weihao Xia, Yulun Zhang, Yujiu Yang, Jing-Hao Xue, Bolei Zhou, and Ming-Hsuan Yang. 2022. Gan inversion: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 45, 3 (2022), 3121–3138.

[40]

Canwen Xu, Yichong Xu, Shuohang Wang, Yang Liu, Chenguang Zhu, and Julian McAuley. 2023. Small models are valuable plug-ins for large language models. arXiv preprint arXiv:2305.08848 (2023).

[41]

Jianwei Yang, Hao Zhang, Feng Li, Xueyan Zou, Chunyuan Li, and Jianfeng Gao. 2023b. Set-of-mark prompting unleashes extraordinary visual grounding in gpt-4v. arXiv preprint arXiv:2310.11441 (2023).

[42]

Zhengyuan Yang, Linjie Li, Kevin Lin, Jianfeng Wang, Chung-Ching Lin, Zicheng Liu, and Lijuan Wang. 2023a. The dawn of lmms: Preliminary explorations with gpt-4v (ision). arXiv preprint arXiv:2309.17421 9, 1 (2023).

[43]

Liang Yuan, Dingkun Yan, Suguru Saito, and Issei Fujishiro. 2024. DiffMat: Latent diffusion models for image-guided material generation. Visual Informatics (2024).

[44]

Polina Zablotskaia, Aliaksandr Siarohin, Bo Zhao, and Leonid Sigal. 2019. Dwnet: Dense warp-based network for pose-guided human video generation. arXiv preprint arXiv:1910.09139 (2019).

[45]

Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. 2023. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 3836–3847.

[46]

Deyao Zhu, Jun Chen, Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. 2023. MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. In The Twelfth International Conference on Learning Representations.