“Aesthetic-guided outward image cropping” by Zhong, Li, Huang, Zhang, Lu, et al. …

Title:

- Aesthetic-guided outward image cropping

Session/Category Title: Computational Photography

Abstract:

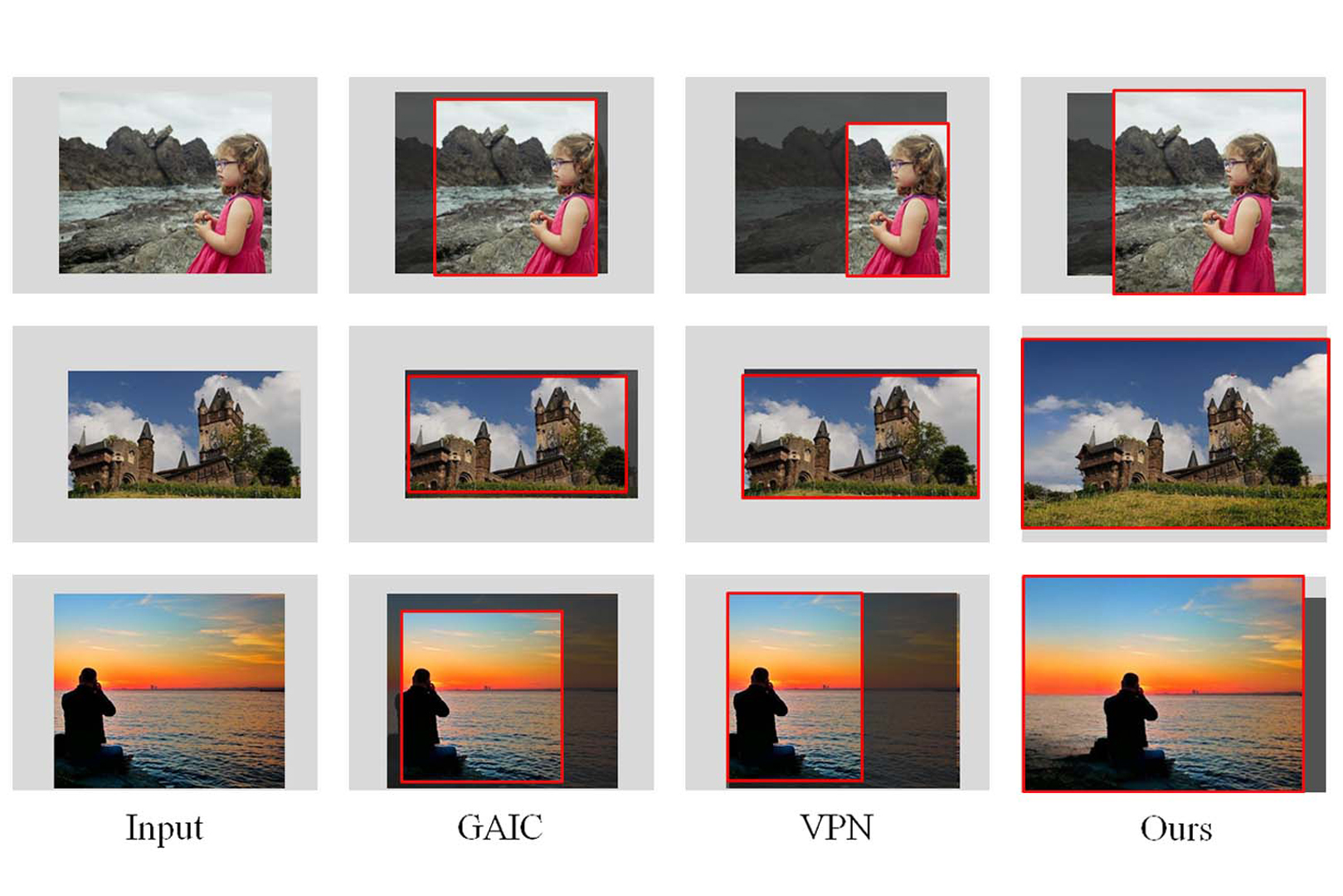

Image cropping is a commonly used post-processing operation that adjusts the scene composition of an input photograph and thereby improves its aesthetics. Existing automatic image cropping methods are all bounded by the image border, so they have very limited freedom to improve aesthetics when the original scene composition is far from ideal, e.g., when the main object is too close to the image border. In this paper, we propose a novel, aesthetic-guided outward image cropping method. It can go beyond the image border to create a desirable composition that is unachievable with previous cropping methods. Our method first evaluates the input image with a field of view (FOV) evaluation model to determine how far the image content should be extrapolated. We then synthesize the image content in the extrapolated region and seek an optimal aesthetic crop within the expanded FOV by jointly considering the aesthetics of the cropped view and the local image quality of the extrapolated content. Experimental results show that our method generates more visually pleasing compositions in cases that are difficult for previous image cropping tools because of the border constraint, and that it automatically degrades to an inward cropping method when high-quality image extrapolation is infeasible.
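The crop search described in the abstract can be illustrated with a toy sketch. It is not the authors' implementation: the learned aesthetic model is replaced by a simple rule-of-thirds proxy (`thirds_score`), the local image quality of the extrapolated content is reduced to a single scalar `quality` in [0, 1], and the expansion `margin` (which the paper obtains from its FOV evaluation model) is passed in by hand. All names, the fixed crop size, and the weight `lam` are assumptions made for illustration only.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Rect:
    x0: float
    y0: float
    x1: float
    y1: float

    @property
    def area(self):
        return max(0.0, self.x1 - self.x0) * max(0.0, self.y1 - self.y0)

    def intersect(self, other):
        return Rect(max(self.x0, other.x0), max(self.y0, other.y0),
                    min(self.x1, other.x1), min(self.y1, other.y1))


def thirds_score(crop, subject):
    """Hypothetical stand-in for an aesthetic model: highest when the
    subject sits on a rule-of-thirds point, 0 if it is outside the crop."""
    sx, sy = subject
    if not (crop.x0 <= sx <= crop.x1 and crop.y0 <= sy <= crop.y1):
        return 0.0
    u = (sx - crop.x0) / (crop.x1 - crop.x0)
    v = (sy - crop.y0) / (crop.y1 - crop.y0)
    d = min(abs(u - a) + abs(v - b)
            for a, b in product((1 / 3, 2 / 3), repeat=2))
    return 1.0 - d


def outside_fraction(crop, original):
    """Fraction of the crop that lies in the extrapolated region."""
    return 1.0 - crop.intersect(original).area / crop.area


def best_crop(original, subject, margin, quality,
              crop_w=90, crop_h=60, step=5, lam=3.0):
    """Slide a fixed-size window over the expanded canvas and keep the crop
    with the best joint score: aesthetics minus a penalty for relying on
    low-quality extrapolated content (assumed scoring rule)."""
    x_lo, x_hi = original.x0 - margin, original.x1 + margin
    y_lo, y_hi = original.y0 - margin, original.y1 + margin
    best, best_score = None, float("-inf")
    for x0 in range(int(x_lo), int(x_hi - crop_w) + 1, step):
        for y0 in range(int(y_lo), int(y_hi - crop_h) + 1, step):
            crop = Rect(x0, y0, x0 + crop_w, y0 + crop_h)
            penalty = lam * outside_fraction(crop, original) * (1.0 - quality)
            score = thirds_score(crop, subject) - penalty
            if score > best_score:
                best, best_score = crop, score
    return best


original = Rect(0, 0, 120, 90)
subject = (10, 45)  # main object close to the left border

outward = best_crop(original, subject, margin=30, quality=0.9)
inward = best_crop(original, subject, margin=30, quality=0.0)
print(outward.x0, outside_fraction(outward, original))
print(inward.x0, outside_fraction(inward, original))
```

With a high `quality`, the selected crop extends past the left border so that the subject can sit on a thirds line. With `quality` set to 0, the penalty outweighs the aesthetic gain and the search settles on a crop entirely inside the original image, which mirrors the fallback to inward cropping that the abstract describes.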
