“Aerobatics control of flying creatures via self-regulated learning”

Session/Category Title: Aerial propagation

Abstract:

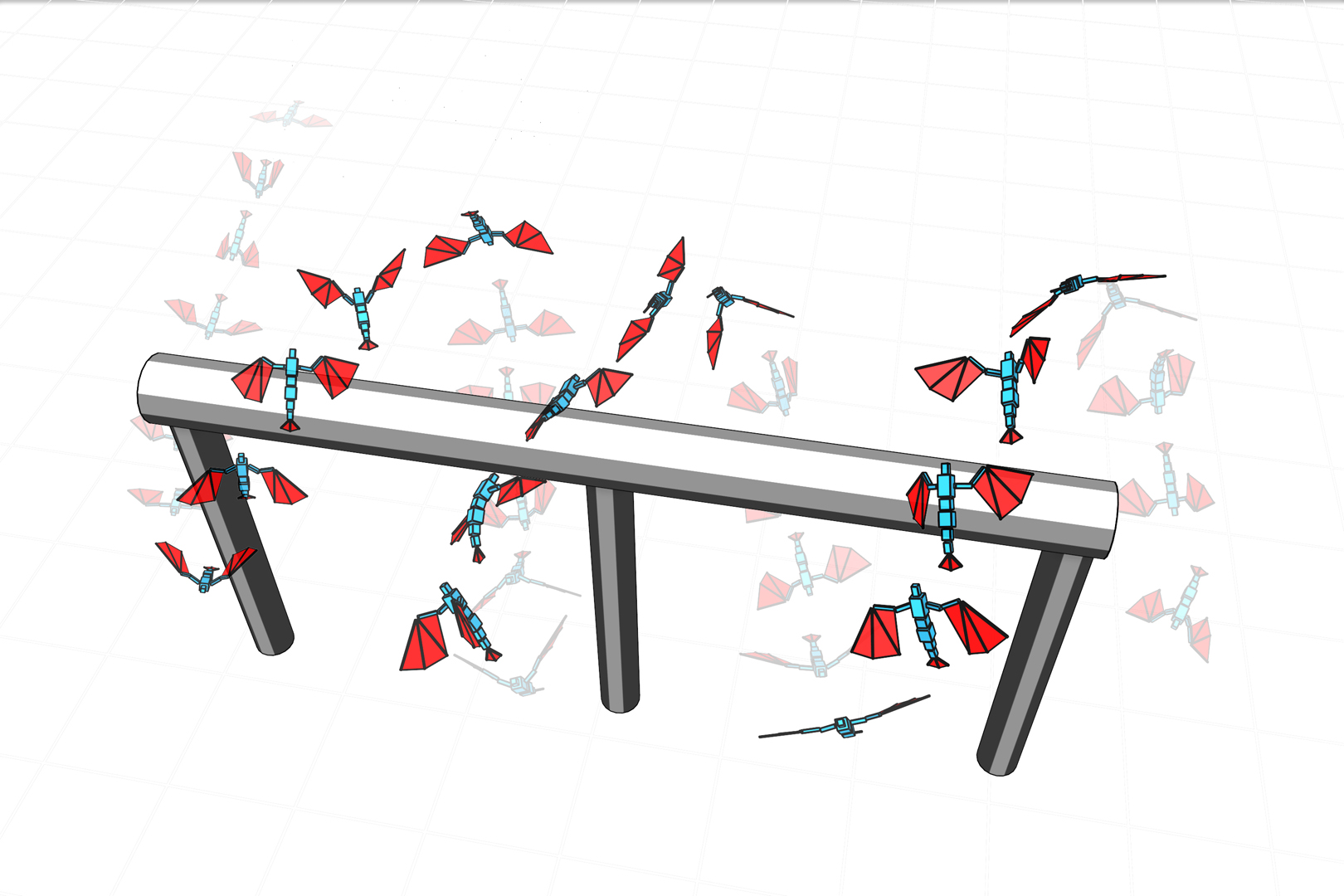

Flying creatures in animated films often perform highly dynamic aerobatic maneuvers, which require the creatures to exert their physical capacity to the extreme and demand skillful control. Designing physics-based controllers (a.k.a. control policies) for aerobatic maneuvers is very challenging because the dynamic states remain in unstable equilibrium most of the time during aerobatics. Recently, Deep Reinforcement Learning (DRL) has shown its potential for constructing physics-based controllers. In this paper, we present a new concept, Self-Regulated Learning (SRL), which is combined with DRL to address the aerobatics control problem. The key idea of SRL is to allow the agent to take control over its own learning using an additional self-regulation policy. This policy allows the agent to regulate its goals according to the capability of the current control policy. The control and self-regulation policies are learned jointly as training progresses. Self-regulated learning can be viewed as the agent building its own curriculum and seeking compromises on its goals. The effectiveness of our method is demonstrated with physically simulated creatures performing the aerobatic skills of sharp turning, rapid winding, rolling, soaring, and diving.
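The self-regulation idea can be illustrated with a deliberately tiny toy that involves no DRL at all. This is a sketch under stated assumptions, not the authors' method: the `ToyAgent` class, its scalar `capability`, the `learn` update, and the `self_regulate` rule are all hypothetical, and the "self-regulation policy" here is a fixed hand-written rule rather than a policy learned jointly with the controller. It only shows why letting an agent compromise on its goal, asking for no more than its current controller can achieve, can act as an automatic curriculum.

```python
from dataclasses import dataclass


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a control policy, reduced to one number:
    the largest goal value it can currently achieve."""
    capability: float = 1.0
    learning_rate: float = 0.5

    def attempt(self, goal: float) -> bool:
        # The toy "policy" succeeds only when the goal is within its capability.
        return goal <= self.capability

    def learn(self, goal: float, success: bool) -> None:
        # Only successful practice improves capability in this toy, and
        # goals closer to the current limit yield larger improvements.
        if success:
            self.capability += self.learning_rate * goal / self.capability


def self_regulate(user_goal: float, capability: float) -> float:
    # Hypothetical hand-written rule standing in for the learned
    # self-regulation policy: compromise on the user's goal so that it
    # never exceeds what the current control policy can achieve.
    return min(user_goal, capability)


def train(user_goal: float, episodes: int, regulate: bool) -> float:
    """Run the toy training loop and return the agent's final capability."""
    agent = ToyAgent()
    for _ in range(episodes):
        goal = self_regulate(user_goal, agent.capability) if regulate else user_goal
        success = agent.attempt(goal)
        agent.learn(goal, success)
    return agent.capability


if __name__ == "__main__":
    # Without regulation the hard goal is never reached, so nothing is learned.
    print(train(user_goal=10.0, episodes=20, regulate=False))  # prints 1.0
    # With regulation the goals rise gradually, forming an automatic curriculum.
    print(train(user_goal=10.0, episodes=20, regulate=True))
```

In the unregulated run the agent keeps failing the full goal and never improves; in the regulated run each episode's goal sits at the edge of the current capability, so the agent succeeds, improves, and the goal rises until it reaches the user's original target. Replacing the `min` rule with a learned policy, as the abstract describes, is what distinguishes SRL from this fixed heuristic.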
