“Adaptive Recurrent Frame Prediction with Learnable Motion Vectors” by Wu, Huo, Zuo, Yuan, Peng, et al. …

Conference:

Type(s):

Title:

- Adaptive Recurrent Frame Prediction with Learnable Motion Vectors

Session/Category Title:

- Rendering

Presenter(s)/Author(s):

Abstract:

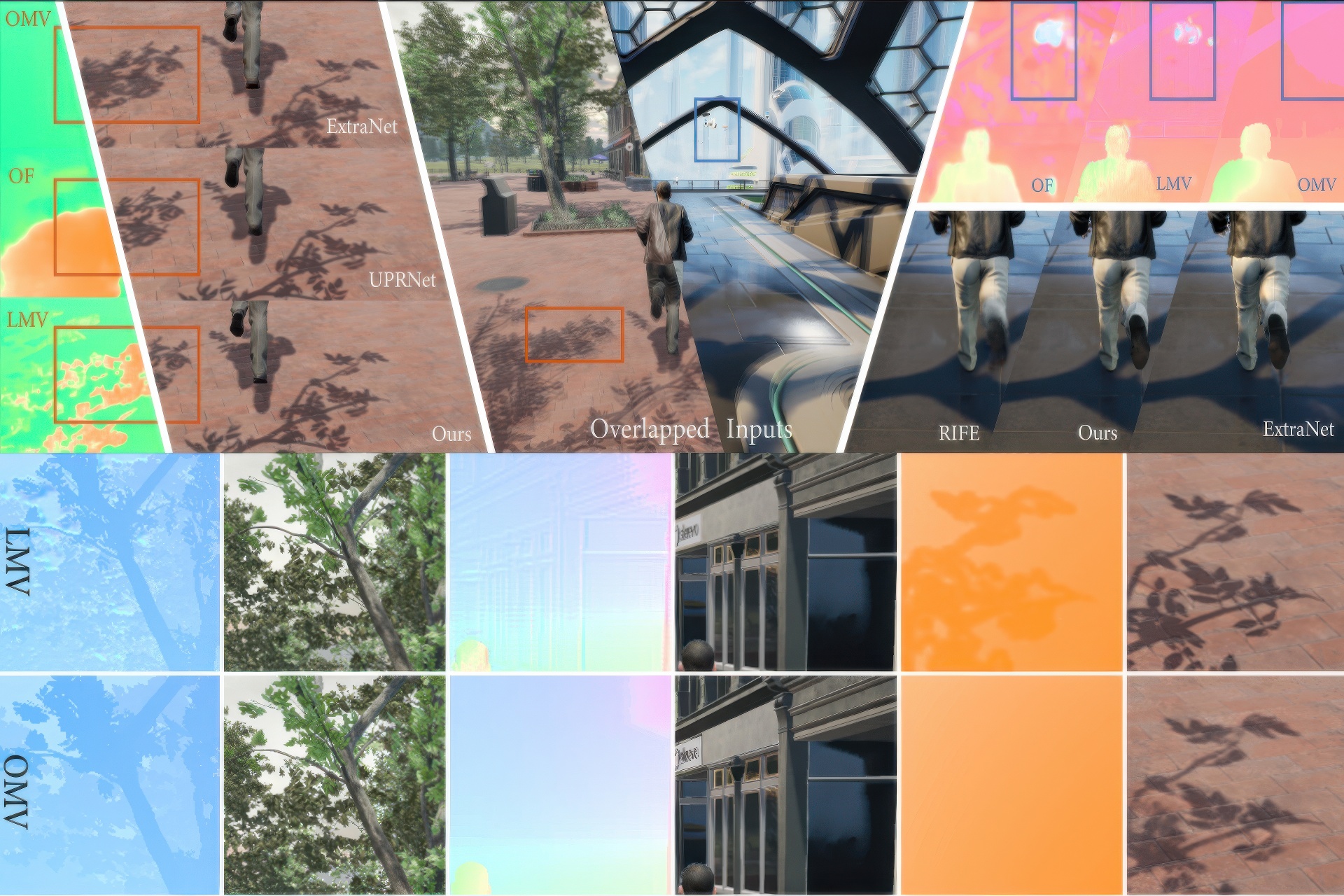

The utilization of dedicated ray tracing graphics cards has contributed to the production of stunning visual effects in real-time rendering. However, the demand for high frame rates and high resolutions remains a challenge to be addressed. A crucial technique for increasing frame rate and resolution is the pixel warping approach, which exploits spatio-temporal coherence. To this end, existing super-resolution and frame prediction methods rely heavily on motion vectors from rendering engine pipelines to track object movements. This work builds upon state-of-the-art heuristic approaches by exploring a novel adaptive recurrent frame prediction framework that integrates learnable motion vectors. Our framework supports the prediction of transparency, particles, and texture animations, with improved motion vectors that capture shading, reflections, and occlusions, in addition to geometry movements. We also introduce a feature streaming neural network, dubbed FSNet, that allows for the adaptive prediction of one or multiple sequential frames. Extensive experiments against state-of-the-art methods demonstrate that FSNet can operate at lower latency with significant visual enhancements and can upscale frame rates by at least two times. This approach offers a flexible pipeline to improve the rendering frame rates of various graphics applications and devices.

References:

[1]

AMD. 2022. FidelityFX Super Resolution 2.1. Retrieved 2022 from https://github.com/GPUOpen-Effects/FidelityFX-FSR2

[2]

Wenbo Bao, Wei-Sheng Lai, Chao Ma, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019a. Depth-aware video frame interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3703–3712.

[3]

Wenbo Bao, Wei-Sheng Lai, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019b. Memc-net: Motion estimation and motion compensation driven neural network for video interpolation and enhancement. IEEE transactions on pattern analysis and machine intelligence 43, 3 (2019), 933–948.

[4]

Karlis Martins Briedis, Abdelaziz Djelouah, Mark Meyer, Ian McGonigal, Markus Gross, and Christopher Schroers. 2021. Neural frame interpolation for rendered content. ACM Transactions on Graphics (TOG) 40, 6 (2021), 1–13.

[5]

Chakravarty R Alla Chaitanya, Anton S Kaplanyan, Christoph Schied, Marco Salvi, Aaron Lefohn, Derek Nowrouzezahrai, and Timo Aila. 2017. Interactive reconstruction of Monte Carlo image sequences using a recurrent denoising autoencoder. ACM Transactions on Graphics (TOG) 36, 4 (2017), 1–12.

[6]

Pierre Charbonnier, Laure Blanc-Feraud, Gilles Aubert, and Michel Barlaud. 1994. Two deterministic half-quadratic regularization algorithms for computed imaging. In Proceedings of 1st international conference on image processing, Vol. 2. IEEE, 168–172.

[7]

Xianhang Cheng and Zhenzhong Chen. 2020. Video frame interpolation via deformable separable convolution. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34. 10607–10614.

[8]

Myungsub Choi, Heewon Kim, Bohyung Han, Ning Xu, and Kyoung Mu Lee. 2020. Channel attention is all you need for video frame interpolation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34. 10663–10671.

[9]

Alexey Dosovitskiy, Philipp Fischer, Eddy Ilg, Philip Hausser, Caner Hazirbas, Vladimir Golkov, Patrick van der Smagt, Daniel Cremers, and Thomas Brox. 2015. FlowNet: Learning Optical Flow With Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV).

[10]

Epic Games. 2022. Unreal Engine. Retrieved 2022 from https://unrealengine.com

[11]

Jie Guo, Xihao Fu, Liqiang Lin, Hengjun Ma, Yanwen Guo, Shiqiu Liu, and Ling-Qi Yan. 2021. ExtraNet: real-time extrapolated rendering for low-latency temporal supersampling. ACM Transactions on Graphics (TOG) 40, 6 (2021), 1–16.

[12]

Yu-Xiao Guo, Guojun Chen, Yue Dong, and Xin Tong. 2022. Classifier Guided Temporal Supersampling for Real-time Rendering. (2022).

[13]

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision. 1026–1034.

[14]

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

[15]

Xiaotao Hu, Zhewei Huang, Ailin Huang, Jun Xu, and Shuchang Zhou. 2023. A dynamic multi-scale voxel flow network for video prediction. arXiv preprint arXiv:2303.09875 (2023).

[16]

Zhaoyang Huang, Xiaoyu Shi, Chao Zhang, Qiang Wang, Ka Chun Cheung, Hongwei Qin, Jifeng Dai, and Hongsheng Li. 2022a. FlowFormer: A Transformer Architecture for Optical Flow. ECCV (2022).

[17]

Zhewei Huang, Tianyuan Zhang, Wen Heng, Boxin Shi, and Shuchang Zhou. 2022b. Real-time intermediate flow estimation for video frame interpolation. In European Conference on Computer Vision. Springer, 624–642.

[18]

Tak-Wai Hui and Chen Change Loy. 2020. Liteflownet3: Resolving correspondence ambiguity for more accurate optical flow estimation. In European Conference on Computer Vision. Springer, 169–184.

[19]

Tak-Wai Hui, Xiaoou Tang, and Chen Change Loy. 2018. Liteflownet: A lightweight convolutional neural network for optical flow estimation. In Proceedings of the IEEE conference on computer vision and pattern recognition. 8981–8989.

[20]

Tak-Wai Hui, Xiaoou Tang, and Chen Change Loy. 2020. A lightweight optical flow CNN—Revisiting data fidelity and regularization. IEEE transactions on pattern analysis and machine intelligence 43, 8 (2020), 2555–2569.

[21]

Junhwa Hur and Stefan Roth. 2019. Iterative residual refinement for joint optical flow and occlusion estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5754–5763.

[22]

E. Ilg, N. Mayer, T. Saikia, M. Keuper, and T. Brox. 2017. FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[23]

Intel. 2022. Intel Xe Super Sampling. https://www.intel.com/content/www/us/en/products/docs/arc-discrete-graphics/xess.html/

[24]

Huaizu Jiang, Deqing Sun, Varun Jampani, Ming-Hsuan Yang, Erik Learned-Miller, and Jan Kautz. 2018. Super slomo: High quality estimation of multiple intermediate frames for video interpolation. In Proceedings of the IEEE conference on computer vision and pattern recognition. 9000–9008.

[25]

Shihao Jiang, Dylan Campbell, Yao Lu, Hongdong Li, and Richard Hartley. 2021. Learning to estimate hidden motions with global motion aggregation. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 9772–9781.

[26]

Xin Jin, Longhai Wu, Jie Chen, Youxin Chen, Jayoon Koo, and Cheul-hee Hahm. 2023. A Unified Pyramid Recurrent Network for Video Frame Interpolation. In Proceedings of the IEEE conference on computer vision and pattern recognition.

[27]

Lingtong Kong, Boyuan Jiang, Donghao Luo, Wenqing Chu, Xiaoming Huang, Ying Tai, Chengjie Wang, and Jie Yang. 2022. IFRNet: Intermediate Feature Refine Network for Efficient Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1969–1978.

[28]

Zhan Li, Carl S Marshall, Deepak S Vembar, and Feng Liu. 2022. Future Frame Synthesis for Fast Monte Carlo Rendering. In Graphics Interface 2022.

[29]

Edward Liu. 2020. DLSS 2.0-Image reconstruction for real-time rendering with deep learning. In GPU Technology Conference (GTC).

[30]

Ziwei Liu, Raymond A Yeh, Xiaoou Tang, Yiming Liu, and Aseem Agarwala. 2017. Video frame synthesis using deep voxel flow. In Proceedings of the IEEE international conference on computer vision. 4463–4471.

[31]

Simon Niklaus, Long Mai, and Feng Liu. 2017. Video frame interpolation via adaptive separable convolution. In Proceedings of the IEEE International Conference on Computer Vision. 261–270.

[32]

NVIDIA. 2022. DLSS 3.0. Retrieved 2022 from https://www.nvidia.com/en-us/geforce/technologies/dlss/

[33]

Anurag Ranjan and Michael J Black. 2017. Optical flow estimation using a spatial pyramid network. In Proceedings of the IEEE conference on computer vision and pattern recognition. 4161–4170.

[34]

Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention. Springer, 234–241.

[35]

Daniel Scherzer, Lei Yang, Oliver Mattausch, Diego Nehab, Pedro V Sander, Michael Wimmer, and Elmar Eisemann. 2012. Temporal coherence methods in real-time rendering. In Computer Graphics Forum, Vol. 31. Wiley Online Library, 2378–2408.

[36]

Christoph Schied, Anton Kaplanyan, Chris Wyman, Anjul Patney, Chakravarty R Alla Chaitanya, John Burgess, Shiqiu Liu, Carsten Dachsbacher, Aaron Lefohn, and Marco Salvi. 2017. Spatiotemporal variance-guided filtering: real-time reconstruction for path-traced global illumination. In Proceedings of High Performance Graphics. 1–12.

[37]

Xingjian Shi, Zhourong Chen, Hao Wang, Dit-Yan Yeung, Wai-Kin Wong, and Wang-chun Woo. 2015. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Advances in neural information processing systems 28 (2015).

[38]

Deqing Sun, Xiaodong Yang, Ming-Yu Liu, and Jan Kautz. 2018. Pwc-net: Cnns for optical flow using pyramid, warping, and cost volume. In Proceedings of the IEEE conference on computer vision and pattern recognition. 8934–8943.

[39]

Zachary Teed and Jia Deng. 2020. Raft: Recurrent all-pairs field transforms for optical flow. In European conference on computer vision. Springer, 402–419.

[40]

Bruce Walter, George Drettakis, and Steven Parker. 1999. Interactive rendering using the render cache. In Rendering Techniques’ 99: Proceedings of the Eurographics Workshop in Granada, Spain, June 21–23, 1999 10. Springer, 19–30.

[41]

Haofei Xu, Jing Zhang, Jianfei Cai, Hamid Rezatofighi, and Dacheng Tao. 2022. GMFlow: Learning Optical Flow via Global Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8121–8130.

[42]

Xiangyu Xu, Li Siyao, Wenxiu Sun, Qian Yin, and Ming-Hsuan Yang. 2019. Quadratic video interpolation. Advances in Neural Information Processing Systems 32 (2019).

[43]

Lei Yang, Shiqiu Liu, and Marco Salvi. 2020. A survey of temporal antialiasing techniques. In Computer graphics forum, Vol. 39. Wiley Online Library, 607–621.

[44]

Yan Zeng, Lu Wang, Yanning Xu, and Xiangxu Meng. 2022. Neural Temporal Denoising for Indirect Illumination. IEEE Transactions on Visualization and Computer Graphics (2022), 1–11. https://doi.org/10.1109/TVCG.2022.3217305

[45]

Zheng Zeng, Shiqiu Liu, Jinglei Yang, Lu Wang, and Ling-Qi Yan. 2021. Temporally Reliable Motion Vectors for Real-time Ray Tracing. In Computer Graphics Forum, Vol. 40. Wiley Online Library, 79–90.