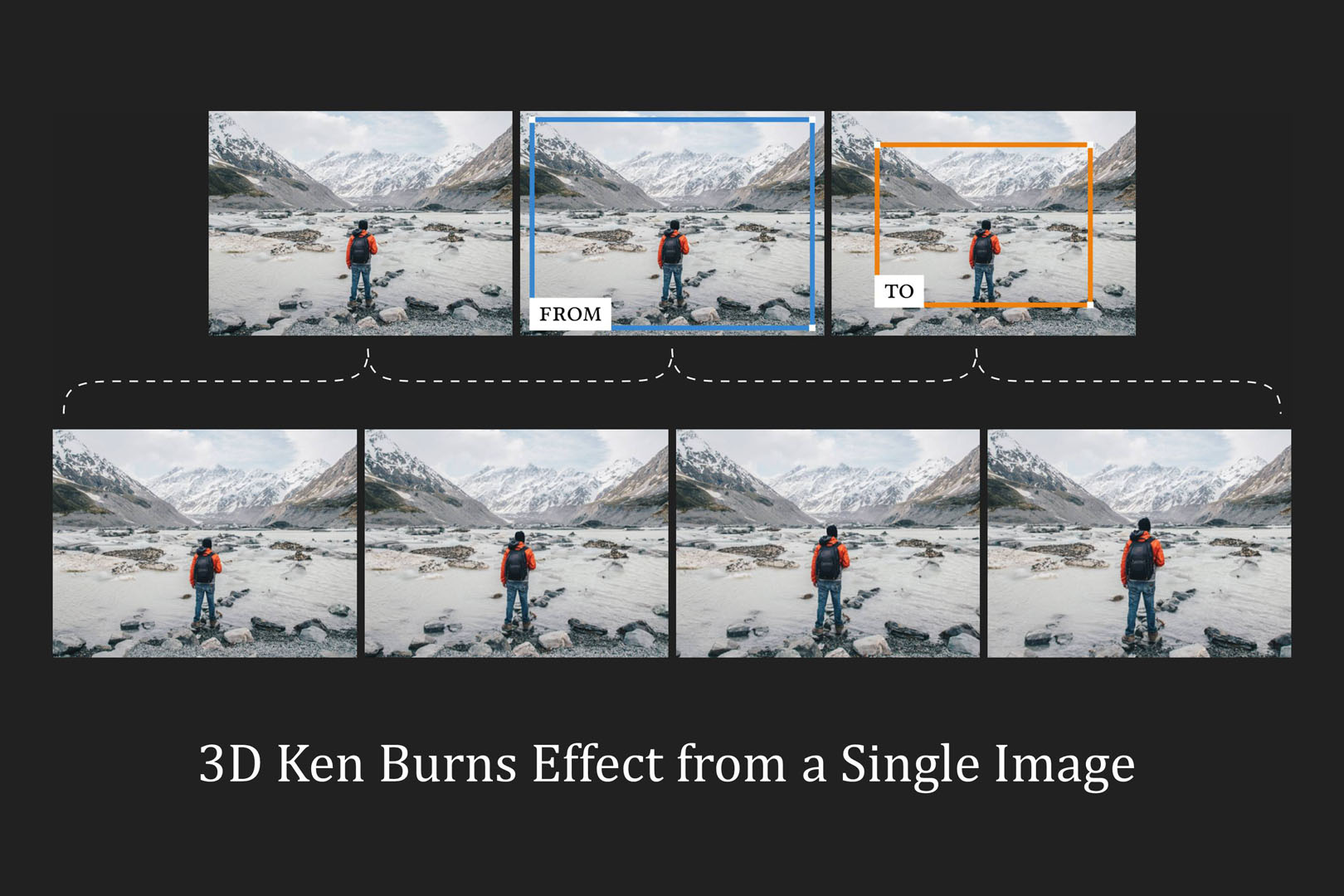

“3D Ken Burns effect from a single image” by Niklaus, Mai, Yang and Liu

Conference:

Type(s):

Title:

- 3D Ken Burns effect from a single image

Session/Category Title:

- Synthesis in the Arvo

Presenter(s)/Author(s):

Moderator(s):

Abstract:

The Ken Burns effect allows animating still images with a virtual camera scan and zoom. Adding parallax, which results in the 3D Ken Burns effect, enables significantly more compelling results. Creating such effects manually is time-consuming and demands sophisticated editing skills. Existing automatic methods, however, require multiple input images from varying viewpoints. In this paper, we introduce a framework that synthesizes the 3D Ken Burns effect from a single image, supporting both a fully automatic mode and an interactive mode with the user controlling the camera. Our framework first leverages a depth prediction pipeline, which estimates scene depth that is suitable for view synthesis tasks. To address the limitations of existing depth estimation methods such as geometric distortions, semantic distortions, and inaccurate depth boundaries, we develop a semantic-aware neural network for depth prediction, couple its estimate with a segmentation-based depth adjustment process, and employ a refinement neural network that facilitates accurate depth predictions at object boundaries. According to this depth estimate, our framework then maps the input image to a point cloud and synthesizes the resulting video frames by rendering the point cloud from the corresponding camera positions. To address disocclusions while maintaining geometrically and temporally coherent synthesis results, we utilize context-aware color- and depth-inpainting to fill in the missing information in the extreme views of the camera path, thus extending the scene geometry of the point cloud. Experiments with a wide variety of image content show that our method enables realistic synthesis results. Our study demonstrates that our system allows users to achieve better results while requiring little effort compared to existing solutions for the 3D Ken Burns effect creation.

References:

1. Amir Atapour Abarghouei and Toby P. Breckon. 2018. Real-Time Monocular Depth Estimation Using Synthetic Data With Domain Adaptation via Image Style Transfer. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

2. Kara-Ali Aliev, Dmitry Ulyanov, and Victor S. Lempitsky. 2019. Neural Point-Based Graphics. arXiv/1906.08240 (2019).Google Scholar

3. Giang Bui, Truc Le, Brittany Morago, and Ye Duan. 2018. Point-Based Rendering Enhancement via Deep Learning. The Visual Computer 34, 6–8 (2018), 829–841.Google ScholarDigital Library

4. Gaurav Chaurasia, Sylvain Duchêne, Olga Sorkine-Hornung, and George Drettakis. 2013. Depth Synthesis and Local Warps for Plausible Image-Based Navigation. ACM Transactions on Graphics 32, 3 (2013), 30:1–30:12.Google ScholarDigital Library

5. Gaurav Chaurasia, Olga Sorkine, and George Drettakis. 2011. Silhouette-Aware Warping for Image-Based Rendering. Computer Graphics Forum 30, 4 (2011), 1223–1232.Google ScholarDigital Library

6. Weifeng Chen, Zhao Fu, Dawei Yang, and Jia Deng. 2016. Single-Image Depth Perception in the Wild. In Advances in Neural Information Processing Systems.Google Scholar

7. Xiaodong Cun, Feng Xu, Chi-Man Pun, and Hao Gao. 2019. Depth-Assisted Full Resolution Network for Single Image-Based View Synthesis. In IEEE Computer Graphics and Applications.Google Scholar

8. Piotr Didyk, Pitchaya Sitthi-amorn, William T. Freeman, Frédo Durand, and Wojciech Matusik. 2013. Joint View Expansion and Filtering for Automultiscopic 3D Displays. ACM Transactions on Graphics 32, 6 (2013), 221:1–221:8.Google ScholarDigital Library

9. David Eigen and Rob Fergus. 2015. Predicting Depth, Surface Normals and Semantic Labels With a Common Multi-Scale Convolutional Architecture. In IEEE International Conference on Computer Vision.Google ScholarDigital Library

10. John Flynn, Ivan Neulander, James Philbin, and Noah Snavely. 2016. DeepStereo: Learning to Predict New Views From the World’S Imagery. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

11. Damien Fourure, Rémi Emonet, Élisa Fromont, Damien Muselet, Alain Trémeau, and Christian Wolf. 2017. Residual Conv-Deconv Grid Network for Semantic Segmentation. In British Machine Vision Conference.Google Scholar

12. Ravi Garg, B. G. Vijay Kumar, Gustavo Carneiro, and Ian D. Reid. 2016. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In European Conference on Computer Vision.Google Scholar

13. Andreas Geiger, Philip Lenz, Christoph Stiller, and Raquel Urtasun. 2013. Vision Meets Robotics: The KITTI Dataset. International Journal of Robotics Research 32, 11 (2013), 1231–1237.Google ScholarDigital Library

14. Clément Godard, Oisin Mac Aodha, and Gabriel J. Brostow. 2017. Unsupervised Monocular Depth Estimation With Left-Right Consistency. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

15. Ariel Gordon, Hanhan Li, Rico Jonschkowski, and Anelia Angelova. 2019. Depth From Videos in the Wild: Unsupervised Monocular Depth Learning From Unknown Cameras. arXiv/1904.04998 (2019).Google Scholar

16. Tewodros Habtegebrial, Kiran Varanasi, Christian Bailer, and Didier Stricker. 2018. Fast View Synthesis With Deep Stereo Vision. arXiv/1804.09690 (2018).Google Scholar

17. Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross B. Girshick. 2017. Mask R-Cnn. In IEEE International Conference on Computer Vision.Google Scholar

18. Peter Hedman, Suhib Alsisan, Richard Szeliski, and Johannes Kopf. 2017. Casual 3D Photography. ACM Transactions on Graphics 36, 6 (2017), 234:1–234:15.Google ScholarDigital Library

19. Peter Hedman and Johannes Kopf. 2018. Instant 3D Photography. ACM Transactions on Graphics 37, 4 (2018), 101:1–101:12.Google ScholarDigital Library

20. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel J. Brostow. 2018. Deep Blending for Free-Viewpoint Image-Based Rendering. ACM Transactions on Graphics 37, 6 (2018), 257:1–257:15.Google ScholarDigital Library

21. Derek Hoiem, Alexei A. Efros, and Martial Hebert. 2005. Automatic Photo Pop-Up. ACM Transactions on Graphics 24, 3 (2005), 577–584.Google ScholarDigital Library

22. Youichi Horry, Ken-Ichi Anjyo, and Kiyoshi Arai. 1997. Tour Into the Picture: Using a Spidery Mesh Interface to Make Animation From a Single Image. In Conference on Computer Graphics and Interactive Techniques.Google ScholarDigital Library

23. Jun-Ting Hsieh, Bingbin Liu, De-An Huang, Fei-Fei Li, and Juan Carlos Niebles. 2018. Learning to Decompose and Disentangle Representations for Video Prediction. In Advances in Neural Information Processing Systems.Google Scholar

24. Jingwei Huang, Zhili Chen, Duygu Ceylan, and Hailin Jin. 2017. 6-Dof VR Videos With a Single 360-Camera. In IEEE Virtual Reality.Google Scholar

25. Dinghuang Ji, Junghyun Kwon, Max McFarland, and Silvio Savarese. 2017. Deep View Morphing. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

26. Nima Khademi Kalantari, Ting-Chun Wang, and Ravi Ramamoorthi. 2016. Learning-Based View Synthesis for Light Field Cameras. ACM Transactions on Graphics 35, 6 (2016), 193:1–193:10.Google ScholarDigital Library

27. Hyung Woo Kang, Soon Hyung Pyo, Ken ichi Anjyo, and Sung Yong Shin. 2001. Tour Into the Picture Using a Vanishing Line and Its Extension to Panoramic Images. Computer Graphics Forum 20, 3 (2001), 132–141.Google ScholarCross Ref

28. Sing Bing Kang, Yin Li, Xin Tong, and Heung-Yeung Shum. 2006. Image-Based Rendering. Foundations and Trends in Computer Graphics and Vision 2, 3 (2006), 173–258.Google ScholarDigital Library

29. Petr Kellnhofer, Piotr Didyk, Szu-Po Wang, Pitchaya Sitthi-amorn, William T. Freeman, Frédo Durand, and Wojciech Matusik. 2017. 3DTV at Home: Eulerian-Lagrangian Stereo-To-Multiview Conversion. ACM Transactions on Graphics 36, 4 (2017), 146:1–146:13.Google ScholarDigital Library

30. Diederik P. Kingma and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. arXiv/1412.6980 (2014).Google Scholar

31. Felix Klose, Oliver Wang, Jean Charles Bazin, Marcus A. Magnor, and Alexander Sorkine-Hornung. 2015. Sampling Based Scene-Space Video Processing. ACM Transactions on Graphics 34, 4 (2015), 67:1–67:11.Google ScholarDigital Library

32. Tobias Koch, Lukas Liebel, Friedrich Fraundorfer, and Marco Körner. 2018. Evaluation of CNN-based Single-Image Depth Estimation Methods. arXiv/1805.01328 (2018).Google Scholar

33. Johannes Kopf. 2016. 360°Video Stabilization. ACM Transactions on Graphics 35, 6 (2016), 195:1–195:9.Google ScholarDigital Library

34. Chun-Jui Lai, Ping-Hsuan Han, and Yi-Ping Hung. 2016. View Interpolation for Video See-Through Head-Mounted Display. In Conference on Computer Graphics and Interactive Techniques.Google Scholar

35. Iro Laina, Christian Rupprecht, Vasileios Belagiannis, Federico Tombari, and Nassir Navab. 2016. Deeper Depth Prediction With Fully Convolutional Residual Networks. In International Conference on 3D Vision.Google Scholar

36. Manuel Lang, Alexander Hornung, Oliver Wang, Steven Poulakos, Aljoscha Smolic, and Markus H. Gross. 2010. Nonlinear Disparity Mapping for Stereoscopic 3D. ACM Transactions on Graphics 29, 4 (2010), 75:1–75:10.Google ScholarDigital Library

37. Alex X. Lee, Richard Zhang, Frederik Ebert, Pieter Abbeel, Chelsea Finn, and Sergey Levine. 2018. Stochastic Adversarial Video Prediction. arXiv/1804.01523 (2018).Google Scholar

38. Nan Li and Zhiyong Huang. 2001. Tour Into the Picture Revisited. In Conference on Computer Graphics, Visualization and Computer Vision.Google Scholar

39. Yijun Li, Jia-Bin Huang, Narendra Ahuja, and Ming-Hsuan Yang. 2016. Deep Joint Image Filtering. In European Conference on Computer Vision.Google Scholar

40. Zhengqi Li, Tali Dekel, Forrester Cole, Richard Tucker, Noah Snavely, Ce Liu, and William T. Freeman. 2019. Learning the Depths of Moving People by Watching Frozen People. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

41. Zhengqi Li and Noah Snavely. 2018. MegaDepth: Learning Single-View Depth Prediction From Internet Photos. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

42. Xiaodan Liang, Lisa Lee, Wei Dai, and Eric P. Xing. 2017. Dual Motion GAN for Future-Flow Embedded Video Prediction. In IEEE International Conference on Computer Vision.Google Scholar

43. Beyang Liu, Stephen Gould, and Daphne Koller. 2010. Single Image Depth Estimation From Predicted Semantic Labels. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

44. Feng Liu, Michael Gleicher, Hailin Jin, and Aseem Agarwala. 2009. Content-Preserving Warps for 3D Video Stabilization. ACM Transactions on Graphics 28, 3 (2009), 44.Google ScholarDigital Library

45. Miaomiao Liu, Xuming He, and Mathieu Salzmann. 2018. Geometry-Aware Deep Network for Single-Image Novel View Synthesis. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

46. Yue Luo, Jimmy S. J. Ren, Mude Lin, Jiahao Pang, Wenxiu Sun, Hongsheng Li, and Liang Lin. 2018. Single View Stereo Matching. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

47. Michaël Mathieu, Camille Couprie, and Yann LeCun. 2015. Deep Multi-Scale Video Prediction Beyond Mean Square Error. arXiv/1511.05440 (2015).Google Scholar

48. Moustafa Meshry, Dan B. Goldman, Sameh Khamis, Hugues Hoppe, Rohit Pandey, Noah Snavely, and Ricardo Martin-Brualla. 2019. Neural Rerendering in the Wild. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

49. Ben Mildenhall, Pratul P. Srinivasan, Rodrigo Ortiz Cayon, Nima Khademi Kalantari, Ravi Ramamoorthi, Ren Ng, and Abhishek Kar. 2019. Local Light Field Fusion: Practical View Synthesis With Prescriptive Sampling Guidelines. ACM Transactions on Graphics 38, 4 (2019), 29:1–29:14.Google ScholarDigital Library

50. Arsalan Mousavian, Hamed Pirsiavash, and Jana Kosecka. 2016. Joint Semantic Segmentation and Depth Estimation With Deep Convolutional Networks. In International Conference on 3D Vision.Google Scholar

51. Kamyar Nazeri, Eric Ng, Tony Joseph, Faisal Z. Qureshi, and Mehran Ebrahimi. 2019. EdgeConnect: Generative Image Inpainting With Adversarial Edge Learning. arXiv/1901.00212 (2019).Google Scholar

52. Vladimir Nekrasov, Thanuja Dharmasiri, Andrew Spek, Tom Drummond, Chunhua Shen, and Ian D. Reid. 2018. Real-Time Joint Semantic Segmentation and Depth Estimation Using Asymmetric Annotations. arXiv/1809.04766 (2018).Google Scholar

53. Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yong-Liang Yang. 2019. HoloGAN: Unsupervised Learning of 3D Representations From Natural Images. arXiv/1904.01326 (2019).Google Scholar

54. Simon Niklaus and Feng Liu. 2018. Context-Aware Synthesis for Video Frame Interpolation. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

55. Augustus Odena, Vincent Dumoulin, and Chris Olah. 2016. Deconvolution and Checkerboard Artifacts. Technical Report.Google Scholar

56. Kyle Olszewski, Sergey Tulyakov, Oliver J. Woodford, Hao Li, and Linjie Luo. 2019. Transformable Bottleneck Networks. arXiv/1904.06458 (2019).Google Scholar

57. Eunbyung Park, Jimei Yang, Ersin Yumer, Duygu Ceylan, and Alexander C. Berg. 2017. Transformation-Grounded Image Generation Network for Novel 3D View Synthesis. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

58. Eric Penner and Li Zhang. 2017. Soft 3D Reconstruction for View Synthesis. ACM Transactions on Graphics 36, 6 (2017), 235:1–235:11.Google ScholarDigital Library

59. Xiaojuan Qi, Renjie Liao, Zhengzhe Liu, Raquel Urtasun, and Jiaya Jia. 2018. GeoNet: Geometric Neural Network for Joint Depth and Surface Normal Estimation. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

60. D. M. Motiur Rahaman and Manoranjan Paul. 2018. Virtual View Synthesis for Free Viewpoint Video and Multiview Video Compression Using Gaussian Mixture Modelling. IEEE Transactions on Image Processing 27, 3 (2018), 1190–1201.Google ScholarCross Ref

61. Nicola Ranieri, Simon Heinzle, Quinn Smithwick, Daniel Reetz, Lanny S. Smoot, Wojciech Matusik, and Markus H. Gross. 2012. Multi-Layered Automultiscopic Displays. Computer Graphics Forum 31, 7-2 (2012), 2135–2143.Google ScholarDigital Library

62. Fitsum A. Reda, Guilin Liu, Kevin J. Shih, Robert Kirby, Jon Barker, David Tarjan, Andrew Tao, and Bryan Catanzaro. 2018. SDC-Net: Video Prediction Using Spatially-Displaced Convolution. In European Conference on Computer Vision.Google Scholar

63. Konstantinos Rematas, Ira Kemelmacher-Shlizerman, Brian Curless, and Steve Seitz. 2018. Soccer on Your Tabletop. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

64. Konstantinos Rematas, Chuong H. Nguyen, Tobias Ritschel, Mario Fritz, and Tinne Tuytelaars. 2017. Novel Views of Objects From a Single Image. IEEE Transactions on Pattern Analysis and Machine Intelligence 39, 8 (2017), 1576–1590.Google ScholarDigital Library

65. Ashutosh Saxena, Min Sun, and Andrew Y. Ng. 2009. Make3D: Learning 3D Scene Structure From a Single Still Image. IEEE Transactions on Pattern Analysis and Machine Intelligence 31, 5 (2009), 824–840.Google ScholarDigital Library

66. Nathan Silberman, Derek Hoiem, Pushmeet Kohli, and Rob Fergus. 2012. Indoor Segmentation and Support Inference From RGBD Images. In European Conference on Computer Vision.Google Scholar

67. Karen Simonyan and Andrew Zisserman. 2014. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv/1409.1556 (2014).Google Scholar

68. Vincent Sitzmann, Justus Thies, Felix Heide, Matthias Niessner, Gordon Wetzstein, and Michael Zollhofer. 2019. DeepVoxels: Learning Persistent 3D Feature Embeddings. In IEEE Conference on Computer Vision and Pattern Recognition.Google ScholarCross Ref

69. Pratul P. Srinivasan, Richard Tucker, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng, and Noah Snavely. 2019. Pushing the Boundaries of View Extrapolation With Multiplane Images. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

70. Pratul P. Srinivasan, Tongzhou Wang, Ashwin Sreelal, Ravi Ramamoorthi, and Ren Ng.Google Scholar

71. 2017. Learning to Synthesize a 4D RGBD Light Field From a Single Image. In IEEE International Conference on Computer Vision.Google Scholar

72. Savil Srivastava, Ashutosh Saxena, Christian Theobalt, Sebastian Thrun, and Andrew Y. Ng. 2009. I23 – Rapid Interactive 3D Reconstruction From a Single Image. In Vision, Modeling, and Visualization Workshop.Google Scholar

73. Maxim Tatarchenko, Alexey Dosovitskiy, and Thomas Brox. 2015. Single-View to Multi-View: Reconstructing Unseen Views With a Convolutional Network. arXiv/1511.06702 (2015).Google Scholar

74. Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2019. Deferred Neural Rendering: Image Synthesis Using Neural Textures. ACM Transactions on Graphics 38, 4 (2019), 66:1–66:12.Google ScholarDigital Library

75. Justus Thies, Michael Zollhöfer, Christian Theobalt, Marc Stamminger, and Matthias Nießner. 2018. IGNOR: Image-Guided Neural Object Rendering. arXiv/1811.10720 (2018).Google Scholar

76. Shubham Tulsiani, Richard Tucker, and Noah Snavely. 2018. Layer-Structured 3D Scene Inference via View Synthesis. In European Conference on Computer Vision.Google ScholarCross Ref

77. Benjamin Ummenhofer, Huizhong Zhou, Jonas Uhrig, Nikolaus Mayer, Eddy Ilg, Alexey Dosovitskiy, and Thomas Brox. 2017. DeMoN: Depth and Motion Network for Learning Monocular Stereo. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

78. Carl Vondrick, Hamed Pirsiavash, and Antonio Torralba. 2016. Generating Videos With Scene Dynamics. In Advances in Neural Information Processing Systems.Google Scholar

79. Neal Wadhwa, Rahul Garg, David E. Jacobs, Bryan E. Feldman, Nori Kanazawa, Robert Carroll, Yair Movshovitz-Attias, Jonathan T. Barron, Yael Pritch, and Marc Levoy. 2018. Synthetic Depth-Of-Field With a Single-Camera Mobile Phone. ACM Transactions on Graphics 37, 4 (2018), 64:1–64:13.Google ScholarDigital Library

80. Lijun Wang, Xiaohui Shen, Jianming Zhang, Oliver Wang, Zhe L. Lin, Chih-Yao Hsieh, Sarah Kong, and Huchuan Lu. 2018. DeepLens: Shallow Depth of Field From a Single Image. ACM Transactions on Graphics 37, 6 (2018), 245:1–245:11.Google ScholarDigital Library

81. Ke Xian, Chunhua Shen, Zhiguo Cao, Hao Lu, Yang Xiao, Ruibo Li, and Zhenbo Luo. 2018. Monocular Relative Depth Perception With Web Stereo Data Supervision. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

82. Junyuan Xie, Ross B. Girshick, and Ali Farhadi. 2016. Deep3D: Fully Automatic 2d-To-3d Video Conversion With Deep Convolutional Neural Networks. In European Conference on Computer Vision.Google ScholarCross Ref

83. Jingwei Xu, Bingbing Ni, Zefan Li, Shuo Cheng, and Xiaokang Yang. 2018. Structure Preserving Video Prediction. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

84. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019. Deep View Synthesis From Sparse Photometric Images. ACM Transactions on Graphics 38, 4 (2019), 76:1–76:13.Google ScholarDigital Library

85. Xinchen Yan, Jimei Yang, Ersin Yumer, Yijie Guo, and Honglak Lee. 2016. Perspective Transformer Nets: Learning Single-View 3D Object Reconstruction Without 3D Supervision. In Advances in Neural Information Processing Systems.Google Scholar

86. Jimei Yang, Scott E. Reed, Ming-Hsuan Yang, and Honglak Lee. 2015. Weakly-Supervised Disentangling With Recurrent Transformations for 3D View Synthesis. In Advances in Neural Information Processing Systems.Google Scholar

87. Jiahui Yu, Zhe Lin, Jimei Yang, Xiaohui Shen, Xin Lu, and Thomas S. Huang. 2018. Generative Image Inpainting With Contextual Attention. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

88. Kecheng Zheng, Zheng-Jun Zha, Yang Cao, Xuejin Chen, and Feng Wu. 2018. LA-Net: Layout-Aware Dense Network for Monocular Depth Estimation. In ACM Multimedia.Google Scholar

89. Ke Colin Zheng, Alex Colburn, Aseem Agarwala, Maneesh Agrawala, David Salesin, Brian Curless, and Michael F. Cohen. 2009. Parallax Photography: Creating 3D Cinematic Effects From Stills. In Graphics Interface Conference.Google Scholar

90. Tinghui Zhou, Matthew Brown, Noah Snavely, and David G. Lowe. 2017. Unsupervised Learning of Depth and Ego-Motion From Video. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

91. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo Magnification: Learning View Synthesis Using Multiplane Images. ACM Transactions on Graphics 37, 4 (2018), 65:1–65:12.Google ScholarDigital Library

92. Tinghui Zhou, Shubham Tulsiani, Weilun Sun, Jitendra Malik, and Alexei A. Efros. 2016. View Synthesis by Appearance Flow. In European Conference on Computer Vision.Google Scholar

93. C. Lawrence Zitnick, Sing Bing Kang, Matthew Uyttendaele, Simon A. J. Winder, and Richard Szeliski. 2004. High-Quality Video View Interpolation Using a Layered Representation. ACM Transactions on Graphics 23, 3 (2004), 600–608.Google ScholarDigital Library

94. Daniel Zoran, Phillip Isola, Dilip Krishnan, and William T. Freeman. 2015. Learning Ordinal Relationships for Mid-Level Vision. In IEEE International Conference on Computer Vision.Google Scholar