“TactGAN: Vibrotactile Designing Driven by GAN-based Automatic Generation” by Ban and Ujitoko

Conference:

Experience Type(s):

Title:

- TactGAN: Vibrotactile Designing Driven by GAN-based Automatic Generation

Organizer(s)/Presenter(s):

Description:

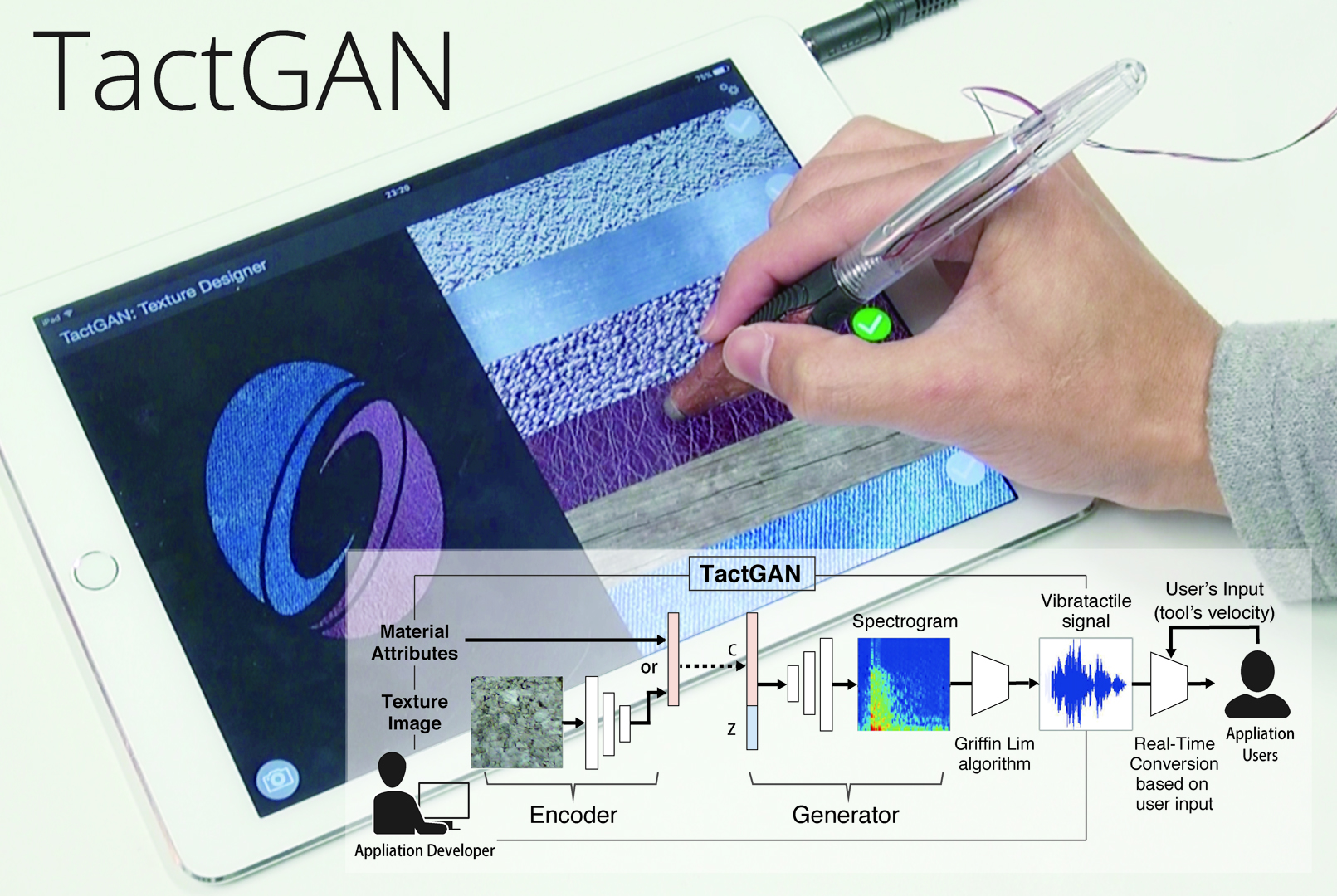

TactGAN helps us design vibrotactile feedback rapidly. With this system, we can generate vibrotactile stimuli from images and from user-defined attribute values such as material types or tactile words. We can design vibrotactile feedback on a touchscreen while touching and comparing the generated stimuli.

In this study, we propose a vibrotactile feedback design system built on a GAN (Generative Adversarial Network)-based vibrotactile signal generator (TactGAN). Preparing appropriate vibrotactile signals for an application is difficult and time-consuming: if the required signals do not exist in a database of vibrotactile stimuli, they must be recorded or tuned by hand. To address this, TactGAN generates signals that present a specific tactile impression based on user-defined parameters. It can also automatically generate signals that present the tactile impression of an image. This enables rapid design of vibrotactile signals for applications that use such feedback. Users can experience the rapid design process for vibrotactile stimuli targeting specific user interfaces or specific content in applications. TactGAN lets us apply various vibrotactile stimuli to UI components such as buttons by specifying material types or tactile words, and attach textures with vibrotactile feedback to a 3D model.

References:

[1] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. Advances in Neural Information Processing Systems 27 (2014), 2672–2680. arXiv:1406.2661v1

[2] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Deep Residual Learning for Image Recognition. (Dec. 2015). arXiv:1512.03385 http://arxiv.org/abs/1512.03385

[3] Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. CoRR abs/1411.1 (2014). arXiv:1411.1784 http://arxiv.org/abs/1411.1784

[4] Matti Strese, Clemens Schuwerk, Albert Iepure, and Eckehard Steinbach. 2017. Multi-modal Feature-Based Surface Material Classification. IEEE Transactions on Haptics 10, 2 (2017), 226–239.

[5] Yusuke Ujitoko and Yuki Ban. 2018. Vibrotactile Signal Generation from Texture Images or Attributes Using Generative Adversarial Network. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 10894 LNCS. 25–36.