“Shall We Dance?” by Ebihara

Conference:

Experience Type(s):

Title:

- Shall We Dance?

Program Title:

- Enhanced Realities

Entry Number: 26

Organizer(s)/Presenter(s):

Collaborator(s):

Project Affiliation:

- University of Maryland

Description:

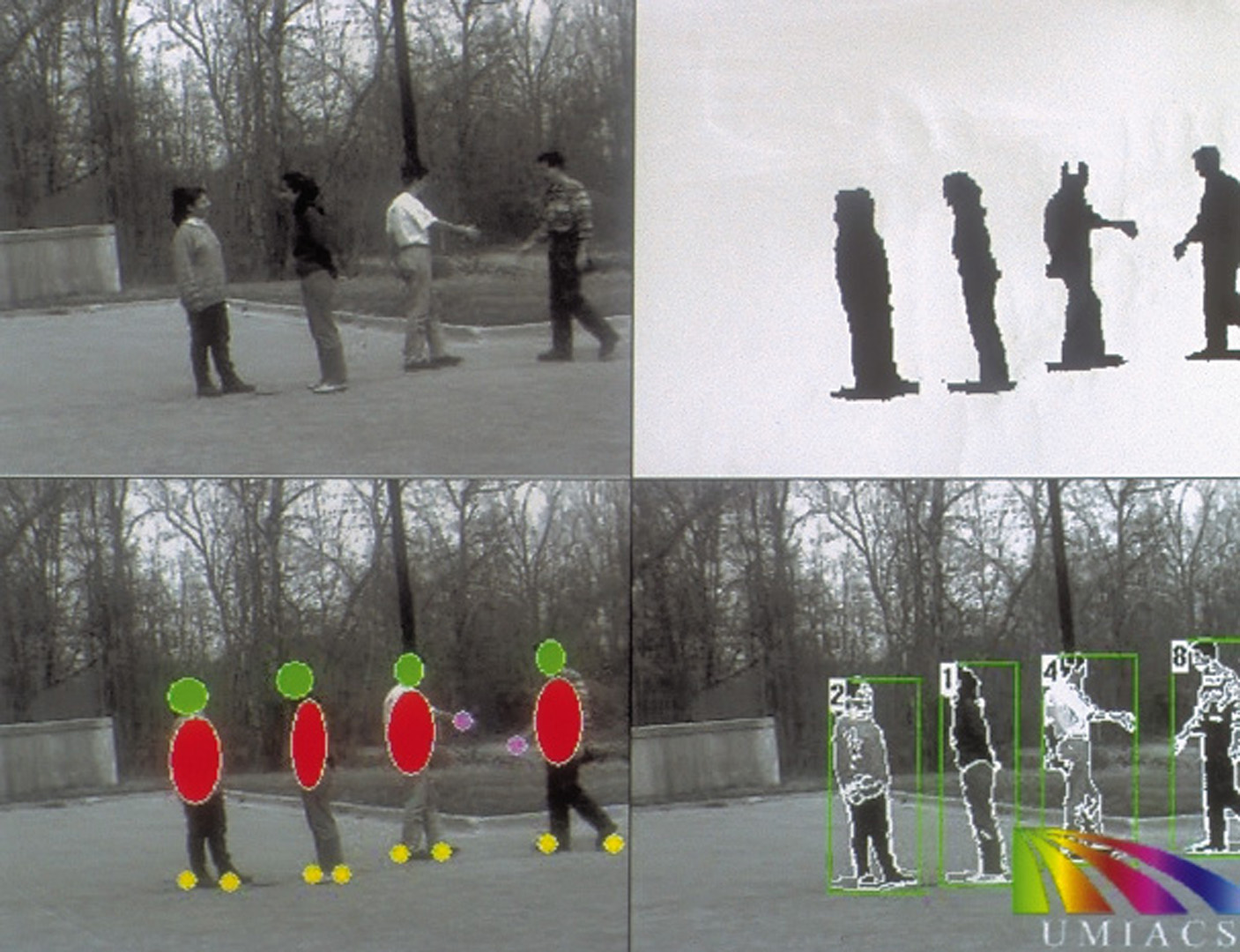

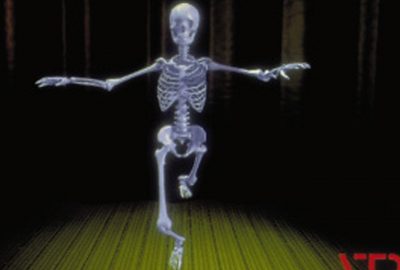

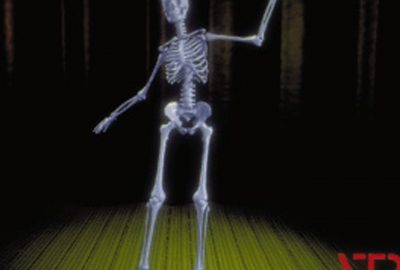

Real-time 3D computer vision gives users control over both the movement and facial expression of a virtual puppet and the music to which the puppet “dances.” Multiple cameras observe a person, and analysis of the human silhouette yields a real-time 3D estimate of body posture. Facial expressions are estimated from images acquired by a camera whose viewing direction can be controlled, so that it can track the face. From the facial images, the deformation of each facial component is estimated. The estimated body postures and facial expressions are reproduced in the puppet by deforming its model according to the estimated data. All estimation and rendering processes run in real time on PC-based systems. Attendees can see themselves dancing in a virtual scene as virtual puppets.
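The description does not say how the silhouettes are obtained or analyzed, so the following is only a minimal sketch of one common approach, assuming a static background image and simple per-pixel differencing. The function names, the threshold value, and the tiny test image are all hypothetical; the centroid and bounding box stand in for whatever silhouette features the actual system used.

```python
from typing import List, Tuple

Image = List[List[int]]   # grayscale rows, pixel values 0..255
Mask = List[List[bool]]   # True where the pixel belongs to the silhouette


def extract_silhouette(frame: Image, background: Image, threshold: int = 30) -> Mask:
    """Mark pixels that differ from the background by more than `threshold`.

    Assumption: the camera and background are static, so a large intensity
    difference indicates the person.
    """
    return [
        [abs(p - b) > threshold for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def silhouette_features(mask: Mask) -> Tuple[Tuple[float, float], Tuple[int, int, int, int]]:
    """Return the centroid (row, col) and bounding box (top, left, bottom, right)."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not pixels:
        raise ValueError("empty silhouette")
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    centroid = (sum(rows) / len(rows), sum(cols) / len(cols))
    bbox = (min(rows), min(cols), max(rows), max(cols))
    return centroid, bbox


# Hypothetical 4x5 scene: a uniform background with a small T-shaped figure.
background = [[10] * 5 for _ in range(4)]
frame = [row[:] for row in background]
for r in range(1, 4):
    frame[r][2] = 200          # vertical "body"
frame[1][1] = 200              # left "arm"
frame[1][3] = 200              # right "arm"

mask = extract_silhouette(frame, background)
centroid, bbox = silhouette_features(mask)
print(centroid, bbox)
```

In a multi-camera setup, features like these would be computed per view and then combined into a 3D posture estimate; that combination step is not specified in the description and is left out of this sketch.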

Additional Images:

- 1998 ETech: Ebihara_Shall We Dance?
