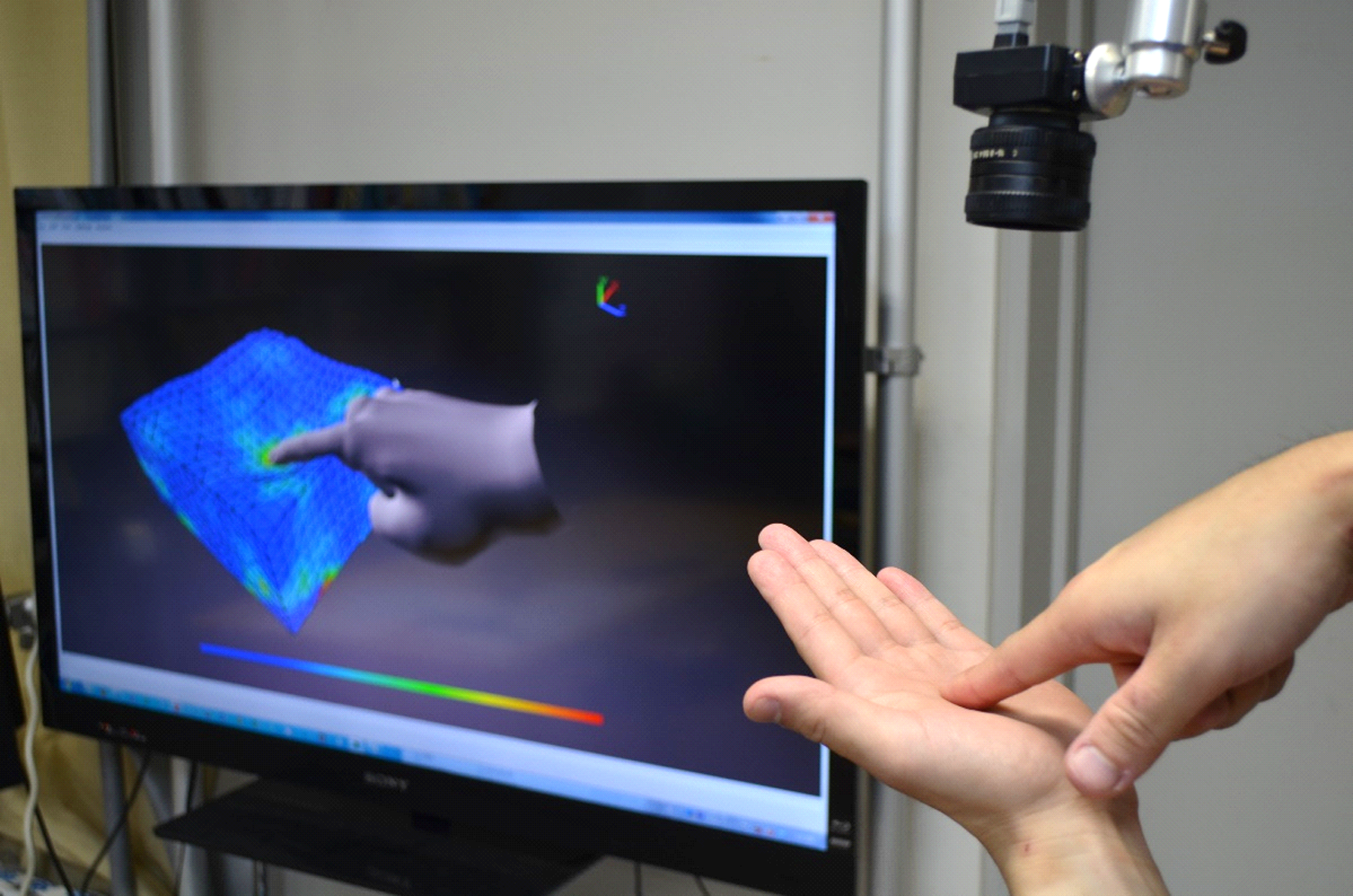

“Palm+Act: Operation by Visually Captured 3D Force on Palm” by Ono, Yoshimoto and Sato

Conference:

Experience Type(s):

Title:

- Palm+Act: Operation by Visually Captured 3D Force on Palm

Organizer(s)/Presenter(s):

Description:

Recently, there has been considerable interest in technologies that appropriate human body parts, especially the skin, as an input surface for human-computer interfaces. Since human skin is always available and highly accessible, such on-body touch interfaces enable the control of various systems in ubiquitous environments. An interface that uses skin as an input surface provides a mobile input system that does not require a dedicated control device for each system. Many studies have demonstrated the concept of on-body touch interaction with a ubiquitous sensing device [Harrison et al. 2011].

The goal of this research is to detect multi-dimensional touch vectors on the body, especially on the palm and fingers. Compared with traditional on-body interaction, this enriches the available input information with quantities such as 3D touch force and torque. Although sensing these multi-dimensional touch vectors is difficult, the proposed technology estimates force by tracking 2D skin displacement with a single RGB camera. This simple sensing method is expected to let users control various complex systems intuitively. As a social impact, on-palm interaction could become a universal controller for various systems in daily life, such as mobile robots, home electronics and digital content.
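The abstract does not state how tracked skin displacement is converted into a force or torque estimate. As a minimal sketch only, the following Python code assumes a linear model: the mean displacement of the tracked skin points stands in for tangential force, inward contraction of the points toward their centroid stands in for normal (pressing) force, and rotation about the centroid stands in for torque. The names `estimate_touch_vector` and `TouchVector`, the gains `k_tangential`, `k_normal` and `k_torque`, and the sign conventions are all hypothetical and are not taken from the original work. The input is assumed to come from some 2D tracker running on the camera image.

```python
from dataclasses import dataclass
from math import hypot
from typing import Iterable, Tuple

# One tracked skin point: position (x, y) and displacement (dx, dy), in pixels.
Sample = Tuple[float, float, float, float]


@dataclass
class TouchVector:
    fx: float  # tangential force proxy along image x
    fy: float  # tangential force proxy along image y
    fz: float  # normal force proxy (assumed positive when skin contracts inward)
    tz: float  # torque proxy about the surface normal


def estimate_touch_vector(
    samples: Iterable[Sample],
    k_tangential: float = 1.0,  # hypothetical gain, would need calibration
    k_normal: float = 1.0,      # hypothetical gain, would need calibration
    k_torque: float = 1.0,      # hypothetical gain, would need calibration
) -> TouchVector:
    """Map 2D skin displacements to a touch vector under an assumed linear model."""
    pts = list(samples)
    if not pts:
        raise ValueError("at least one tracked point is required")

    n = len(pts)
    # Centroid of the tracked points and their mean displacement.
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    mean_dx = sum(p[2] for p in pts) / n
    mean_dy = sum(p[3] for p in pts) / n

    radial = 0.0      # average outward motion relative to the centroid
    rotational = 0.0  # average counter-clockwise motion about the centroid
    counted = 0
    for x, y, dx, dy in pts:
        rx, ry = x - cx, y - cy
        r = hypot(rx, ry)
        if r == 0.0:
            continue  # a point at the centroid has no defined radial direction
        # Remove the common translation before measuring spread and rotation.
        ux, uy = dx - mean_dx, dy - mean_dy
        radial += (rx * ux + ry * uy) / r      # projection on the radial direction
        rotational += (rx * uy - ry * ux) / r  # 2D cross product with that direction
        counted += 1
    if counted:
        radial /= counted
        rotational /= counted

    return TouchVector(
        fx=k_tangential * mean_dx,
        fy=k_tangential * mean_dy,
        fz=-k_normal * radial,
        tz=k_torque * rotational,
    )
```

In practice the gains would have to be calibrated against a reference force sensor, and a real system would likely need a nonlinear or per-user model of skin elasticity; the linear form here is chosen only to keep the idea readable.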

References:

[1] Farneback, G. 2000. Fast and accurate motion estimation using orientation tensors and parametric motion models. In Pattern Recognition, 2000. Proceedings. 15th International Conference on, vol. 1, IEEE, 135–139.

[2] Harrison, C., Tan, D., and Morris, D. 2010. Skinput: appropriating the body as an input surface. In Proceedings of the 28th international conference on Human factors in computing systems, vol. 3, 453–462.

[3] Harrison, C., Benko, H., and Wilson, A. D. 2011. Omnitouch: wearable multitouch interaction everywhere. In Proceedings of the 24th annual ACM symposium on User interface software and technology, UIST ’11, 441–450.

[4] Makino, Y., Sugiura, Y., Ogata, M., and Inami, M. 2013. Tangential force sensing system on forearm. In Proceedings of the 4th Augmented Human International Conference, ACM, AH ’13, 29–34.

[5] Sun, Y., Hollerbach, J., and Mascaro, S. 2009. Estimation of fingertip force direction with computer vision. Robotics, IEEE Transactions on 25, 6, 1356–1369.

[6] Vlack, K., Mizota, T., Kawakami, N., Kamiyama, K., Kajimoto, H., and Tachi, S. 2005. Gelforce: a vision-based traction field computer interface. In CHI ’05 Extended Abstracts on Human Factors in Computing Systems, ACM, CHI EA ’05, 1154–1155.

[7] Yoshimoto, S., Kuroda, Y., Imura, M., and Oshiro, O. 2012. Spatially transparent tactile sensor utilizing electromechanical properties of skin. Advanced Biomedical Engineering 1, 89–97.