“NPSNET and AFIT-HOTAS: Interconnecting Heterogeneously Developed Virtual Environments” by Pratt, Falby and Zyda

Conference:

Experience Type(s):

Title:

- NPSNET and AFIT-HOTAS: Interconnecting Heterogeneously Developed Virtual Environments

Program Title:

- Tomorrow's Realities

Entry Number: 42

Organizer(s)/Presenter(s):

Collaborator(s):

- Philip Amburn

- Rex Haddix

- Dean McCarty

- Marty Stytz

- Daniel Corbin

- John Hearne

- Kristen Kelleher

- Sehung Kwak

- John Locke

- Chuck Lombardo

- Bert Lundy

- John Roesli

- Dennis Schmidt

- Richard Smith

- Dave Young

- Steven Zeswitz

- John Switzer

Project Affiliation:

- Naval Postgraduate School and Air Force Institute of Technology

Description:

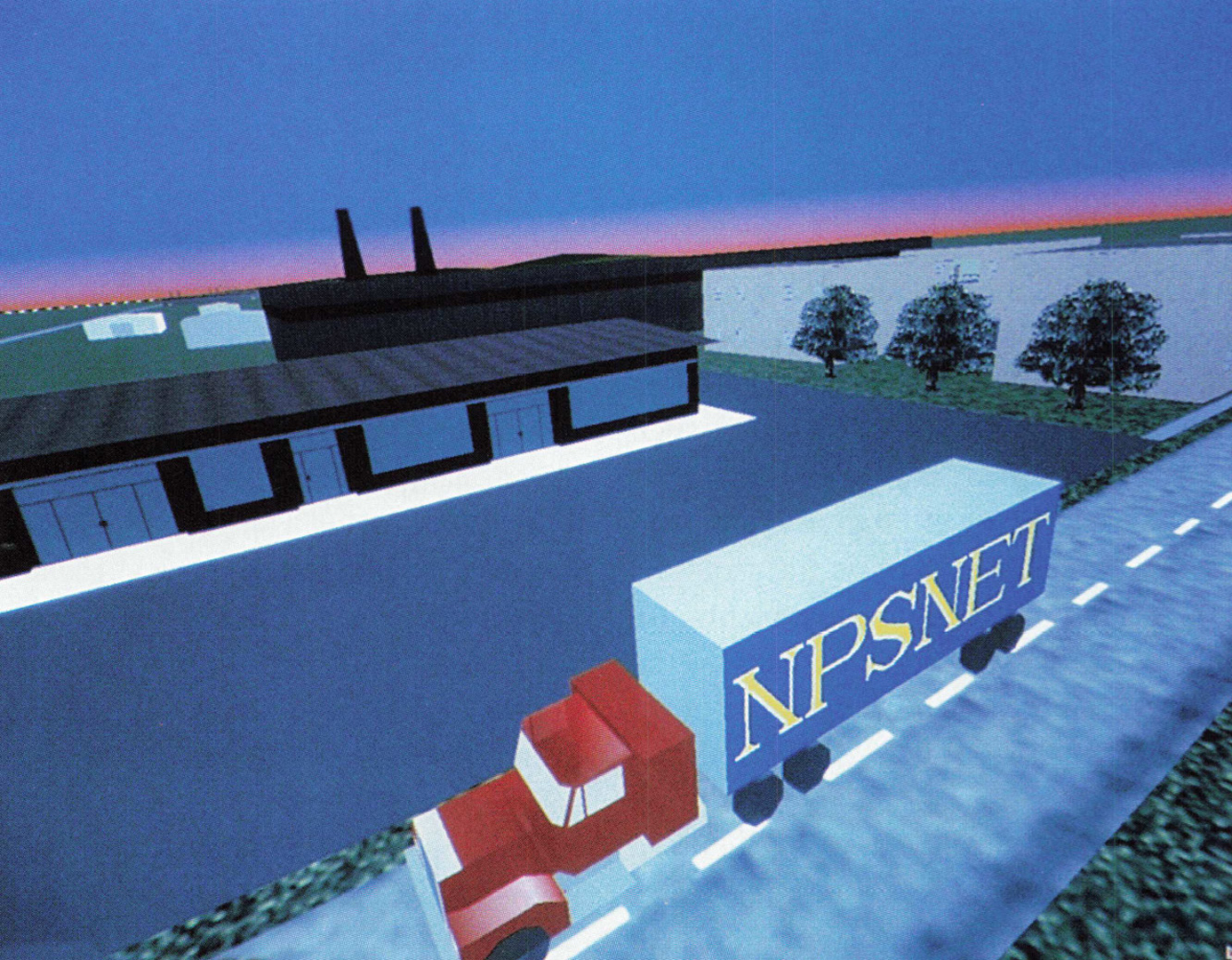

This joint Naval Postgraduate School (NPS)/Air Force Institute of Technology (AFIT) demonstration shows two separately developed virtual environments interoperating using a common communications protocol, a common terrain/model/agent database, and multiple user interaction paradigms. NPSNET has been developed at the Naval Postgraduate School as a real-time, workstation-based, 3D visual simulation system capable of displaying vehicle movement over the ground or in the air using Distributed Interactive Simulation (DIS) networking protocols. The Air Force Institute of Technology has been conducting research in various applications of head-worn display devices in support of immersive virtual environments. Specific applications include operating an air vehicle and independently viewing the virtual environment from any viewpoint. This exhibit shows the interconnection of these heterogeneous projects and consists of six networked nodes.

Conference attendees see and interact with three other attendees and numerous autonomous agents in a shared virtual environment. Each of the first four user nodes interacts using a different paradigm: BOOM with buttons, Head Mounted Display (HMD) with throttle/stick/buttons, LCD shutter glasses with Ascension Bird/buttons, and Out The Window (OTW) on a workstation monitor with Spaceball. Attendees can also watch other attendees using these interaction paradigms and see their views of the virtual world on monitors. Attendees are able to see the social and cultural implications of interacting in a shared virtual world populated not only with intelligent users but also autonomous agents while using multiple interaction (view and input) paradigms. They are able to see and experience the strengths and weaknesses of the different paradigms. In addition, the virtual world being experienced by the BOOM user is visible on a large-screen projection system and heard from speakers spaced throughout the exhibit area.

Each station is able to interact with other stations on the network using DIS protocols. The terrain is a custom-designed 25 km x 25 km area complete with models. Each quadrant of the terrain has its own theme: mountain area, farmland, town with small airport complex, and wooded area with lake.
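The quadrant layout described above can be illustrated with a small lookup. This is only a sketch: the description names the four themes but not which quadrant holds which, so the assignment in `QUADRANT_THEMES`, the metre-based local coordinates, and the function name `quadrant_theme` are all assumptions made for illustration.

```python
TERRAIN_SIZE_M = 25_000.0  # 25 km per side, as stated in the description

# Hypothetical placement: the source lists the themes but not their positions.
# Keys are (column, row), with (0, 0) at the terrain origin.
QUADRANT_THEMES = {
    (0, 0): "farmland",
    (1, 0): "town with small airport complex",
    (0, 1): "mountain area",
    (1, 1): "wooded area with lake",
}


def quadrant_theme(x_m: float, y_m: float) -> str:
    """Return the theme of the quadrant containing a local (x, y) position in metres."""
    if not (0.0 <= x_m <= TERRAIN_SIZE_M and 0.0 <= y_m <= TERRAIN_SIZE_M):
        raise ValueError("position is outside the 25 km x 25 km terrain")
    half = TERRAIN_SIZE_M / 2.0
    col = 1 if x_m >= half else 0
    row = 1 if y_m >= half else 0
    return QUADRANT_THEMES[(col, row)]
```

For instance, under this assumed layout `quadrant_theme(1_000.0, 1_000.0)` falls in the farmland quadrant.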

At the BOOM node, a Fake Space Systems BOOM2C-C high-resolution monochrome display is used to view the environment. The viewpoint can be attached to a participant’s location or interactively controlled by pointing the BOOM and using the buttons to move about. Weapons firing is via buttons.

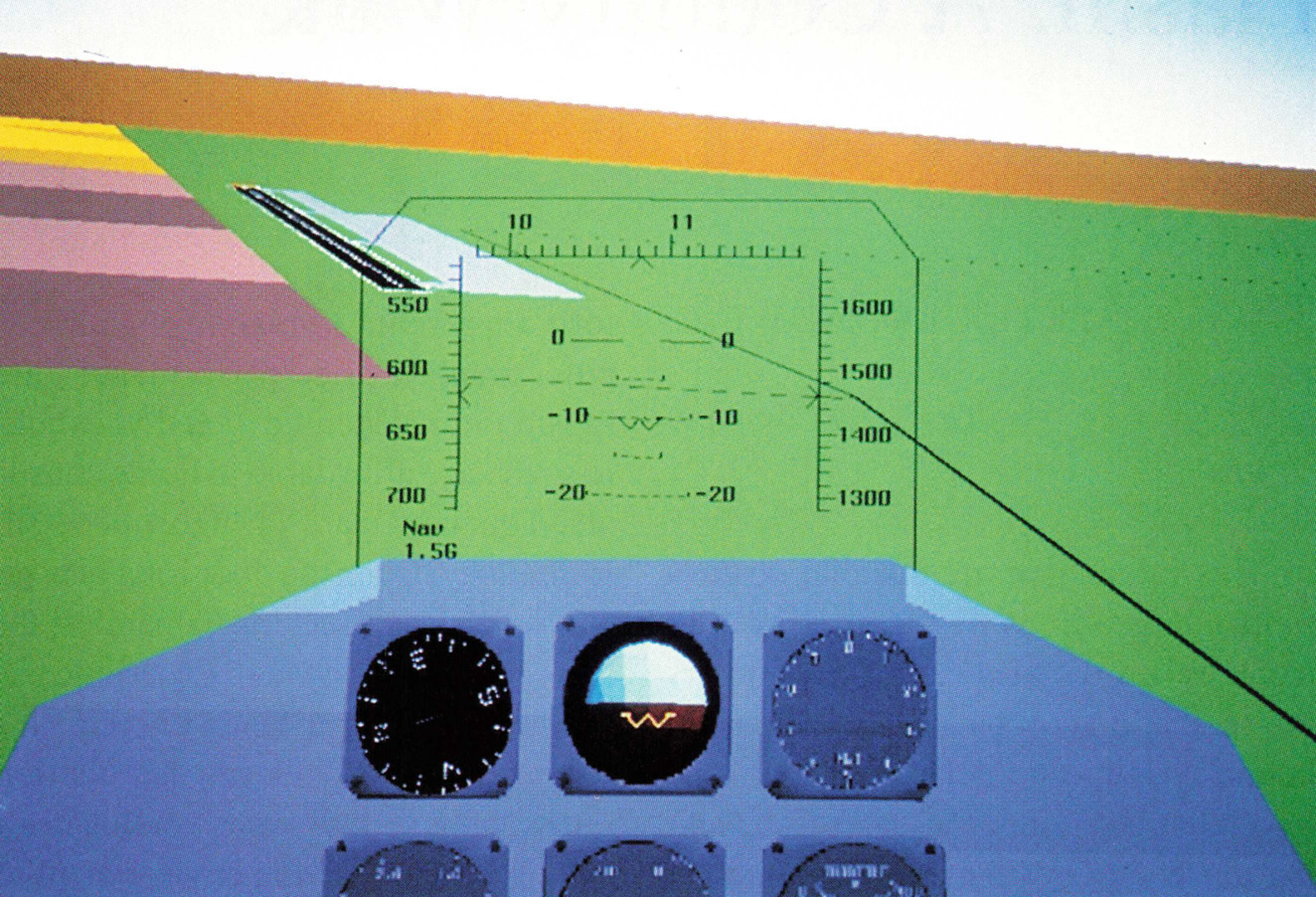

The HMD node uses a Polhemus Laboratories Inc. “looking glass” fiber optic-based head-worn display with a throttle and stick for a “virtual cockpit.” The throttle and stick allow the user to move through the environment and to fire weapons. A simplified cockpit display is provided.

StereoGraphics LCD shutter glasses are used at the LCD node to provide a 3D view of the environment. User input is via an Ascension Bird six-degree-of-freedom (DOF) device using the eye-in-hand metaphor.

The Out The Window (OTW) node presents the user with a 3D monitor view of the environment. Basic vehicle control is via a Spaceball. This serves as the baseline model of the system.

Two additional nodes are part of the network. One is the Autonomous Agent controller. The autonomous agents populating the virtual environment respond to users at specific locations. Two to four trucks drive along the roads. These trucks stop at lights and avoid running into each other and user-driven vehicles. The lake has a sailboat out on a nice day. Several aircraft fly around and interact with the user-driven aircraft. In addition to these vehicles, there are several special entities that interact with the users at specific locations. For example, the Abominable Snowman comes after slow-moving ground vehicles in the mountains. Over the lake, the Loch Ness monster goes after low-flying aircraft. The Autonomous Agent controller display is a simple 2D map showing the location of all the entities in the virtual world, both autonomous and live. The second additional node is the sound server.
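The location-triggered behavior of the special entities might be sketched as follows. This is not the exhibit's Autonomous Agent controller: the zone boundaries, the speed and altitude thresholds, and the names `Entity`, `TriggerZone`, and `select_targets` are hypothetical, chosen only to show how an agent could pick targets by region, vehicle type, speed, and altitude.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class Entity:
    name: str
    x_m: float
    y_m: float
    altitude_m: float
    speed_mps: float
    is_aircraft: bool


@dataclass
class TriggerZone:
    agent_name: str
    x_range_m: Tuple[float, float]
    y_range_m: Tuple[float, float]
    targets_aircraft: bool
    max_speed_mps: Optional[float] = None    # None means no speed limit applies
    max_altitude_m: Optional[float] = None   # None means no altitude limit applies

    def is_triggered_by(self, e: Entity) -> bool:
        """True if the entity is inside the zone and matches the agent's criteria."""
        inside = (self.x_range_m[0] <= e.x_m <= self.x_range_m[1]
                  and self.y_range_m[0] <= e.y_m <= self.y_range_m[1])
        if not inside or e.is_aircraft != self.targets_aircraft:
            return False
        if self.max_speed_mps is not None and e.speed_mps > self.max_speed_mps:
            return False
        if self.max_altitude_m is not None and e.altitude_m > self.max_altitude_m:
            return False
        return True


def select_targets(zone: TriggerZone, entities: Iterable[Entity]) -> List[str]:
    """Names of the entities the zone's agent would go after."""
    return [e.name for e in entities if zone.is_triggered_by(e)]


# Hypothetical zones and thresholds; the source gives no numeric values.
SNOWMAN = TriggerZone("Abominable Snowman", (0.0, 12_500.0), (12_500.0, 25_000.0),
                      targets_aircraft=False, max_speed_mps=5.0)
NESSIE = TriggerZone("Loch Ness monster", (15_000.0, 20_000.0), (15_000.0, 20_000.0),
                     targets_aircraft=True, max_altitude_m=100.0)
```

A controller loop could call `select_targets` for each zone on every update and steer the corresponding agent toward the returned entities.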

The networking protocols used are a subset of the DIS Protocol Data Units (PDUs). To facilitate the use of the PDUs, a modified coordinate system is used. All PDUs are broadcast using UDP/IP. The system is written in ANSI C and AT&T C++ using the Silicon Graphics Performer API. All databases were developed using Software Systems MultiGen. The system runs on Silicon Graphics IRIS workstations in all their incarnations (Personal IRIS, Indigo Elan, GT, GTX, VGX, RE).
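As a rough illustration of broadcasting state updates over UDP/IP, the sketch below packs a small position record and sends it as a broadcast datagram. The record layout is deliberately simplified and is not the DIS PDU format; the field set, the type code `ENTITY_STATE`, the port number, and the function names are assumptions for illustration. Python is used here for brevity, whereas the exhibit software itself is written in C and C++.

```python
import socket
import struct

# Hypothetical record, NOT the real DIS layout:
# type (uint8), entity id (uint16), x, y, z (float64), in network byte order.
_RECORD = struct.Struct("!BHddd")
ENTITY_STATE = 1  # hypothetical type code for this sketch


def pack_entity_state(entity_id: int, x: float, y: float, z: float) -> bytes:
    """Serialize one entity position into a fixed-size datagram payload."""
    return _RECORD.pack(ENTITY_STATE, entity_id, x, y, z)


def unpack_entity_state(data: bytes):
    """Parse a payload produced by pack_entity_state."""
    record_type, entity_id, x, y, z = _RECORD.unpack(data)
    if record_type != ENTITY_STATE:
        raise ValueError("unexpected record type")
    return entity_id, (x, y, z)


def broadcast(payload: bytes, port: int = 3000,
              address: str = "255.255.255.255") -> None:
    """Send one datagram to every host on the local network segment."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(payload, (address, port))
```

Each node would call something like `broadcast(pack_entity_state(7, 1250.5, 300.0, 42.25))` whenever its vehicle state changes, and every other node listening on the same port would decode the record with `unpack_entity_state`. Because UDP is connectionless and unacknowledged, a lost update is simply superseded by the next one.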

This system is unique in several respects. The social interaction of the participants using different paradigms in a common virtual world provides an exciting technological, entertainment, and educational demonstration. As was demonstrated in the Tomorrow’s Realities gallery at SIGGRAPH ’91, NPSNET is an exciting and informative exhibit. This system is several generations more advanced.

We are sponsored by ARPA/ASTO, U.S. Army STRICOM, HQDA AI Center, TRAC Monterey, and the Defense Modeling and Simulation Office.

Hardware

■ One Boom

■ One HMD

■ One LCD shutter glasses

■ One Spaceball

■ One Ascension Bird

■ One Throttle/Stick

■ MIDI Sampler

■ Four Speakers w/wire

■ Ethernet system

■ Four Silicon Graphics IRIS 4D Reality Engines w/64Mb, 4.0.5F Development System, and either DAT drive or 150Mb cartridge tape drive

■ Two IRIS Indigo Elan 4000 w/32Mb, 4.0.5F Development System, C++ compiler version 3.0 and either DAT drive or 150Mb cartridge tape drive

■ Four 1.2 Gig disks, one each for the four Reality Engines

■ Two 435Mb disks, one each for the Indigo Elans

■ One 70 inch color monitor (or projection system) w/cables and encoder/decoder for hookup to Reality Engine

■ Two color repeater monitors