“HandsIn3D: Augmenting the Shared 3D Visual Space with Unmediated Hand Gestures”

Description:

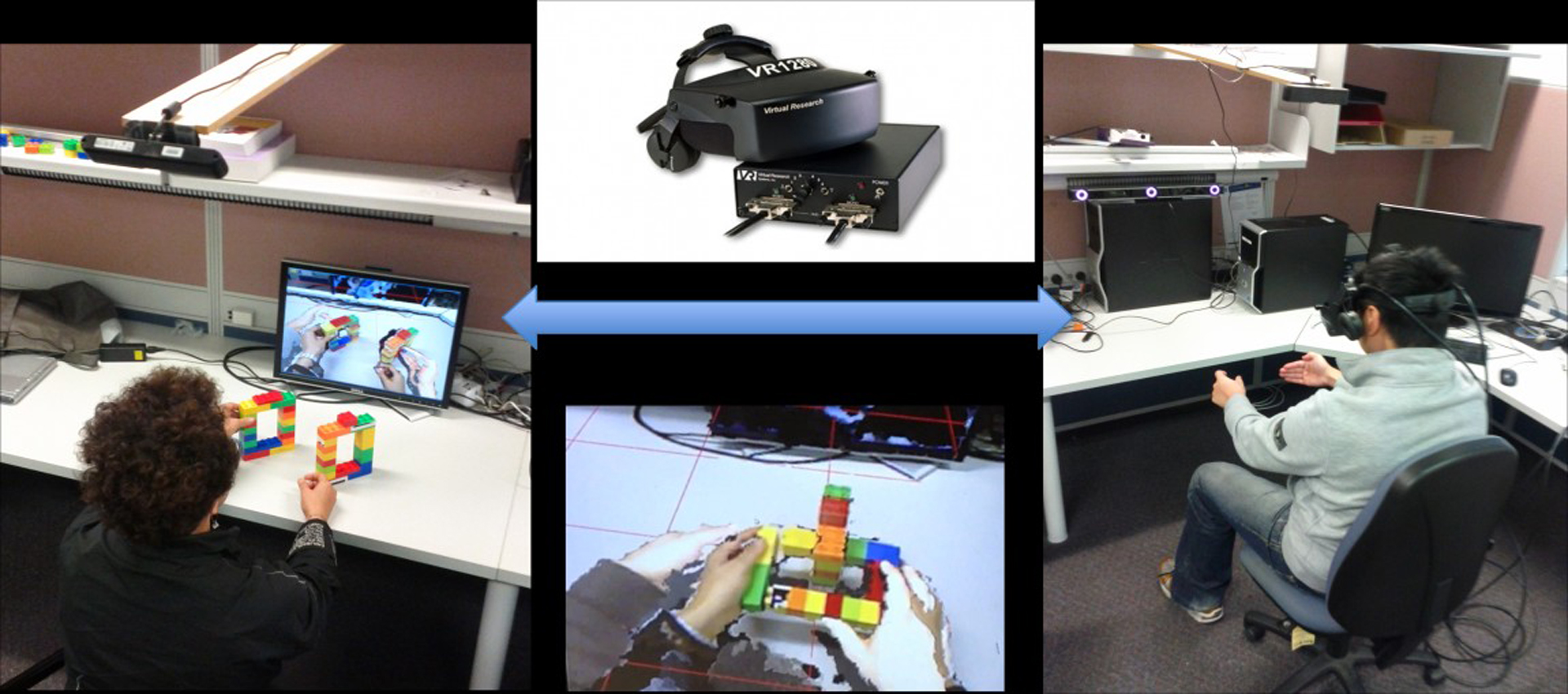

The technologies used in equipment and machinery are becoming increasingly complex and ubiquitous. Operating and maintaining such equipment often requires specialized knowledge and expertise, yet experts with that knowledge are not always available locally. When a machine breaks down, an expert has to be flown in and out to fix it, which is time-consuming and costly. The lack of adequate skill sets onsite and the cost of bringing in an expert often translate into lost productivity [Alem et al. 2011]. Industry therefore has a high demand for technologies that support remote collaboration, in which a remote helper guides a local worker performing tasks on physical objects. With such technologies, the expert no longer needs to travel to the site and can, for example, fix a machine remotely with assistance from a local technician.

References:

[1]

Alem, L., Tecchia, F. and Huang, W. (2011) HandsOnVideo: Towards a gesture based mobile AR system for remote collaboration. In Recent Trends of Mobile Collaborative Augmented Reality. 127–138.

[2]

Fussell, S. R., Setlock, L. D., Yang, J., Ou, J., Mauer, E. and Kramer, A. D. I. (2004) Gestures over video streams to support remote collaboration on physical tasks. Human-Computer Interaction, 19: 273–309.

[3]

Huang, W. and Alem, L. (2011) Supporting Hand Gestures in Mobile Remote Collaboration: A Usability Evaluation. In Proceedings of the 25th BCS Conference on Human Computer Interaction. 211–216.

[4]

Huang, W. and Alem, L. (2013a) HandsinAir: a wearable system for remote collaboration on physical tasks. In Proceedings of the 2013 conference on Computer supported cooperative work companion. 153–156.

[5]

Huang, W. and Alem, L. (2013b) Gesturing in the Air: Supporting Full Mobility in Remote Collaboration on Physical Tasks. Journal of Universal Computer Science.

[6]

Kraut, R. E., Gergle, D. and Fussell, S. R. (2002) The Use of Visual Information in Shared Visual Spaces: Informing the Development of Virtual Co-Presence. In CSCW’02, 31–40.

[7]

Mortensen, J., Vinayagamoorthy, V., Slater, M., Steed, A., Lok, B. and Whitton, M. C. (2002) Collaboration in tele-immersive environments. In EGVE’02, 93–101.

[8]

Schuchardt, P. and Bowman, D. A. (2007) The benefits of immersion for spatial understanding of complex underground cave systems. In VRST’07, 121–124.

[9]

Tecchia, F., Alem, L. and Huang, W. (2012) 3D helping hands: a gesture based MR system for remote collaboration. In Proceedings of the 11th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry. 323–328.

[10]

Huang, W., Alem, L. and Tecchia, F. (2013) HandsIn3D: Supporting Remote Guidance with Immersive Virtual Environments. Human-Computer Interaction – INTERACT 2013. Lecture Notes in Computer Science Volume 8117, 70–77.