“Rapid 3D Building Modeling by Sketching” by Chen and Lin

Conference:

Type(s):

Title:

- Rapid 3D Building Modeling by Sketching

Presenter(s)/Author(s):

Entry Number:

- 05

Abstract:

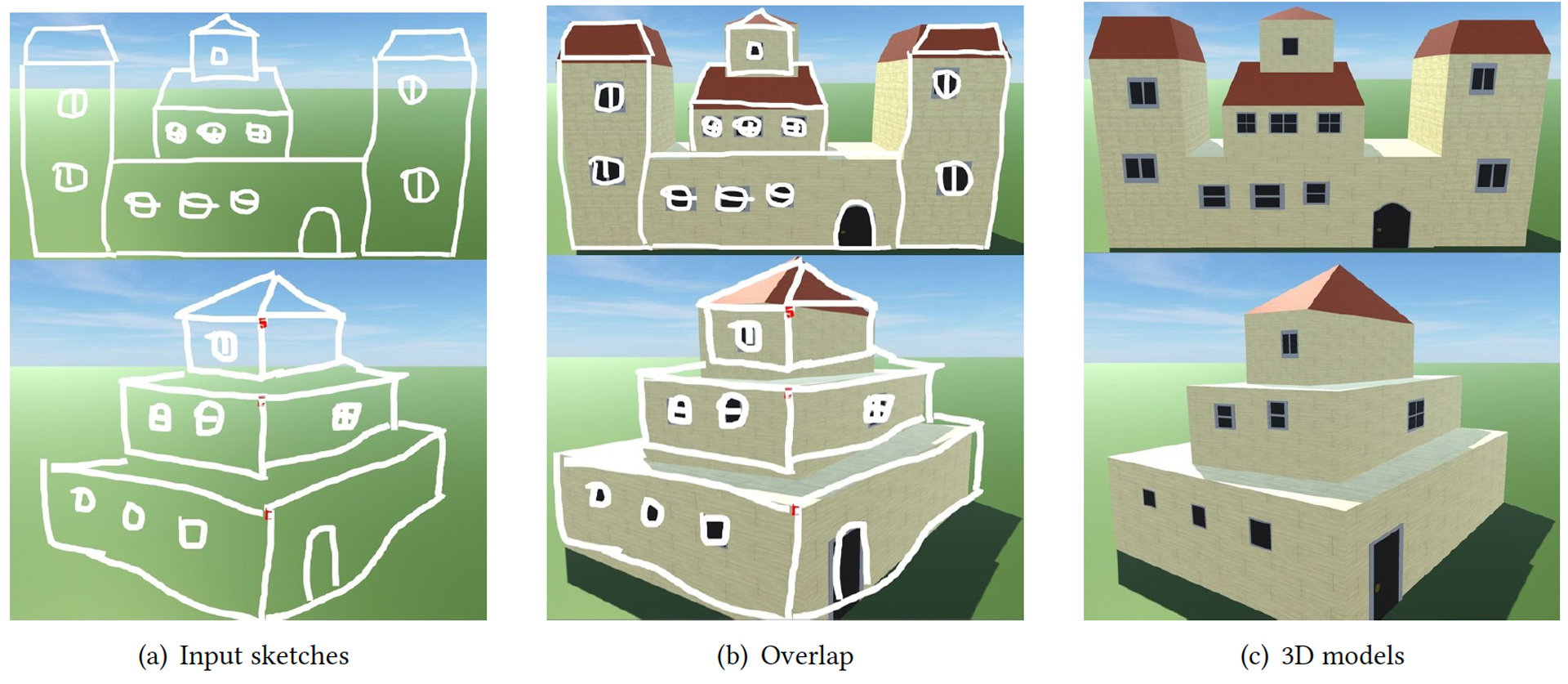

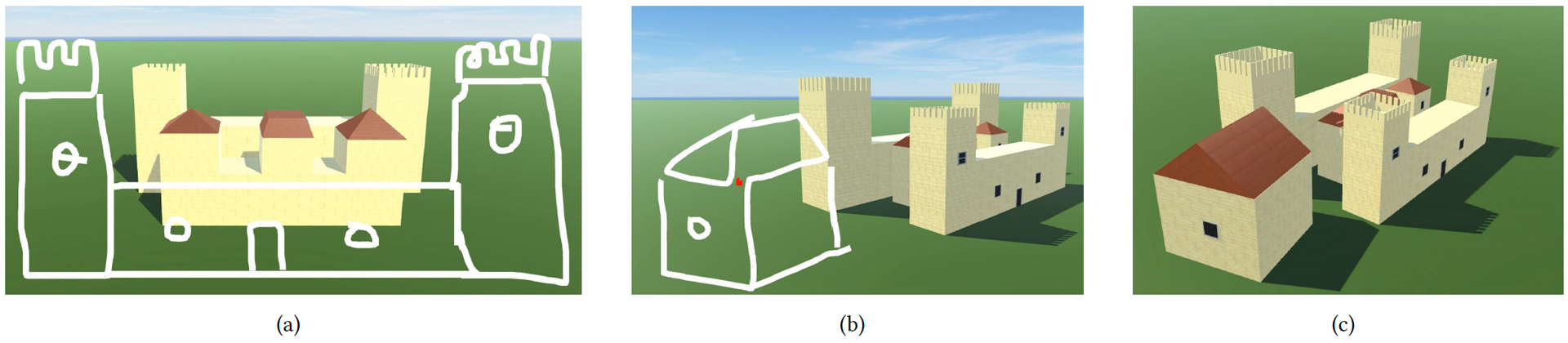

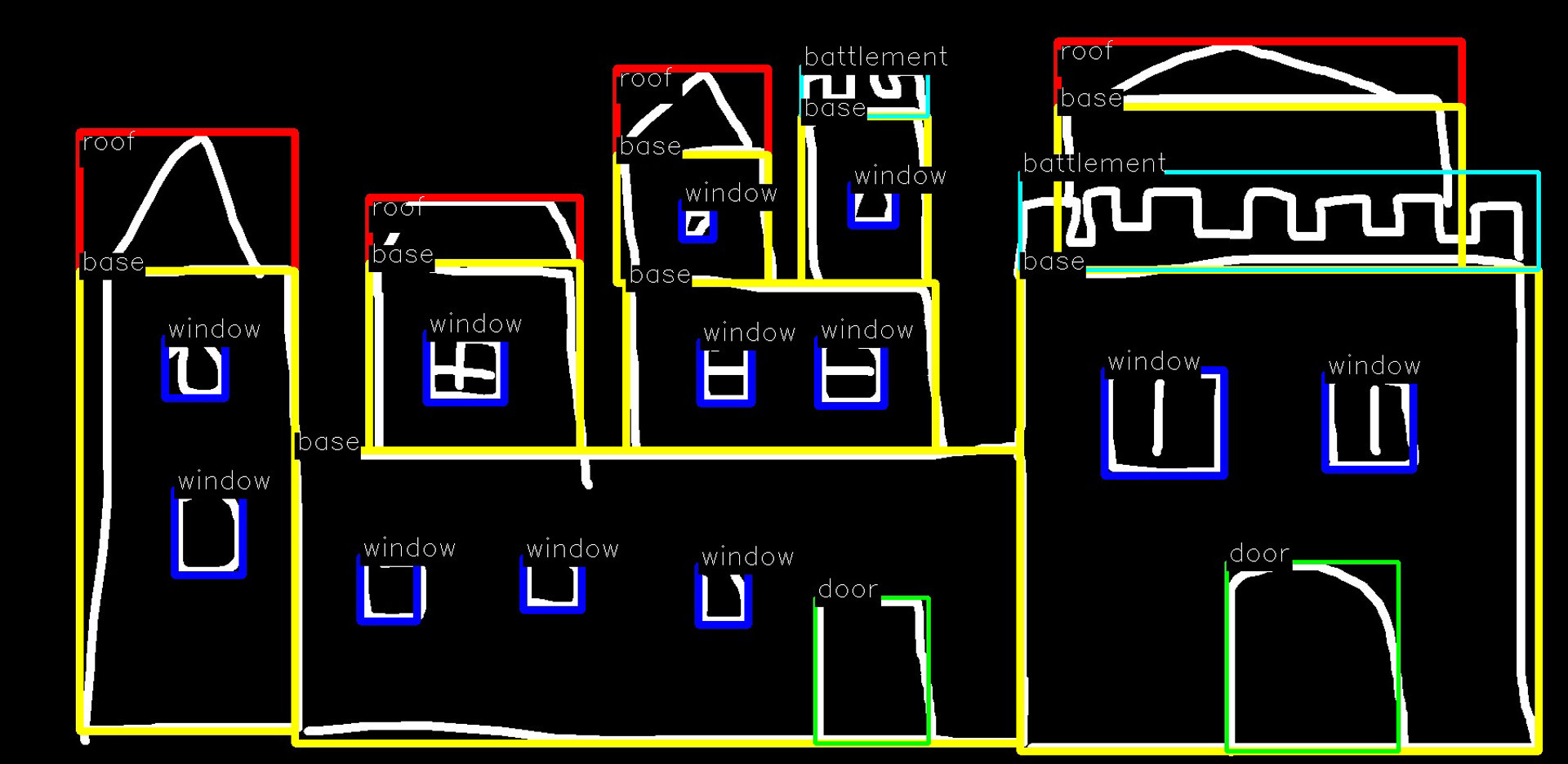

This paper proposes an intuitive tool that lets users create 3D architectural models from 2D sketch input. A user only needs to draw the outline of a frontal or oblique view of a building. Our system recognizes the parts drawn in the sketch and estimates their types. The estimated information is then used to compose the corresponding 3D model. In addition, our system provides assistive tools for rapid editing. The modeling process can be iterative and incremental: to complete a building complex, a user can create the models gradually, moving from one view to another. Our experiment shows that the proposed interface, together with its sketch analysis tools, eases the process of 3D building modeling.

References:

- Takeo Igarashi and John F. Hughes. 2001. A Suggestive Interface for 3D Drawing. In Proceedings of the 14th Annual ACM Symposium on User Interface Software and Technology (UIST ’01). ACM, 173–181.

- Yin-Hsuan Lee, Yu-Kai Chang, Yu-Lun Chang, I-Chen Lin, Yu-Shuen Wang, and Wen-Chieh Lin. 2018. Enhancing the Realism of Sketch and Painted Portraits with Adaptable Patches. Computer Graphics Forum 37, 1 (2018), 214–225.

- Gen Nishida, Ignacio Garcia-Dorado, Daniel G. Aliaga, Bedrich Benes, and Adrien Bousseau. 2016. Interactive Sketching of Urban Procedural Models. ACM Transactions on Graphics (SIGGRAPH Conference Proceedings) 35, 4 (2016), 130:1–11.

- Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. 2015. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Neural Information Processing Systems (NIPS), Vol. 1. 91–99.

- Youyi Zheng, Han Liu, Julie Dorsey, and Niloy J. Mitra. 2016. SmartCanvas: Context-inferred Interpretation of Sketches for Preparatory Design Studies. Computer Graphics Forum (also in Eurographics ’16) 35, 2 (2016), 37–48.

Keyword(s):

Acknowledgements:

This paper was partially supported by the Ministry of Science and Technology, Taiwan, under grant nos. MOST 106-2221-E-009-178-MY2 and 107-2221-E-009-140-MY2.