Jessica In, George Profenza, Sam Price: NORAA [Machinic Doodles]

Artist(s):

- Jessica In
- George Profenza
- Sam Price

Title:

- NORAA [Machinic Doodles]

Exhibition:

Category:

Artist Statement:

Summary

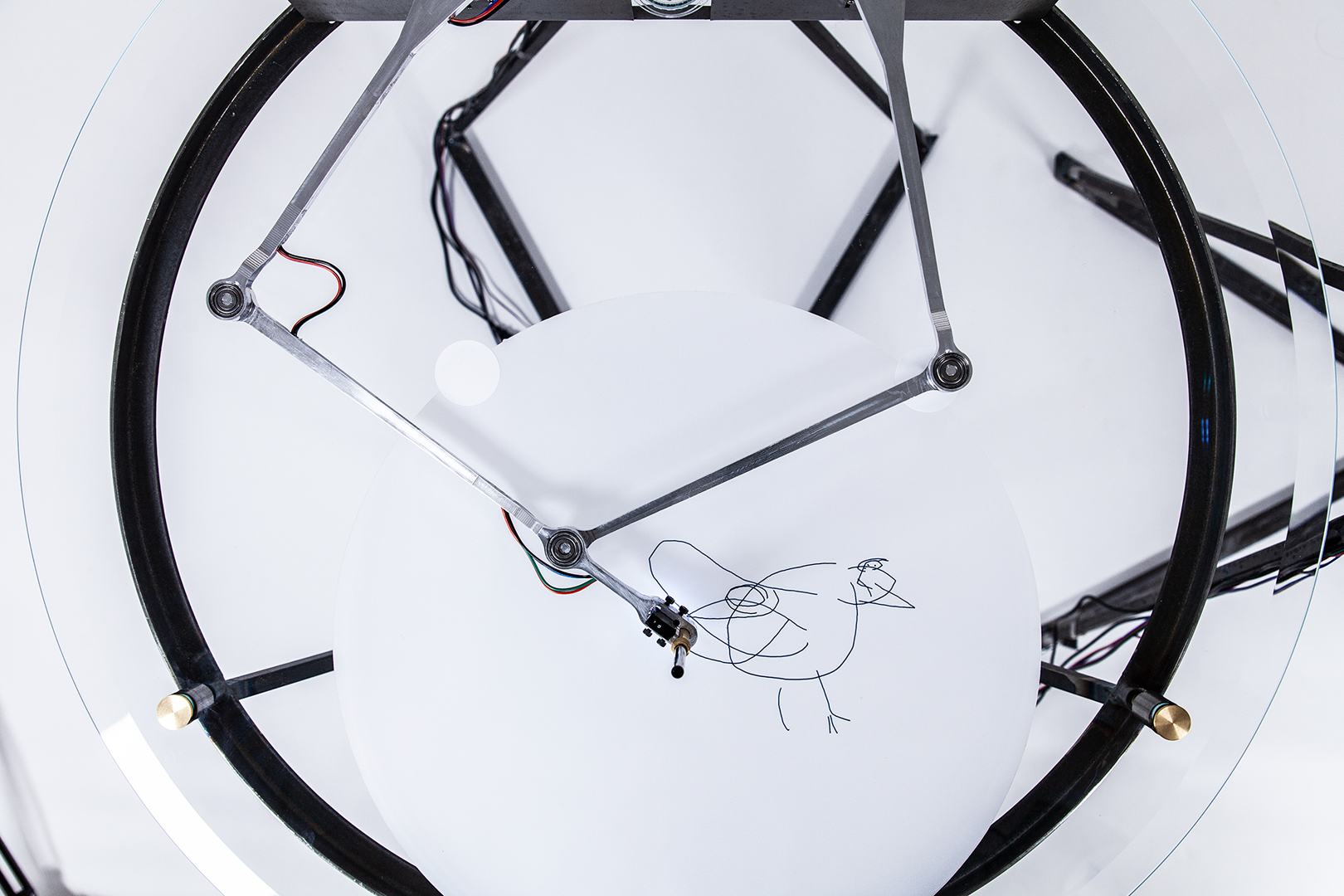

A live, interactive drawing installation that facilitates collaboration between a human and a robot named NORAA, a machine learning how to draw. It explores how we communicate ideas through drawing strokes, and how a machine might also be taught to draw through machine learning instead of via pre-programmed, explicit instruction.

Abstract

How does machine learning contribute to our understanding of how ideas are communicated through drawing? Specifically, how can networks capable of exhibiting dynamic temporal behaviour for time sequences be used for the generation of line (vector) drawings? Can machine-learning algorithms reveal something about the way we draw? Can we better understand the way we encode ideas into drawings from these algorithms?

While simple pen strokes may not resemble reality as captured by more sophisticated visual representations, they do tell us something about how people represent and reconstruct the world around them. The ability to immediately recognise and depict objects, and even emotions, from a few marks, strokes and lines is something that humans learn as children. Machinic Doodles is interested in the semantics of lines and the patterns that emerge in how people around the world draw: what governs the rules of geometry that make us draw from one point to another in a specific order? The order, speed, pace and expression of a line, and its constructed and semantic associations, are of primary interest; the generated figures are simply the means and the record of the interaction, not the final motivation.

The installation is essentially a game of human-robot Pictionary: you draw, the machine takes a guess, and then it draws something back in response. The project demonstrates how a drawing game based on a recurrent neural network, combined with real-time human drawing interaction, can be used to generate a sequence of human-machine doodle drawings. As the number of classification models is greater than the number of generative models (i.e. the machine's ability to identify is greater than its ability to draw), the work inherently explores this gap in the machine's knowledge, as well as the creative possibilities afforded by the machine's misinterpretations. Drawings are not only guessed at but also analysed for their spatial and temporal characteristics to inform drawing generation.
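The gap between what the machine can recognise and what it can draw implies a selection step between the classifier's guesses and the smaller set of drawable categories. The Python sketch below shows one hypothetical way to make that choice: take the highest-ranked guess that has a generative model, otherwise fall back to some other drawable category. The function name `choose_response`, the `(label, probability)` input format and the random fallback are illustrative assumptions; the text does not say how NORAA ranks guesses or how it picks the alternative model.

```python
import random
from typing import Iterable, Optional, Set, Tuple


def choose_response(
    guesses: Iterable[Tuple[str, float]],
    available: Set[str],
    rng: Optional[random.Random] = None,
) -> Tuple[str, bool]:
    """Pick the category the machine should draw back (hypothetical helper).

    guesses:   classifier output as (label, probability) pairs (assumed format).
    available: labels that have a trained generative (SketchRNN) model.
    Returns (label_to_draw, matched); matched is False when no guess had a
    generative model and an alternative was picked at random instead.
    Raises IndexError if `available` is empty.
    """
    # Walk the guesses from most to least probable.
    for label, _prob in sorted(guesses, key=lambda g: g[1], reverse=True):
        if label in available:
            return label, True
    # No direct match: fall back to another drawable category (assumption).
    rng = rng or random.Random()
    return rng.choice(sorted(available)), False


drawable = {"cat", "bicycle", "flower"}
print(choose_response([("tiger", 0.7), ("cat", 0.2), ("lion", 0.1)], drawable))
# ('cat', True)
```

Here "tiger" is the top guess but has no generative model in this made-up set, so the next drawable guess is used. A real system might prefer a semantically related fallback over a random one.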

Technical Information:

Dataset: Google QuickDraw dataset; custom-trained models for SketchRNN.

Neural networks: QuickDraw classifier network (Google); SketchRNN (David Ha).

Software: main interface built with Processing (interaction + drawing kinematics); Python (actuator communication); Node.js (QuickDraw + SketchRNN communication).

Physical machine: bespoke drawing mechanism installation comprising two safety-glass tops and steel stands; custom-machined aluminium arms and pen apparatus; Dynamixel smart actuators; interactive lighting elements (light caps integrated into the glass table); tracing paper, stickers and pen refills.

Process Information:

My earliest interests in drawing and machine learning began with an intention to explore how drawing with a machine could be a generative, creative and collaborative process. Specifically, I am interested in how neural networks capable of exhibiting dynamic temporal behaviour for time sequences can be used for the generation of line (vector) drawings. Can machine-learning algorithms reveal something about the way we draw? Can we better understand the way we encode ideas into drawings from these algorithms?

The intent is to maintain the explorations around a time-based approach to both drawing and machine: the idea of drawing as process, where the surface (be it paper, trace, 2D screen or 3D modelling coordinate space) holds a record of the changes of meaning or reveals traces of the drawing's development, is the primary concern, rather than contemplation of a fixed, complete image. These interests in the temporal nature of drawing tie into a fascination with drawing machines: how they insert themselves into the style-hand-eye circuit, how they are drawn out over time, and how their physical nature inherently creates qualities that other mechanised image-making processes (such as photography and inkjet prints) cannot, namely the slow reveal and the gradual accumulation of contours and marks into the image.

Recent advances in generative image modelling using neural networks, and their increased accessibility, have made these techniques hugely popular within the creative arts. Many other projects aim to understand human-input drawings; however, they predominantly involve pixel-based image recognition and generation and lack a time-based approach in their models. The NORAA project is notable in that it explores time-based drawing with neural networks for the generation of vector line drawings. The aesthetic quality of the drawings is crude, given the limited conditions under which the dataset was produced (the drawing skill of the players, the time constraint, and the use of a non-intuitive drawing input such as a mouse). The medium of simple pen strokes also inherently limits the ability to resemble reality as captured by other, more sophisticated techniques of visual representation. In spite of these limitations, the work reveals how people abstract ideas of the world and how those ideas are communicated. The way people draw universal shapes such as circles can depend on geographic location, cultural habits and language. It is important to note that the classification measures neither aesthetic quality nor clarity of representation (i.e. the recognisability of a drawn figure), but rather how likely someone is to draw the figure in this way.

Technically, the NORAA project makes use of two neural networks: one for classification and the other for drawing generation. The latter, used for synthesis and new drawing prediction, is based on David Ha's SketchRNN neural network, trained on datasets of many drawings that include not only the x,y coordinates of each point within each line but also its timestamp, encoding both spatial and temporal qualities. The classification network analyses and classifies the timed sequences of strokes that people draw while interacting with the system. As the number of classification models is greater than the number of generative models (i.e. the ability to classify is greater than the ability to draw), the work inherently explores this gap in the machine's knowledge, as well as the creative possibilities afforded by the machine's misinterpretations. Drawings are not only classified but also analysed for their spatial and temporal characteristics to inform drawing generation.
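SketchRNN's published data format (stroke-3) stores each point as an offset (Δx, Δy) from the previous point plus a flag marking that the pen lifts after that point. The Python sketch below converts QuickDraw-style raw strokes, whose points carry x, y and a timestamp in milliseconds, into that offset form, and returns the time delta of each point alongside it. The function name `to_stroke3`, measuring the first point from the origin, and keeping timing as a separate list are assumptions for illustration; how NORAA's custom-trained models actually use the timestamps is not described here.

```python
from typing import List, Sequence, Tuple

# Raw QuickDraw-style stroke: ([x...], [y...], [t_ms...]).
Stroke = Tuple[Sequence[float], Sequence[float], Sequence[float]]
Row = Tuple[float, float, int]  # (dx, dy, pen_lifted)


def to_stroke3(strokes: List[Stroke]) -> Tuple[List[Row], List[float]]:
    """Convert absolute, timestamped strokes to stroke-3 rows plus time deltas."""
    rows: List[Row] = []
    dts: List[float] = []
    prev_x, prev_y = 0.0, 0.0  # first point measured from the origin (assumption)
    prev_t = None
    for xs, ys, ts in strokes:
        n = len(xs)
        for i, (x, y, t) in enumerate(zip(xs, ys, ts)):
            x, y, t = float(x), float(y), float(t)
            # The pen lifts after the last point of every stroke.
            pen_lifted = 1 if i == n - 1 else 0
            rows.append((x - prev_x, y - prev_y, pen_lifted))
            # Time since the previous point, across stroke boundaries too.
            dts.append(0.0 if prev_t is None else t - prev_t)
            prev_x, prev_y, prev_t = x, y, t
    return rows, dts


strokes = [([0, 10, 10], [0, 0, 5], [0, 40, 80]), ([20, 25], [5, 5], [300, 340])]
rows, dts = to_stroke3(strokes)
print(rows)  # [(0.0, 0.0, 0), (10.0, 0.0, 0), (0.0, 5.0, 1), (10.0, 0.0, 0), (5.0, 0.0, 1)]
print(dts)   # [0.0, 40.0, 40.0, 220.0, 40.0]
```

The 220 ms gap before the second stroke is the pause while the pen is in the air: the offset rows alone keep drawing order, while the time deltas keep the pace of the drawing.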

Another interesting aspect of the work is that NORAA is visually "blind", in the sense that there is no camera and no traditional image analysis: the drawings are encoded by the machine through a movement- and sequence-based approach (recording the motor rotations over time, along with timestamps for the pen up/down switch). This differs from other systems that may use a digital stylus or computer-vision-based input. The motor angle recordings are then translated into stroke data through the kinematics of the mechanism before being sent to the drawing classifier. When there is no direct match between classification and generation, an alternative generative model is chosen and drawn by the machine.
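The translation from motor angles to stroke data can be illustrated with planar forward kinematics. The text does not give the arm geometry, and it places this step in Processing, so the Python sketch below is only an illustration under explicit assumptions: a two-link planar arm with made-up link lengths (`LINK1_MM`, `LINK2_MM`), joint angles already converted from raw Dynamixel readings to radians, and one boolean pen state per sample. The output uses the per-stroke `[xs, ys, ts]` layout of raw QuickDraw data. None of these names come from the NORAA codebase.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical link lengths in millimetres; the real arm geometry is not stated.
LINK1_MM = 200.0
LINK2_MM = 150.0

Sample = Tuple[float, float, float, bool]  # (t_ms, theta1, theta2, pen_down)


def forward_kinematics(theta1: float, theta2: float) -> Tuple[float, float]:
    """Pen-tip (x, y) of a two-link planar arm.

    theta1: shoulder angle from the x-axis; theta2: elbow angle relative
    to the first link. Both in radians.
    """
    x = LINK1_MM * math.cos(theta1) + LINK2_MM * math.cos(theta1 + theta2)
    y = LINK1_MM * math.sin(theta1) + LINK2_MM * math.sin(theta1 + theta2)
    return x, y


def samples_to_strokes(samples: Sequence[Sample]) -> List[List[List[float]]]:
    """Group time-ordered joint samples into [xs, ys, ts] strokes, one per pen-down run."""
    strokes: List[List[List[float]]] = []
    current: Optional[List[List[float]]] = None
    for t, theta1, theta2, pen_down in samples:
        if pen_down:
            if current is None:
                current = [[], [], []]  # pen touched down: start a new stroke
            x, y = forward_kinematics(theta1, theta2)
            current[0].append(x)
            current[1].append(y)
            current[2].append(t)
        elif current is not None:
            strokes.append(current)  # pen lifted: close the current stroke
            current = None
    if current is not None:
        strokes.append(current)  # recording ended with the pen still down
    return strokes


samples = [
    (0, 0.0, 0.0, True),
    (10, 0.1, 0.0, True),
    (20, 0.1, 0.0, False),
    (30, math.pi / 2, 0.0, True),
]
strokes = samples_to_strokes(samples)
print(len(strokes))  # 2
```

Because the pen state and timestamps travel with every sample, the resulting strokes keep both the shape and the timing of the human's drawing without any camera input.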

Other Information:

- A creative application of recurrent neural networks for exploring the semantic and temporal nature of drawings

- The immediacy and relatability of a device drawing across a material surface, with the qualities of a physical mark (time and motion) directly linked to the dataset, and the performative nature of watching a drawing emerge over time

- The possibilities of image generation through a publicly contributed dataset, embodying a creative drawing machine about the collective rather than, for example, an individual artist.

Inspiration Behind the Project

The inspiration and motives behind the NORAA project lie in how machine learning contributes to our understanding of how ideas are communicated through drawing. Specifically, the project explores how networks capable of exhibiting dynamic temporal behaviour for time sequences can be used for the generation of line (vector) drawings. The project examines how semantics are communicated through the strokes of a drawing, and how a machine might also be taught to draw through learning instead of via pre-programmed, explicit instruction.

Can machine-learning algorithms reveal something about the way we draw? Can we better understand the way we encode ideas into drawings from these algorithms?

The earliest interests in drawing and machine learning began with an intention to explore how drawing with a machine could be a generative, creative and collaborative process. The intent is to maintain the explorations around a time-based approach to both drawing and machine: the idea of drawing as process, not artifact. The "surface" (be it paper, tracing film, 2D screen or 3D modelling coordinate space) holds a record of the changes of meaning. The traces and layers of the drawing's development are the primary concern, rather than contemplation of a fixed, complete image.

These interests in the temporal nature of drawing tie into a fascination with drawing machines: how they insert themselves into the style-hand-eye circuit, how they are drawn out over time, and how their physical nature inherently creates qualities that other mechanised image-making processes (such as photography and inkjet prints) cannot, namely the slow reveal and the gradual accumulation of contours and marks into the image.

Key Takeaways for the Audience

We hope that the audience will recognise in the NORAA project:

![NORAA [Machinic Doodles]](https://history.siggraph.org/wp-content/uploads/2021/05/Asia2020_In_Profenza_Price_NORAAMachinicDoodles2-150x150.jpg)