“Augmented Reality and Vision-Language Models to Guide Humans Across Manual Tasks” by Kottom, Gharib, Humml and Stefan-Zavala

Conference:

Experience Type(s):

Title:

- Augmented Reality and Vision-Language Models to Guide Humans Across Manual Tasks

Presenter(s):

Description:

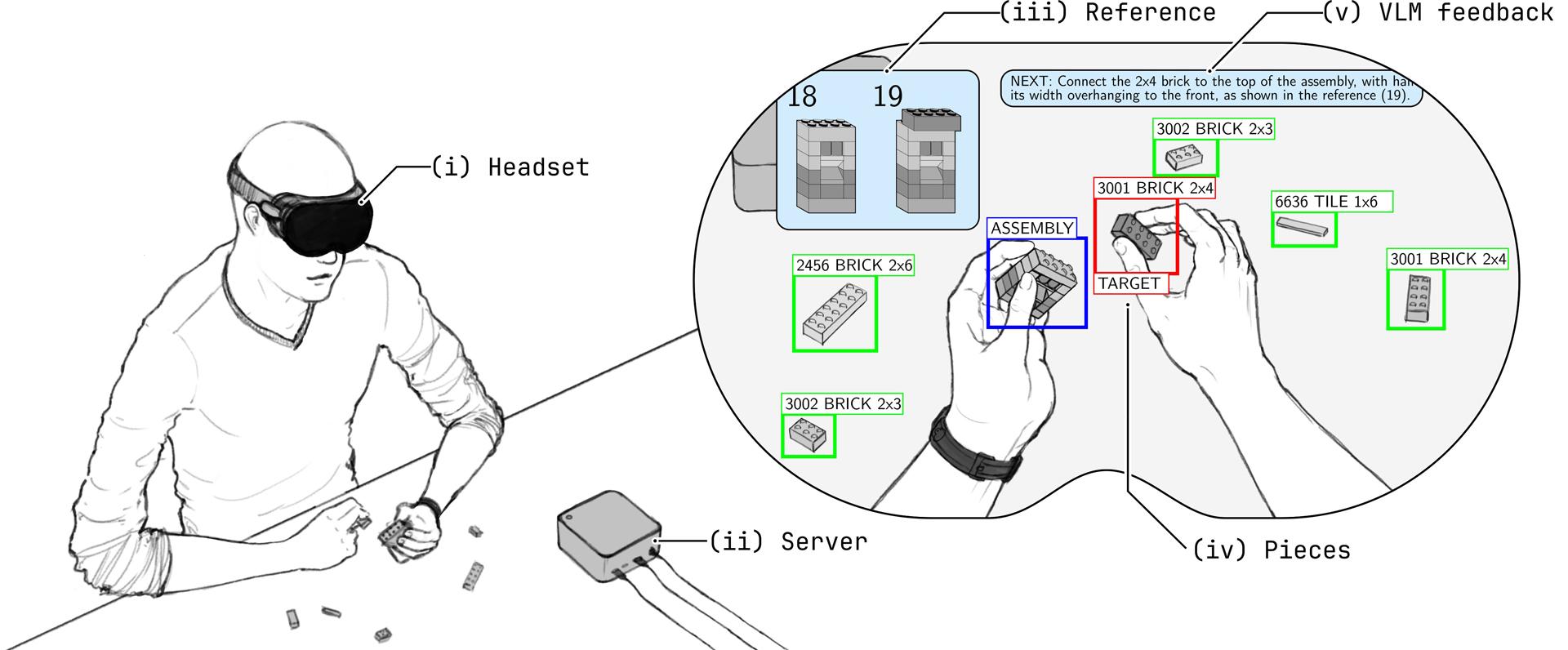

We present a novel mixed-reality pipeline for assisted assembly verification that enhances productivity, skill development, reliability and operational efficiency in manual tasks such as manufacturing, maintenance and DIY end-user assembly. Our system combines modern augmented reality (AR) headsets, vision-language models (VLMs) and object detection models into an immersive assembly guidance platform. In real time, the system parses the state of the assembly and the location of components from the user’s point of view, and provides labels in three-dimensional space, as well as visual and textual feedback, through an AR interface. Each assembly step is validated using the VLM and a state-space encoding of all sub-assemblies, so that user mistakes are detected and clear feedback is given on how to revert to a valid state. In this work, we present a proof-of-concept instance of this platform for the test case of guiding users through building a LEGO set. Our proof of concept uses an Apple Vision Pro AR headset for spatial computing and user interfacing, a CNN-based foundational object detection model to label and track individual LEGO pieces, and a foundational vision-language model to validate assemblies and provide textual feedback. Users with no prior knowledge of the LEGO set’s assembly are guided step by step through the process: the system detects mistakes and gives textual and visual guidance on corrective measures until the final assembly is validated. We present this test case as proof of a new paradigm in human-machine integration, where human judgment, perception and skill are augmented by artificial intelligence, seamlessly, in the human’s domain.
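The abstract does not specify how the state-space encoding is built, so the following is only a minimal sketch of the idea under stated assumptions: each piece has a unique identifier, the set of valid states is every prefix of a single linear build order, and the observed state (which in the described system would come from the object detector and the VLM) is passed in directly as a set of piece identifiers. The names `BUILD_ORDER`, `valid_states` and `check_step` are hypothetical and are not taken from the authors' implementation.

```python
from typing import FrozenSet, List, Sequence, Tuple

State = FrozenSet[str]

# Hypothetical build order for a toy set; piece names are illustrative only.
BUILD_ORDER = ["base_plate", "red_2x4", "blue_2x2", "roof_tile"]


def valid_states(build_order: Sequence[str]) -> List[State]:
    """Assumption: every prefix of the build order is a valid sub-assembly."""
    return [frozenset(build_order[:i]) for i in range(len(build_order) + 1)]


def check_step(observed: State, build_order: Sequence[str]) -> Tuple[bool, str]:
    """Return (is_valid, feedback) for the observed set of attached pieces."""
    states = valid_states(build_order)
    if observed in states:
        placed = len(observed)
        if placed == len(build_order):
            return True, "Assembly complete."
        return True, f"Next, attach {build_order[placed]}."
    # Mistake detected: fall back to the largest valid state contained in
    # the observation. The empty state is always a candidate, so max() is safe.
    fallback = max((s for s in states if s <= observed), key=len)
    extra = sorted(observed - fallback)
    return False, f"Remove {', '.join(extra)} to return to step {len(fallback)}."


if __name__ == "__main__":
    # The user skipped red_2x4 and attached blue_2x2 too early.
    print(check_step(frozenset({"base_plate", "blue_2x2"}), BUILD_ORDER))
```

In this sketch a mistake is any observed state that is not a prefix of the build order, and the corrective feedback names the pieces to remove in order to reach the nearest valid earlier state. A real assembly graph would likely allow several valid orderings, which this linear encoding does not capture.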
