“Diffusion-Based Image and Video Generation” by Mitra, Cohen-Or and Sung

Abstract:

Diffusion models have become the leading technology for generating high-quality images and are increasingly being adopted for a range of image processing tasks, including conditional image generation. These models are expected to have significant applications in computer graphics and related research areas. This tutorial guides participants through the theory and practice of diffusion models, providing the foundational knowledge needed to apply them in graphics and creative fields. As large-scale video diffusion models advance, their potential impact on visual computing and related fields is becoming more evident. Traditional methods of motion and scene capture may soon be replaced by automated digital studios that can create high-quality, visually appealing content at the push of a button. This shift highlights the importance of understanding the theory behind diffusion models and of developing tools that support new content-generation workflows. The ability to build such models in an accessible and efficient way will be crucial for ensuring responsible content generation across industries. Aimed at graphics researchers and practitioners, this tutorial will introduce the core principles of diffusion models. Through real-world applications, participants will gain the practical skills needed to integrate these models into their work. The tutorial will cover the generation of realistic textures, the manipulation of details, and the creation of dynamic visual effects. We will also discuss emerging trends in 3D and motion synthesis. By the end of the tutorial, participants will have both the theoretical understanding and the practical skills to use diffusion models effectively in their projects, enabling them to explore new creative possibilities in image synthesis and related media while contributing to the evolution of generative content creation.

Additional Information:

- Image processing

- Computer graphics and imaging

- Linear algebra

- Basic machine learning concepts (classification, regression, optimization, UNet architecture)

- Familiarity with Python programming

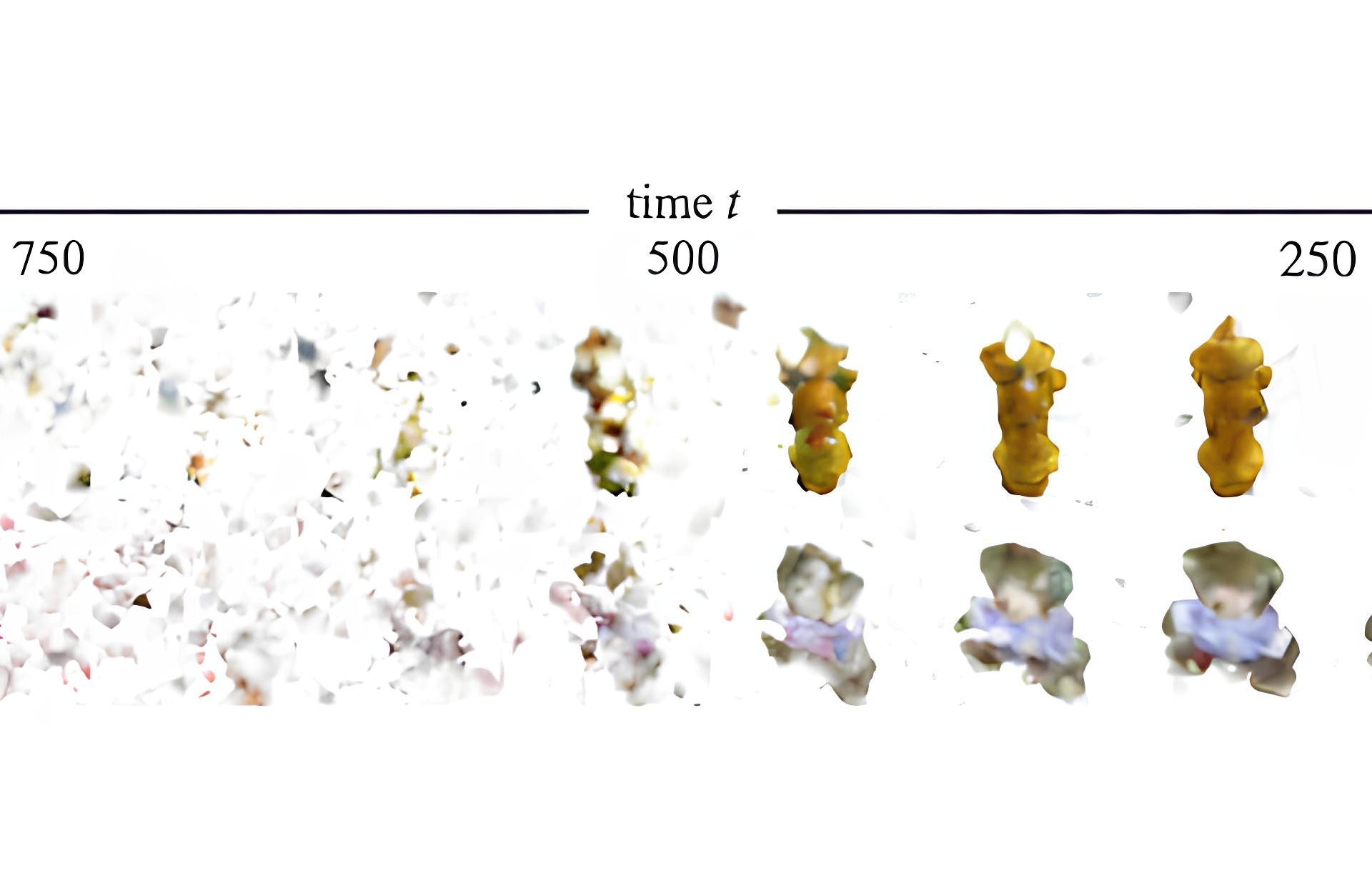

- Session 1: Introduction to Diffusion Models Overview of diffusion models, theory behind forward and backward passes, architectures, training objectives, and variants in practice (DDPM, DDIM, denoising schedules).

- Duration: 30 minutes

- Session 2: Guidance and Conditional Sampling Methods to condition diffusion models on inputs like text or images. Exploration of Latent Diffusion Models and StableDiffusion.

- Duration: 30 minutes

- Session 3: Features, Latent Space, and Image Priors Discussion on image manipulation, latent space exploration, and pretrained diffusion models used as image priors.

- Duration: 30 minutes

- Session 4: Personalization and Editing Techniques for customizing diffusion models, such as DreamBooth and LoRA. Exploration of object layout control and personalization for image editing.

- Duration: 30 minutes

- Session 5: 2D Diffusion Models for Multiview Expanding the scope of diffusion models beyond RGB images, including multi-view applications.

- Duration: 30 minutes

- Session 6: Video Diffusion Models Topics related to video generation and editing, including image-to-video generation, controlling camera paths, editing objects within a scene, generating videos of unlimited length, and more.

- Duration: 30 minutes

Intermediate

Prerequisite: While the course is self-contained and does not require prior experience with diffusion models, participants should have a foundational understanding of the following topics:

Topics: DeNoise, Generative AI

List of topics and approximate times:

Additional Info: This tutorial focuses on diffusion models, cutting-edge tools for image and video generation. Designed for graphics researchers and practitioners, it offers insights into the theory, practical applications, and real-world use cases. Participants will learn how to effectively leverage diffusion models for creative projects in computer graphics.
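The first two sessions cover the forward and backward passes, DDIM, denoising schedules, and guidance. As a rough illustration only, the NumPy sketch below shows how these pieces fit together; it is not taken from the tutorial materials. It assumes the linear variance schedule from the original DDPM paper (beta from 1e-4 to 0.02 over 1,000 steps), a deterministic DDIM update (eta = 0), and classifier-free guidance as the conditioning method. All function names are hypothetical, and the true noise `eps` stands in for a trained noise-prediction network such as a UNet.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    # Assumed linear noise (variance) schedule; defaults follow the original DDPM setup.
    return np.linspace(beta_start, beta_end, num_steps)


def alpha_bars(betas):
    # abar_t = prod_{s <= t} (1 - beta_s): fraction of signal variance kept at step t.
    return np.cumprod(1.0 - betas)


def forward_noise(x0, t, abar, rng):
    # Forward pass in closed form: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps.
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(abar[t]) * x0 + np.sqrt(1.0 - abar[t]) * eps
    return x_t, eps


def ddim_step(x_t, eps_pred, t, t_prev, abar):
    # Deterministic DDIM update (eta = 0) from step t to an earlier step t_prev.
    # t_prev < 0 means "jump to clean data", i.e. abar = 1.
    a_t = abar[t]
    a_prev = abar[t_prev] if t_prev >= 0 else 1.0
    # Estimate the clean sample implied by the predicted noise...
    x0_pred = (x_t - np.sqrt(1.0 - a_t) * eps_pred) / np.sqrt(a_t)
    # ...then re-noise it to the level of t_prev along the same noise direction.
    return np.sqrt(a_prev) * x0_pred + np.sqrt(1.0 - a_prev) * eps_pred


def cfg_noise(eps_uncond, eps_cond, guidance_scale):
    # Classifier-free guidance: move the prediction from the unconditional
    # estimate toward (and past, if guidance_scale > 1) the conditional one.
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    abar = alpha_bars(linear_beta_schedule())
    x0 = rng.standard_normal((4, 4))
    x_t, eps = forward_noise(x0, 500, abar, rng)
    # Hypothetical "perfect" predictor: reuse the true noise instead of a trained UNet.
    x0_rec = ddim_step(x_t, eps, 500, -1, abar)
    print(np.allclose(x0_rec, x0))  # True
```

In an actual sampler, `eps_pred` would come from the network at every step (evaluated both with and without the condition when classifier-free guidance is used), and `ddim_step` would be applied over a decreasing sequence of timesteps, which with DDIM can be much shorter than the 1,000 training steps.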