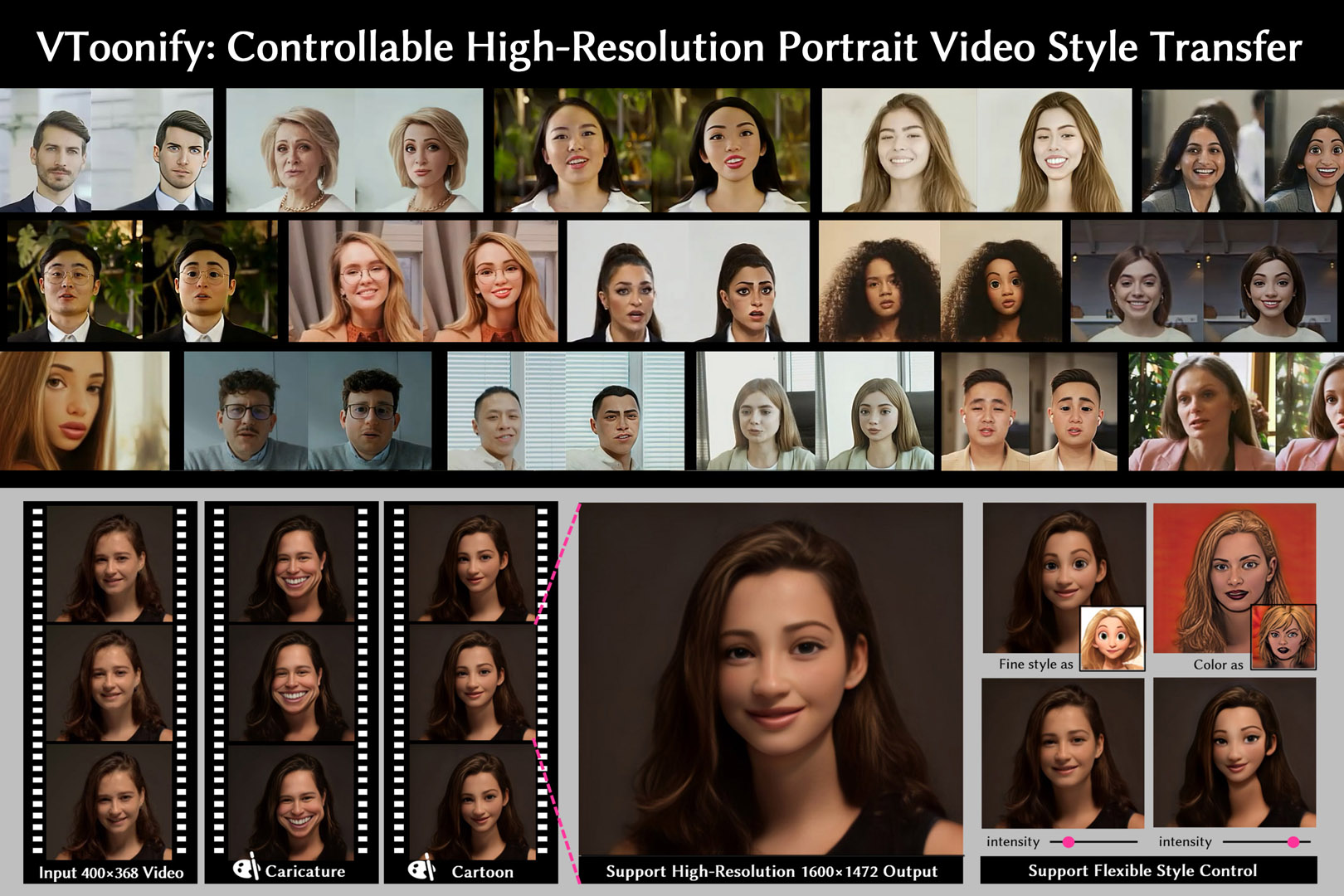

“VToonify: Controllable High-Resolution Portrait Video Style Transfer” by Yang, Jiang, Liu and Loy

Conference:

Type(s):

Title:

- VToonify: Controllable High-Resolution Portrait Video Style Transfer

Session/Category Title: Styilzation and Colorization

Presenter(s)/Author(s):

Abstract:

Generating high-quality artistic portrait videos is an important and desirable task in computer graphics and vision. Although a series of successful portrait image toonification models built upon the powerful StyleGAN have been proposed, these image-oriented methods have obvious limitations when applied to videos, such as the fixed frame size, the requirement of face alignment, missing non-facial details and temporal inconsistency. In this work, we investigate the challenging controllable high-resolution portrait video style transfer by introducing a novel VToonify framework. Specifically, VToonify leverages the mid- and high-resolution layers of StyleGAN to render high-quality artistic portraits based on the multi-scale content features extracted by an encoder to better preserve the frame details. The resulting fully convolutional architecture accepts non-aligned faces in videos of variable size as input, contributing to complete face regions with natural motions in the output. Our framework is compatible with existing StyleGAN-based image toonification models to extend them to video toonification, and inherits appealing features of these models for flexible style control on color and intensity. This work presents two instantiations of VToonify built upon Toonify and DualStyleGAN for collection-based and exemplar-based portrait video style transfer, respectively. Extensive experimental results demonstrate the effectiveness of our proposed VToonify framework over existing methods in generating high-quality and temporally-coherent artistic portrait videos with flexible style controls. Code and pretrained models are available at our project page: www.mmlab-ntu.com/project/vtoonify/.

References:

1. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2019. Image2stylegan: How to embed images into the stylegan latent space?. In Proc. Int’l Conf. Computer Vision. 4432–4441.

2. Yuval Alaluf, Or Patashnik, and Daniel Cohen-Or. 2021. Restyle: A residual-based stylegan encoder via iterative refinement. In Proc. Int’l Conf. Computer Vision. 6711–6720.

3. Yuval Alaluf, Or Patashnik, Zongze Wu, Asif Zamir, Eli Shechtman, Dani Lischinski, and Daniel Cohen-Or. 2022a. Third Time’s the Charm? Image and Video Editing with StyleGAN3. arXiv:2201.13433 [cs.CV]

4. Yuval Alaluf, Omer Tov, Ron Mokady, Rinon Gal, and Amit Bermano. 2022b. Hyperstyle: Stylegan inversion with hypernetworks for real image editing. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 18511–18521.

5. Kaidi Cao, Jing Liao, and Lu Yuan. 2018. CariGANs: unpaired photo-to-caricature translation. ACM Transactions on Graphics 37, 6 (2018), 1–14.

6. Kelvin CK Chan, Xintao Wang, Xiangyu Xu, Jinwei Gu, and Chen Change Loy. 2021. GLEAN: Generative Latent Bank for Large-Factor Image Super-Resolution. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition.

7. Dongdong Chen, Jing Liao, Lu Yuan, Nenghai Yu, and Gang Hua. 2017. Coherent online video style transfer. In Proc. Int’l Conf. Computer Vision. 1105–1114.

8. Jie Chen, Gang Liu, and Xin Chen. 2019. AnimeGAN: A novel lightweight gan for photo animation. In International Symposium on Intelligence Computation and Applications. Springer, 242–256.

9. Min Jin Chong and David Forsyth. 2021. GANs N’ Roses: Stable, Controllable, Diverse Image to Image Translation. arXiv preprint arXiv:2106.06561 (2021).

10. Tan M Dinh, Anh Tuan Tran, Rang Nguyen, and Binh-Son Hua. 2022. Hyperinverter: Improving stylegan inversion via hypernetwork. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 11389–11398.

11. Gereon Fox, Ayush Tewari, Mohamed Elgharib, and Christian Theobalt. 2021. Stylevideogan: A temporal generative model using a pretrained stylegan. In Proc. British Machine Vision Conference.

12. Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. StyleGAN-NADA: CLIP-guided domain adaptation of image generators. ACM Transactions on Graphics 41, 4 (2022), 1–13.

13. Leon A Gatys, Alexander S Ecker, and Matthias Bethge. 2016. Image Style Transfer Using Convolutional Neural Networks. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 2414–2423.

14. Agrim Gupta, Justin Johnson, Alexandre Alahi, and Li Fei-Fei. 2017. Characterizing and improving stability in neural style transfer. In Proc. Int’l Conf. Computer Vision. 4067–4076.

15. Erik Härkönen, Aaron Hertzman, Jaakko Lehtinen, and Sylvain Paris. 2020. GANSpace: Discovering Interpretable GAN controls. In Advances in Neural Information Processing Systems.

16. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 770–778.

17. Fa-Ting Hong, Longhao Zhang, Li Shen, and Dan Xu. 2022. Depth-Aware Generative Adversarial Network for Talking Head Video Generation. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition.

18. Haozhi Huang, Hao Wang, Wenhan Luo, Lin Ma, Wenhao Jiang, Xiaolong Zhu, Zhifeng Li, and Wei Liu. 2017. Real-time neural style transfer for videos. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 783–791.

19. Phillip Isola, Jun Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 5967–5976.

20. Wonjong Jang, Gwangjin Ju, Yucheol Jung, Jiaolong Yang, Xin Tong, and Seungyong Lee. 2021. StyleCariGAN: caricature generation via StyleGAN feature map modulation. ACM Transactions on Graphics 40, 4 (2021), 1–16.

21. Yuming Jiang, Ziqi Huang, Xingang Pan, Chen Change Loy, and Ziwei Liu. 2021. Talk-to-edit: Fine-grained facial editing via dialog. In Proc. Int’l Conf. Computer Vision. 13799–13808.

22. Justin Johnson, Alexandre Alahi, and Fei Fei Li. 2016. Perceptual Losses for RealTime Style Transfer and Super-Resolution. In Proc. European Conf. Computer Vision. Springer, 694–711.

23. Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. 2020a. Training generative adversarial networks with limited data. In Advances in Neural Information Processing Systems, Vol. 33. 12104–12114.

24. Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2021. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems 34 (2021).

25. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 4401–4410.

26. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020b. Analyzing and improving the image quality of stylegan. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 8110–8119.

27. Junho Kim, Minjae Kim, Hyeonwoo Kang, and Kwang Hee Lee. 2019. U-GAT-IT: Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation. In Proc. Int’l Conf. Learning Representations.

28. Bing Li, Yuanlue Zhu, Yitong Wang, Chia-Wen Lin, Bernard Ghanem, and Linlin Shen. 2021. AniGAN: Style-Guided Generative Adversarial Networks for Unsupervised Anime Face Generation. IEEE Transactions on Multimedia (2021).

29. Feng-Lin Liu, Shu-Yu Chen, Yu-Kun Lai, Chunpeng Li, Yue-Ren Jiang, Hongbo Fu, and Lin Gao. 2022. DeepFaceVideoEditing: Sketch-Based Deep Editing of Face Videos. ACM Transactions on Graphics 40, 4 (2022), 1–16.

30. Ori Nizan and Ayellet Tal. 2020. Breaking the cycle-colleagues are all you need. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 7860–7869.

31. Gaurav Parmar, Yijun Li, Jingwan Lu, Richard Zhang, Jun-Yan Zhu, and Krishna Kumar Singh. 2022. Spatially-Adaptive Multilayer Selection for GAN Inversion and Editing. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 11399–11409.

32. Justin NM Pinkney and Doron Adler. 2020. Resolution Dependent GAN Interpolation for Controllable Image Synthesis Between Domains. arXiv preprint arXiv:2010.05334 (2020).

33. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In Proc. IEEE Int’l Conf. Machine Learning. PMLR, 8748–8763.

34. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in style: a stylegan encoder for image-to-image translation. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition.

35. Daniel Roich, Ron Mokady, Amit H Bermano, and Daniel Cohen-Or. 2022. Pivotal tuning for latent-based editing of real images. ACM Transactions on Graphics (2022).

36. Andreas Rössler, Davide Cozzolino, Luisa Verdoliva, Christian Riess, Justus Thies, and Matthias Nießner. 2019. FaceForensics++: Learning to Detect Manipulated Facial Images. In Proc. Int’l Conf. Computer Vision.

37. Manuel Ruder, Alexey Dosovitskiy, and Thomas Brox. 2016. Artistic style transfer for videos. In German conference on pattern recognition. Springer, 26–36.

38. Ahmed Selim, Mohamed Elgharib, and Linda Doyle. 2016. Painting style transfer for head portraits using convolutional neural networks. ACM Transactions on Graphics 35, 4 (2016), 1–18.

39. Xuning Shao and Weidong Zhang. 2021. SPatchGAN: A Statistical Feature Based Discriminator for Unsupervised Image-to-Image Translation. In Proc. Int’l Conf. Computer Vision. 6546–6555.

40. Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the latent space of gans for semantic face editing. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 9243–9252.

41. Yujun Shen and Bolei Zhou. 2021. Closed-form factorization of latent semantics in gans. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 1532–1540.

42. Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci, and Nicu Sebe. 2019. First order motion model for image animation. Advances in Neural Information Processing Systems 32.

43. Guoxian Song, Linjie Luo, Jing Liu, Wan-Chun Ma, Chunpong Lai, Chuanxia Zheng, and Tat-Jen Cham. 2021. AgileGAN: stylizing portraits by inversion-consistent transfer learning. ACM Transactions on Graphics 40, 4 (2021), 1–13.

44. Zachary Teed and Jia Deng. 2020. Raft: Recurrent all-pairs field transforms for optical flow. In Proc. European Conf. Computer Vision. Springer, 402–419.

45. Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. 2021. Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics 40, 4 (2021), 1–14.

46. Rotem Tzaban, Ron Mokady, Rinon Gal, Amit H. Bermano, and Daniel Cohen-Or. 2022. Stitch it in Time: GAN-Based Facial Editing of Real Videos. arXiv:2201.08361 [cs.CV]

47. Yuri Viazovetskyi, Vladimir Ivashkin, and Evgeny Kashin. 2020. Stylegan2 distillation for feed-forward image manipulation. In Proc. European Conf. Computer Vision. Springer, 170–186.

48. Tengfei Wang, Yong Zhang, Yanbo Fan, Jue Wang, and Qifeng Chen. 2022. High-fidelity gan inversion for image attribute editing. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition. 11379–11388.

49. Ting Chun Wang, Ming Yu Liu, Jun Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition.

50. Wenjing Wang, Jizheng Xu, Li Zhang, Yue Wang, and Jiaying Liu. 2020. Consistent video style transfer via compound regularization, Vol. 34. 12233–12240.

51. Weihao Xia, Yulun Zhang, Yujiu Yang, Jing-Hao Xue, Bolei Zhou, and Ming-Hsuan Yang. 2022. Gan inversion: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence (2022).

52. Shuai Yang, Liming Jiang, Ziwei Liu, and Chen Change Loy. 2022. Pastiche Master: Exemplar-Based High-Resolution Portrait Style Transfer. In Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition.

53. Xu Yao, Alasdair Newson, Yann Gousseau, and Pierre Hellier. 2021. A latent transformer for disentangled face editing in images and videos. In Proc. Int’l Conf. Computer Vision. 13789–13798.

54. Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang. 2018. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proc. European Conf. Computer Vision. Springer, 334–349.

55. Jiapeng Zhu, Ruili Feng, Yujun Shen, Deli Zhao, Zheng-Jun Zha, Jingren Zhou, and Qifeng Chen. 2021b. Low-rank subspaces in gans. In Advances in Neural Information Processing Systems, Vol. 34.

56. Jun Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. 2017. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proc. Int’l Conf. Computer Vision. 2242–2251.

57. Peihao Zhu, Rameen Abdal, John Femiani, and Peter Wonka. 2021a. Barbershop: GAN-based image compositing using segmentation masks. ACM Transactions on Graphics 40, 6 (2021), 1–13.