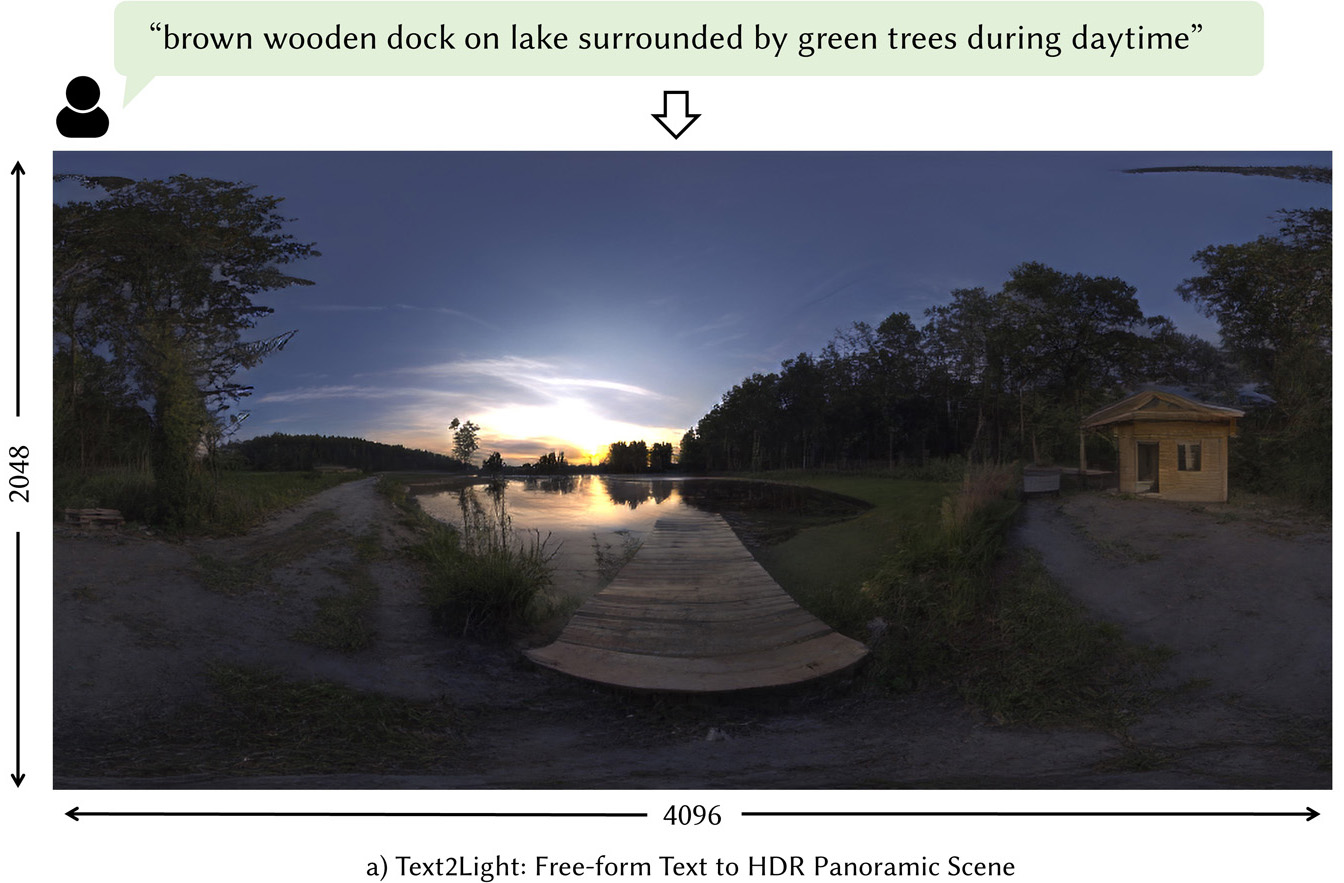

“Text2Light: Zero-Shot Text-Driven HDR Panorama Generation” by Chen, Wang and Liu

Conference:

Type(s):

Title:

- Text2Light: Zero-Shot Text-Driven HDR Panorama Generation

Session/Category Title: Image Generation

Presenter(s)/Author(s):

Abstract:

High-quality HDRIs (High Dynamic Range Images), typically HDR panoramas, are one of the most popular ways to create photorealistic lighting and 360-degree reflections of 3D scenes in graphics. Given the difficulty of capturing HDRIs, a versatile and controllable generative model is highly desired, where layman users can intuitively control the generation process. However, existing state-of-the-art methods still struggle to synthesize high-quality panoramas for complex scenes. In this work, we propose a zero-shot text-driven framework, Text2Light, to generate 4K+ resolution HDRIs without paired training data. Given a free-form text as the description of the scene, we synthesize the corresponding HDRI with two dedicated steps: 1) text-driven panorama generation in low dynamic range (LDR) and low resolution (LR), and 2) super-resolution inverse tone mapping to scale up the LDR panorama both in resolution and dynamic range. Specifically, to achieve zero-shot text-driven panorama generation, we first build dual codebooks as the discrete representation for diverse environmental textures. Then, driven by the pre-trained Contrastive Language-Image Pre-training (CLIP) model, a text-conditioned global sampler learns to sample holistic semantics from the global codebook according to the input text. Furthermore, a structure-aware local sampler learns to synthesize LDR panoramas patch-by-patch, guided by holistic semantics. To achieve super-resolution inverse tone mapping, we derive a continuous representation of 360-degree imaging from the LDR panorama as a set of structured latent codes anchored to the sphere. This continuous representation enables a versatile module to upscale the resolution and dynamic range simultaneously. Extensive experiments demonstrate the superior capability of Text2Light in generating high-quality HDR panoramas. In addition, we show the feasibility of our work in realistic rendering and immersive VR.
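The abstract describes latent codes "anchored to the sphere" for 360-degree imaging. As a rough illustration of what anchoring to the sphere can mean for an equirectangular panorama, the sketch below maps a pixel position to a unit direction vector. This is not the authors' implementation: the function name `pixel_to_direction`, the y-up axis convention, and the longitude/latitude ranges are all assumptions chosen for the example.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map the center of pixel (u, v) in an equirectangular image to a unit vector.

    Assumed convention (hypothetical, not taken from the paper):
    longitude runs from -pi to pi, left to right;
    latitude runs from pi/2 to -pi/2, top to bottom;
    the y axis points up and longitude 0 faces +z.
    """
    # Normalize to pixel centers, then convert to angles on the sphere.
    lon = ((u + 0.5) / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - ((v + 0.5) / height) * math.pi

    # Standard spherical-to-Cartesian conversion on the unit sphere.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)
```

Under these assumptions, every pixel of the panorama gets a direction of length one, so features sampled at a pixel can be associated with a point on the sphere rather than with a position on a flat image grid.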
