“LiveNVS: Neural View Synthesis on Live RGB-D Streams” by Fink, Franke, Keinert and Stamminger

Title:

- LiveNVS: Neural View Synthesis on Live RGB-D Streams

Session/Category Title: Anything Can be Neural

Abstract:

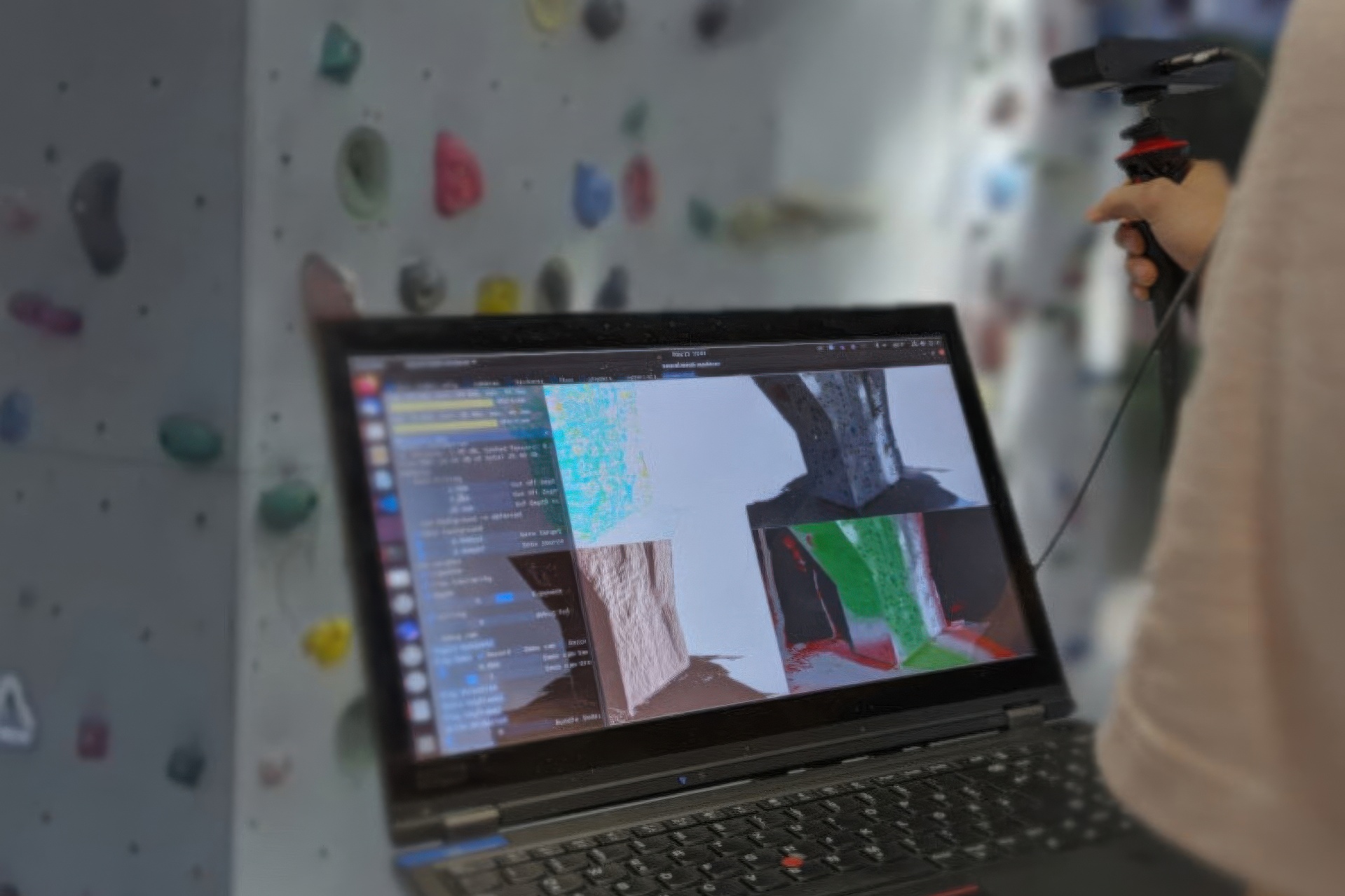

Existing real-time RGB-D reconstruction approaches, such as KinectFusion, lack real-time photo-realistic visualization. This is due to noisy, oversmoothed, or incomplete geometry and blurry textures that are fused from imperfect depth maps and camera poses. Recent neural rendering methods can overcome many of these artifacts but are mostly optimized for offline usage, which hinders their integration into a live reconstruction pipeline. In this paper, we present LiveNVS, a system that allows for neural novel view synthesis on a live RGB-D input stream with very low latency and real-time rendering. Based on the RGB-D input stream, novel views are rendered by projecting neural features into the target view via a densely fused depth map and aggregating the features in image space into a target feature map. A generalizable neural network then translates the target feature map into a high-quality RGB image. LiveNVS achieves state-of-the-art neural rendering quality on unknown scenes during capture, allowing users to virtually explore the scene and assess reconstruction quality in real time.
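The projection and aggregation step described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes a pinhole camera with intrinsics `K` shared by all views, nearest-neighbor sampling, and a plain per-pixel mean as the aggregation. It omits occlusion handling and the neural network that decodes the feature map to RGB. The function name `aggregate_features` and all parameter names are hypothetical.

```python
import numpy as np

def aggregate_features(target_depth, K, target_to_world, sources):
    """Gather source-view features into a target feature map (illustrative only).

    target_depth:    (H, W) fused depth in the target view; 0 marks missing geometry.
    K:               (3, 3) pinhole intrinsics, assumed shared by all views.
    target_to_world: (4, 4) camera-to-world pose of the target view.
    sources:         list of (features (Hs, Ws, C), world_to_cam (4, 4)) pairs.
    Returns the (H, W, C) per-pixel mean feature and the (H, W) sample count.
    """
    H, W = target_depth.shape
    C = sources[0][0].shape[-1]

    # Homogeneous pixel coordinates (u, v, 1) for every target pixel.
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)
    depth = target_depth.reshape(-1).astype(np.float64)

    # Unproject target pixels to 3D points in world space using the fused depth.
    cam = (np.linalg.inv(K) @ pix.T).T * depth[:, None]
    cam_h = np.concatenate([cam, np.ones((cam.shape[0], 1))], axis=1)
    world = (target_to_world @ cam_h.T).T

    feat_sum = np.zeros((H * W, C))
    count = np.zeros(H * W)
    for feats, world_to_cam in sources:
        Hs, Ws, _ = feats.shape
        # Transform the 3D points into the source camera and project them.
        src = (world_to_cam @ world.T).T[:, :3]
        z = src[:, 2]
        in_front = (depth > 0) & (z > 1e-6)
        proj = (K @ src.T).T
        safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero
        us = np.rint(proj[:, 0] / safe_z).astype(int)
        vs = np.rint(proj[:, 1] / safe_z).astype(int)
        valid = in_front & (us >= 0) & (us < Ws) & (vs >= 0) & (vs < Hs)
        # Nearest-neighbor lookup; no occlusion test is performed here.
        feat_sum[valid] += feats[vs[valid], us[valid]]
        count[valid] += 1

    # Plain mean over the views that saw each pixel (assumed aggregation).
    mean = feat_sum / np.maximum(count, 1)[:, None]
    return mean.reshape(H, W, C), count.reshape(H, W)
```

A learned, weighted aggregation and a visibility check against each source depth map would be natural refinements. The mean is used here only to keep the sketch short.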
