“Reconstructing Close Human Interaction from Multiple Views” by Shuai, Yu, Zhou, Fan, Yang, et al. …

Title:

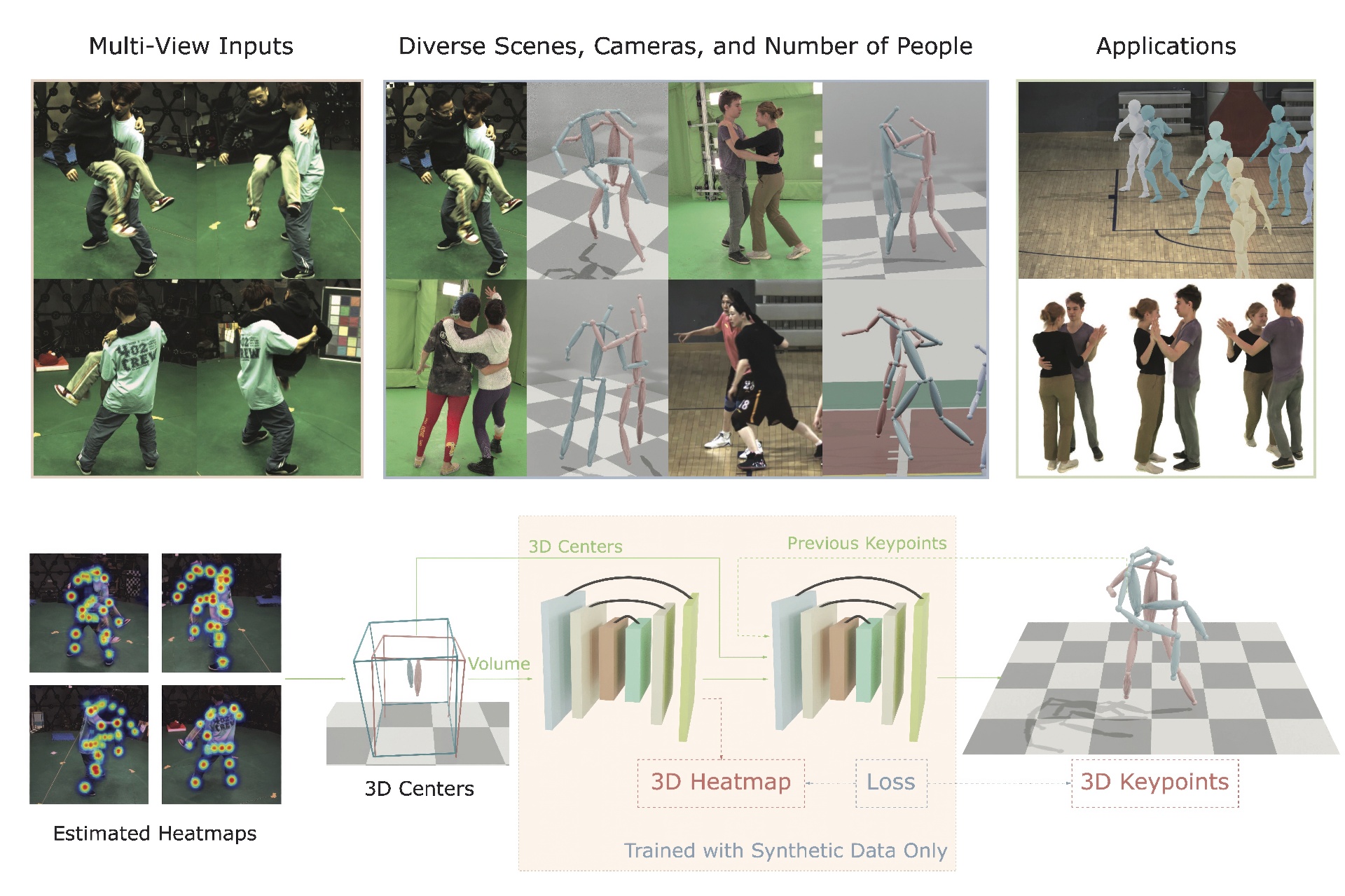

- Reconstructing Close Human Interaction from Multiple Views

Session/Category Title: Motion Capture and Reconstruction

Abstract:

This paper addresses the challenging task of reconstructing the poses of multiple individuals engaged in close interactions, captured by multiple calibrated cameras. The difficulty has three sources: noisy or false 2D keypoint detections caused by inter-person occlusion, heavy ambiguity in associating keypoints with individuals during close interactions, and a scarcity of training data, since collecting and annotating extensive data in crowded scenes is resource-intensive. We introduce a novel learning-based system to address these challenges. Our approach constructs a 3D volume from multi-view 2D keypoint heatmaps and feeds it into a conditional volumetric network that estimates the 3D pose of each individual. Because the network does not take images as input, we can leverage the known camera parameters of the test scenes and a large quantity of existing motion capture data to synthesize massive training data that mimics the distribution of the real data in those scenes. Extensive experiments across various camera setups and population sizes demonstrate that our approach significantly surpasses previous approaches in both pose accuracy and generalizability. The code will be made publicly available upon acceptance of the paper.
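The abstract relies on two mechanisms: building a 3D volume from multi-view keypoint heatmaps, and synthesizing those heatmaps from motion-capture joints through known cameras. The NumPy sketch below illustrates both. It is not the authors' implementation. The pinhole model `P = K [R | t]`, the Gaussian heatmaps with `sigma=2.0`, nearest-pixel sampling, and plain averaging across views (in the spirit of voxel-based multi-view pose methods) are all assumptions. The helper names `camera_matrix`, `project`, `render_heatmaps`, and `build_volume` are hypothetical, and the conditional volumetric network itself is not shown.

```python
import numpy as np

def camera_matrix(f, cx, cy, R, t):
    """Hypothetical helper: 3x4 pinhole projection P = K [R | t] (assumed camera model)."""
    K = np.array([[f, 0, cx], [0, f, cy], [0, 0, 1]], dtype=float)
    return K @ np.hstack([R, np.reshape(t, (3, 1))])

def project(P, X):
    """Project (N, 3) world points to (N, 2) pixel coordinates; also return depths."""
    x = np.hstack([X, np.ones((len(X), 1))]) @ P.T
    return x[:, :2] / x[:, 2:3], x[:, 2]

def render_heatmaps(P, joints3d, H, W, sigma=2.0):
    """Synthesize one Gaussian heatmap per joint by projecting mocap joints
    through a known camera (an idealized stand-in for a 2D detector's output)."""
    uv, _ = project(P, joints3d)
    ys, xs = np.mgrid[0:H, 0:W]
    d2 = (xs[None] - uv[:, 0, None, None]) ** 2 + (ys[None] - uv[:, 1, None, None]) ** 2
    return np.exp(-d2 / (2 * sigma ** 2))  # shape (J, H, W)

def build_volume(Ps, heatmaps, grid):
    """Per-joint volume: for each voxel center, average over views the heatmap
    value at its nearest-pixel projection (voxels outside a view contribute 0)."""
    J, n = heatmaps[0].shape[0], len(grid)
    vol = np.zeros((J, n))
    for P, hm in zip(Ps, heatmaps):
        H, W = hm.shape[1:]
        with np.errstate(divide="ignore", invalid="ignore"):  # voxels behind a camera are masked below
            uv, depth = project(P, grid)
            u, v = np.rint(uv).astype(int).T
        ok = (depth > 0) & (u >= 0) & (u < W) & (v >= 0) & (v < H)
        vol[:, ok] += hm[:, v[ok], u[ok]]
    return vol / len(Ps)

# Toy setup: two 64x64 views, one joint at (0.5, 0, 0), a 9x9x9 grid over [-1, 1]^3.
R1, t1 = np.eye(3), [0.0, 0.0, 5.0]
R2, t2 = np.array([[0.0, 0, 1], [0, 1, 0], [-1, 0, 0]]), [0.0, 0.0, 5.0]  # 90 degrees about y
Ps = [camera_matrix(50.0, 32.0, 32.0, R, t) for R, t in [(R1, t1), (R2, t2)]]
joints3d = np.array([[0.5, 0.0, 0.0]])
heatmaps = [render_heatmaps(P, joints3d, 64, 64) for P in Ps]
g = np.linspace(-1.0, 1.0, 9)
grid = np.stack(np.meshgrid(g, g, g, indexing="ij"), axis=-1).reshape(-1, 3)
vol = build_volume(Ps, heatmaps, grid)
print(grid[vol[0].argmax()], vol.max())  # the peak voxel coincides with the mocap joint
```

Because the sketch consumes only heatmaps and camera matrices, replacing `joints3d` with poses drawn from a motion-capture dataset yields synthetic training pairs without any images. A realistic pipeline would likely also need to simulate detector noise, occlusion, and false detections so that the synthetic heatmaps match real test-scene conditions. This toy example omits all of that.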