“ExtraSS: A Framework for Joint Spatial Super Sampling and Frame Extrapolation” by Wu, Kim, Zeng, Vembar, Jha, et al. …

Title:

- ExtraSS: A Framework for Joint Spatial Super Sampling and Frame Extrapolation

Session/Category Title: What're Your Points?

Abstract:

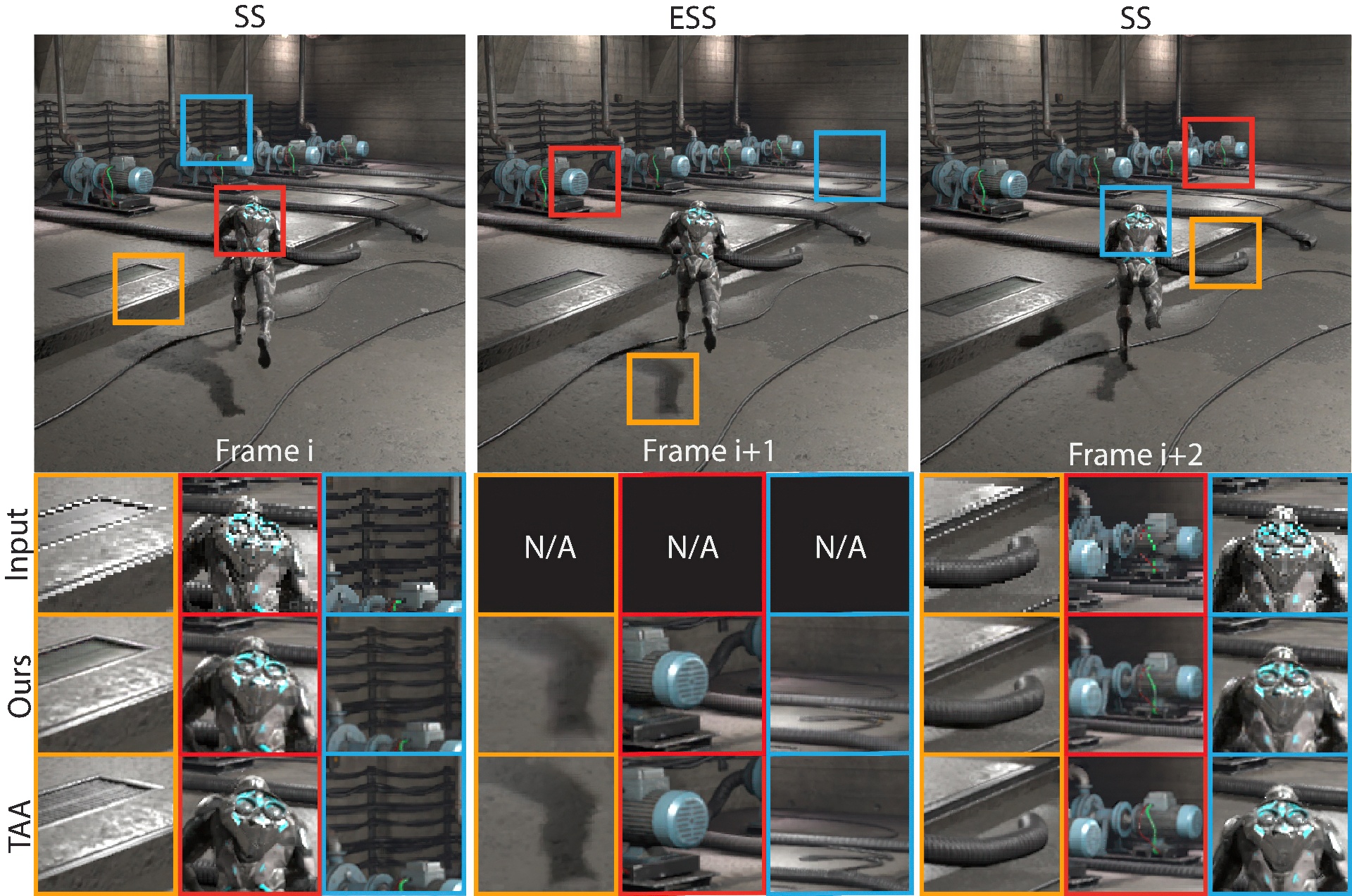

We introduce ExtraSS, a novel framework that combines spatial super sampling and frame extrapolation to enhance real-time rendering performance. By integrating these techniques, our approach balances performance and quality, generating temporally stable, high-quality, high-resolution results. Using lightweight warping modules and ExtraSSNet for refinement, we exploit spatial-temporal information, improve rendering sharpness, and handle moving shading accurately. Computational costs are significantly reduced compared to traditional rendering methods, enabling higher frame rates and alias-free, high-resolution results. Evaluation in Unreal Engine demonstrates the benefits of our framework over conventional spatial-only or temporal-only super sampling methods, delivering improved rendering speed and visual quality. By generating temporally stable, high-quality results, our framework opens new possibilities for real-time rendering applications and advances performance and photo-realistic rendering across domains.
