“FuseSR: Super Resolution for Real-time Rendering through Efficient Multi-resolution Fusion” by Zhong, Zhu, Dai, Zheng, Huo, et al. …

Conference:

Type(s):

Title:

- FuseSR: Super Resolution for Real-time Rendering through Efficient Multi-resolution Fusion

Session/Category Title: Rendering

Presenter(s)/Author(s):

Abstract:

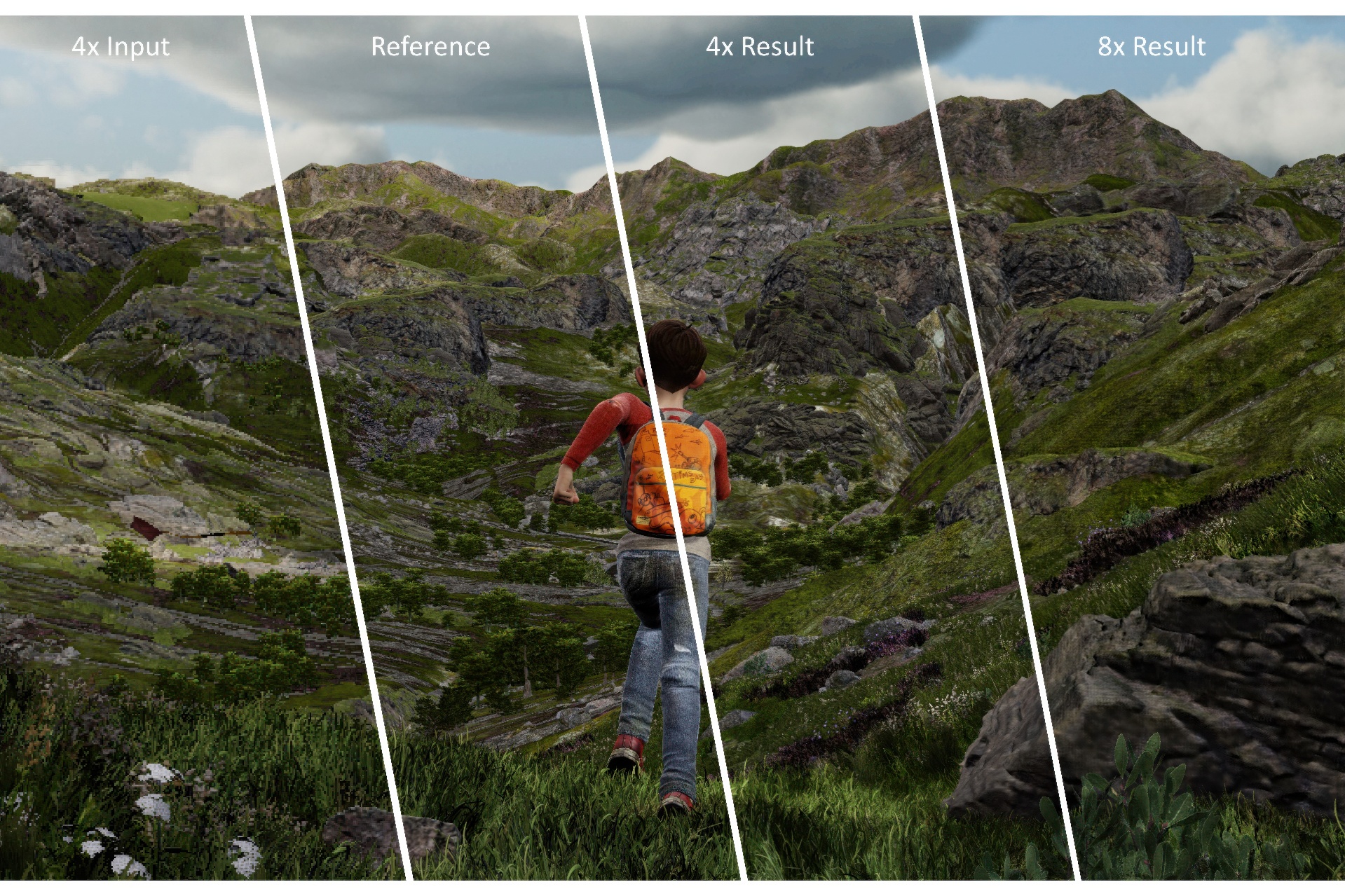

The workload of real-time rendering is increasing steeply as the demand for high resolution, high refresh rates, and high realism rises, overwhelming most graphics cards. One of the most popular ways to mitigate this problem is to render images at a low resolution to reduce rendering overhead and then accurately upsample the low-resolution (LR) rendered image to the target resolution, an approach known as super-resolution. Most existing methods focus on exploiting information from low-resolution inputs, such as historical frames. The absence of high-frequency details in those LR inputs makes it hard for these methods to recover fine details in their high-resolution (HR) predictions. In this paper, we propose an efficient and effective super-resolution method that predicts high-quality upsampled reconstructions by using low-cost high-resolution auxiliary G-buffers as additional input. With LR images and HR G-buffers as input, the network must align and fuse features at multiple resolution levels. We introduce an efficient and effective H-Net architecture to solve this problem and significantly reduce rendering overhead without noticeable quality deterioration. Experiments show that our method produces temporally consistent reconstructions in $4 \times 4$ and even challenging $8 \times 8$ upsampling cases at 4K resolution with real-time performance, with substantially improved quality and a significant performance boost compared to existing works.
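To give a sense of scale for the upsampling factors mentioned in the abstract, the following is a minimal arithmetic sketch, not code from the paper. It assumes that "4K" means a 3840×2160 target and that "$4 \times 4$" and "$8 \times 8$" denote the upsampling factor along each image axis. The function names `low_res_size` and `shaded_pixel_fraction` are hypothetical and chosen only for illustration.

```python
def low_res_size(target_w, target_h, factor):
    """Return the (width, height) rendered before upsampling by `factor` per axis."""
    if target_w % factor or target_h % factor:
        raise ValueError("target size must be divisible by the upsampling factor")
    return target_w // factor, target_h // factor


def shaded_pixel_fraction(factor):
    """Fraction of target pixels shaded at low resolution (1 / factor^2)."""
    return 1.0 / (factor * factor)


# Assumption: "4K" is taken to mean 3840x2160; the abstract does not spell this out.
TARGET_4K = (3840, 2160)

for factor in (4, 8):
    w, h = low_res_size(*TARGET_4K, factor)
    fraction = shaded_pixel_fraction(factor)
    print(f"{factor}x{factor}: render {w}x{h}, shading {fraction:.4%} of the target pixels")
```

Under these assumptions, the low-resolution image carries 1/16 of the target pixels in the $4 \times 4$ case and 1/64 in the $8 \times 8$ case, which is why high-frequency detail has to come from another source such as the HR G-buffers.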
