“Neural Motion Graph” by Tao, Hou, Zou, Bao and Xu

Title:

- Neural Motion Graph

Session/Category Title: Flesh & Bones

Abstract:

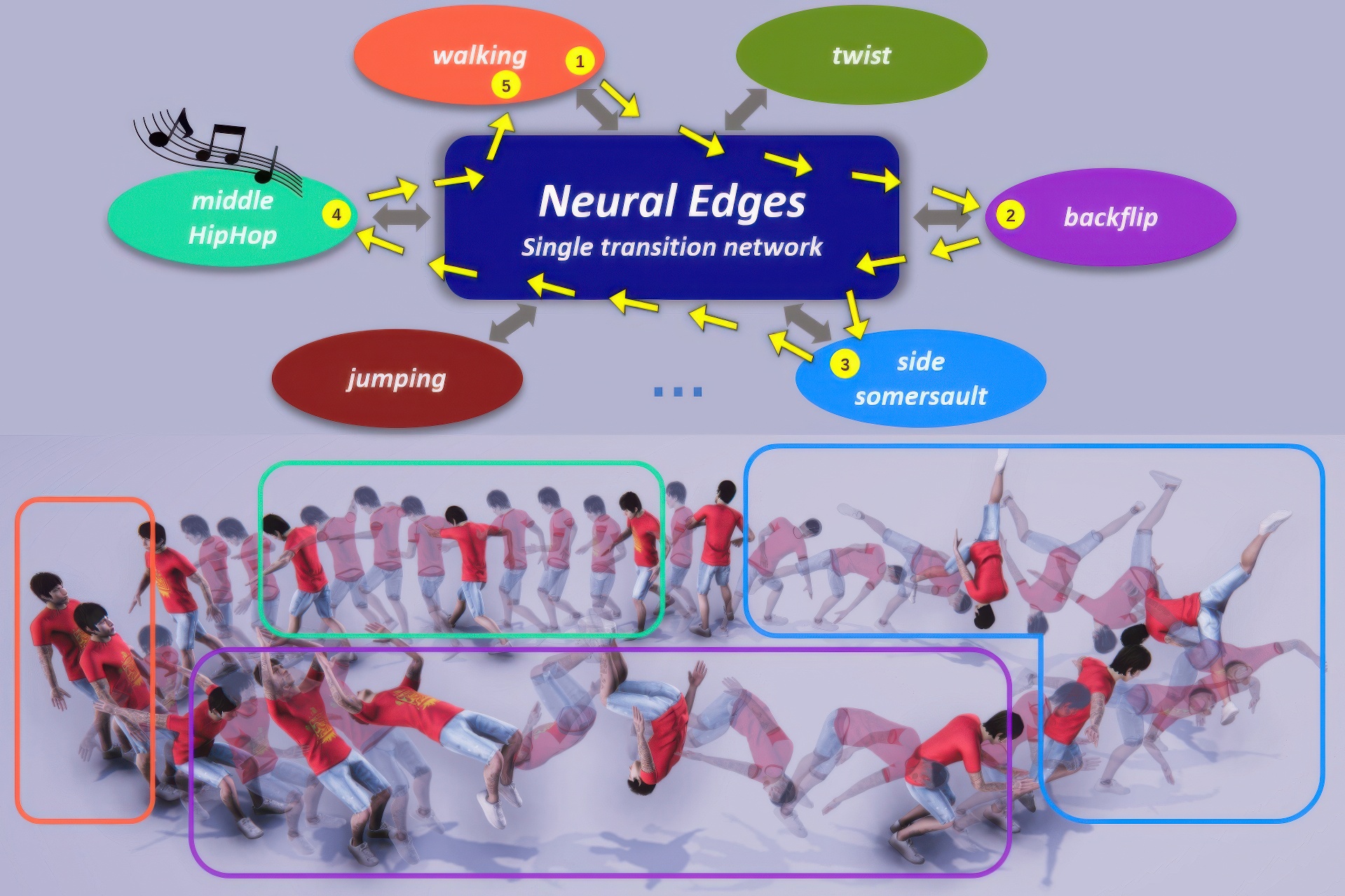

Deep learning techniques have been used to build controllable human motion synthesizers. However, designing a neural motion synthesizer that supports flexible user interaction, fine-grained control, and new motion types at low time and space cost remains a challenge. In this paper, we propose the neural motion graph, a novel approach that addresses this challenge by scaling to new motions while using compact neural networks. Our approach represents each motion type with a separate neural node, which reduces the cost of adding new motion types. Dedicating a node to each motion type also enables task-specific control strategies and makes high-quality synthesis of complex motions, such as Mongolian dance, more attainable. In addition, a single transition network acts as the neural edges, modeling the transition between pairs of motion nodes. The transition network includes a lightweight control module that responds to user control signals at a fine-grained level. Together, these design choices make the neural motion graph highly controllable and scalable. Besides responding fully to both high-level and fine-grained user control signals, the neural motion graph outperforms state-of-the-art human motion synthesis methods in the quality of controlled motion generation, according to our experimental and subjective evaluations.
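The abstract describes the architecture only at a high level. The sketch below is one hypothetical way to picture its data flow in plain Python: each motion type owns its own node, one shared transition network serves as every edge, and a scalar gain stands in for the lightweight control module. The class names (`MotionNode`, `TransitionNetwork`, `NeuralMotionGraph`), the random linear maps, and the linear blending schedule are all assumptions made for illustration; they are not the paper's networks, inputs, or training procedure.

```python
import random
from typing import Dict, List

Pose = List[float]


class MotionNode:
    """Hypothetical stand-in for one per-motion-type network ("neural node").

    It predicts the next pose from the current pose and a control signal.
    A fixed random linear map with a residual connection replaces the real
    network, so this only illustrates the data flow.
    """

    def __init__(self, name: str, dim: int, seed: int) -> None:
        rng = random.Random(seed)
        self.name = name
        # One row per output channel; each row reads the features [pose, control].
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(2 * dim)]
                        for _ in range(dim)]

    def step(self, pose: Pose, control: Pose) -> Pose:
        features = pose + control  # list concatenation, length 2 * dim
        return [p + sum(w * f for w, f in zip(row, features))
                for p, row in zip(pose, self.weights)]


class TransitionNetwork:
    """Hypothetical single "neural edge" shared by every pair of nodes.

    It blends the source and target nodes' predictions by transition
    progress t; a scalar control gain (assumed stand-in for the lightweight
    control module) injects the user control signal directly.
    """

    def __init__(self, control_gain: float = 0.5) -> None:
        self.control_gain = control_gain

    def step(self, src_pred: Pose, dst_pred: Pose, control: Pose, t: float) -> Pose:
        return [(1.0 - t) * a + t * b + self.control_gain * c
                for a, b, c in zip(src_pred, dst_pred, control)]


class NeuralMotionGraph:
    def __init__(self, dim: int, transition: TransitionNetwork) -> None:
        self.dim = dim
        self.nodes: Dict[str, MotionNode] = {}
        self.transition = transition  # one edge model for all node pairs

    def add_motion_type(self, name: str, seed: int) -> None:
        # A new motion type adds one node; existing nodes and the shared
        # transition network are left untouched.
        self.nodes[name] = MotionNode(name, self.dim, seed)

    def rollout(self, name: str, pose: Pose, control: Pose, frames: int) -> List[Pose]:
        node, out = self.nodes[name], []
        for _ in range(frames):
            pose = node.step(pose, control)
            out.append(pose)
        return out

    def transition_between(self, src: str, dst: str, pose: Pose,
                           control: Pose, frames: int) -> List[Pose]:
        out = []
        for i in range(1, frames + 1):
            t = i / frames  # progress reaches exactly 1.0 on the last frame
            src_pred = self.nodes[src].step(pose, control)
            dst_pred = self.nodes[dst].step(pose, control)
            pose = self.transition.step(src_pred, dst_pred, control, t)
            out.append(pose)
        return out


if __name__ == "__main__":
    graph = NeuralMotionGraph(dim=3, transition=TransitionNetwork())
    graph.add_motion_type("walk", seed=0)
    graph.add_motion_type("dance", seed=1)
    no_control = [0.0, 0.0, 0.0]
    walk = graph.rollout("walk", [0.1, 0.2, 0.3], no_control, frames=5)
    blend = graph.transition_between("walk", "dance", walk[-1], no_control, frames=4)
    print(blend[-1])
```

In this sketch, `add_motion_type` grows the graph by one node without touching existing nodes or the shared edge model, which mirrors the scalability argument in the abstract: supporting a new motion costs one node rather than one edge model per node pair.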
