“Zero-Shot 3D Shape Correspondence” by Abdelreheem, Eldesokey, Ovsjanikov and Wonka

Conference:

Type(s):

Title:

- Zero-Shot 3D Shape Correspondence

Session/Category Title: Embed to a Different Space

Presenter(s)/Author(s):

Abstract:

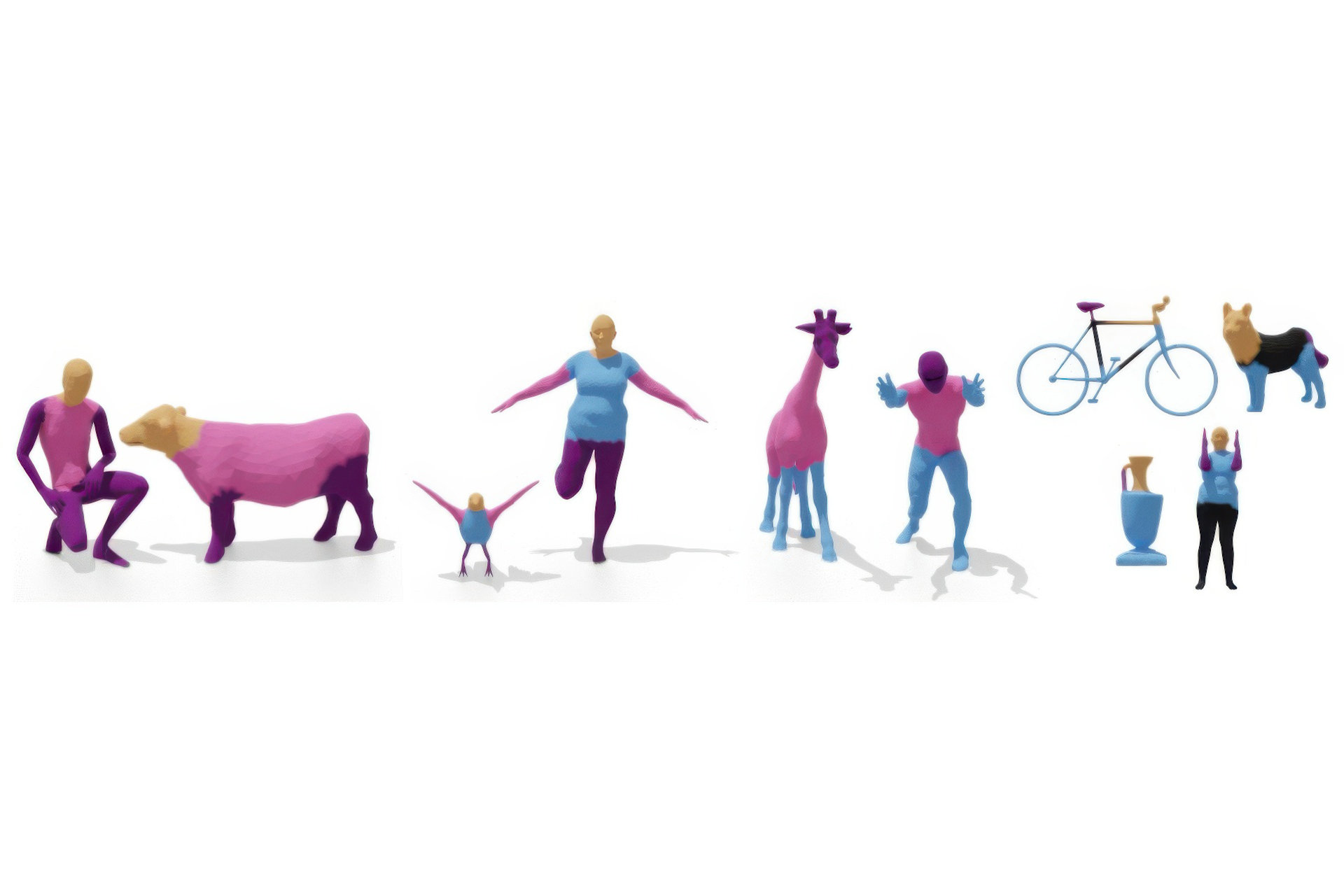

We propose a novel zero-shot approach to computing correspondences between 3D shapes. Existing approaches mainly focus on isometric and near-isometric shape pairs (e.g., human vs. human), but less attention has been given to strongly non-isometric and inter-class shape matching (e.g., human vs. cow). To this end, we introduce a fully automatic method that exploits the exceptional reasoning capabilities of recent foundation models in language and vision to tackle difficult shape correspondence problems. Our approach comprises multiple stages. First, we classify the 3D shapes in a zero-shot manner by feeding rendered shape views to a language-vision model (e.g., BLIP2) to generate a list of class proposals per shape. These proposals are unified into a single class per shape by employing the reasoning capabilities of ChatGPT. Second, we attempt to segment the two shapes in a zero-shot manner, but in contrast to the co-segmentation problem, we do not require a mutual set of semantic regions. Instead, we propose to exploit the in-context learning capabilities of ChatGPT to generate two different sets of semantic regions for each shape and a semantic mapping between them. This enables our approach to match strongly non-isometric shapes with significant differences in geometric structure. Finally, we employ the generated semantic mapping to produce coarse correspondences that can further be refined by the functional maps framework to produce dense point-to-point maps. Our approach, despite its simplicity, produces highly plausible results in a zero-shot manner, especially between strongly non-isometric shapes.

References:

[1]

Ahmed Abdelreheem, Kyle Olszewski, Hsin-Ying Lee, Peter Wonka, and Panos Achlioptas. 2022. ScanEnts3D: Exploiting Phrase-to-3D-Object Correspondences for Improved Visio-Linguistic Models in 3D Scenes. ArXiv abs/2212.06250 (2022).

[2]

Ahmed Abdelreheem, Ivan Skorokhodov, Maks Ovsjanikov, and Peter Wonka. 2023. SATR: Zero-Shot Semantic Segmentation of 3D Shapes. arxiv:2304.04909 [cs.CV]

[3]

Mathieu Aubry, Ulrich Schlickewei, and Daniel Cremers. 2011. The wave kernel signature: A quantum mechanical approach to shape analysis. In 2011 IEEE international conference on computer vision workshops (ICCV workshops). IEEE, 1626–1633.

[4]

Federica Bogo, Javier Romero, Matthew Loper, and Michael J Black. 2014. FAUST: Dataset and evaluation for 3D mesh registration. In Proceedings of the IEEE conference on computer vision and pattern recognition. 3794–3801.

[5]

D. Boscaini, J. Masci, S. Melzi, M. M. Bronstein, U. Castellani, and P. Vandergheynst. 2015. Learning class-specific descriptors for deformable shapes using localized spectral convolutional networks. Computer Graphics Forum 34, 5 (2015), 13–23. https://doi.org/10.1111/cgf.12693 _eprint: https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.12693.

[6]

Davide Boscaini, Jonathan Masci, Emanuele Rodolà, and Michael Bronstein. 2016. Learning shape correspondence with anisotropic convolutional neural networks. In Advances in Neural Information Processing Systems, Vol. 29. Curran Associates, Inc.https://proceedings.neurips.cc/paper/2016/hash/228499b55310264a8ea0e27b6e7c6ab6-Abstract.html

[7]

Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, 2020. Language models are few-shot learners. Advances in neural information processing systems 33 (2020), 1877–1901.

[8]

Dongliang Cao and Florian Bernard. 2023. Self-Supervised Learning for Multimodal Non-Rigid 3D Shape Matching. https://doi.org/10.48550/arXiv.2303.10971 arXiv:2303.10971 [cs].

[9]

Dongliang Cao, Paul Roetzer, and Florian Bernard. 2023. Unsupervised Learning of Robust Spectral Shape Matching. arXiv preprint arXiv:2304.14419 (2023).

[10]

Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. 2021. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF international conference on computer vision. 9650–9660.

[11]

Angel X Chang, Thomas Funkhouser, Leonidas Guibas, Pat Hanrahan, Qixing Huang, Zimo Li, Silvio Savarese, Manolis Savva, Shuran Song, Hao Su, 2015. Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012 (2015).

[12]

Runnan Chen, Xinge Zhu, Nenglun Chen, Wei Li, Yuexin Ma, Ruigang Yang, and Wenping Wang. 2022. Zero-shot Point Cloud Segmentation by Transferring Geometric Primitives. arXiv preprint arXiv:2210.09923 (2022).

[13]

Ali Cheraghian, Shafin Rahman, Dylan Campbell, and Lars Petersson. 2020. Transductive zero-shot learning for 3d point cloud classification. In Proceedings of the IEEE/CVF winter conference on applications of computer vision. 923–933.

[14]

Ali Cheraghian, Shafin Rahman, and Lars Petersson. 2019. Zero-shot learning of 3d point cloud objects. In 2019 16th International Conference on Machine Vision Applications (MVA). IEEE, 1–6.

[15]

Dale Decatur, Itai Lang, and Rana Hanocka. 2023. 3d highlighter: Localizing regions on 3d shapes via text descriptions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 20930–20939.

[16]

Runyu Ding, Jihan Yang, Chuhui Xue, Wenqing Zhang, Song Bai, and Xiaojuan Qi. 2022. Language-driven Open-Vocabulary 3D Scene Understanding. arXiv preprint arXiv:2211.16312 (2022).

[17]

Nicolas Donati, Abhishek Sharma, and Maks Ovsjanikov. 2020. Deep Geometric Functional Maps: Robust Feature Learning for Shape Correspondence. 8592–8601. https://openaccess.thecvf.com/content_CVPR_2020/html/Donati_Deep_Geometric_Functional_Maps_Robust_Feature_Learning_for_Shape_Correspondence_CVPR_2020_paper.html

[18]

Zhiwen Fan, Peihao Wang, Yifan Jiang, Xinyu Gong, Dejia Xu, and Zhangyang Wang. 2022. NeRF-SOS: Any-View Self-supervised Object Segmentation on Complex Scenes. arXiv preprint arXiv:2209.08776 (2022).

[19]

Xiao Fu, Shangzhan Zhang, Tianrun Chen, Yichong Lu, Lanyun Zhu, Xiaowei Zhou, Andreas Geiger, and Yiyi Liao. 2022. Panoptic nerf: 3d-to-2d label transfer for panoptic urban scene segmentation. arXiv preprint arXiv:2203.15224 (2022).

[20]

Afzal Godil, Helin Dutagaci, Ceyhun Burak Akgül, Apostolos Axenopoulos, Benjamín Bustos, Mohamed Chaouch, Petros Daras, Takahiko Furuya, Sebastian Kreft, Zhouhui Lian, Thibault Napoléon, Athanasios Mademlis, Ryutarou Ohbuchi, Paul L. Rosin, Bülent Sankur, Tobias Schreck, Xianfang Sun, Masaki Tezuka, Anne Verroust-Blondet, Michael Walter, and Yücel Yemez. 2009. SHREC 2009 – Generic Shape Retrieval Contest.

[21]

Rahul Goel, Dhawal Sirikonda, Saurabh Saini, and PJ Narayanan. 2022. Interactive Segmentation of Radiance Fields. arXiv preprint arXiv:2212.13545 (2022).

[22]

Oshri Halimi, Or Litany, Emanuele Rodolà Rodolà, Alex M. Bronstein, and Ron Kimmel. 2019. Unsupervised Learning of Dense Shape Correspondence. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 4365–4374. https://doi.org/10.1109/CVPR.2019.00450 ISSN: 2575-7075.

[23]

Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan-Yen Lo, Piotr Dollár, and Ross Girshick. 2023. Segment Anything. arXiv:2304.02643 (2023).

[24]

Yanir Kleiman and Maks Ovsjanikov. 2019. Robust structure-based shape correspondence. In Computer Graphics Forum, Vol. 38. Wiley Online Library, 7–20.

[25]

Sosuke Kobayashi, Eiichi Matsumoto, and Vincent Sitzmann. 2022. Decomposing NeRF for Editing via Feature Field Distillation. arXiv preprint arXiv:2205.15585 (2022).

[26]

Abhijit Kundu, Kyle Genova, Xiaoqi Yin, Alireza Fathi, Caroline Pantofaru, Leonidas J Guibas, Andrea Tagliasacchi, Frank Dellaert, and Thomas Funkhouser. 2022. Panoptic neural fields: A semantic object-aware neural scene representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12871–12881.

[27]

Boyi Li, Kilian Q Weinberger, Serge Belongie, Vladlen Koltun, and René Ranftl. 2022c. Language-driven semantic segmentation. arXiv preprint arXiv:2201.03546 (2022).

[28]

Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. 2023. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arxiv:2301.12597 [cs.CV]

[29]

Lei Li, Souhaib Attaiki, and Maks Ovsjanikov. 2022a. SRFeat: Learning Locally Accurate and Globally Consistent Non-Rigid Shape Correspondence. In International Conference on 3D Vision (3DV). IEEE.

[30]

Liunian Harold Li, Pengchuan Zhang, Haotian Zhang, Jianwei Yang, Chunyuan Li, Yiwu Zhong, Lijuan Wang, Lu Yuan, Lei Zhang, Jenq-Neng Hwang, 2022d. Grounded language-image pre-training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10965–10975.

[31]

Yang Li, Hikari Takehara, Takafumi Taketomi, Bo Zheng, and Matthias Nießner. 2021. 4DComplete: Non-Rigid Motion Estimation Beyond the Observable Surface. arxiv:2105.01905 [cs.CV]

[32]

Yuchen Li, Ujjwal Upadhyay, Habib Slim, Ahmed Abdelreheem, Arpita Prajapati, Suhail Pothigara, Peter Wonka, and Mohamed Elhoseiny. 2022b. 3D CoMPaT: Composition of Materials on Parts of 3D Things. In European Conference on Computer Vision.

[33]

Or Litany, Tal Remez, Emanuele Rodolà, Alex M. Bronstein, and Michael M. Bronstein. 2017. Deep Functional Maps: Structured Prediction for Dense Shape Correspondence. https://doi.org/10.48550/arXiv.1704.08686 arXiv:1704.08686 [cs].

[34]

Minghua Liu, Yinhao Zhu, Hong Cai, Shizhong Han, Zhan Ling, Fatih Porikli, and Hao Su. 2022. PartSLIP: Low-Shot Part Segmentation for 3D Point Clouds via Pretrained Image-Language Models. arXiv preprint arXiv:2212.01558 (2022).

[35]

Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, 2023. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. arXiv preprint arXiv:2303.05499 (2023).

[36]

Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. 2019. Neural volumes: Learning dynamic renderable volumes from images. arXiv preprint arXiv:1906.07751 (2019).

[37]

Jonathan Masci, Davide Boscaini, Michael Bronstein, and Pierre Vandergheynst. 2015. Geodesic convolutional neural networks on riemannian manifolds. In Proceedings of the IEEE international conference on computer vision workshops. 37–45.

[38]

Björn Michele, Alexandre Boulch, Gilles Puy, Maxime Bucher, and Renaud Marlet. 2021. Generative zero-shot learning for semantic segmentation of 3d point clouds. In 2021 International Conference on 3D Vision (3DV). IEEE, 992–1002.

[39]

Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. 2020. Nerf: Representing scenes as neural radiance fields for view synthesis. In European conference on computer vision. Springer, 405–421.

[40]

Muhammad Ferjad Naeem, Evin Pinar Ornek, Yongqin Xian, Luc Van Gool, and Federico Tombari. 2021. 3D Compositional Zero-shot Learning with DeCompositional Consensus. ArXiv abs/2111.14673 (2021). https://api.semanticscholar.org/CorpusID:244714384

[41]

OpenAI. 2021. GPT-3.5 Language Model. OpenAI. https://www.openai.com/research/gpt-3Accessed: May 21, 2023.

[42]

Long Ouyang, Jeffrey Wu, Xu Jiang, Diogo Almeida, Carroll Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems 35 (2022), 27730–27744.

[43]

Maks Ovsjanikov, Mirela Ben-Chen, Justin Solomon, Adrian Butscher, and Leonidas Guibas. 2012. Functional maps: a flexible representation of maps between shapes. ACM Transactions on Graphics 31, 4 (July 2012), 30:1–30:11. https://doi.org/10.1145/2185520.2185526

[44]

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. 2020. Exploring the limits of transfer learning with a unified text-to-text transformer. The Journal of Machine Learning Research 21, 1 (2020), 5485–5551.

[45]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125 (2022).

[46]

Jing Ren, Adrien Poulenard, Peter Wonka, and Maks Ovsjanikov. 2018a. Continuous and orientation-preserving correspondences via functional maps. ACM Transactions on Graphics (TOG) 37 (2018), 1 – 16.

[47]

Jing Ren, Adrien Poulenard, Peter Wonka, and Maks Ovsjanikov. 2018b. Continuous and Orientation-preserving Correspondences via Functional Maps. arxiv:1806.04455 [cs.GR]

[48]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10684–10695.

[49]

Yusuf Sahillioğlu. 2020. Recent advances in shape correspondence. The Visual Computer 36, 8 (Aug. 2020), 1705–1721. https://doi.org/10.1007/s00371-019-01760-0

[50]

Teven Le Scao, Angela Fan, Christopher Akiki, Ellie Pavlick, Suzana Ilić, Daniel Hesslow, Roman Castagné, Alexandra Sasha Luccioni, François Yvon, Matthias Gallé, 2022. Bloom: A 176b-parameter open-access multilingual language model. arXiv preprint arXiv:2211.05100 (2022).

[51]

Nur Muhammad Mahi Shafiullah, Chris Paxton, Lerrel Pinto, Soumith Chintala, and Arthur Szlam. 2022. Clip-fields: Weakly supervised semantic fields for robotic memory. arXiv preprint arXiv:2210.05663 (2022).

[52]

Yawar Siddiqui, Lorenzo Porzi, Samuel Rota Buló, Norman Müller, Matthias Nießner, Angela Dai, and Peter Kontschieder. 2022. Panoptic Lifting for 3D Scene Understanding with Neural Fields. arXiv:arXiv:2212.09802

[53]

Vadim Tschernezki, Iro Laina, Diane Larlus, and Andrea Vedaldi. 2022. Neural feature fusion fields: 3d distillation of self-supervised 2d image representations. arXiv preprint arXiv:2209.03494 (2022).

[54]

Oliver Van Kaick, Hao Zhang, Ghassan Hamarneh, and Daniel Cohen-Or. 2011. A survey on shape correspondence. In Computer graphics forum, Vol. 30. Wiley Online Library, 1681–1707.

[55]

Suhani Vora, Noha Radwan, Klaus Greff, Henning Meyer, Kyle Genova, Mehdi SM Sajjadi, Etienne Pot, Andrea Tagliasacchi, and Daniel Duckworth. 2021. Nesf: Neural semantic fields for generalizable semantic segmentation of 3d scenes. arXiv preprint arXiv:2111.13260 (2021).

[56]

Lingyu Wei, Qixing Huang, Duygu Ceylan, Etienne Vouga, and Hao Li. 2016. Dense Human Body Correspondences Using Convolutional Networks. https://doi.org/10.48550/arXiv.1511.05904 arXiv:1511.05904 [cs].

[57]

Chenfei Wu, Shengming Yin, Weizhen Qi, Xiaodong Wang, Zecheng Tang, and Nan Duan. 2023. Visual chatgpt: Talking, drawing and editing with visual foundation models. arXiv preprint arXiv:2303.04671 (2023).

[58]

Zhirong Wu, Shuran Song, Aditya Khosla, Fisher Yu, Linguang Zhang, Xiaoou Tang, and Jianxiong Xiao. 2015. 3D ShapeNets: A Deep Representation for Volumetric Shapes. https://doi.org/10.48550/arXiv.1406.5670 arXiv:1406.5670 [cs].

[59]

Fanbo Xiang, Yuzhe Qin, Kaichun Mo, Yikuan Xia, Hao Zhu, Fangchen Liu, Minghua Liu, Hanxiao Jiang, Yifu Yuan, He Wang, Li Yi, Angel X. Chang, Leonidas J. Guibas, and Hao Su. 2020. SAPIEN: A SimulAted Part-based Interactive ENvironment. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[60]

Renrui Zhang, Ziyu Guo, Wei Zhang, Kunchang Li, Xupeng Miao, Bin Cui, Yu Qiao, Peng Gao, and Hongsheng Li. 2021. PointCLIP: Point Cloud Understanding by CLIP. arXiv preprint arXiv:2112.02413 (2021).

[61]

Shuaifeng Zhi, Tristan Laidlow, Stefan Leutenegger, and Andrew J. Davison. 2021. In-Place Scene Labelling and Understanding with Implicit Scene Representation. In Proceedings of theIEEE International Conference on Computer Vision.

[62]

Deyao Zhu, Jun Chen, Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. 2023. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv preprint arXiv:2304.10592 (2023).

[63]

Xiangyang Zhu, Renrui Zhang, Bowei He, Ziyao Zeng, Shanghang Zhang, and Peng Gao. 2022. PointCLIP V2: Adapting CLIP for Powerful 3D Open-world Learning. ArXiv abs/2211.11682 (2022). https://api.semanticscholar.org/CorpusID:253735373

[64]

Silvia Zuffi, Angjoo Kanazawa, David Jacobs, and Michael J. Black. 2017. 3D Menagerie: Modeling the 3D Shape and Pose of Animals. In IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).