“DROP: Dynamics Responses from Human Motion Prior and Projective Dynamics” by Jiang, Won, Ye and Liu

Session/Category Title: Technical Papers Fast-Forward

Abstract:

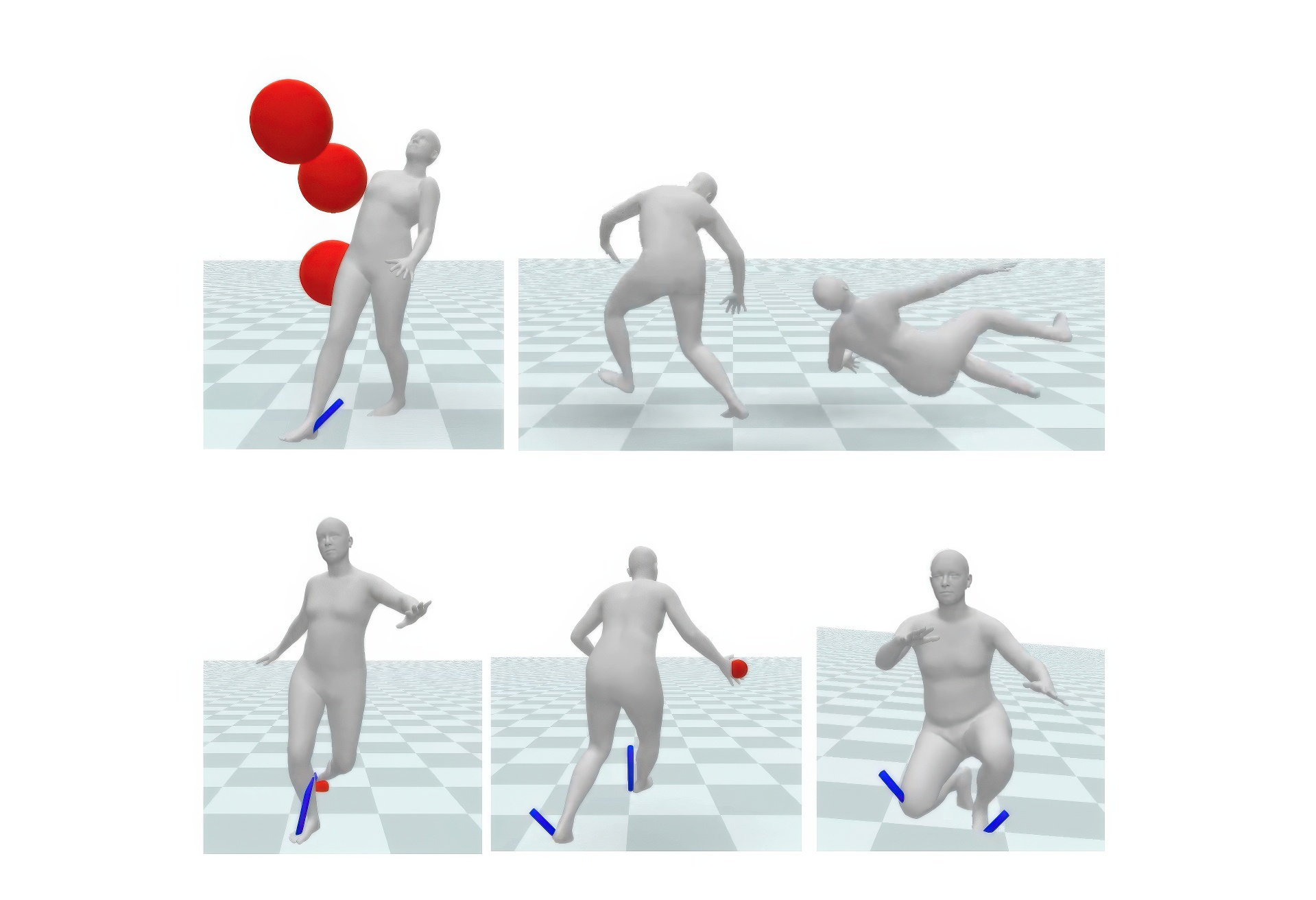

Synthesizing realistic human movements that respond dynamically to the environment is a long-standing objective in character animation, with applications in motion prediction and data augmentation for computer vision, sports, and healthcare. Recent kinematics-based generative motion models offer impressive scalability in modeling extensive motion data, but they lack an interface for reasoning about and interacting with physics. While simulator-in-the-loop learning approaches enable highly physically realistic behaviors, the difficulty of training them often limits their scalability and adoption. We introduce DROP, a novel framework for modeling Dynamics Responses of humans using generative mOtion prior and Projective dynamics. DROP can be viewed as a highly stable, minimalist physics-based human simulator that interfaces with a kinematics-based generative motion prior. Utilizing projective dynamics, DROP allows flexible and simple integration of the learned motion prior as one of the projective energies, seamlessly combining the control provided by the motion prior with Newtonian dynamics. Serving as a model-agnostic plug-in, DROP enables us to fully leverage recent advances in generative motion models for physics-based motion synthesis. We conduct extensive evaluations of our model across different motion tasks and various physical perturbations, demonstrating the scalability and diversity of the responses.
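The abstract's central mechanism, treating a motion prior as just another projective energy, can be illustrated with a minimal NumPy sketch of one projective dynamics time step (the local/global scheme of Bouaziz et al. 2014). This is not DROP's implementation. It assumes the character is reduced to point masses joined by bone-length constraints, and it represents the motion prior by a hypothetical array `prior_targets` of per-particle target positions, whose projection is simply the target itself. The function name `pd_step`, the weights, the time step, and the gravity convention are illustrative choices.

```python
import numpy as np


def pd_step(x, v, masses, edges, rest_lengths, prior_targets,
            h=1.0 / 60.0, w_edge=1e4, w_prior=1e2, gravity=None, iters=10):
    """One projective dynamics step for a point-mass skeleton (illustrative sketch).

    x, v: (n, dim) positions and velocities; masses: (n,)
    edges: list of (a, b) particle index pairs acting as bones
    prior_targets: (n, dim) hypothetical pose predicted by a motion prior
    """
    n, dim = x.shape
    if gravity is None:
        gravity = np.zeros(dim)
        gravity[1] = -9.81  # assume the second axis points up
    gravity = np.asarray(gravity, dtype=float)
    M = np.diag(masses)

    # Inertial prediction s_n = x_n + h v_n + h^2 M^{-1} f_ext, with f_ext = m g.
    s = x + h * v + h * h * gravity

    # Global-step matrix: M / h^2 + sum_i w_i S_i^T S_i. It depends only on
    # masses, weights and topology, so a real solver would prefactor it once.
    A = M / h**2 + w_prior * np.eye(n)  # hypothetical prior energy on every particle
    selectors = []
    for a, b in edges:
        g = np.zeros(n)
        g[a], g[b] = 1.0, -1.0  # S_i picks out the bone vector x_a - x_b
        selectors.append(g)
        A += w_edge * np.outer(g, g)

    q = s.copy()
    for _ in range(iters):
        rhs = M @ s / h**2
        # Local step: project each bone vector onto its rest length.
        for g, (a, b), rest in zip(selectors, edges, rest_lengths):
            d = q[a] - q[b]
            p = rest * d / max(np.linalg.norm(d), 1e-12)
            rhs += w_edge * np.outer(g, p)
        # The prior energy's projection is simply its target pose.
        rhs += w_prior * prior_targets
        # Global step: one linear solve shared by all coordinates.
        q = np.linalg.solve(A, rhs)

    return q, (q - x) / h
```

For example, giving the middle particle of a three-particle vertical chain an initial sideways velocity (a push) moves it away from the prior's pose while the bone constraints keep segment lengths close to their rest values. Raising `w_prior` relative to the inertial term `M / h**2` makes the response track the prior more closely; lowering it lets Newtonian dynamics dominate. Because the global matrix depends only on masses, weights, and topology, a practical solver would factor it once (for instance with a Cholesky factorization) instead of calling `np.linalg.solve` on every iteration.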
