“Emotional Speech-Driven Animation with Content-Emotion Disentanglement” by Daněček, Chhatre, Tripathi, Wen, Black, et al. …

Conference:

Type(s):

Title:

- Emotional Speech-Driven Animation with Content-Emotion Disentanglement

Session/Category Title: Technical Papers Fast-Forward

Presenter(s)/Author(s):

Abstract:

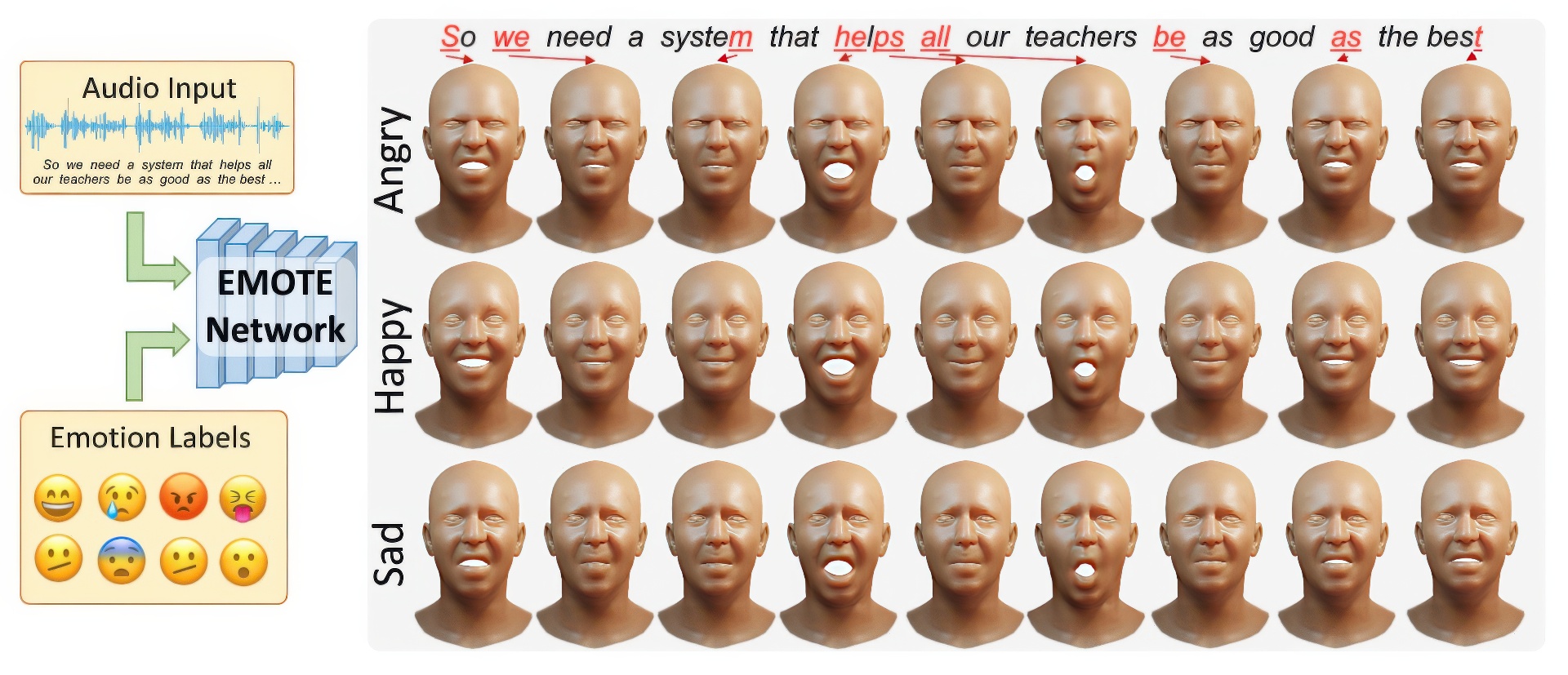

To be widely adopted, 3D facial avatars need to be animated easily, realistically, and directly, from speech signals. While the best recent methods generate 3D animations that are synchronized with the input audio, they largely ignore the impact of emotions on facial expressions. Instead, their focus is on modeling the correlations between speech and facial motion, resulting in animations that are unemotional or do not match the input emotion. We observe that there are two contributing factors resulting in facial animation – the speech and the emotion. We exploit these insights in EMOTE (Expressive Model Optimized for Talking with Emotion), which generates 3D talking head avatars that maintain lip sync while enabling explicit control over the expression of emotion. Due to the absence of high-quality aligned emotional 3D face datasets with speech, EMOTE is trained from an emotional video dataset (i.e., MEAD). To achieve this, we match speech-content between generated sequences and target videos differently from emotion content. Specifically, we train EMOTE with additional supervision in the form of a lip-reading objective to preserve the speech-dependent content (spatially local and high temporal frequency), while utilizing emotion supervision on a sequence-level (spatially global and low frequency). Furthermore, we employ a content-emotion exchange mechanism in order to supervise different emotion on the same audio, while maintaining the lip motion synchronized with the speech. To employ deep perceptual losses without getting undesirable artifacts, we devise a motion prior in form of a temporal VAE. Extensive qualitative, quantitative, and perceptual evaluations demonstrate that EMOTE produces state-of-the-art speech-driven facial animations, with lip sync on par with the best methods while offering additional, high-quality emotional control.

References:

[1]

Triantafyllos Afouras, Joon Son Chung, Andrew W. Senior, Oriol Vinyals, and Andrew Zisserman. 2022. Deep Audio-Visual Speech Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 44, 12 (2022), 8717–8727. https://doi.org/10.1109/TPAMI.2018.2889052

[2]

Triantafyllos Afouras, Joon Son Chung, and Andrew Zisserman. 2018. LRS3-TED: a large-scale dataset for visual speech recognition. CoRR abs/1809.00496 (2018). arXiv:1809.00496http://arxiv.org/abs/1809.00496

[3]

Hadar Averbuch-Elor, Daniel Cohen-Or, Johannes Kopf, and Michael F. Cohen. 2017. Bringing portraits to life. ACM Trans. Graph. 36, 6 (2017), 196:1–196:13. https://doi.org/10.1145/3130800.3130818

[4]

Alexei Baevski, Yuhao Zhou, Abdelrahman Mohamed, and Michael Auli. 2020. wav2vec 2.0: A framework for self-supervised learning of speech representations. Advances in Neural Information Processing Systems 33 (2020), 12449–12460.

[5]

AmirAli Bagher Zadeh, Paul Pu Liang, Soujanya Poria, Erik Cambria, and Louis-Philippe Morency. 2018. Multimodal Language Analysis in the Wild: CMU-MOSEI Dataset and Interpretable Dynamic Fusion Graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Melbourne, Australia, 2236–2246. https://doi.org/10.18653/v1/P18-1208

[6]

Adrian Bulat and Georgios Tzimiropoulos. 2017. How Far are We from Solving the 2D & 3D Face Alignment Problem? (and a Dataset of 230, 000 3D Facial Landmarks). In IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, October 22-29, 2017. IEEE Computer Society, 1021–1030. https://doi.org/10.1109/ICCV.2017.116

[7]

Houwei Cao, David Cooper, Michael Keutmann, Ruben Gur, Ani Nenkova, and Ragini Verma. 2014. CREMA-D: Crowd-sourced emotional multimodal actors dataset. IEEE transactions on affective computing 5 (10 2014), 377–390. https://doi.org/10.1109/TAFFC.2014.2336244

[8]

Yong Cao, Wen C. Tien, Petros Faloutsos, and Frédéric Pighin. 2005. Expressive Speech-Driven Facial Animation. ACM Trans. Graph. 24, 4 (oct 2005), 1283–1302. https://doi.org/10.1145/1095878.1095881

[9]

Prashanth Chandran, Gaspard Zoss, Markus H. Gross, Paulo F. U. Gotardo, and Derek Bradley. 2022. Facial Animation with Disentangled Identity and Motion using Transformers. Comput. Graph. Forum 41, 8, 267–277. https://doi.org/10.1111/cgf.14641

[10]

Lele Chen, Ross K. Maddox, Zhiyao Duan, and Chenliang Xu. 2019. Hierarchical Cross-Modal Talking Face Generation With Dynamic Pixel-Wise Loss. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019. Computer Vision Foundation / IEEE, 7832–7841. https://doi.org/10.1109/CVPR.2019.00802

[11]

Kun Cheng, Xiaodong Cun, Yong Zhang, Menghan Xia, Fei Yin, Mingrui Zhu, Xuan Wang, Jue Wang, and Nannan Wang. 2022. VideoReTalking: Audio-based Lip Synchronization for Talking Head Video Editing In the Wild. In SIGGRAPH Asia 2022 Conference Papers, SA 2022, Daegu, Republic of Korea, December 6-9, 2022, Soon Ki Jung, Jehee Lee, and Adam W. Bargteil (Eds.). ACM, 30:1–30:9. https://doi.org/10.1145/3550469.3555399

[12]

Yunjey Choi, Youngjung Uh, Jaejun Yoo, and Jung-Woo Ha. 2020. StarGAN v2: Diverse Image Synthesis for Multiple Domains. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020. Computer Vision Foundation / IEEE, 8185–8194. https://doi.org/10.1109/CVPR42600.2020.00821

[13]

Joon Son Chung, Arsha Nagrani, and Andrew Zisserman. 2018. VoxCeleb2: Deep Speaker Recognition. In Interspeech 2018, 19th Annual Conference of the International Speech Communication Association, Hyderabad, India, 2-6 September 2018, B. Yegnanarayana (Ed.). ISCA, 1086–1090. https://doi.org/10.21437/Interspeech.2018-1929

[14]

Michael M. Cohen, Rashid Clark, and Dominic W. Massaro. 2001. Animated speech: research progress and applications. In Auditory-Visual Speech Processing, AVSP 2001, Aalborg, Denmark, September 7-9, 2001, Dominic W. Massaro, Joanna Light, and Kristin Geraci (Eds.). ISCA, 200. http://www.isca-speech.org/archive_open/avsp01/av01_200c.html

[15]

Daniel Cudeiro, Timo Bolkart, Cassidy Laidlaw, Anurag Ranjan, and Michael J. Black. 2019. Capture, Learning, and Synthesis of 3D Speaking Styles. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019. Computer Vision Foundation / IEEE, 10101–10111. https://doi.org/10.1109/CVPR.2019.01034

[16]

Radek Danecek, Michael J. Black, and Timo Bolkart. 2022. EMOCA: Emotion Driven Monocular Face Capture and Animation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022. IEEE, 20279–20290. https://doi.org/10.1109/CVPR52688.2022.01967

[17]

Stefano d’Apolito, Danda Pani Paudel, Zhiwu Huang, Andres Romero, and Luc Van Gool. 2021. GANmut: Learning Interpretable Conditional Space for Gamut of Emotions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 568–577.

[18]

Yu Deng, Jiaolong Yang, Sicheng Xu, Dong Chen, Yunde Jia, and Xin Tong. 2019. Accurate 3D Face Reconstruction With Weakly-Supervised Learning: From Single Image to Image Set. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2019, Long Beach, CA, USA, June 16-20, 2019. Computer Vision Foundation / IEEE, 285–295. https://doi.org/10.1109/CVPRW.2019.00038

[19]

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. (2019), 4171–4186. https://doi.org/10.18653/v1/n19-1423

[20]

Hui Ding, Kumar Sricharan, and Rama Chellappa. 2018. ExprGAN: Facial Expression Editing With Controllable Expression Intensity. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, Louisiana, USA, February 2-7, 2018, Sheila A. McIlraith and Kilian Q. Weinberger (Eds.). AAAI Press, 6781–6788. https://doi.org/10.1609/aaai.v32i1.12277

[21]

Pif Edwards, Chris Landreth, Eugene Fiume, and Karan Singh. 2016. JALI: An Animator-Centric Viseme Model for Expressive Lip Synchronization. ACM Trans. Graph. 35, 4, Article 127 (jul 2016), 11 pages. https://doi.org/10.1145/2897824.2925984

[22]

Pif Edwards, Chris Landreth, Mateusz Popławski, Robert Malinowski, Sarah Watling, Eugene Fiume, and Karan Singh. 2020. JALI-Driven Expressive Facial Animation and Multilingual Speech in Cyberpunk 2077. In ACM SIGGRAPH 2020 Talks (Virtual Event, USA) (SIGGRAPH ’20). Association for Computing Machinery, New York, NY, USA, Article 60, 2 pages. https://doi.org/10.1145/3388767.3407339

[23]

Bernhard Egger, William A. P. Smith, Ayush Tewari, Stefanie Wuhrer, Michael Zollhöfer, Thabo Beeler, Florian Bernard, Timo Bolkart, Adam Kortylewski, Sami Romdhani, Christian Theobalt, Volker Blanz, and Thomas Vetter. 2020. 3D Morphable Face Models – Past, Present, and Future. ACM Trans. Graph. 39, 5 (2020), 157:1–157:38. https://doi.org/10.1145/3395208

[24]

Yingruo Fan, Zhaojiang Lin, Jun Saito, Wenping Wang, and Taku Komura. 2022. FaceFormer: Speech-Driven 3D Facial Animation with Transformers. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022. IEEE, 18749–18758. https://doi.org/10.1109/CVPR52688.2022.01821

[25]

G. Fanelli, J. Gall, H. Romsdorfer, T. Weise, and L. Van Gool. 2010. A 3-D Audio-Visual Corpus of Affective Communication. IEEE Transactions on Multimedia 12, 6 (October 2010), 591 – 598.

[26]

Yao Feng, Haiwen Feng, Michael J. Black, and Timo Bolkart. 2021. Learning an Animatable Detailed 3D Face Model from In-the-Wild Images. Transactions on Graphics, (Proc. SIGGRAPH) 40, 4 (2021), 88:1–88:13.

[27]

Panagiotis P. Filntisis, George Retsinas, Foivos Paraperas-Papantoniou, Athanasios Katsamanis, Anastasios Roussos, and Petros Maragos. 2022. Visual Speech-Aware Perceptual 3D Facial Expression Reconstruction from Videos.

[28]

Kyle Genova, Forrester Cole, Aaron Maschinot, Aaron Sarna, Daniel Vlasic, and William T. Freeman. 2018. Unsupervised Training for 3D Morphable Model Regression. In 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018. Computer Vision Foundation / IEEE Computer Society, 8377–8386. https://doi.org/10.1109/CVPR.2018.00874

[29]

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep Residual Learning for Image Recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016. IEEE Computer Society, 770–778. https://doi.org/10.1109/CVPR.2016.90

[30]

Xinya Ji, Hang Zhou, Kaisiyuan Wang, Wayne Wu, Chen Change Loy, Xun Cao, and Feng Xu. 2021. Audio-Driven Emotional Video Portraits. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, virtual, June 19-25, 2021. Computer Vision Foundation / IEEE, 14080–14089. https://doi.org/10.1109/CVPR46437.2021.01386

[31]

Tero Karras, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen. 2017. Audio-driven facial animation by joint end-to-end learning of pose and emotion. ACM Transactions on Graphics (TOG) 36, 4 (2017), 1–12.

[32]

Hyeongwoo Kim, Mohamed Elgharib, Hans-Peter Zollöfer, Michael Seidel, Thabo Beeler, Christian Richardt, and Christian Theobalt. 2019. Neural Style-Preserving Visual Dubbing. ACM Transactions on Graphics (TOG) 38, 6 (2019), 178:1–13.

[33]

Diederik P. Kingma and Jimmy Ba. 2015. Adam: A Method for Stochastic Optimization. In 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Yoshua Bengio and Yann LeCun (Eds.). http://arxiv.org/abs/1412.6980

[34]

Diederik P. Kingma and Max Welling. 2014. Auto-Encoding Variational Bayes. (2014). http://arxiv.org/abs/1312.6114

[35]

Tianye Li, Timo Bolkart, Michael. J. Black, Hao Li, and Javier Romero. 2017. Learning a model of facial shape and expression from 4D scans. Transactions on Graphics, (Proc. SIGGRAPH Asia) 36, 6 (2017), 194:1–194:17.

[36]

Alexandra Lindt, Pablo V. A. Barros, Henrique Siqueira, and Stefan Wermter. 2020. Facial Expression Editing with Continuous Emotion Labels. CoRR abs/2006.12210. arXiv:2006.12210https://arxiv.org/abs/2006.12210

[37]

Steven R Livingstone and Frank A Russo. 2018. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PloS one 13, 5 (2018), e0196391.

[38]

Camillo Lugaresi, Jiuqiang Tang, Hadon Nash, Chris McClanahan, Esha Uboweja, Michael Hays, Fan Zhang, Chuo-Ling Chang, Ming Guang Yong, Juhyun Lee, Wan-Teh Chang, Wei Hua, Manfred Georg, and Matthias Grundmann. 2019. MediaPipe: A Framework for Building Perception Pipelines. CoRR abs/1906.08172 (2019). arXiv:1906.08172http://arxiv.org/abs/1906.08172

[39]

Ali Mollahosseini, Behzad Hasani, and Mohammad H Mahoor. 2017. Affectnet: A database for facial expression, valence, and arousal computing in the wild. IEEE Transactions on Affective Computing 10, 1 (2017), 18–31.

[40]

Arsha Nagrani, Joon Son Chung, and Andrew Zisserman. 2017. VoxCeleb: A Large-Scale Speaker Identification Dataset. (2017), 2616–2620. https://doi.org/10.21437/Interspeech.2017-950

[41]

Evonne Ng, Hanbyul Joo, Liwen Hu, Hao Li, Trevor Darrell, Angjoo Kanazawa, and Shiry Ginosar. 2022. Learning to Listen: Modeling Non-Deterministic Dyadic Facial Motion. (2022), 20363–20373. https://doi.org/10.1109/CVPR52688.2022.01975

[42]

Foivos Paraperas Papantoniou, Panagiotis Paraskevas Filntisis, Petros Maragos, and Anastasios Roussos. 2022. Neural Emotion Director: Speech-preserving semantic control of facial expressions in “in-the-wild” videos. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022. IEEE, 18759–18768. https://doi.org/10.1109/CVPR52688.2022.01822

[43]

Ziqiao Peng, Haoyu Wu, Zhenbo Song, Hao Xu, Xiangyu Zhu, Hongyan Liu, Jun He, and Zhaoxin Fan. 2023. EmoTalk: Speech-driven emotional disentanglement for 3D face animation. CoRR abs/2303.11089 (2023). https://doi.org/10.48550/arXiv.2303.11089 arXiv:2303.11089

[44]

Hai Xuan Pham, Samuel Cheung, and Vladimir Pavlovic. 2017a. Speech-Driven 3D Facial Animation with Implicit Emotional Awareness: A Deep Learning Approach. In 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2017, Honolulu, HI, USA, July 21-26, 2017. IEEE Computer Society, 2328–2336. https://doi.org/10.1109/CVPRW.2017.287

[45]

Hai Xuan Pham, Yuting Wang, and Vladimir Pavlovic. 2017b. End-to-end Learning for 3D Facial Animation from Raw Waveforms of Speech. CoRR abs/1710.00920 (2017). arXiv:1710.00920http://arxiv.org/abs/1710.00920

[46]

Soujanya Poria, Devamanyu Hazarika, Navonil Majumder, Gautam Naik, Erik Cambria, and Rada Mihalcea. 2019. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, Anna Korhonen, David R. Traum, and Lluís Màrquez (Eds.). Association for Computational Linguistics, 527–536. https://doi.org/10.18653/v1/p19-1050

[47]

K R Prajwal, Rudrabha Mukhopadhyay, Vinay P. Namboodiri, and C.V. Jawahar. 2020. A Lip Sync Expert Is All You Need for Speech to Lip Generation In the Wild. In Proceedings of the 28th ACM International Conference on Multimedia (Seattle, WA, USA) (MM ’20). Association for Computing Machinery, New York, NY, USA, 484–492. https://doi.org/10.1145/3394171.3413532

[48]

Nikhila Ravi, Jeremy Reizenstein, David Novotný, Taylor Gordon, Wan-Yen Lo, Justin Johnson, and Georgia Gkioxari. 2020. Accelerating 3D Deep Learning with PyTorch3D. CoRR abs/2007.08501 (2020). arXiv:2007.08501https://arxiv.org/abs/2007.08501

[49]

Alexander Richard, Michael Zollhöfer, Yandong Wen, Fernando De la Torre, and Yaser Sheikh. 2021. MeshTalk: 3D Face Animation from Speech using Cross-Modality Disentanglement. In 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, October 10-17, 2021. IEEE, 1153–1162. https://doi.org/10.1109/ICCV48922.2021.00121

[50]

Andreas Rössler, Davide Cozzolino, Luisa Verdoliva, Christian Riess, Justus Thies, and Matthias Nießner. 2018. FaceForensics: A Large-scale Video Dataset for Forgery Detection in Human Faces. CoRR abs/1803.09179 (2018). arXiv:1803.09179http://arxiv.org/abs/1803.09179

[51]

K. Ruhland, C. E. Peters, S. Andrist, J. B. Badler, N. I. Badler, M. Gleicher, B. Mutlu, and R. McDonnell. 2015. A Review of Eye Gaze in Virtual Agents, Social Robotics and HCI: Behaviour Generation, User Interaction and Perception. Comput. Graph. Forum 34, 6 (sep 2015), 299–326. https://doi.org/10.1111/cgf.12603

[52]

Soubhik Sanyal, Timo Bolkart, Haiwen Feng, and Michael J. Black. 2019. Learning to Regress 3D Face Shape and Expression From an Image Without 3D Supervision. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019. Computer Vision Foundation / IEEE, 7763–7772. https://doi.org/10.1109/CVPR.2019.00795

[53]

Jiaxiang Shang, Tianwei Shen, Shiwei Li, Lei Zhou, Mingmin Zhen, Tian Fang, and Long Quan. 2020. Self-Supervised Monocular 3D Face Reconstruction by Occlusion-Aware Multi-view Geometry Consistency. In Computer Vision – ECCV 2020 – 16th European Conference, Glasgow, UK, August 23-28, 2020, Proceedings, Part XV(Lecture Notes in Computer Science, Vol. 12360), Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer, 53–70. https://doi.org/10.1007/978-3-030-58555-6_4

[54]

Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. 2017. Synthesizing Obama: learning lip sync from audio. ACM Trans. Graph. 36, 4 (2017), 95:1–95:13. https://doi.org/10.1145/3072959.3073640

[55]

Sarah L. Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica K. Hodgins, and Iain A. Matthews. 2017. A deep learning approach for generalized speech animation. ACM Trans. Graph. 36, 4 (2017), 93:1–93:11. https://doi.org/10.1145/3072959.3073699

[56]

Sarah L. Taylor, Moshe Mahler, Barry-John Theobald, and Iain A. Matthews. 2012. Dynamic Units of Visual Speech. In Proceedings of the 2012 Eurographics/ACM SIGGRAPH Symposium on Computer Animation, SCA 2012, Lausanne, Switzerland, 2012, Jehee Lee and Paul G. Kry (Eds.). Eurographics Association, 275–284. https://doi.org/10.2312/SCA/SCA12/275-284

[57]

Ayush Tewari, Florian Bernard, Pablo Garrido, Gaurav Bharaj, Mohamed Elgharib, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2019. FML: Face Model Learning From Videos. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019. Computer Vision Foundation / IEEE, 10812–10822. https://doi.org/10.1109/CVPR.2019.01107

[58]

Ayush Tewari, Michael Zollhöfer, Pablo Garrido, Florian Bernard, Hyeongwoo Kim, Patrick Pérez, and Christian Theobalt. 2018. Self-Supervised Multi-Level Face Model Learning for Monocular Reconstruction at Over 250 Hz. In 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018. Computer Vision Foundation / IEEE Computer Society, 2549–2559. https://doi.org/10.1109/CVPR.2018.00270

[59]

Ayush Tewari, Michael Zollhöfer, Hyeongwoo Kim, Pablo Garrido, Florian Bernard, Patrick Pérez, and Christian Theobalt. 2017. MoFA: Model-Based Deep Convolutional Face Autoencoder for Unsupervised Monocular Reconstruction. In IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, October 22-29, 2017. IEEE Computer Society, 3735–3744. https://doi.org/10.1109/ICCV.2017.401

[60]

Balamurugan Thambiraja, Ikhsanul Habibie, Sadegh Aliakbarian, Darren Cosker, Christian Theobalt, and Justus Thies. 2023. Imitator: Personalized Speech-driven 3D Facial Animation. https://doi.org/10.48550/ARXIV.2301.00023

[61]

Justus Thies, Mohamed Elgharib, Ayush Tewari, Christian Theobalt, and Matthias Nießner. 2020. Neural Voice Puppetry: Audio-Driven Facial Reenactment. 12361 (2020), 716–731. https://doi.org/10.1007/978-3-030-58517-4_42

[62]

Soumya Tripathy, Juho Kannala, and Esa Rahtu. 2020. ICface: Interpretable and Controllable Face Reenactment Using GANs. (2020), 3374–3383. https://doi.org/10.1109/WACV45572.2020.9093474

[63]

Soumya Tripathy, Juho Kannala, and Esa Rahtu. 2021. FACEGAN: Facial Attribute Controllable rEenactment GAN. In IEEE Winter Conference on Applications of Computer Vision, WACV 2021, Waikoloa, HI, USA, January 3-8, 2021. IEEE, 1328–1337. https://doi.org/10.1109/WACV48630.2021.00137

[64]

Kaisiyuan Wang, Qianyi Wu, Linsen Song, Zhuoqian Yang, Wayne Wu, Chen Qian, Ran He, Yu Qiao, and Chen Change Loy. 2020. MEAD: A Large-Scale Audio-Visual Dataset for Emotional Talking-Face Generation. In Computer Vision – ECCV 2020 – 16th European Conference, Glasgow, UK, August 23-28, 2020, Proceedings, Part XXI(Lecture Notes in Computer Science, Vol. 12366), Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer, 700–717. https://doi.org/10.1007/978-3-030-58589-1_42

[65]

Cheng-hsin Wuu, Ningyuan Zheng, Scott Ardisson, Rohan Bali, Danielle Belko, Eric Brockmeyer, Lucas Evans, Timothy Godisart, Hyowon Ha, Alexander Hypes, Taylor Koska, Steven Krenn, Stephen Lombardi, Xiaomin Luo, Kevyn McPhail, Laura Millerschoen, Michal Perdoch, Mark Pitts, Alexander Richard, Jason M. Saragih, Junko Saragih, Takaaki Shiratori, Tomas Simon, Matt Stewart, Autumn Trimble, Xinshuo Weng, David Whitewolf, Chenglei Wu, Shoou-I Yu, and Yaser Sheikh. 2022. Multiface: A Dataset for Neural Face Rendering. CoRR abs/2207.11243. https://doi.org/10.48550/arXiv.2207.11243 arXiv:2207.11243

[66]

Jinbo Xing, Menghan Xia, Yuechen Zhang, Xiaodong Cun, Jue Wang, and Tien-Tsin Wong. 2023. CodeTalker: Speech-Driven 3D Facial Animation with Discrete Motion Prior. (2023), 12780–12790. https://doi.org/10.1109/CVPR52729.2023.01229

[67]

Yuyu Xu, Andrew W. Feng, Stacy Marsella, and Ari Shapiro. 2013. A Practical and Configurable Lip Sync Method for Games. In Motion in Games, MIG ’13, Dublin, Ireland, November 6-8, 2013, Rachel McDonnell, Nathan R. Sturtevant, and Victor B. Zordan (Eds.). ACM, 131–140. https://doi.org/10.1145/2522628.2522904

[68]

Haotian Yang, Hao Zhu, Yanru Wang, Mingkai Huang, Qiu Shen, Ruigang Yang, and Xun Cao. 2020. FaceScape: A Large-Scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020. Computer Vision Foundation / IEEE, 598–607. https://doi.org/10.1109/CVPR42600.2020.00068

[69]

Amir Zadeh, Rowan Zellers, Eli Pincus, and Louis-Philippe Morency. 2016. MOSI: Multimodal Corpus of Sentiment Intensity and Subjectivity Analysis in Online Opinion Videos. CoRR abs/1606.06259 (2016). arXiv:1606.06259http://arxiv.org/abs/1606.06259

[70]

Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, and Victor S. Lempitsky. 2019. Few-Shot Adversarial Learning of Realistic Neural Talking Head Models. In 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Korea (South), October 27 – November 2, 2019. IEEE, 9458–9467. https://doi.org/10.1109/ICCV.2019.00955

[71]

Jie Zhang and Robert B. Fisher. 2019. 3D Visual Passcode: Speech-Driven 3D Facial Dynamics for Behaviometrics. Signal Process. 160, C (jul 2019), 164–177. https://doi.org/10.1016/j.sigpro.2019.02.025

[72]

Yang Zhou, Zhan Xu, Chris Landreth, Evangelos Kalogerakis, Subhransu Maji, and Karan Singh. 2018. Visemenet: Audio-Driven Animator-Centric Speech Animation. ACM Trans. Graph. 37, 4, Article 161 (jul 2018), 10 pages. https://doi.org/10.1145/3197517.3201292

[73]

Hao Zhu, Wayne Wu, Wentao Zhu, Liming Jiang, Siwei Tang, Li Zhang, Ziwei Liu, and Chen Change Loy. 2022. CelebV-HQ: A Large-Scale Video Facial Attributes Dataset. 13667 (2022), 650–667. https://doi.org/10.1007/978-3-031-20071-7_38

[74]

Wojciech Zielonka, Timo Bolkart, and Justus Thies. 2022. Towards Metrical Reconstruction of Human Faces. In Computer Vision – ECCV 2022 – 17th European Conference, Tel Aviv, Israel, October 23-27, 2022, Proceedings, Part XIII(Lecture Notes in Computer Science, Vol. 13673), Shai Avidan, Gabriel J. Brostow, Moustapha Cissé, Giovanni Maria Farinella, and Tal Hassner (Eds.). Springer, 250–269. https://doi.org/10.1007/978-3-031-19778-9_15

[75]

Michael Zollhöfer, Justus Thies, Pablo Garrido, Derek Bradley, Thabo Beeler, Patrick Pérez, Marc Stamminger, Matthias Nießner, and Christian Theobalt. 2018. State of the Art on Monocular 3D Face Reconstruction, Tracking, and Applications. Comput. Graph. Forum 37, 2 (2018), 523–550. https://doi.org/10.1111/cgf.13382