“SAILOR: Synergizing Radiance and Occupancy Fields for Live Human Performance Capture” by Dong, Xu, Gao, Sun, Bao, et al. …

Title:

- SAILOR: Synergizing Radiance and Occupancy Fields for Live Human Performance Capture

Session/Category Title: Full-Body Avatar

Abstract:

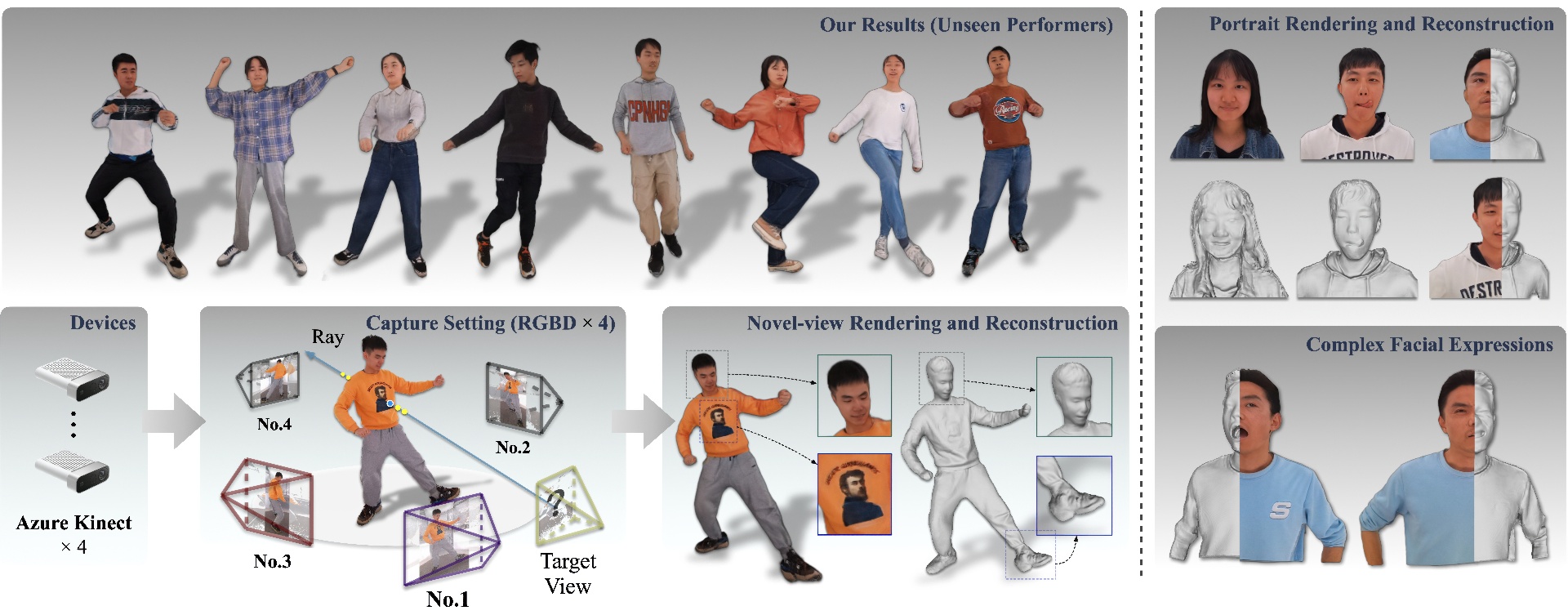

Immersive user experiences in live VR/AR performances require fast and accurate free-view rendering of the performers. Existing methods are mainly based on Pixel-aligned Implicit Functions (PIFu) or Neural Radiance Fields (NeRF). However, PIFu-based methods usually fail to produce photorealistic view-dependent textures, while NeRF-based methods typically lack local geometric accuracy and are computationally heavy (e.g., they require dense sampling of 3D points, additional fine-tuning, or pose estimation). In this work, we propose a novel generalizable method, named SAILOR, to create photorealistic human free-view videos from very sparse RGBD streams with low latency. To produce photorealistic view-dependent textures while preserving locally accurate geometry, we integrate PIFu and NeRF so that they work synergistically: PIFu is conditioned on depth, and view-dependent textures are then rendered through NeRF. Specifically, we propose a novel network, named SRONet, for this hybrid representation to reconstruct and render live free-view videos. SRONet can handle unseen performers without fine-tuning. Both geometric and colorimetric supervision signals are exploited to enhance SRONet's ability to capture high-quality details. In addition, SAILOR incorporates a neural blending-based ray interpolation scheme for fast upsampling, a tree-based data structure for efficient point sampling, and a parallel computing pipeline for acceleration. To evaluate the rendering performance, we construct a real-world RGBD benchmark captured from 40 performers. Experimental results show that SAILOR outperforms existing human reconstruction and performance capture methods.
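The general idea of pairing an occupancy field (for geometry) with per-sample colors (for appearance) can be illustrated with a small sketch. This is a hypothetical simplification and not SRONet's actual formulation. It makes two assumptions. First, points are sampled only in a narrow band around the sensor depth along each ray; this stands in for the paper's tree-based sampling structure, which is not reproduced here. Second, each sample's predicted occupancy is used directly as its opacity when alpha-compositing the colors front to back, a compositing rule used by some occupancy-based neural renderers. The function names `sample_near_depth` and `composite_occupancy` are hypothetical, and the network that would predict occupancy and color is omitted.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def sample_near_depth(depth: float, half_width: float, n: int) -> List[float]:
    """Hypothetical depth-guided sampler: return n evenly spaced ray distances
    in [depth - half_width, depth + half_width] instead of sampling the whole ray."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if n == 1:
        return [depth]
    step = 2.0 * half_width / (n - 1)
    return [depth - half_width + i * step for i in range(n)]


def composite_occupancy(
    occupancy: Sequence[float], colors: Sequence[Color]
) -> Tuple[Color, float]:
    """Alpha-composite per-sample colors front to back, using each sample's
    occupancy in [0, 1] as its opacity: w_i = o_i * prod_{j<i} (1 - o_j).
    Returns the pixel color and the accumulated opacity (sum of weights)."""
    if len(occupancy) != len(colors):
        raise ValueError("occupancy and colors must have the same length")
    transmittance = 1.0  # fraction of the ray not yet blocked by earlier samples
    rgb = [0.0, 0.0, 0.0]
    accumulated = 0.0
    for o, c in zip(occupancy, colors):
        o = min(max(o, 0.0), 1.0)  # clamp to a valid opacity
        w = o * transmittance  # contribution weight of this sample
        for k in range(3):
            rgb[k] += w * c[k]
        accumulated += w
        transmittance *= 1.0 - o
    return (rgb[0], rgb[1], rgb[2]), accumulated


if __name__ == "__main__":
    ts = sample_near_depth(2.0, 0.1, 5)
    print([round(t, 3) for t in ts])  # [1.9, 1.95, 2.0, 2.05, 2.1]
    occ = [0.0, 0.5, 1.0, 1.0]
    cols = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]
    print(composite_occupancy(occ, cols))  # ((0.0, 0.5, 0.5), 1.0)
```

Because a fully occupied sample drives the transmittance to zero, samples behind the surface contribute nothing. In this sketch, the occupancy field determines where the surface is and the per-sample colors determine what it looks like. The abstract does not state whether SRONet weights its samples in exactly this way.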