“TM-NET: deep generative networks for textured meshes” by Gao, Wu, Yuan, Lin, Lai, et al. …

Title: TM-NET: deep generative networks for textured meshes

Session/Category Title: Surface Parameterization and Texturing

Abstract:

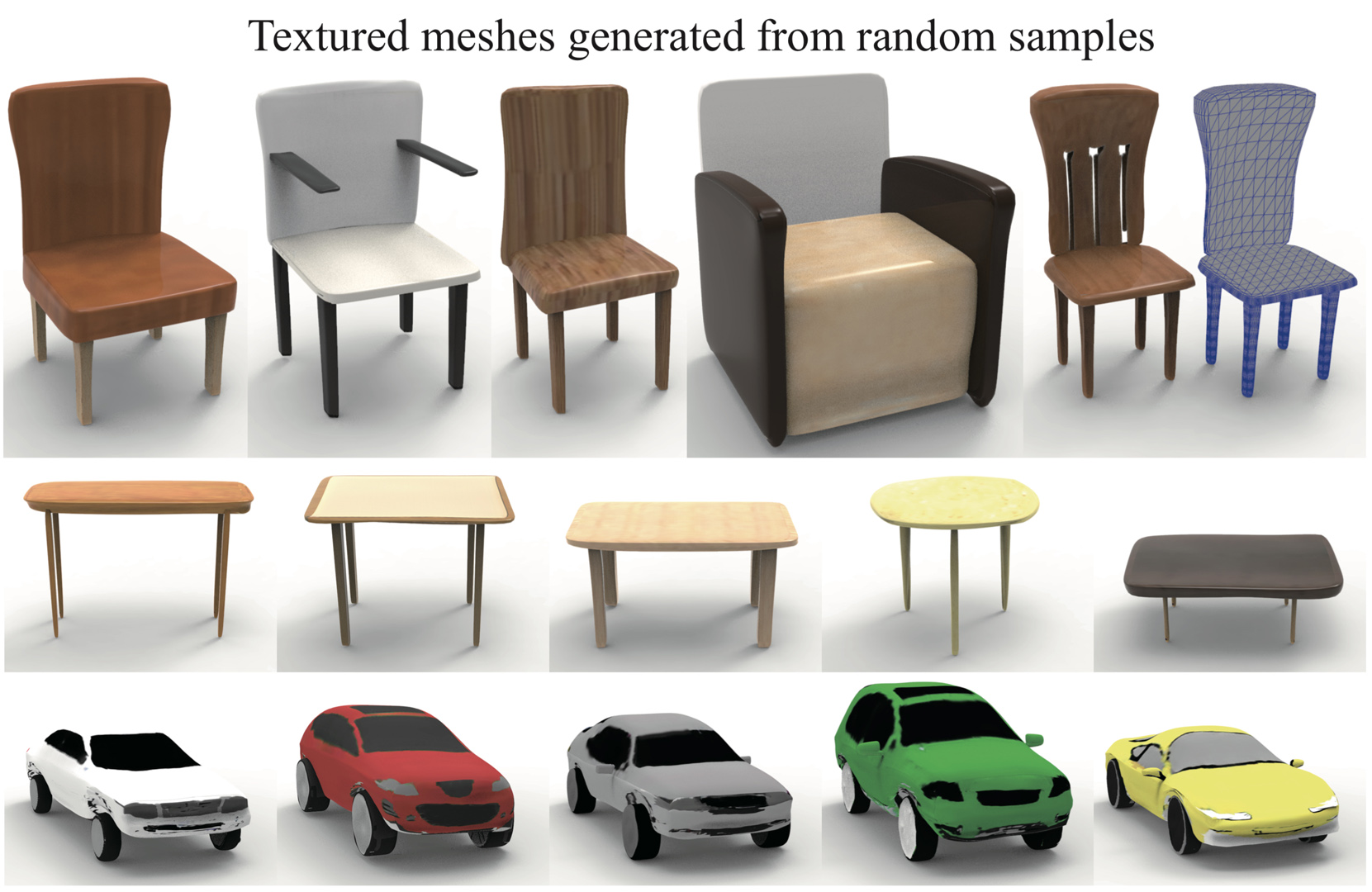

We introduce TM-NET, a novel deep generative model for synthesizing textured meshes in a part-aware manner. Once trained, the network can generate novel textured meshes from scratch or predict textures for a given 3D mesh, without image guidance. Plausible and diverse textures can be generated for the same mesh part, while texture compatibility between parts in the same shape is achieved via conditional generation. Specifically, our method produces texture maps for individual shape parts, each represented as a deformable box, leading to a natural UV map with limited distortion. The network separately embeds part geometry (via a PartVAE) and part texture (via a TextureVAE) into their respective latent spaces, so as to facilitate learning texture probability distributions conditioned on geometry. We introduce a conditional autoregressive model for texture generation, which can be conditioned on both part geometry and textures already generated for other parts to achieve texture compatibility. To produce high-frequency texture details, our TextureVAE operates in a high-dimensional latent space via dictionary-based vector quantization. We also exploit transparency in the texture as an effective means of modeling complex shape structures, including topological details. Extensive experiments demonstrate the plausibility, quality, and diversity of the textures and geometries generated by our network, and show that it avoids the inconsistency issues common to novel view synthesis methods.
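Dictionary-based vector quantization, in the VQ-VAE style, replaces each continuous latent vector with its nearest entry in a codebook (the "dictionary"); the resulting integer indices are the kind of discrete sequence that an autoregressive prior can then model. The sketch below shows only that nearest-entry lookup, in plain Python, assuming a small hand-written codebook. The names `quantize` and `squared_distance` are hypothetical, and this is not TM-NET's implementation: codebook learning, training losses, and gradient handling are all omitted.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    latents: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[int], List[List[float]]]:
    """Snap each latent vector to its nearest codebook entry.

    Returns the discrete indices (what an autoregressive prior would
    model) and the quantized vectors (what a decoder would consume).
    """
    indices: List[int] = []
    quantized: List[List[float]] = []
    for z in latents:
        # Index of the codebook entry closest to z (ties go to the lowest index).
        k = min(range(len(codebook)), key=lambda i: squared_distance(z, codebook[i]))
        indices.append(k)
        quantized.append(list(codebook[k]))
    return indices, quantized


# Usage: three 2-D latents, each lying close to a different codebook entry.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]
latents = [[0.1, -0.1], [0.9, 1.2], [-0.8, 0.4]]
idx, q = quantize(latents, codebook)
print(idx)  # [0, 1, 2]
```

Because every latent is reduced to an index into a finite dictionary, generating a texture becomes a matter of predicting a sequence of indices. This is what makes an autoregressive, conditionally sampled prior a natural fit for the quantized latent space.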
