“FreeStyleGAN: free-view editable portrait rendering with the camera manifold” by Leimkühler and Drettakis

Conference:

Type(s):

Title:

- FreeStyleGAN: free-view editable portrait rendering with the camera manifold

Session/Category Title: Facial Animation and Rendering

Presenter(s)/Author(s):

Abstract:

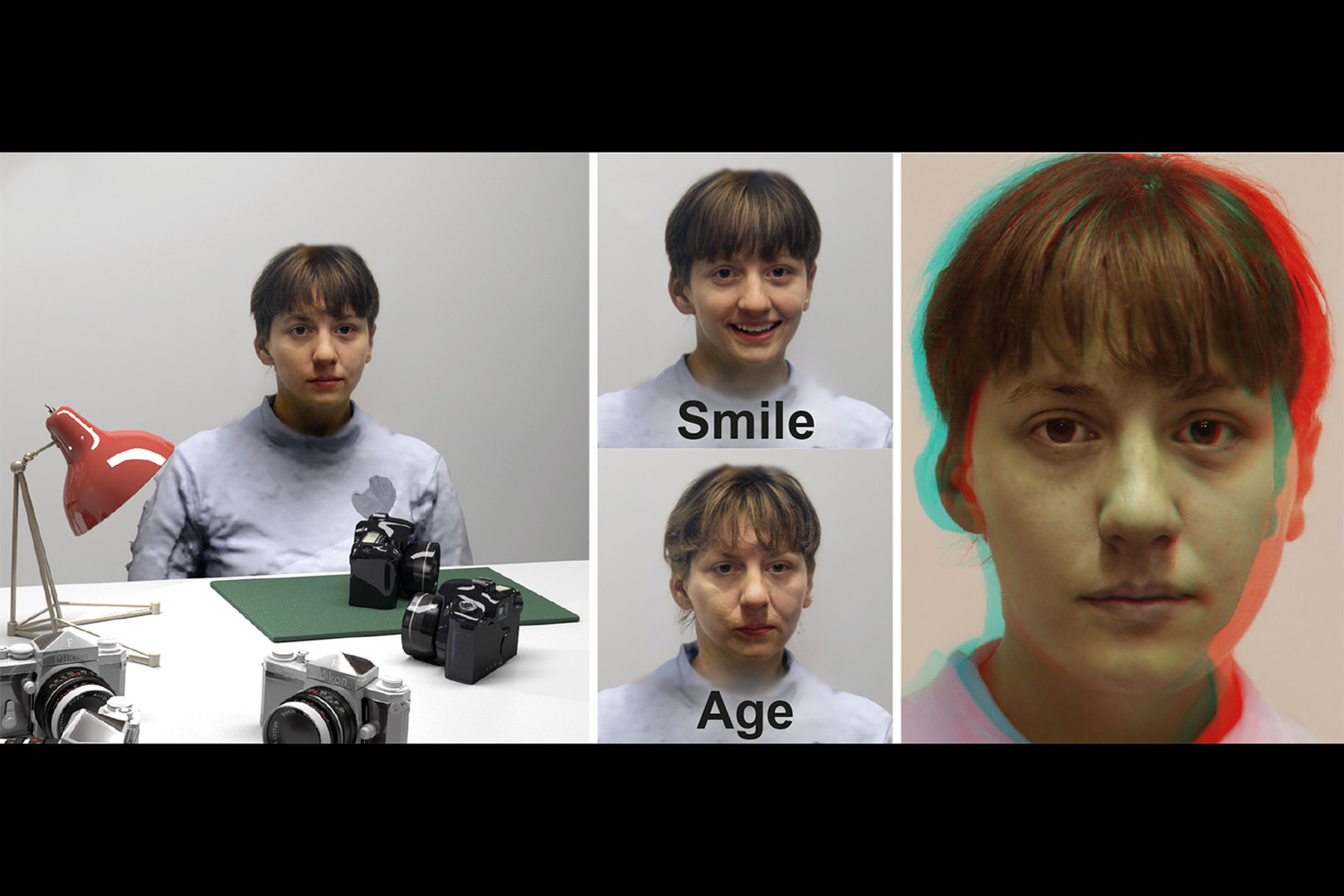

Current Generative Adversarial Networks (GANs) produce photorealistic renderings of portrait images. Embedding real images into the latent space of such models enables high-level image editing. While recent methods provide considerable semantic control over the (re-)generated images, they can only generate a limited set of viewpoints and cannot explicitly control the camera. Such 3D camera control is required for 3D virtual and mixed reality applications. In our solution, we use a few images of a face to perform 3D reconstruction, and we introduce the notion of the GAN camera manifold, the key element allowing us to precisely define the range of images that the GAN can reproduce in a stable manner. We train a small face-specific neural implicit representation network to map a captured face to this manifold and complement it with a warping scheme to obtain free-viewpoint novel-view synthesis. We show how our approach – due to its precise camera control – enables the integration of a pre-trained StyleGAN into standard 3D rendering pipelines, allowing e.g., stereo rendering or consistent insertion of faces in synthetic 3D environments. Our solution proposes the first truly free-viewpoint rendering of realistic faces at interactive rates, using only a small number of casual photos as input, while simultaneously allowing semantic editing capabilities, such as facial expression or lighting changes.

References:

1. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2019. Image2StyleGAN: How to Embed Images Into the StyleGAN Latent Space?. In ICCV. 4432–4441.

2. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. Image2StyleGAN++: How to Edit the Embedded Images?. In CVPR. 8296–8305.

3. Rameen Abdal, Peihao Zhu, Niloy Mitra, and Peter Wonka. 2021. Styleflow: Attribute-conditioned exploration of stylegan-generated images using conditional continuous normalizing flows. ACM Transactions on Graphics (TOG) (2021).

4. Mallikarjun B R, Ayush Tewari, Abdallah Dib, Tim Weyrich, Bernd Bickel, Hans-Peter Seidel, Hanspeter Pfister, Wojciech Matusik, Louis Chevallier, Mohamed Elgharib, and Christian Theobalt. 2021. PhotoApp: Photorealistic Appearance Editing of Head Portraits. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH).

5. Thabo Beeler, Bernd Bickel, Paul Beardsley, Bob Sumner, and Markus Gross. 2010. High-quality single-shot capture of facial geometry. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH). 1–9.

6. Sai Bi, Stephen Lombardi, Shunsuke Saito, Tomas Simon, Shih-En Wei, Kevyn Mcphail, Ravi Ramamoorthi, Yaser Sheikh, and Jason Saragih. 2021. Deep Relightable Appearance Models for Animatable Faces. ACM Transactions on Graphics (TOG, Proc. SIGGRAPH) (2021).

7. Volker Blanz and Thomas Vetter. 1999. A morphable model for the synthesis of 3D faces. In Proc. SIGGRAPH. 187–194.

8. Yochai Blau and Tomer Michaeli. 2018. The perception-distortion tradeoff. In CVPR. 6228–6237.

9. J. Blinn. 1988. Where am I? What am I looking at? (cinematography). IEEE Computer Graphics and Applications 8, 4 (1988), 76–81.

10. Kevin W Bowyer, Kyong Chang, and Patrick Flynn. 2006. A survey of approaches and challenges in 3D and multi-modal 3D+ 2D face recognition. Computer vision and image understanding 101, 1 (2006), 1–15.

11. Chris Buehler, Michael Bosse, Leonard McMillan, Steven Gortler, and Michael Cohen. 2001. Unstructured lumigraph rendering. In Proc. SIGGRAPH. 425–432.

12. Adrian Bulat and Georgios Tzimiropoulos. 2017. How far are we from solving the 2D & 3D Face Alignment problem? (and a dataset of 230,000 3D facial landmarks). In ICCV.

13. CapturingReality. 2016. RealityCapture. www.capturingreality.com. [accessed 20-July-2020].

14. Eric R Chan, Marco Monteiro, Petr Kellnhofer, Jiajun Wu, and Gordon Wetzstein. 2021. pi-GAN: Periodic Implicit Generative Adversarial Networks for 3D-Aware Image Synthesis. In CVPR.

15. Anpei Chen, Ruiyang Liu, Ling Xie, Zhang Chen, Hao Su, and Yu Jingyi. 2021. SofGAN: A Portrait Image Generator with Dynamic Styling. ACM Transactions on Graphics (TOG) 41, 1, Article 1 (July 2021), 26 pages.

16. Marc Christie, Patrick Olivier, and Jean-Marie Normand. 2008. Camera control in computer graphics. In Computer Graphics Forum, Vol. 27. Wiley Online Library, 2197–2218.

17. Paul Debevec, Tim Hawkins, Chris Tchou, Haarm-Pieter Duiker, Westley Sarokin, and Mark Sagar. 2000. Acquiring the reflectance field of a human face. In Proc. SIGGRAPH. 145–156.

18. Paul Debevec, Camillo Taylor, and Jitendra Malik. 1996. Modeling and rendering architecture from photographs: A hybrid geometry-and image-based approach. In Proc. SIGGRAPH. 11–20.

19. Yu Deng, Jiaolong Yang, Dong Chen, Fang Wen, and Xin Tong. 2020. Disentangled and Controllable Face Image Generation via 3D Imitative-Contrastive Learning. In CVPR. 5154–5163.

20. Bernhard Egger, William AP Smith, Ayush Tewari, Stefanie Wuhrer, Michael Zollhoefer, Thabo Beeler, Florian Bernard, Timo Bolkart, Adam Kortylewski, Sami Romdhani, et al. 2020. 3d morphable face models – past, present, and future. ACM Transactions on Graphics (TOG) 39, 5 (2020), 1–38.

21. Zeev Farbman, Raanan Fattal, and Dani Lischinski. 2011. Convolution pyramids. ACM Transactions on Graphics (TOG) 30, 6 (2011), 175.

22. Zeev Farbman, Gil Hoffer, Yaron Lipman, Daniel Cohen-Or, and Dani Lischinski. 2009. Coordinates for instant image cloning. ACM Transactions on Graphics (TOG) 28, 3 (2009), 1–9.

23. Ohad Fried, Eli Shechtman, Dan B Goldman, and Adam Finkelstein. 2016. Perspective-aware Manipulation of Portrait Photos. ACM Transactions on Graphics (TOG, Proc. SIGGRAPH) (2016).

24. Guy Gafni, Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2021. Dynamic Neural Radiance Fields for Monocular 4D Facial Avatar Reconstruction. In CVPR. 8649–8658.

25. Chen Gao, Yichang Shih, Wei-Sheng Lai, Chia-Kai Liang, and Jia-Bin Huang. 2020. Portrait Neural Radiance Fields from a Single Image. arXiv preprint arXiv:2012.05903 (2020).

26. Jiahao Geng, Tianjia Shao, Youyi Zheng, Yanlin Weng, and Kun Zhou. 2018. Warp-guided gans for single-photo facial animation. ACM Transactions on Graphics (TOG) 37, 6 (2018), 1–12.

27. Kyle Genova, Forrester Cole, Daniel Vlasic, Aaron Sarna, William T Freeman, and Thomas Funkhouser. 2019. Learning shape templates with structured implicit functions. In ICCV. 7154–7164.

28. Abhijeet Ghosh, Graham Fyffe, Borom Tunwattanapong, Jay Busch, Xueming Yu, and Paul Debevec. 2011. Multiview face capture using polarized spherical gradient illumination. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH Asia). 1–10.

29. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. In Advances in neural information processing systems. 2672–2680.

30. Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. GANSpace: Discovering Interpretable GAN Controls. In Proc. NeurIPS.

31. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel Brostow. 2018. Deep blending for free-viewpoint image-based rendering. ACM Transactions on Graphics (TOG) 37, 6 (2018), 1–15.

32. Ali Jahanian, Lucy Chai, and Phillip Isola. 2020. On the “steerability” of generative adversarial networks. In ICLR.

33. Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. 2020a. Training Generative Adversarial Networks with Limited Data. In Proc. NeurIPS.

34. Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2021. Alias-Free Generative Adversarial Networks. CoRR abs/2106.12423 (2021).

35. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In CVPR. 4401–4410.

36. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020b. Analyzing and improving the image quality of stylegan. In CVPR. 8110–8119.

37. Vahid Kazemi and Josephine Sullivan. 2014. One millisecond face alignment with an ensemble of regression trees. In CVPR. 1867–1874.

38. Zhanghan Ke, Kaican Li, Yurou Zhou, Qiuhua Wu, Xiangyu Mao, Qiong Yan, and Rynson WH Lau. 2020. Is a Green Screen Really Necessary for Real-Time Human Matting? arXiv preprint arXiv:2011.11961 (2020).

39. Hyeongwoo Kim, Pablo Garrido, Ayush Tewari, Weipeng Xu, Justus Thies, Matthias Nießner, Patrick Pérez, Christian Richardt, Michael Zollöfer, and Christian Theobalt. 2018. Deep Video Portraits. ACM Transactions on Graphics (TOG) 37, 4 (2018), 163.

40. Hans Knutsson and C-F Westin. 1993. Normalized and differential convolution. In CVPR. 515–523.

41. Zhengqi Li, Wenqi Xian, Abe Davis, and Noah Snavely. 2020. Crowdsampling the Plenoptic Function. In ECCV.

42. Christophe Lino and Marc Christie. 2012. Efficient Composition for Virtual Camera Control. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation (Lausanne, Switzerland) (SCA ’12). Eurographics Association, 65–70.

43. Christophe Lino and Marc Christie. 2015. Intuitive and efficient camera control with the toric space. ACM Transactions on Graphics (TOG) 34, 4 (2015), 1–12.

44. Stephen Lombardi, Jason Saragih, Tomas Simon, and Yaser Sheikh. 2018. Deep appearance models for face rendering. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–13.

45. Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. 2019. Neural Volumes: Learning Dynamic Renderable Volumes from Images. ACM Transactions on Graphics (TOG) 38, 4, Article 65 (July 2019), 14 pages.

46. William R Mark, Leonard McMillan, and Gary Bishop. 1997. Post-rendering 3D warping. In Proceedings of the 1997 symposium on Interactive 3D graphics. 7–ff.

47. Ricardo Martin-Brualla, Noha Radwan, Mehdi S. M. Sajjadi, Jonathan T. Barron, Alexey Dosovitskiy, and Daniel Duckworth. 2021. NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections. In CVPR.

48. Iain Matthews and Simon Baker. 2004. Active appearance models revisited. International journal of computer vision 60, 2 (2004), 135–164.

49. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In ECCV.

50. Koki Nagano, Huiwen Luo, Zejian Wang, Jaewoo Seo, Jun Xing, Liwen Hu, Lingyu Wei, and Hao Li. 2019. Deep face normalization. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–16.

51. A. Nagrani, J. S. Chung, and A. Zisserman. 2017. VoxCeleb: a large-scale speaker identification dataset. In INTERSPEECH.

52. Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yong-Liang Yang. 2019. Hologan: Unsupervised learning of 3d representations from natural images. In ICCV. 7588–7597.

53. Michael Niemeyer and Andreas Geiger. 2021. CAMPARI: Camera-Aware Decomposed Generative Neural Radiance Fields. arXiv:2103.17269

54. Xingang Pan, Bo Dai, Ziwei Liu, Chen Change Loy, and Ping Luo. 2021. Do 2D GANs Know 3D Shape? Unsupervised 3D Shape Reconstruction from 2D Image GANs. In ICLR.

55. Keunhong Park, Utkarsh Sinha, Jonathan T. Barron, Sofien Bouaziz, Dan B Goldman, Steven M. Seitz, and Ricardo Martin-Brualla. 2021. Nerfies: Deformable Neural Radiance Fields. ICCV (2021).

56. Omkar M. Parkhi, Andrea Vedaldi, and Andrew Zisserman. 2015. Deep Face Recognition. In BMVC. BMVA Press, Article 41, 41.1-41.12 pages.

57. Alec Radford, Luke Metz, and Soumith Chintala. 2016. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In ICLR 2016.

58. Srinivas Rao, Rodrigo Ortiz-Cayon, Matteo Munaro, Aidas Liaudanskas, Krunal Chande, Tobias Bertel, Christian Richardt, Alexander JB, Stefan Holzer, and Abhishek Kar. 2020. Free-Viewpoint Facial Re-Enactment from a Casual Capture. In SIGGRAPH Asia 2020 Posters. 1–2.

59. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in style: a stylegan encoder for image-to-image translation. In CVPR. 2287–2296.

60. Gernot Riegler and Vladlen Koltun. 2020. Free view synthesis. In ECCV. 623–640.

61. Johannes L Schonberger and Jan-Michael Frahm. 2016. Structure-from-motion revisited. In CVPR. 4104–4113.

62. Florian Schroff, Dmitry Kalenichenko, and James Philbin. 2015. Facenet: A unified embedding for face recognition and clustering. In CVPR. 815–823.

63. Katja Schwarz, Yiyi Liao, Michael Niemeyer, and Andreas Geiger. 2020. GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis. In NeurIPS.

64. Mike Seymour, Chris Evans, and Kim Libreri. 2017. Meet mike: epic avatars. In ACM SIGGRAPH 2017 VR Village. 1–2.

65. Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the latent space of gans for semantic face editing. In CVPR. 9243–9252.

66. Yichun Shi, Divyansh Aggarwal, and Anil K Jain. 2021. Lifting 2D StyleGAN for 3D-Aware Face Generation. In CVPR. 6258–6266.

67. Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci, and Nicu Sebe. 2019. First Order Motion Model for Image Animation. In NeurIPS.

68. Vincent Sitzmann, Julien Martel, Alexander Bergman, David Lindell, and Gordon Wetzstein. 2020. Implicit neural representations with periodic activation functions. NeurIPS 33 (2020).

69. Vincent Sitzmann, Michael Zollhöfer, and Gordon Wetzstein. 2019. Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations. In NeurIPS.

70. William AP Smith, Alassane Seck, Hannah Dee, Bernard Tiddeman, Joshua B Tenenbaum, and Bernhard Egger. 2020. A morphable face albedo model. In CVPR. 5011–5020.

71. Pratul P. Srinivasan, Boyang Deng, Xiuming Zhang, Matthew Tancik, Ben Mildenhall, and Jonathan T. Barron. 2021. NeRV: Neural Reflectance and Visibility Fields for Relighting and View Synthesis. In CVPR.

72. Matthew Tancik, Pratul P. Srinivasan, Ben Mildenhall, Sara Fridovich-Keil, Nithin Raghavan, Utkarsh Singhal, Ravi Ramamoorthi, Jonathan T. Barron, and Ren Ng. 2020. Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains. NeurIPS (2020).

73. Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zöllhofer, and Christian Theobalt. 2020a. StyleRig: Rigging StyleGAN for 3D Control over Portrait Images. In CVPR.

74. Ayush Tewari, Mohamed Elgharib, Mallikarjun BR, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zöllhofer, and Christian Theobalt. 2020b. PIE: Portrait Image Embedding for Semantic Control. ACM Transactions on Graphics (TOG, Proc. SIGGRAPH Asia) 39, 6.

75. A. Tewari, O. Fried, J. Thies, V. Sitzmann, S. Lombardi, K. Sunkavalli, R. Martin-Brualla, T. Simon, J. Saragih, M. Nießner, R. Pandey, S. Fanello, G. Wetzstein, J.-Y. Zhu, C. Theobalt, M. Agrawala, E. Shechtman, D. B Goldman, and M. Zollhöfer. 2020c. State of the Art on Neural Rendering. Computer Graphics Forum (EG STAR 2020) (2020).

76. Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2019a. Deferred Neural Rendering: Image Synthesis using Neural Textures. ACM Transactions on Graphics (TOG) (2019).

77. Justus Thies, Michael Zollhöfer, Christian Theobalt, Marc Stamminger, and Matthias Nießner. 2019b. Image-guided neural object rendering. In ICLR.

78. Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. 2021. Designing an Encoder for StyleGAN Image Manipulation. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH).

79. Paul Upchurch, Jacob Gardner, Geoff Pleiss, Robert Pless, Noah Snavely, Kavita Bala, and Kilian Weinberger. 2017. Deep feature interpolation for image content changes. In CVPR. 7064–7073.

80. Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz, and Bryan Catanzaro. 2019. Few-shot Video-to-Video Synthesis. In NeurIPS.

81. Ziyan Wang, Timur Bagautdinov, Stephen Lombardi, Tomas Simon, Jason Saragih, Jessica Hodgins, and Michael Zollhofer. 2021. Learning Compositional Radiance Fields of Dynamic Human Heads. In CVPR. 5704–5713.

82. Shih-En Wei, Jason Saragih, Tomas Simon, Adam W Harley, Stephen Lombardi, Michal Perdoch, Alexander Hypes, Dawei Wang, Hernan Badino, and Yaser Sheikh. 2019. VR facial animation via multiview image translation. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–16.

83. Jonas Wulff and Antonio Torralba. 2020. Improving inversion and generation diversity in stylegan using a Gaussianized latent space. arXiv preprint arXiv:2009.06529 (2020).

84. Sicheng Xu, Jiaolong Yang, Dong Chen, Fang Wen, Yu Deng, Yunde Jia, and Xin Tong. 2020. Deep 3D Portrait from a Single Image. In CVPR. 7710–7720.

85. Lei Yang, Yu-Chiu Tse, Pedro V Sander, Jason Lawrence, Diego Nehab, Hugues Hoppe, and Clara L Wilkins. 2011. Image-based bidirectional scene reprojection. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH Asia). 1–10.

86. Tarun Yenamandra, Ayush Tewari, Florian Bernard, Hans-Peter Seidel, Mohamed Elgharib, Daniel Cremers, and Christian Theobalt. 2021. i3DMM: Deep Implicit 3D Morphable Model of Human Heads. In CVPR.

87. Xuan Yu, Rui Wang, and Jingyi Yu. 2010. Real-time depth of field rendering via dynamic light field generation and filtering. In Computer Graphics Forum, Vol. 29. 2099–2107.

88. Egor Zakharov, Aleksei Ivakhnenko, Aliaksandra Shysheya, and Victor Lempitsky. 2020. Fast Bi-layer Neural Synthesis of One-Shot Realistic Head Avatars. In ECCV.

89. Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, and Victor Lempitsky. 2019. Few-shot adversarial learning of realistic neural talking head models. In ICCV. 9459–9468.

90. Jiakai Zhang, Xinhang Liu, Xinyi Ye, Fuqiang Zhao, Yanshun Zhang, Minye Wu, Yingliang Zhang, Lan Xu, and Jingyi Yu. 2021b. Editable Free-viewpoint Video Using a Layered Neural Representation. In ACM Transactions on Graphics (TOG, Proc. SIGGRAPH).

91. Kai Zhang, Gernot Riegler, Noah Snavely, and Vladlen Koltun. 2020. NeRF++: Analyzing and Improving Neural Radiance Fields. arXiv:2010.07492 [cs.CV]

92. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR. 586–595.

93. Yuxuan Zhang, Wenzheng Chen, Huan Ling, Jun Gao, Yinan Zhang, Antonio Torralba, and Sanja Fidler. 2021a. Image GANs meet Differentiable Rendering for Inverse Graphics and Interpretable 3D Neural Rendering. In ICLR.

94. Yajie Zhao, Zeng Huang, Tianye Li, Weikai Chen, Chloe LeGendre, Xinglei Ren, Ari Shapiro, and Hao Li. 2019. Learning perspective undistortion of portraits. In ICCV. 7849–7859.

95. Hang Zhou, Jihao Liu, Ziwei Liu, Yu Liu, and Xiaogang Wang. 2020. Rotate-and-Render: Unsupervised Photorealistic Face Rotation from Single-View Images. In CVPR. 5911–5920.

96. Jiapeng Zhu, Yujun Shen, Deli Zhao, and Bolei Zhou. 2020. In-domain GAN Inversion for Real Image Editing. In ECCV.

97. Jun-Yan Zhu, Philipp Krähenbühl, Eli Shechtman, and Alexei A Efros. 2016. Generative visual manipulation on the natural image manifold. In ECCV. Springer, 597–613.

98. Jun-Yan Zhu, Zhoutong Zhang, Chengkai Zhang, Jiajun Wu, Antonio Torralba, Josh Tenenbaum, and Bill Freeman. 2018. Visual object networks: Image generation with disentangled 3D representations. NeurIPS 31 (2018), 118–129.