“Rendering with style: combining traditional and neural approaches for high-quality face rendering” by Chandran, Winberg, Zoss, Riviere, Gross, et al. …

Session/Category Title: Facial Animation and Rendering

Abstract:

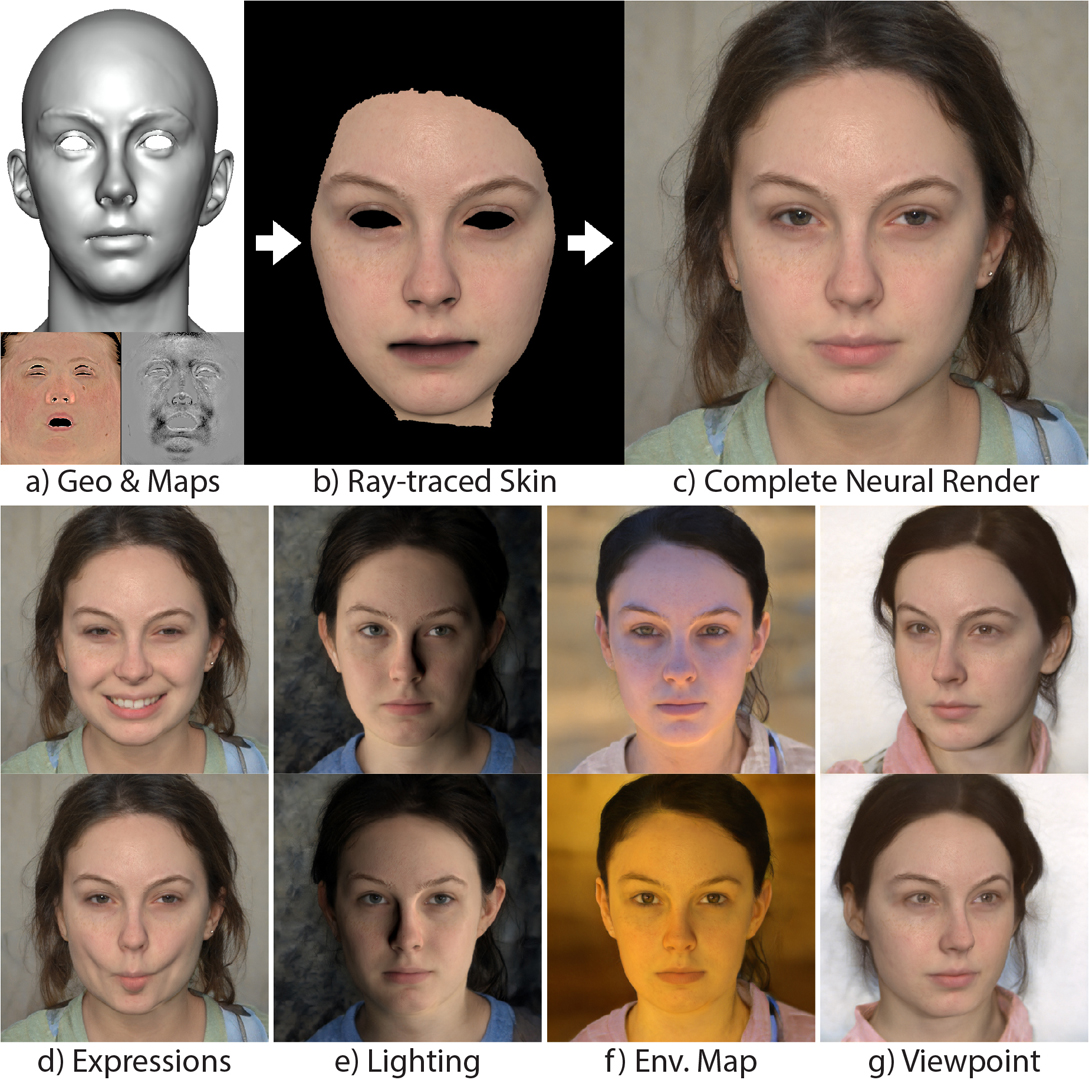

For several decades, researchers have been advancing techniques for creating and rendering 3D digital faces, with much of the effort going into geometry and appearance capture, modeling, and rendering. This body of work has largely focused on facial skin, with much less attention devoted to peripheral components such as hair, eyes, and the interior of the mouth. As a result, even with the best technology for facial capture and rendering, most high-end productions still spend a lot of artist time modeling the missing components and fine-tuning rendering parameters to combine everything into photo-real digital renders. In this work we propose to combine incomplete, high-quality renderings showing only facial skin with recent methods for neural rendering of faces, in order to automatically and seamlessly create photo-realistic full-head portrait renders from captured data without the need for artist intervention. Our method begins with traditional face rendering, where the skin is rendered with the desired appearance, expression, viewpoint, and illumination. These skin renders are then projected into the latent space of a pre-trained neural network that can generate arbitrary photo-real face images (StyleGAN2). The result is a sequence of realistic face images that match the identity and appearance of the 3D character at the skin level, but are completed naturally with synthesized hair, eyes, inner mouth, and surroundings. Notably, we present the first method for multi-frame consistent projection into this latent space, allowing photo-realistic rendering and preservation of the identity of the digital human over an animated performance sequence, which can depict different expressions, lighting conditions, and viewpoints. Our method can be used in new face rendering pipelines and, importantly, in other deep learning applications that require large amounts of realistic training data with ground-truth 3D geometry, appearance maps, lighting, and viewpoint.
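
The projection step described in the abstract can be illustrated with a toy example. The sketch below is not the authors' implementation: a small random linear map stands in for the pre-trained generator (StyleGAN2 in the paper), the "image" is a flat list of 12 values, and every name (`generate`, `masked_loss`, `project`, the mask layout) is a hypothetical choice made for illustration. It only shows the general idea of fitting a latent code against the rendered skin pixels while leaving the remaining pixels for the generator to fill in. The multi-frame consistency described above is not modeled here.

```python
import random

def generate(A, w):
    """Toy linear 'generator' (an assumption): maps latent w to a flat image A @ w."""
    return [sum(a * x for a, x in zip(row, w)) for row in A]

def masked_loss(A, w, target, mask):
    """Squared error counted only where mask == 1 (the rendered skin pixels)."""
    img = generate(A, w)
    return sum(m * (p - t) ** 2 for p, t, m in zip(img, target, mask))

def project(A, target, mask, steps=2000, lr=0.02):
    """Plain gradient descent on the latent code w, minimizing the masked loss."""
    dim = len(A[0])
    w = [0.0] * dim
    for _ in range(steps):
        img = generate(A, w)
        # Residual is zeroed outside the mask, so those pixels exert no pull on w.
        resid = [m * (p - t) for p, t, m in zip(img, target, mask)]
        grad = [2.0 * sum(A[i][j] * resid[i] for i in range(len(A)))
                for j in range(dim)]
        w = [x - lr * g for x, g in zip(w, grad)]
    return w

random.seed(0)
n_pixels, dim = 12, 3
A = [[random.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_pixels)]

# Hypothetical "skin render": the first 8 pixels are known, the last 4 are missing.
mask = [1] * 8 + [0] * 4
full = generate(A, [0.5, -1.0, 0.25])
target = [t if m else 0.0 for t, m in zip(full, mask)]

initial = masked_loss(A, [0.0] * dim, target, mask)
w_fit = project(A, target, mask)
final = masked_loss(A, w_fit, target, mask)

# The generator fills in the unmasked pixels from the fitted latent code.
completed = generate(A, w_fit)
print(f"masked loss: {initial:.4f} -> {final:.2e}")
```

In a real setting the linear map would be replaced by a pre-trained generator, the squared error would likely be combined with perceptual terms, and an automatic-differentiation framework would supply the gradients. Those choices are outside what the abstract specifies.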
