“X-Fields: implicit neural view-, light- and time-image interpolation” by Bemana, Myszkowski, Seidel and Ritschel

Conference:

Type(s):

Title:

- X-Fields: implicit neural view-, light- and time-image interpolation

Session/Category Title: Neural Rendering

Presenter(s)/Author(s):

Abstract:

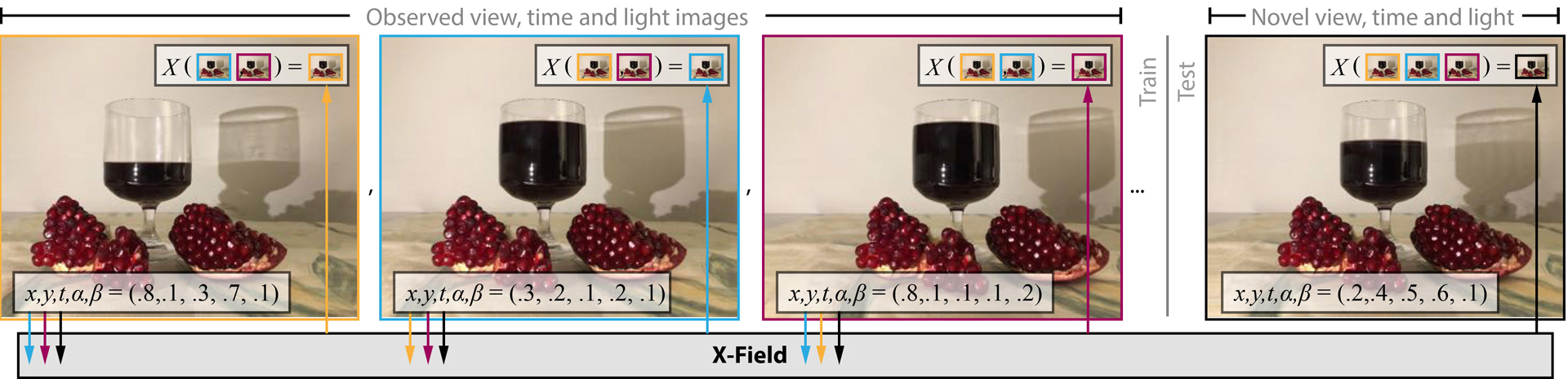

We suggest to represent an X-Field —a set of 2D images taken across different view, time or illumination conditions, i.e., video, lightfield, reflectance fields or combinations thereof—by learning a neural network (NN) to map their view, time or light coordinates to 2D images. Executing this NN at new coordinates results in joint view, time or light interpolation. The key idea to make this workable is a NN that already knows the “basic tricks” of graphics (lighting, 3D projection, occlusion) in a hard-coded and differentiable form. The NN represents the input to that rendering as an implicit map, that for any view, time, or light coordinate and for any pixel can quantify how it will move if view, time or light coordinates change (Jacobian of pixel position with respect to view, time, illumination, etc.). Our X-Field representation is trained for one scene within minutes, leading to a compact set of trainable parameters and hence real-time navigation in view, time and illumination.

References:

1. Wenbo Bao, Wei-Sheng Lai, Chao Ma, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019a. Depth-aware Video Frame Interpolation. In Proc. CVPR.Google ScholarCross Ref

2. Wenbo Bao, Wei-Sheng Lai, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019b. MEMC-Net: Motion Estimation and Motion Compensation Driven Neural Network for Video Interpolation and Enhancement. IEEE Transactions on Pattern Analysis and Machine Intelligence (2019).Google Scholar

3. Chris Buehler, Michael Bosse, Leonard McMillan, Steven Gortler, and Michael Cohen. 2001. Unstructured Lumigraph Rendering. In Proc. SIGGRAPH.Google ScholarDigital Library

4. D. J. Butler, J. Wulff, G. B. Stanley, and M. J. Black. 2012. A naturalistic open source movie for optical flow evaluation. In European Conf. on Computer Vision (ECCV) (Part IV, LNCS 7577), A. Fitzgibbon et al. (Eds.) (Ed.). Springer-Verlag, 611–625.Google Scholar

5. Gaurav Chaurasia, Sylvain Duchene, Olga Sorkine-Hornung, and George Drettakis. 2013. Depth Synthesis and Local Warps for Plausible Image-based Navigation. ACM Trans. Graph. 32, 3 (2013).Google ScholarDigital Library

6. Anpei Chen, Minye Wu, Yingliang Zhang, Nianyi Li, Jie Lu, Shenghua Gao, and Jingyi Yu. 2018. Deep Surface Light Fields. Proc. i3D 1, 1 (2018), 14.Google ScholarDigital Library

7. Billy Chen and Hendrik PA Lensch. 2005. Light Source Interpolation for Sparsely Sampled Reflectance Fields. In Proc. Vision, Modeling and Visualization. 461–469.Google Scholar

8. Xu Chen, Jie Song, and Otmar Hilliges. 2019. Monocular Neural Image Based Rendering with Continuous View Control. In ICCV.Google Scholar

9. Zhiqin Chen and Hao Zhang. 2019. Learning Implicit Fields for Generative Shape Modeling. CVPR (2019).Google Scholar

10. Łukasz Dabała, Matthias Ziegler, Piotr Didyk, Frederik Zilly, Joachim Keinert, Karol Myszkowski, Hans-Peter Seidel, Przemysław Rokita, and Tobias Ritschel. 2016. Efficient Multi-image Correspondences for On-line Light Field Video Processing. Comp. Graph. Forum (Proc. Pacific Graphics) (2016).Google Scholar

11. Paul Debevec, Tim Hawkins, Chris Tchou, Haarm-Pieter Duiker, Westley Sarokin, and Mark Sagar. 2000. Acquiring the Reflectance Field of a Human Face. In Proc. SIGGRAPH. 145–156.Google ScholarDigital Library

12. Alexey Dosovitskiy, Jost Tobias Springenberg, and Thomas Brox. 2015. Learning to Generate Chairs with Convolutional Neural Networks. In CVPR.Google Scholar

13. Song-Pei Du, Piotr Didyk, Frédo Durand, Shi-Min Hu, and Wojciech Matusik. 2014. Improving Visual Quality of View Transitions in Automultiscopic Displays. ACM Trans. Graph. (Proc. SIGGRAPH) 33, 6 (2014).Google ScholarDigital Library

14. Jesse Engel, Cinjon Resnick, Adam Roberts, Sander Dieleman, Mohammad Norouzi, Douglas Eck, and Karen Simonyan. 2017. Neural Audio Synthesis of Musical Notes with Wavenet Autoencoders. In JMLR.Google Scholar

15. John Flynn, Michael Broxton, Paul Debevec, Matthew DuVall, Graham Fyffe, Ryan Overbeck, Noah Snavely, and Richard Tucker. 2019. DeepView: View Synthesis With Learned Gradient Descent. In CVPR.Google Scholar

16. John Flynn, Ivan Neulander, James Philbin, and Noah Snavely. 2016. DeepStereo: Learning to Predict New Views From the World’s Imagery. In CVPR.Google Scholar

17. David A Forsyth and Jean Ponce. 2002. Computer Vision: a Modern Approach. Prentice Hall Professional Technical Reference.Google Scholar

18. Ohad Fried and Maneesh Agrawala. 2019. Puppet Dubbing. In Proc. EGSR.Google Scholar

19. Martin Fuchs, Volker Blanz, Hendrik P.A. Lensch, and Hans-Peter Seidel. 2007. Adaptive Sampling of Reflectance Fields. ACM Trans. Graph. 26, 2, Article 10 (2007).Google ScholarDigital Library

20. Clément Godard, Oisin Mac Aodha, and Gabriel J. Brostow. 2017. Unsupervised Monocular Depth Estimation with Left-Right Consistency. In CVPR.Google Scholar

21. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Proc. NIPS.Google ScholarDigital Library

22. Steven J Gortler, Radek Grzeszczuk, Richard Szeliski, and Michael F Cohen. 1996. The Lumigraph. In SIGGRAPH.Google Scholar

23. Kaiwen Guo et al. 2019. The Relightables: Volumetric Performance Capture of Humans with Realistic Relighting. ACM Trans. Graph (Proc SIGGRAPH Asia) 38, 5 (2019).Google Scholar

24. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel J. Brostow. 2018. Deep Blending for Free-Viewpoint Image-Based Rendering. ACM Trans. Graph. (Proc. SIGGRAPH) 37, 6 (2018).Google Scholar

25. Peter Hedman, Tobias Ritschel, George Drettakis, and Gabriel Brostow. 2016. Scalable Inside-Out Image-Based Rendering. ACM Trans. Graph. (Proc. SIGRAPH Asia) 35, 6 (2016).Google Scholar

26. Philipp Henzler, Niloy J Mitra, and Tobias Ritschel. 2019. Escaping Plato’s Cave: 3D Shape From Adversarial Rendering. (2019).Google Scholar

27. Geoffrey E Hinton and Ruslan R Salakhutdinov. 2006. Reducing the Dimensionality of Data with Neural Networks. Science 313, 5786 (2006).Google Scholar

28. Max Jaderberg, Karen Simonyan, Andrew Zisserman, et al. 2015. Spatial Transformer Networks. In Proc. NIPS.Google Scholar

29. Huaizu Jiang, Deqing Sun, Varun Jampani, Ming-Hsuan Yang, Erik Learned-Miller, and Jan Kautz. 2018. Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation. In CVPR.Google Scholar

30. Nima Khademi Kalantari, Ting-Chun Wang, and Ravi Ramamoorthi. 2016. Learning-based View Synthesis for Light Field Cameras. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 35, 6 (2016).Google Scholar

31. Tero Karras, Samuli Laine, and Timo Aila. 2019. A Style-based Generator Architecture for Generative Adversarial Networks. In CVPR. 4401–4410.Google Scholar

32. Petr Kellnhofer, Piotr Didyk, Szu-Po Wang, Pitchaya Sitthi-Amorn, William Freeman, Fredo Durand, and Wojciech Matusik. 2017. 3DTV at Home: Eulerian-Lagrangian Stereo-to-Multiview Conversion. ACM Trans. Graph. (Proc. SIGGRAPH) 36, 4 (2017).Google Scholar

33. Diederik P Kingma and Max Welling. 2013. Auto-encoding Variational Bayes. In Proc. ICLR.Google Scholar

34. Johannes Kopf, Fabian Langguth, Daniel Scharstein, Richard Szeliski, and Michael Goesele. 2013. Image-Based Rendering in the Gradient Domain. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 32, 6 (2013).Google Scholar

35. Marc Levoy and Pat Hanrahan. 1996. Light field rendering. In SIGGRAPH.Google Scholar

36. Christian Lipski, Christian Linz, Kai Berger, Anita Sellent, and Marcus Magnor. 2010. Virtual Video Camera: Image-Based Viewpoint Navigation Through Space and Time. Computer Graphics Forum 29, 8 (Dec 2010).Google ScholarCross Ref

37. Rosanne Liu, Joel Lehman, Piero Molino, Felipe Petroski Such, Eric Frank, Alex Sergeev, and Jason Yosinski. 2018. An Intriguing Failing of Convolutional Neural Networks and the CoordConv Solution. In Proc. NIPS.Google Scholar

38. Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. 2019. Neural Volumes: Learning Dynamic Renderable Volumes from Images. ACM Trans. Graph. (Proc. SIGGRAPH) 38, 4 (2019).Google ScholarDigital Library

39. Dhruv Mahajan, Fu-Chung Huang, Wojciech Matusik, Ravi Ramamoorthi, and Peter Belhumeur. 2009. Moving Gradients: a Path-based Method for Plausible Image Interpolation. In ACM Trans. Graph., Vol. 28. 42.Google ScholarDigital Library

40. Tom Malzbender, Dan Gelb, and Hans Wolters. 2001. Polynomial Texture Maps. In Proc. SIGGRAPH.Google ScholarDigital Library

41. Russell A. Manning and Charles R. Dyer. 1999. Interpolating View and Scene Motion by Dynamic View Morphing. In CVPR, Vol. 1. 388–394.Google Scholar

42. William R Mark, Leonard McMillan, and Gary Bishop. 1997. Post-rendering 3D Warping. In Proc. i3D.Google ScholarDigital Library

43. Maxim Maximov, Laura Leal-Taixé, Mario Fritz, and Tobias Ritschel. 2019. Deep Appearance Maps. In Proc. ICCV.Google ScholarCross Ref

44. Abhimitra Meka et al. 2019. Deep Reflectance Fields – High-Quality Facial Reflectance Field Inference From Color Gradient Illumination. ACM Trans. Graph (Proc SIGGRAPH) 38, 4 (2019).Google Scholar

45. Ben Mildenhall, Pratul P. Srinivasan, Rodrigo Ortiz-Cayon, Nima Khademi Kalantari, Ravi Ramamoorthi, Ren Ng, and Abhishek Kar. 2019. Local Light Field Fusion: Practical View Synthesis with Prescriptive Sampling Guidelines. ACM Trans. Graph. (Proc. SIGGRAPH) 38, 4, Article 29 (2019).Google ScholarDigital Library

46. Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. ArXiv 2003.08934 (2020).Google Scholar

47. Thu Nguyen Phuoc, Chuan Li, Stephen Balaban, and Yongliang Yang. 2018. RenderNet: A deep Convolutional Network for Differentiable Rendering from 3D Shapes. (2018).Google Scholar

48. Thu Nguyen Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yongliang Yang. 2019. HoloGAN: Unsupervised Learning of 3D Representations From Natural Images. In ICCV.Google Scholar

49. Michael Niemeyer, Lars Mescheder, Michael Oechsle, and Andreas Geiger. 2019. Occupancy Flow: 4D Reconstruction by Learning Particle Dynamics. In Proc. ICCV.Google ScholarCross Ref

50. Michael Oechsle, Lars Mescheder, Michael Niemeyer, Thilo Strauss, and Andreas Geiger. 2019. Texture Fields: Learning Texture Representations in Function Space. ICCV (2019).Google Scholar

51. Pieter Peers, Dhruv K. Mahajan, Bruce Lamond, Abhijeet Ghosh, Wojciech Matusik, Ravi Ramamoorthi, and Paul Debevec. 2009. Compressive Light Transport Sensing. ACM Trans. Graph. 28, 1, Article 3 (2009).Google ScholarDigital Library

52. Eric Penner and Li Zhang. 2017. Soft 3D Reconstruction for View Synthesis. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 36, 6 (2017).Google Scholar

53. Julien Philip, Michaël Gharbi, Tinghui Zhou, Alexei A. Efros, and George Drettakis. 2019. Multi-View Relighting Using a Geometry-Aware Network. ACM Trans. Graph. 38, 4, Article Article 78 (2019).Google ScholarDigital Library

54. Alec Radford, Luke Metz, and Soumith Chintala. 2015. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. Arxiv 1511.06434 (2015).Google Scholar

55. Dikpal Reddy, Ravi Ramamoorthi, and Brian Curless. 2012. Frequency-Space Decomposition and Acquisition of Light Transport under Spatially Varying Illumination. In Proc. ECCV. 596–610.Google ScholarDigital Library

56. Scott E Reed, Yi Zhang, Yuting Zhang, and Honglak Lee. 2015. Deep Visual Analogy-making. In NIPS.Google Scholar

57. Peiran Ren, Yue Dong, Stephen Lin, Xin Tong, and Baining Guo. 2015. Image Based Relighting Using Neural Networks. ACM Trans. Graph. (Proc. SIGGRAPH) 34, 4 (2015).Google ScholarDigital Library

58. Neus Sabater, Guillaume Boisson, Benoit Vandame, Paul Kerbiriou, Frederic Babon, Matthieu Hog, Tristan Langlois, Remy Gendrot, Olivier Bureller, Arno Schubert, and Valerie Allie. 2017. Dataset and Pipeline for Multi-View Light-Field Video. In CVPR Workshops.Google Scholar

59. Shunsuke Saito, Zeng Huang, Ryota Natsume, Shigeo Morishima, Angjoo Kanazawa, and Hao Li. 2019. PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization. Proc. ICCV (2019), 2304–2314.Google ScholarCross Ref

60. Vincent Sitzmann, Justus Thies, Felix Heide, Matthias Nießner, Gordon Wetzstein, and Michael Zollhöfer. 2019a. DeepVoxels: Learning Persistent 3D Feature Embeddings. In CVPR.Google Scholar

61. Vincent Sitzmann, Michael Zollhöfer, and Gordon Wetzstein. 2019b. Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations. In NeurIPS.Google Scholar

62. D. Sun, E. B. Sudderth, and M. J. Black. 2012. Layered Segmentation and Optical Flow Estimation Over Time. In CVPR. 1768–1775.Google Scholar

63. Deqing Sun, Xiaodong Yang, Ming-Yu Liu, and Jan Kautz. 2018b. PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In CVPR.Google Scholar

64. Shao-Hua Sun, Minyoung Huh, Yuan-Hong Liao, Ning Zhang, and Joseph J Lim. 2018a. Multi-view to Novel View: Synthesizing Novel Views with Self-learned Confidence. In Proc. ECCV.Google ScholarCross Ref

65. A. Tewari, O. Fried, J. Thies, V. Sitzmann, S. Lombardi, K. Sunkavalli, R. Martin-Brualla, T. Simon, J. Saragih, M. Nießner, R. Pandey, S. Fanello, G. Wetzstein, J.-Y. Zhu, C. Theobalt, M. Agrawala, E. Shechtman, D. B Goldman, and M. Zollhöfer. 2020. State of the Art on Neural Rendering. Comp. Graph. Forum (EG STAR 2020) (2020).Google Scholar

66. Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2019. Deferred Neural Rendering: Image Synthesis using Neural Textures. ACM Trans. Graph. (Proc. SIGGRAPH) (2019).Google ScholarDigital Library

67. Huamin Wang and Ruigang Yang. 2005. Towards Space: Time Light Field Rendering. In Proc. i3D.Google ScholarDigital Library

68. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. Video-to-Video Synthesis. In NeurIPS.Google Scholar

69. Ting-Chun Wang, Jun-Yan Zhu, Nima Khademi Kalantari, Alexei A. Efros, and Ravi Ramamoorthi. 2017. Light Field Video Capture Using a Learning-Based Hybrid Imaging System. ACM Trans. Graph. (Proc. SIGGRAPH) 36, 4 (2017).Google ScholarDigital Library

70. Tom White. 2016. Sampling Generative Networks. Arxiv 1609.04468 (2016).Google Scholar

71. Bennett Wilburn, Neel Joshi, Vaibhav Vaish, Eino-Ville Talvala, Emilio Antunez, Adam Barth, Andrew Adams, Mark Horowitz, and Marc Levoy. 2005. High performance imaging using large camera arrays. In ACM SIGGRAPH. 765–76.Google Scholar

72. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019. Deep View Synthesis from Sparse Photometric Images. ACM Transactions on Graphics (TOG) 38, 4 (2019), 76.Google ScholarDigital Library

73. Zexiang Xu, Kalyan Sunkavalli, Sunil Hadap, and Ravi Ramamoorthi. 2018. Deep Image-based Relighting from Optimal Sparse Samples. ACM Transactions on Graphics (TOG) 37, 4 (2018), 126.Google ScholarDigital Library

74. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR. 586–95.Google Scholar

75. Tinghui Zhou, Matthew Brown, Noah Snavely, and David G. Lowe. 2017. Unsupervised Learning of Depth and Ego-Motion from Video. In CVPR.Google Scholar

76. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo Magnification: Learning View Synthesis Using Multiplane Images. ACM Trans. Graph. (Proc. SIGGRAPH) 37, 4 (2018).Google ScholarDigital Library

77. Tinghui Zhou, Shubham Tulsiani, Weilun Sun, Jitendra Malik, and Alexei A Efros. 2016. View Synthesis by Appearance Flow. In ECCV.Google Scholar

78. C Lawrence Zitnick, Sing Bing Kang, Matthew Uyttendaele, Simon Winder, and Richard Szeliski. 2004. High-quality Video View Interpolation Using a Layered Representation. In ACM Trans. Graph., Vol. 23.Google ScholarDigital Library

79. Yuliang Zou, Zelun Luo, and Jia-Bin Huang. 2018. Df-net: Unsupervised Joint Learning of Depth and Flow Using Cross-task Consistency. In ECCV. 36–53.Google Scholar