“Layered neural rendering for retiming people in video” by Lu, Cole, Dekel, Xie, Zisserman, et al. …

Conference:

Type(s):

Title:

- Layered neural rendering for retiming people in video

Session/Category Title: Neural Rendering

Presenter(s)/Author(s):

Abstract:

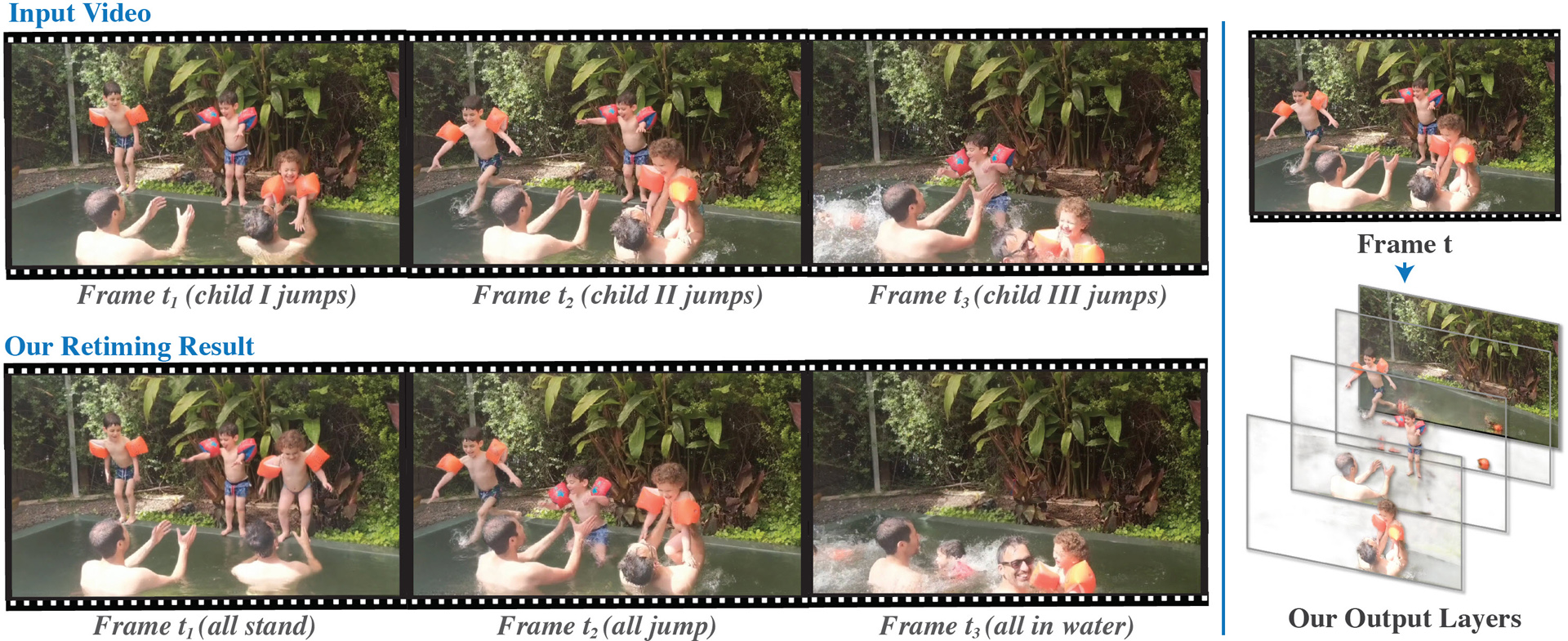

We present a method for retiming people in an ordinary, natural video — manipulating and editing the time in which different motions of individuals in the video occur. We can temporally align different motions, change the speed of certain actions (speeding up/slowing down, or entirely “freezing” people), or “erase” selected people from the video altogether. We achieve these effects computationally via a dedicated learning-based layered video representation, where each frame in the video is decomposed into separate RGBA layers, representing the appearance of different people in the video. A key property of our model is that it not only disentangles the direct motions of each person in the input video, but also correlates each person automatically with the scene changes they generate—e.g., shadows, reflections, and motion of loose clothing. The layers can be individually retimed and recombined into a new video, allowing us to achieve realistic, high-quality renderings of retiming effects for real-world videos depicting complex actions and involving multiple individuals, including dancing, trampoline jumping, or group running.

References:

1. Kfir Aberman, Mingyi Shi, Jing Liao, Dani Lischinski, Baoquan Chen, and Daniel Cohen-Or. 2019. Deep Video-Based Performance Cloning. In Computer Graphics Forum, Vol. 38. Wiley Online Library, 219–233.Google Scholar

2. Aseem Agarwala, Colin Zheng, Chris Pal, Maneesh Agrawala, Michael Cohen, Brian Curless, David Salesin, and Richard Szeliski. 2005. Panoramic Video Textures. In SIGGRAPH.Google Scholar

3. Jean-Baptiste Alayrac, João Carreira, and Andrew Zisserman. 2019a. The Visual Centrifuge: Model-Free Layered Video Representations. In CVPR.Google Scholar

4. Jean-Baptiste Alayrac, Joao Carreira, Relja Arandjelovic, and Andrew Zisserman. 2019b. Controllable Attention for Structured Layered Video Decomposition. In ICCV.Google Scholar

5. Xue Bai, Jue Wang, David Simons, and Guillermo Sapiro. 2009. Video SnapCut: robust video object cutout using localized classifiers. TOG (2009).Google ScholarDigital Library

6. Connelly Barnes, Dan B Goldman, Eli Shechtman, and Adam Finkelstein. 2010. Video Tapestries with Continuous Temporal Zoom. SIGGRAPH (2010).Google Scholar

7. Eric P Bennett and Leonard McMillan. 2007. Computational time-lapse video. In ACM SIGGRAPH 2007 papers. 102-es.Google ScholarDigital Library

8. Daniel Castro, Steven Hickson, Patsorn Sangkloy, Bhavishya Mittal, Sean Dai, James Hays, and Irfan Essa. 2018. Let’s Dance: Learning From Online Dance Videos. In eprint arXiv:2139179.Google Scholar

9. Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A Efros. 2019. Everybody dance now. In Proceedings of the IEEE International Conference on Computer Vision. 5933–5942.Google ScholarCross Ref

10. Yung-Yu Chuang, Aseem Agarwala, Brian Curless, David Salesin, and Richard Szeliski. 2002. Video matting of complex scenes. In SIGGRAPH.Google Scholar

11. Abe Davis and Maneesh Agrawala. 2018. Visual Rhythm and Beat. ACM Trans. Graph. 37, 4 (2018), 122–1.Google ScholarDigital Library

12. Hao-Shu Fang, Shuqin Xie, Yu-Wing Tai, and Cewu Lu. 2017. RMPE: Regional Multiperson Pose Estimation. In ICCV.Google Scholar

13. Oran Gafni, Lior Wolf, and Yaniv Taigman. 2020. Vid2Game: Controllable Characters Extracted from Real-World Videos. In ICLR.Google Scholar

14. Yossi Gandelsman, Assaf Shocher, and Michal Irani. 2019. “Double-DIP”: Unsupervised Image Decomposition via Coupled Deep-Image-Priors. In CVPR.Google Scholar

15. Dan B. Goldman, Chris Gonterman, Brian Curless, David Salesin, and Steven M. Seitz. 2008. Video Object Annotation, Navigation, and Composition. In Proceedings of the 21st Annual ACM Symposium on User Interface Software and Technology (Monterey, CA, USA) (UIST âĂŹ08). Association for Computing Machinery, New York, NY, USA, 3âĂŞ12. Google ScholarDigital Library

16. Matthias Grundmann, Vivek Kwatra, and Irfan Essa. 2011. Auto-directed video stabilization with robust l1 optimal camera paths. In CVPR 2011. IEEE, 225–232.Google ScholarDigital Library

17. Rıza Alp Güler, Natalia Neverova, and Iasonas Kokkinos. 2018. Densepose: Dense human pose estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 7297–7306.Google ScholarCross Ref

18. Qiqi Hou and Feng Liu. 2019. Context-Aware Image Matting for Simultaneous Foreground and Alpha Estimation. In ICCV.Google Scholar

19. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In CVPR.Google Scholar

20. Njegica Jojic and B.J. Frey. 2001. Learning flexible sprites in video layers. In CVPR.Google Scholar

21. Neel Joshi, Wolf Kienzle, Mike Toelle, Matt Uyttendaele, and Michael F Cohen. 2015. Real-time hyperlapse creation via optimal frame selection. ACM Transactions on Graphics (TOG) 34, 4 (2015), 1–9.Google ScholarDigital Library

22. H. Kim, P. Garrido, A. Tewari, W. Xu, J. Thies, M. Nießner, P. Péerez, C. Richardt, M. Zollhöfer, and C. Theobalt. 2018. Deep Video Portraits. ACM Transactions on Graphics 2018 (TOG) (2018).Google Scholar

23. Diederik Kingma and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. ICLR (2014).Google Scholar

24. M. Pawan Kumar, Philip H. S. Torr, and Andrew Zisserman. 2008. Learning Layered Motion Segmentations of Video. IJCV (2008).Google Scholar

25. Shuyue Lan, Rameswar Panda, Qi Zhu, and Amit K Roy-Chowdhury. 2018. FFNet: Video fast-forwarding via reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 6771–6780.Google ScholarCross Ref

26. Jessica Lee, Deva Ramanan, and Rohit Girdhar. 2020. MetaPix: Few-Shot Video Retargeting. In International Conference on Learning Representations. https://openreview.net/forum?id=SJx1URNKwHGoogle Scholar

27. Yin Li, Jian Sun, and Heung-Yeung Shum. 2005. Video Object Cut and Paste. In SIGGRAPH.Google Scholar

28. Lingjie Liu, Weipeng Xu, Michael Zollhöfer, Hyeongwoo Kim, Florian Bernard, Marc Habermann, Wenping Wang, and Christian Theobalt. 2019. Neural Rendering and Reenactment of Human Actor Videos. ACM Trans. Graph. 38, 5, Article 139 (Oct. 2019), 14 pages. Google ScholarDigital Library

29. Matthew Loper, Naureen Mahmood, Javier Romero, Gerard Pons-Moll, and Michael J. Black. 2015. SMPL: A Skinned Multi-Person Linear Model. ACM Trans. Graphics (Proc. SIGGRAPH Asia) 34, 6 (Oct. 2015), 248:1–248:16.Google ScholarDigital Library

30. Ricardo Martin-Brualla, Rohit Pandey, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Julien Valentin, Sameh Khamis, Philip Davidson, Anastasia Tkach, Peter Lincoln, and et al. 2018. LookinGood: Enhancing Performance Capture with Real-Time Neural Re-Rendering. ACM Trans. Graph. 37, 6, Article 255 (Dec. 2018), 14 pages. Google ScholarDigital Library

31. James McCann, Nancy S Pollard, and Siddhartha Srinivasa. 2006. Physics-based motion retiming. In Proceedings of the 2006 ACM SIGGRAPH/Eurographics symposium on Computer animation. Eurographics Association, 205–214.Google ScholarDigital Library

32. Moustafa Meshry, Dan B Goldman, Sameh Khamis, Hugues Hoppe, Rohit Pandey, Noah Snavely, and Ricardo Martin-Brualla. 2019. Neural rerendering in the wild.Google Scholar

33. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 6878–6887.Google Scholar

34. Ajay Nandoriya, Elgharib Mohamed, Changil Kim, Mohamed Hefeeda, and Wojciech Matusik. 2017. Video Reflection Removal Through Spatio-Temporal Optimization. In ICCV.Google Scholar

35. Yair Poleg, Tavi Halperin, Chetan Arora, and Shmuel Peleg. 2015. Egosampling: Fast-forward and stereo for egocentric videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4768–4776.Google ScholarCross Ref

36. Thomas Porter and Tom Duff. 1984. Compositing Digital Images. SIGGRAPH Comput. Graph. 18, 3 (Jan. 1984), 253âĂŞ259. Google ScholarDigital Library

37. Yael Pritch, Alex Rav-Acha, and Shmuel Peleg. 2008. Nonchronological video synopsis and indexing. IEEE transactions on pattern analysis and machine intelligence 30, 11 (2008), 1971–1984.Google ScholarDigital Library

38. Ethan Rublee, Vincent Rabaud, Kurt Konolige, and Gary Bradski. 2011. ORB: An efficient alternative to SIFT or SURF. In 2011 International conference on computer vision. Ieee, 2564–2571.Google ScholarDigital Library

39. Michel Silva, Washington Ramos, Joao Ferreira, Felipe Chamone, Mario Campos, and Erickson R Nascimento. 2018. A weighted sparse sampling and smoothing frame transition approach for semantic fast-forward first-person videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2383–2392.Google ScholarCross Ref

40. V. Sitzmann, J. Thies, F. Heide, M. Nießner, G. Wetzstein, and Zollhöfer. 2019. DeepVoxels: Learning Persistent 3D Feature Embeddings. In Proc. Computer Vision and Pattern Recognition (CVPR), IEEE.Google ScholarCross Ref

41. Pratul P. Srinivasan, Richard Tucker, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng, and Noah Snavely. 2019. Pushing the Boundaries of View Extrapolation with Multiplane Images. In CVPR.Google Scholar

42. Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2019. Deferred neural rendering: image synthesis using neural textures. ACM Trans. Graph. 38, 4 (2019), 66:1–66:12.Google ScholarDigital Library

43. Giorgio Tomasi, Frans Van Den Berg, and Claus Andersson. 2004. Correlation optimized warping and dynamic time warping as preprocessing methods for chromatographic data. Journal of Chemometrics: A Journal of the Chemometrics Society 18, 5 (2004), 231–241.Google ScholarCross Ref

44. Dmitry Ulyanov, Andrea Vedaldi, and Victor Lempitsky. 2018. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 9446–9454.Google Scholar

45. John Wang and Edward Adelson. 1994. Representing Moving Images with Layers. IEEE Trans. on Image Processing (1994).Google ScholarDigital Library

46. Jue Wang, Pravin Bhat, R. Alex Colburn, Maneesh Agrawala, and Michael F. Cohen. 2005. Interactive Video Cutout. TOG (2005).Google Scholar

47. Yonatan Wexler, Eli Shechtman, and Michal Irani. 2007. Space-Time Completion of Video. PAMI (2007).Google Scholar

48. Yuliang Xiu, Jiefeng Li, Haoyu Wang, Yinghong Fang, and Cewu Lu. 2018. Pose Flow: Efficient Online Pose Tracking. In BMVC.Google Scholar

49. Ning Xu, Brian Price, Scott Cohen, and Thomas Huang. 2017. Deep Image Matting. In CVPR.Google Scholar

50. Tianfan Xue, Michael Rubinstein, Ce Liu, and William T. Freeman. 2015. A Computational Approach for Obstruction-Free Photography. ACM Transactions on Graphics (Proc. SIGGRAPH) 34, 4 (2015).Google Scholar

51. Innfarn Yoo, Michel Abdul Massih, Illia Ziamtsov, Raymond Hassan, and Bedrich Benes. 2015. Motion retiming by using bilateral time control surfaces. Computers & Graphics 47 (2015), 59–67.Google ScholarDigital Library

52. Feng Zhou, Sing Bing Kang, and Michael F Cohen. 2014. Time-mapping using spacetime saliency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3358–3365.Google Scholar

53. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo Magnification: Learning View Synthesis using Multiplane Images. In SIGGRAPH.Google ScholarDigital Library

54. Yipin Zhou, Zhaowen Wang, Chen Fang, Trung Bui, and Tamara Berg. 2019. Dance dance generation: Motion transfer for internet videos. In Proceedings of the IEEE International Conference on Computer Vision Workshops. 0–0.Google ScholarCross Ref

55. C. Lawrence Zitnick, Sing Bing Kang, Matthew Uyttendaele, Simon Winder, and Richard Szeliski. 2004. High-quality video view interpolation using a layered representation. TOG.Google Scholar