“MaterialGAN: reflectance capture using a generative SVBRDF model” by Guo, Smith, Hasan, Sunkavalli and Zhao

Session/Category Title: Modeling and Capturing Appearance

Abstract:

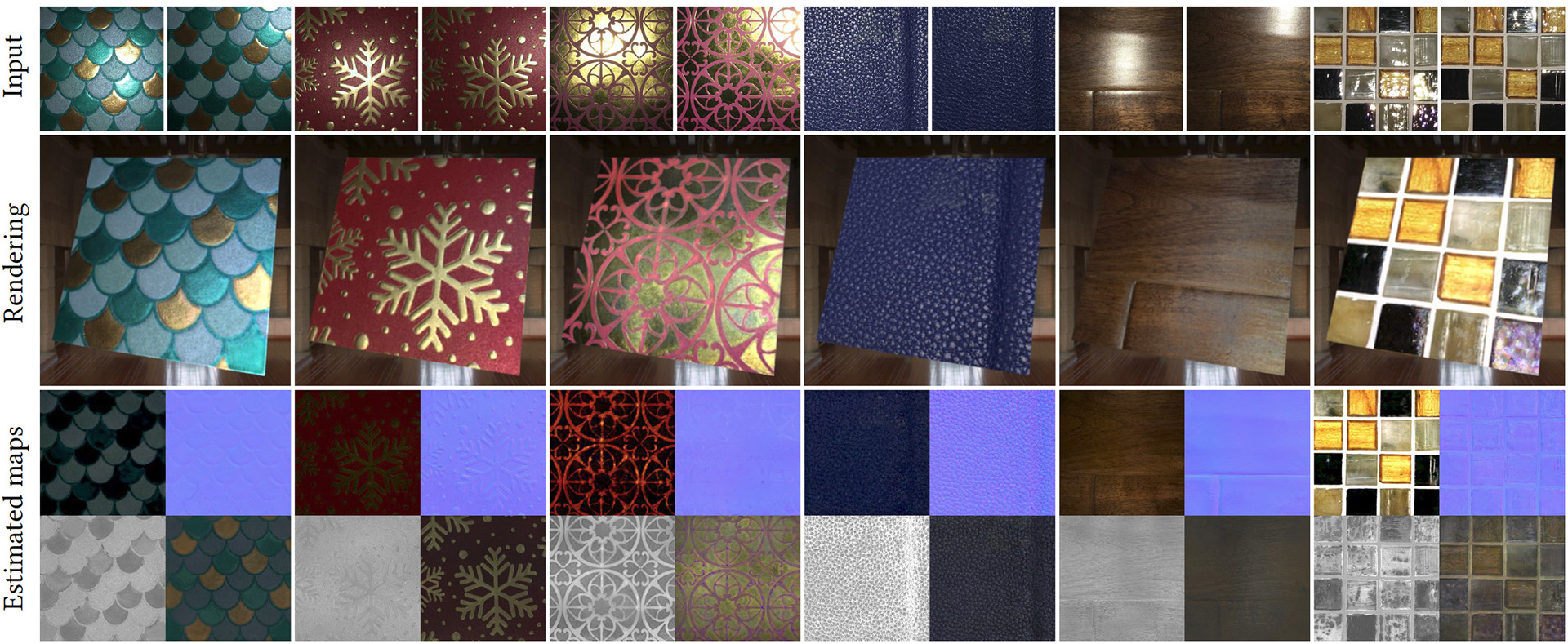

We address the problem of reconstructing spatially-varying BRDFs from a small set of image measurements. This is a fundamentally under-constrained problem, and previous work has relied on using various regularization priors or on capturing many images to produce plausible results. In this work, we present MaterialGAN, a deep generative convolutional network based on StyleGAN2, trained to synthesize realistic SVBRDF parameter maps. We show that MaterialGAN can be used as a powerful material prior in an inverse rendering framework: we optimize in its latent representation to generate material maps that match the appearance of the captured images when rendered. We demonstrate this framework on the task of reconstructing SVBRDFs from images captured under flash illumination using a hand-held mobile phone. Our method succeeds in producing plausible material maps that accurately reproduce the target images, and outperforms previous state-of-the-art material capture methods in evaluations on both synthetic and real data. Furthermore, our GAN-based latent space allows for high-level semantic material editing operations such as generating material variations and material morphing.
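The core idea in the abstract, optimizing a generator's latent code so that the rendered output matches the captured images, can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not the paper's implementation: the "generator" is a fixed linear map followed by a sigmoid rather than a StyleGAN2 network, the "renderer" is a per-texel light scaling rather than a physically based SVBRDF renderer, and the names `generate`, `render`, `fit_latent`, `W` and `LIGHTS` are hypothetical.

```python
import math

# Hypothetical stand-in for a trained generator: a fixed linear map followed
# by a sigmoid, turning a 2-D latent code into 4 "albedo" texels in (0, 1).
W = [[1.0, -0.5], [0.3, 0.8], [-0.7, 0.4], [0.5, 0.5]]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def generate(z):
    """Map a latent code z to a tiny material map (toy generator)."""
    return [sigmoid(sum(w * zj for w, zj in zip(row, z))) for row in W]

# Hypothetical stand-in for a differentiable renderer: each "photo" scales
# the material map by a different per-texel light falloff.
LIGHTS = [[1.0, 0.8, 0.6, 0.4], [0.4, 0.6, 0.8, 1.0]]

def render(maps, light):
    return [m * l for m, l in zip(maps, light)]

def loss_and_grad(z, targets):
    """Squared image-space error and its analytic gradient w.r.t. z."""
    maps = generate(z)
    loss = 0.0
    grad = [0.0] * len(z)
    for light, target in zip(LIGHTS, targets):
        image = render(maps, light)
        for i, (pix, tgt) in enumerate(zip(image, target)):
            residual = pix - tgt
            loss += residual ** 2
            # Chain rule through the renderer (light[i]) and sigmoid (m * (1 - m)).
            dmap = maps[i] * (1.0 - maps[i])
            for j in range(len(z)):
                grad[j] += 2.0 * residual * light[i] * dmap * W[i][j]
    return loss, grad

def fit_latent(targets, steps=2000, lr=0.5):
    """Plain gradient descent on the latent code, starting from zero."""
    z = [0.0, 0.0]
    for _ in range(steps):
        _, grad = loss_and_grad(z, targets)
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z

# Synthesize "captured" images from a known latent code, then recover it.
z_true = [1.2, -0.7]
targets = [render(generate(z_true), light) for light in LIGHTS]
z_fit = fit_latent(targets)
final_loss, _ = loss_and_grad(z_fit, targets)
print("recovered latent:", z_fit, "loss:", final_loss)
```

Because the search runs over the latent code rather than over the texels directly, every candidate solution is by construction an output of the generator, which is the sense in which the generator acts as a prior. In a realistic setting the analytic gradient would be replaced by automatic differentiation through the network and the renderer.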
