“Learned feature embeddings for non-line-of-sight imaging and recognition” by Chen, Wei, Kutulakos, Rusinkiewicz and Heide

Title:

- Learned feature embeddings for non-line-of-sight imaging and recognition

Session/Category Title: Learning New Viewpoints

Abstract:

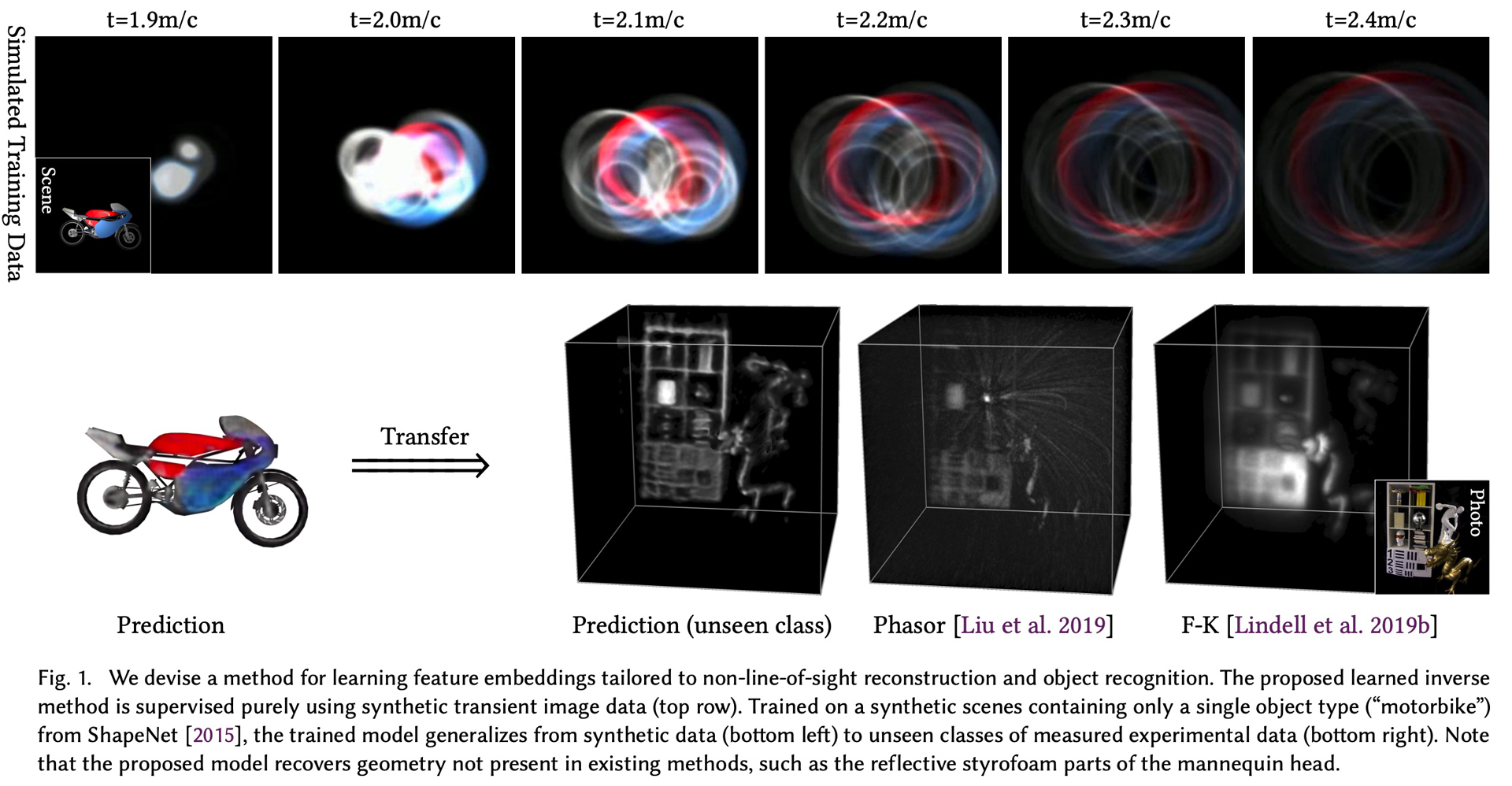

Objects obscured by occluders are considered lost in the images acquired by conventional camera systems, preventing both visualization and understanding of such hidden objects. Non-line-of-sight (NLOS) methods aim to recover information about hidden scenes, which could help make medical imaging less invasive, improve the safety of autonomous vehicles, and potentially enable the capture of unprecedented high-definition RGB-D datasets that include geometry beyond the directly visible parts. Recent NLOS methods have demonstrated scene recovery from time-resolved, pulse-illuminated measurements that encode occluded objects as faint indirect reflections. Unfortunately, these systems are fundamentally limited by the quartic intensity fall-off for diffuse scenes. Because eye-safety limits constrain the laser illumination, recovery algorithms must tackle this challenge by incorporating scene priors. However, existing NLOS reconstruction algorithms do not facilitate learning scene priors. Even if they did, datasets that allow for such supervision do not exist, and encoder-decoder networks and generative adversarial networks that are successful in other domains fail on real-world NLOS data. In this work, we close this gap by learning hidden-scene feature representations tailored to both reconstruction and recognition tasks, such as classification or object detection, while still relying on physical models at the feature level. We overcome the lack of real training data with a generalizable architecture that can be trained in simulation. We learn the differentiable scene representation jointly with the reconstruction task, using a differentiable transient renderer in the objective, and demonstrate that it generalizes to unseen classes and unseen real-world scenes, unlike existing encoder-decoder architectures and generative adversarial networks.
The proposed method allows for end-to-end training for different NLOS tasks, such as image reconstruction, classification, and object detection, while being memory-efficient and running at real-time rates. We demonstrate hidden view synthesis, RGB-D reconstruction, classification, and object detection in the hidden scene in an end-to-end fashion.
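The abstract attributes the weakness of the NLOS signal to a quartic intensity fall-off in time-resolved, pulse-illuminated measurements. As a rough illustration only, the sketch below is a toy confocal forward model built on our own simplifying assumptions: point scatterers, no occlusion between them, no cosine foreshortening or sensor noise, and a laser and detector aimed at the same relay-wall point. The helper name `confocal_transient` and all parameters are hypothetical; this is not the authors' differentiable transient renderer.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def confocal_transient(wall_point, hidden_points, bin_width_s, num_bins):
    """Toy time-resolved histogram at one relay-wall point (hypothetical helper).

    Assumption: confocal setup, so the laser pulse and the time-resolved
    detector are both aimed at `wall_point`. Each hidden point scatters
    diffusely, so light pays one inverse-square loss on the way out
    (wall -> object) and another on the way back (object -> wall),
    giving a 1/r**4 fall-off. Occlusion, cosine terms and noise are ignored.
    """
    histogram = [0.0] * num_bins
    for x, y, z, albedo in hidden_points:
        r = math.dist(wall_point, (x, y, z))  # one-way distance in metres
        t = 2.0 * r / SPEED_OF_LIGHT          # round-trip time of flight in seconds
        bin_index = int(t / bin_width_s)      # time bin in which the return lands
        if 0 <= bin_index < num_bins:
            histogram[bin_index] += albedo / r**4  # quartic intensity fall-off
    return histogram


# Two identical diffuse points, 1 m and 2 m from the wall, with 100 ps bins.
hidden = [(0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 2.0, 1.0)]
hist = confocal_transient((0.0, 0.0, 0.0), hidden, bin_width_s=100e-12, num_bins=200)

# Keep only the non-empty time bins as (bin index, intensity) pairs.
returns = [(i, v) for i, v in enumerate(hist) if v > 0.0]
print(returns)                        # [(66, 1.0), (133, 0.0625)]
print(returns[0][1] / returns[1][1])  # 16.0: doubling the distance costs 2**4
```

Doubling the distance to an otherwise identical hidden point weakens its return by a factor of 16, which is why the abstract argues that measurements alone are insufficient and scene priors are needed. A differentiable renderer of the kind described above would also need gradients of the histogram with respect to scene parameters, which this hard-binned sketch does not provide.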
