“Neural crossbreed: neural based image metamorphosis” by Park, Seo and Noh

Title:

- Neural crossbreed: neural based image metamorphosis

Session/Category Title: Image Synthesis with Generative Models

Presenter(s)/Author(s):

- Park, Seo, and Noh

Abstract:

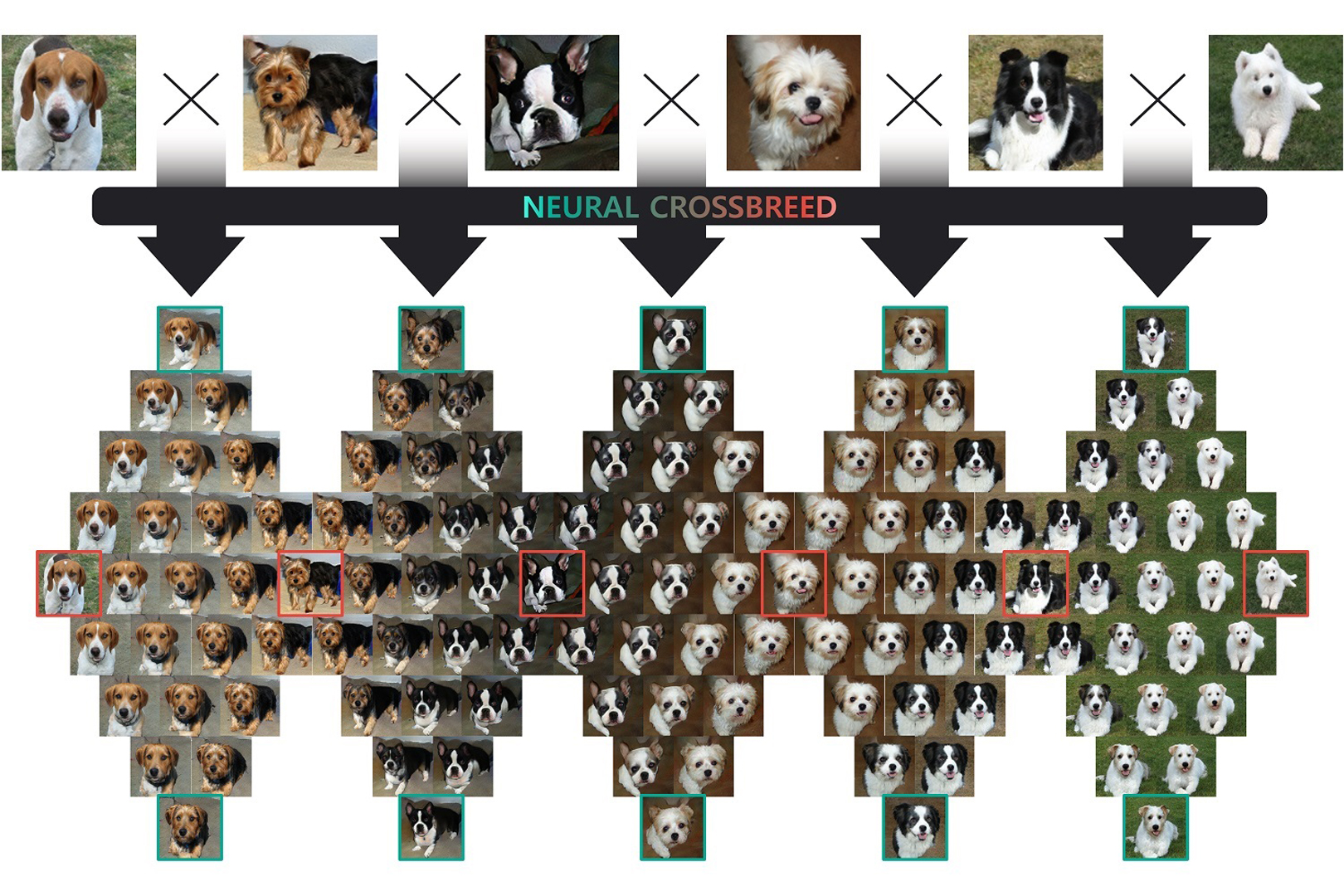

We propose Neural Crossbreed, a feed-forward neural network that learns a semantic change of input images in a latent space to create a morphing effect. Because the network learns a semantic change, it can generate a sequence of meaningful intermediate images without requiring the user to specify explicit correspondences. Learning the semantic change also makes it possible to morph between images that contain objects with significantly different poses or camera views. Furthermore, just as in conventional morphing techniques, our morphing network can handle shape and appearance transitions separately by disentangling the content and style transfer, which broadens its usability. We prepare a training dataset for morphing using a pre-trained BigGAN, which generates an intermediate image by interpolating two latent vectors at an intended morphing value. This is the first attempt to address image morphing using a pre-trained generative model in order to learn semantic transformation. The experiments show that Neural Crossbreed produces high-quality morphed images, overcoming various limitations associated with conventional approaches. In addition, Neural Crossbreed can be extended to diverse applications such as multi-image morphing, appearance transfer, and video frame interpolation.
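The dataset-preparation step in the abstract (interpolating two latent vectors at an intended morphing value and decoding the result) can be illustrated with a minimal sketch. The abstract does not specify the interpolation scheme, so linear interpolation is an assumption here. The names `lerp`, `toy_generator`, and `make_training_triplet` are hypothetical, and `toy_generator` is only a stand-in so the example runs without a pre-trained BigGAN. BigGAN is class-conditional, so a real pipeline would also need to supply a class input, which is omitted.

```python
import math
import random


def lerp(z_a, z_b, alpha):
    """Linear interpolation (an assumed scheme): alpha=0 returns z_a, alpha=1 returns z_b."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(z_a, z_b)]


def toy_generator(z):
    # Hypothetical stand-in for a pre-trained generator such as BigGAN.
    # It maps a latent vector to a tiny 4x4 "image" so the sketch runs anywhere.
    return [[math.tanh(z[i] * z[j]) for j in range(4)] for i in range(4)]


def make_training_triplet(generator, z_a, z_b, alpha):
    """Return the two endpoint images and the intermediate image at morphing value alpha."""
    return generator(z_a), generator(z_b), generator(lerp(z_a, z_b, alpha))


rng = random.Random(0)
z_a = [rng.gauss(0.0, 1.0) for _ in range(128)]  # latent dimension is an assumption
z_b = [rng.gauss(0.0, 1.0) for _ in range(128)]
alpha = rng.uniform(0.0, 1.0)  # the intended morphing value
x_a, x_b, x_alpha = make_training_triplet(toy_generator, z_a, z_b, alpha)
```

Repeating this for many latent pairs and morphing values would yield (input pair, morphing value, target image) samples of the kind a feed-forward morphing network could be trained on.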
