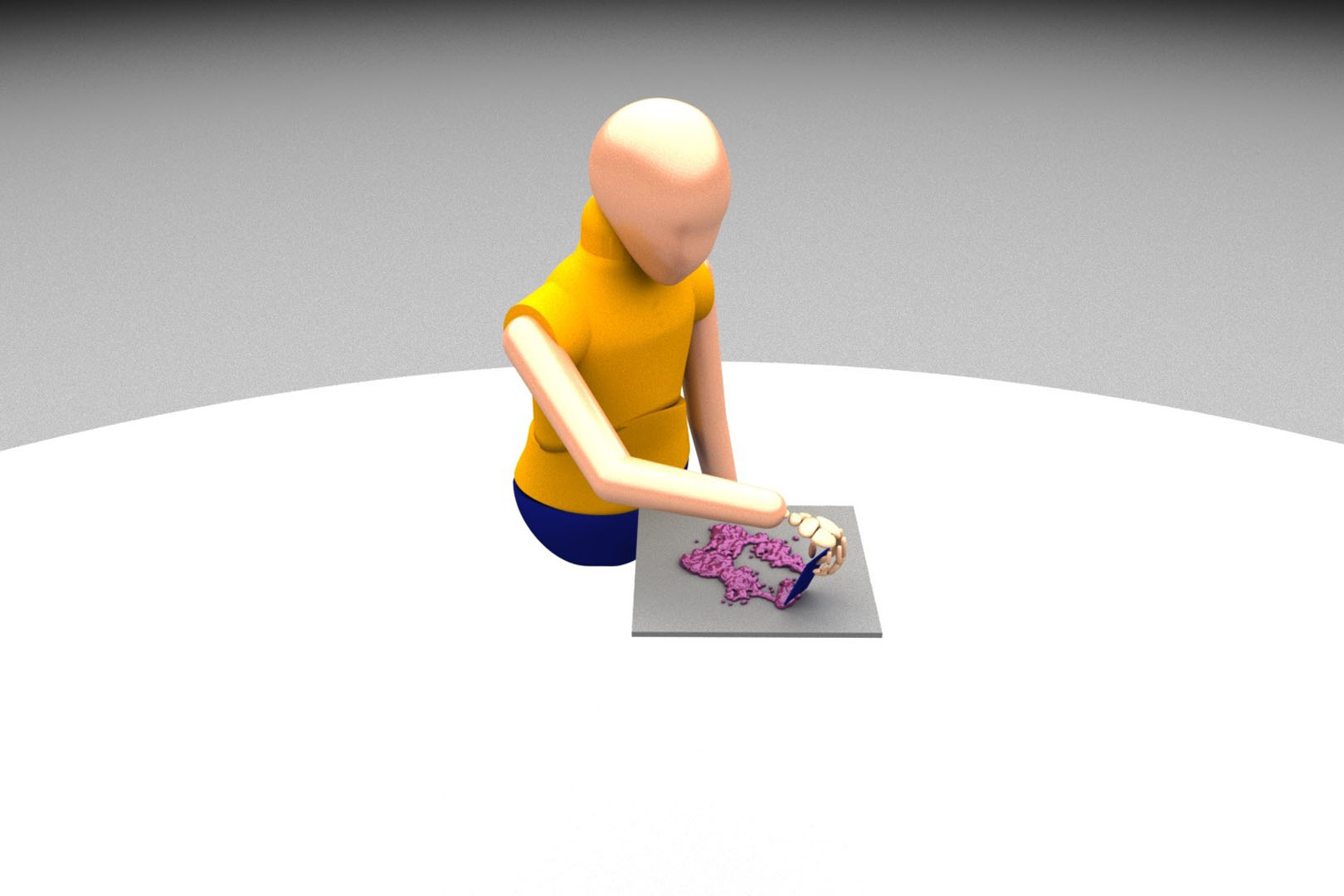

“Learning to manipulate amorphous materials” by Zhang, Yu, Liu, Kemp and Turk

Session/Category Title: Computational Robotics

Abstract:

We present a method for training characters to manipulate amorphous materials, such as those often used in cooking. Common examples of amorphous materials include granular materials (salt, uncooked rice), fluids (honey), and visco-plastic materials (sticky rice, softened butter). A typical task is to spread a given material across a flat surface using a tool such as a scraper or knife. We use reinforcement learning to train our controllers to manipulate materials in various ways. Training is performed in a physics simulator that uses position-based dynamics of particles to simulate the materials being manipulated. The neural network control policy receives observations of the material (e.g., a low-resolution density map) and outputs actions such as rotating and translating the knife. We demonstrate policies that were successfully trained to carry out three tasks: spreading, gathering, and flipping. We produce the final animation by using inverse kinematics to guide a character’s arm and hand to match the motion of the manipulation tool, such as a knife or a frying pan.
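The abstract says the policy observes the material through a low-resolution density map. As a hedged illustration only, the sketch below shows one plausible way to build such a map from 2D particle positions (for instance, particles projected onto a cutting board). The function name `density_map`, the grid size, and the choice to normalize by the total particle count are assumptions, not details taken from the paper.

```python
from typing import List, Sequence, Tuple


def density_map(
    particles: Sequence[Tuple[float, float]],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    resolution: int,
) -> List[List[float]]:
    """Bin 2D particle positions into a resolution x resolution grid.

    Hypothetical helper (assumption): each cell holds the fraction of *all*
    particles inside it, so material pushed outside the observed region
    lowers the grid's total mass.
    """
    grid = [[0.0] * resolution for _ in range(resolution)]
    if not particles:
        return grid
    x0, x1 = x_range
    y0, y1 = y_range
    weight = 1.0 / len(particles)
    for x, y in particles:
        # Ignore particles outside the observed rectangle (e.g. off the board).
        if not (x0 <= x < x1 and y0 <= y < y1):
            continue
        # Map coordinates to cell indices; min() guards against float round-up.
        row = min(int((y - y0) / (y1 - y0) * resolution), resolution - 1)
        col = min(int((x - x0) / (x1 - x0) * resolution), resolution - 1)
        grid[row][col] += weight
    return grid


if __name__ == "__main__":
    pts = [(0.1, 0.1), (0.1, 0.2), (0.9, 0.9), (2.0, 2.0)]
    print(density_map(pts, (0.0, 1.0), (0.0, 1.0), resolution=2))
    # prints [[0.5, 0.0], [0.0, 0.25]]
```

Normalizing by the total count rather than by the in-bounds count is one design choice among several; it lets the observation reveal spilled material, which may matter for a spreading or gathering task.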
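The simulator is described only as using position-based dynamics (PBD) of particles. The sketch below is a generic, textbook-style PBD step in 2D. It assumes equal-mass particles, a ground plane at y = 0, and a simple pairwise non-penetration constraint. It is not the authors' simulator and omits friction, cohesion, viscosity, and tool collisions, all of which materials such as honey or sticky rice would need. `pbd_step` and its parameters are hypothetical.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def pbd_step(
    pos: List[Vec2],
    vel: List[Vec2],
    dt: float,
    radius: float,
    gravity: float = -9.8,
    iterations: int = 4,
) -> Tuple[List[Vec2], List[Vec2]]:
    """Advance equal-mass 2D particles by one PBD step (illustrative assumption)."""
    # 1. Apply gravity to velocities, then predict positions explicitly.
    v = [(vx, vy + dt * gravity) for vx, vy in vel]
    p = [[x + dt * vx, y + dt * vy] for (x, y), (vx, vy) in zip(pos, v)]
    min_dist = 2.0 * radius

    # 2. Repeatedly project the predicted positions onto the constraints.
    for _ in range(iterations):
        # Ground constraint: keep each particle's center at least `radius` above y = 0.
        for q in p:
            if q[1] < radius:
                q[1] = radius
        # Non-penetration constraint: push each overlapping pair apart equally.
        for a in range(len(p)):
            for b in range(a + 1, len(p)):
                dx = p[b][0] - p[a][0]
                dy = p[b][1] - p[a][1]
                d = math.hypot(dx, dy)
                if 0.0 < d < min_dist:
                    s = 0.5 * (min_dist - d) / d
                    p[a][0] -= s * dx
                    p[a][1] -= s * dy
                    p[b][0] += s * dx
                    p[b][1] += s * dy

    # 3. Recover velocities from the corrected displacement, then commit positions.
    new_vel = [((q[0] - x) / dt, (q[1] - y) / dt) for q, (x, y) in zip(p, pos)]
    new_pos = [(q[0], q[1]) for q in p]
    return new_pos, new_vel
```

The defining PBD idea shown here is step 3: velocities are derived from how far the constraint projection moved each particle, rather than from integrated forces. The pairwise loop is O(n²) for clarity; practical particle solvers typically use a spatial grid or hash for neighbor search.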
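The final animation uses inverse kinematics to make the character's arm and hand follow the tool. As a deliberately simplified stand-in, the sketch below solves analytic IK for a planar two-link arm (upper arm and forearm) so that the wrist reaches a given tool-handle position. A full character rig would also need 3D joints, hand orientation, and joint limits. The function names, the planar model, and the choice of elbow branch are assumptions for illustration, not the authors' IK solver.

```python
import math
from typing import Tuple


def two_link_ik(target: Tuple[float, float], l1: float, l2: float) -> Tuple[float, float]:
    """Return (shoulder, elbow) angles in radians placing the wrist at `target`.

    Hypothetical planar helper (assumption). It picks one of the two mirror-image
    solutions; unreachable targets are clamped so the arm is fully stretched
    (or fully folded) toward them.
    """
    x, y = target
    # Law of cosines gives the elbow angle; clamp to [-1, 1] for unreachable targets.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    c2 = max(-1.0, min(1.0, c2))
    elbow = math.acos(c2)
    # Shoulder = direction to target minus the angular offset caused by the elbow bend.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward_kinematics(shoulder: float, elbow: float, l1: float, l2: float) -> Tuple[float, float]:
    """Wrist position for the given joint angles (used to check the IK result)."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

In use, one would call `two_link_ik` once per animation frame with the tool-handle position produced by the trained policy, then verify the pose with `forward_kinematics`. An analytic solver is shown only because it is short and exact for two links; rigs with more joints usually rely on iterative IK methods.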
