“Language-based colorization of scene sketches” by Zou, Mo, Gao, Du and Fu

Conference:

Type(s):

Title:

- Language-based colorization of scene sketches

Session/Category Title: Hairy & Sketchy Geometry

Presenter(s)/Author(s):

Moderator(s):

Abstract:

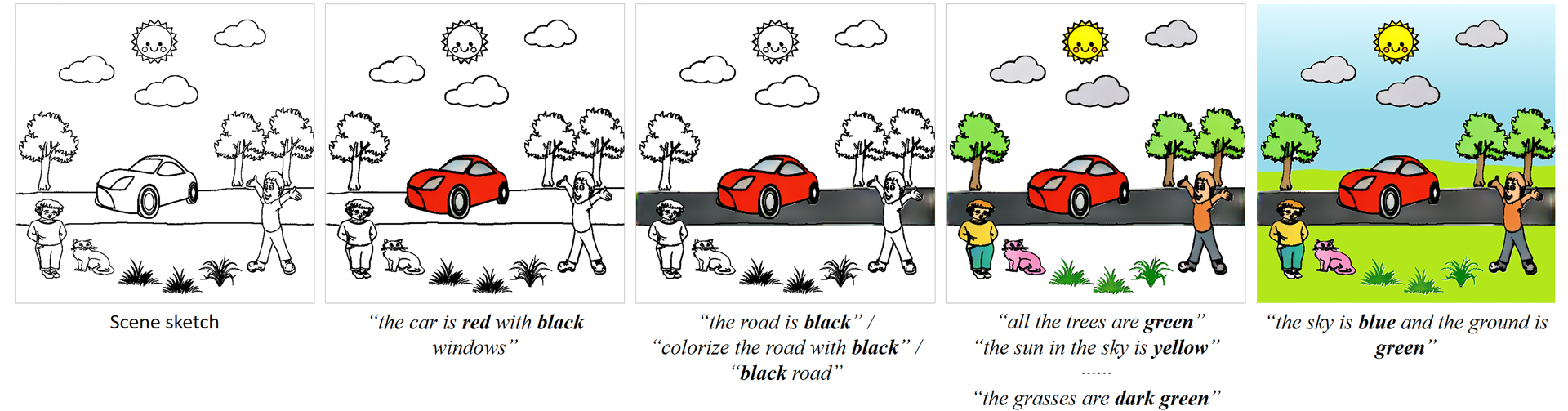

Being natural, touchless, and fun-embracing, language-based inputs have been demonstrated effective for various tasks from image generation to literacy education for children. This paper for the first time presents a language-based system for interactive colorization of scene sketches, based on semantic comprehension. The proposed system is built upon deep neural networks trained on a large-scale repository of scene sketches and cartoonstyle color images with text descriptions. Given a scene sketch, our system allows users, via language-based instructions, to interactively localize and colorize specific foreground object instances to meet various colorization requirements in a progressive way. We demonstrate the effectiveness of our approach via comprehensive experimental results including alternative studies, comparison with the state-of-the-art methods, and generalization user studies. Given the unique characteristics of language-based inputs, we envision a combination of our interface with a traditional scribble-based interface for a practical multimodal colorization system, benefiting various applications. The dataset and source code can be found at https://github.com/SketchyScene/SketchySceneColorization.

References:

1. Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. 2017. SegNet: a Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Transactions on Pattern Analysis & Machine Intelligence 12 (2017), 2481–2495.Google ScholarCross Ref

2. Hyojin Bahng, Seungjoo Yoo, Wonwoong Cho, David Keetae Park, Ziming Wu, Xiaojuan Ma, and Jaegul Choo. 2018. Coloring with words: Guiding image colorization through text-based palette generation. In ECCV. 431–447.Google Scholar

3. Huiwen Chang, Ohad Fried, Yiming Liu, Stephen DiVerdi, and Adam Finkelstein. 2015. Palette-Based Photo Recoloring. ACM Transactions on Graphics 34, 4 (2015), 139.Google ScholarDigital Library

4. Jianbo Chen, Yelong Shen, Jianfeng Gao, Jingjing Liu, and Xiaodong Liu. 2018b. Language-Based Image Editing With Recurrent Attentive Models. In CVPR. IEEE.Google Scholar

5. Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. 2018a. Deeplab: Semantic Image Segmentation With Deep Convolutional Nets, Atrous Convolution, and Fully Connected Crfs. IEEE Transactions on Pattern Analysis and Machine Intelligence 40, 4 (2018), 834–848.Google ScholarCross Ref

6. Liang-Chieh Chen, Yukun Zhu,George Papandreou, Florian Schroff, and Hartwig Adam. 2018c. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In ECCV.Google Scholar

7. Wengling Chen and James Hays. 2018. Sketchygan: Towards diverse and realistic sketch to image synthesis. In CVPR. 9416–9425.Google Scholar

8. Ming-Ming Cheng, Shuai Zheng, Wen-Yan Lin, Vibhav Vineet, Paul Sturgess, Nigel Crook, Niloy J Mitra, and Philip Torr. 2014. ImageSpirit: Verbal guided image parsing. ACM Transactions on Graphics (TOG) 34, 1 (2014), 3.Google ScholarDigital Library

9. Yuanzheng Ci, Xinzhu Ma, Zhihui Wang, Haojie Li, and Zhongxuan Luo. 2018. User-Guided Deep Anime Line Art Colorization With Conditional Adversarial Networks. In ACM Multimedia. 1536–1544.Google Scholar

10. Faming Fang, Tingting Wang, Tieyong Zeng, and Guixu Zhang. 2019. A Superpixel-based Variational Model for Image Colorization. IEEE Transactions on Visualization and Computer Graphics (2019).Google Scholar

11. Chie Furusawa, Kazuyuki Hiroshiba, Keisuke Ogaki, and Yuri Odagiri. 2017. Comicolorization: semi-automatic manga colorization. In SIGGRAPH Asia 2017 Technical Briefs. ACM, 12.Google Scholar

12. Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. 2017. Mask r-cnn. In ICCV. 2961–2969.Google Scholar

13. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In CVPR. 770–778.Google Scholar

14. Mingming He, Dongdong Chen, Jing Liao, Pedro V Sander, and Lu Yuan. 2018. Deep Exemplar-Based Colorization. ACM Transactions on Graphics 37, 4 (2018), 47.Google ScholarDigital Library

15. Ronghang Hu, Marcus Rohrbach, and Trevor Darrell. 2016a. Segmentation From Natural Language Expressions. In ECCV. Springer, 108–124.Google Scholar

16. Ronghang Hu, Huazhe Xu, Marcus Rohrbach, Jiashi Feng, Kate Saenko, and Trevor Darrell. 2016b. Natural Language Object Retrieval. In CVPR. 4555–4564.Google Scholar

17. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In CVPR. 1125–1134.Google Scholar

18. Hyunhoon Jung, Hee Jae Kim, Seongeun So, Jinjoong Kim, and Changhoon Oh. 2019. TurtleTalk: An Educational Programming Game for Children with Voice User Interface. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (CHI EA ’19).Google ScholarDigital Library

19. Yoon Kim. 2014. Convolutional neural networks for sentence classification. arXiv preprint arXiv:1408.5882 (2014).Google Scholar

20. Siwei Lai, Liheng Xu, Kang Liu, and Jun Zhao. 2015. Recurrent convolutional neural networks for text classification. In AAAI.Google Scholar

21. Guillaume Lample, Miguel Ballesteros, Sandeep Subramanian, Kazuya Kawakami, and Chris Dyer. 2016. Neural architectures for named entity recognition. arXiv preprint arXiv:1603.01360 (2016).Google Scholar

22. Gierad P Laput, Mira Dontcheva, Gregg Wilensky, Walter Chang, Aseem Agarwala, Jason Linder, and Eytan Adar. 2013. Pixeltone: A multimodal interface for image editing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 2185–2194.Google ScholarDigital Library

23. Jianan Li, Yunchao Wei, Xiaodan Liang, Fang Zhao, Jianshu Li, Tingfa Xu, and Jiashi Feng. 2017. Deep attribute-preserving metric learning for natural language object retrieval. In ACM Multimedia. 181–189.Google Scholar

24. Mengtian Li, Zhe Lin, Radomir Mech, Ersin Yumer, and Deva Ramanan. 2019. Photo-Sketching: Inferring Contour Drawings from Images. In WACV. IEEE, 1403–1412.Google Scholar

25. Ruiyu Li, Kai-Can Li, Yi-Chun Kuo, Michelle Shu, Xiaojuan Qi, Xiaoyong Shen, and Jiaya Jia. 2018. Referring Image Segmentation via Recurrent Refinement Networks. In CVPR. 5745–5753.Google Scholar

26. Chenxi Liu, Zhe Lin, Xiaohui Shen, Jimei Yang, Xin Lu, and Alan Yuille. 2017a. Recurrent Multimodal Interaction for Referring Image Segmentation. In ICCV. IEEE.Google Scholar

27. Yifan Liu, Zengchang Qin, Zhenbo Luo, and Hua Wang. 2017b. Auto-Painter: Cartoon Image Generation From Sketch by Using Conditional Generative Adversarial Networks. ArXiv Preprint ArXiv:1705.01908 (2017).Google Scholar

28. Jonathan Long, Evan Shelhamer, and Trevor Darrell. 2015. Fully convolutional networks for semantic segmentation. In CVPR. 3431–3440.Google Scholar

29. Silvia Lovato and Anne Marie Piper. 2015. “Siri, is This You?”: Understanding Young Children’s Interactions with Voice Input Systems. In Proceedings of the 14th International Conference on Interaction Design and Children (IDC ’15). 335–338.Google ScholarDigital Library

30. Jiasen Lu, Jianwei Yang, Dhruv Batra, and Devi Parikh. 2016. Hierarchical Question-Image Co-Attention for Visual Question Answering. In NIPS. 289–297.Google Scholar

31. Junhua Mao, Jonathan Huang, Alexander Toshev, Oana Camburu, Alan L. Yuille, and Kevin Murphy. 2016. Generation and Comprehension of Unambiguous Object Descriptions. In CVPR. 11–20.Google Scholar

32. George A Miller. 1995. WordNet: a lexical database for English. Commun. ACM 38, 11 (1995), 39–41.Google ScholarDigital Library

33. Will Monroe, Noah D. Goodman, and Christopher Potts. 2016. Learning to Generate Compositional Color Descriptions. In EMNLP.Google Scholar

34. Will Monroe, Robert XD Hawkins, Noah D Goodman, and Christopher Potts. 2017. Colors in context: A pragmatic neural model for grounded language understanding. Transactions of the Association for Computational Linguistics 5 (2017), 325–338.Google ScholarCross Ref

35. Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu. 2019. Semantic image synthesis with spatially-adaptive normalization. In CVPR. 2337–2346.Google Scholar

36. Yingge Qu, Tien-Tsin Wong, and Pheng-Ann Heng. 2006. Manga colorization. In ACM Transactions on Graphics (TOG), Vol. 25. 1214–1220.Google ScholarDigital Library

37. Hayes Raffle, Cati Vaucelle, Ruibing Wang, and Hiroshi Ishii. 2007. Jabberstamp: Embedding Sound and Voice in Traditional Drawings. In Proceedings of the 6th International Conference on Interaction Design and Children (IDC ’07). 137–144.Google ScholarDigital Library

38. Patsorn Sangkloy, Nathan Burnell, Cusuh Ham, and James Hays. 2016. The Sketchy Database: Learning to Retrieve Badly Drawn Bunnies. ACM Transactions on Graphics (proceedings of SIGGRAPH) (2016).Google ScholarDigital Library

39. Patsorn Sangkloy, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. 2017. Scribbler: Controlling deep image synthesis with sketch and color. In CVPR. 5400–5409.Google Scholar

40. Hengcan Shi, Hongliang Li, Fanman Meng, and Qingbo Wu. 2018. Key-Word-Aware Network for Referring Expression Image Segmentation. In ECCV. 38–54.Google Scholar

41. Dong Wang, Changqing Zou, Guiqing Li, Chengying Gao, Zhuo Su, and Ping Tan. 2017. L0 Gradient-Preserving Color Transfer. Comput. Graph. Forum 36, 7 (2017), 93–103.Google ScholarCross Ref

42. Holger Winnemöller. 2011. Xdog: advanced image stylization with extended difference-of-gaussians. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Non-Photorealistic Animation and Rendering. 147–156.Google ScholarDigital Library

43. Chufeng Xiao, Chu Han, Zhuming Zhang, Jing Qin, Tien-Tsin Wong, Guoqiang Han, and Shengfeng He. 2019a. Example-Based Colourization Via Dense Encoding Pyramids. In Computer Graphics Forum. Wiley Online Library.Google Scholar

44. Yi Xiao, Peiyao Zhou, Yan Zheng, and Chi-Sing Leung. 2019b. Interactive Deep Colorization Using Simultaneous Global and Local Inputs. In ICASSP. IEEE, 1887–1891.Google Scholar

45. Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, and Xiaodong He. 2018. Attngan: Fine-grained text to image generation with attentional generative adversarial networks. In CVPR. 1316–1324.Google Scholar

46. Xinchen Yan, Jimei Yang, Kihyuk Sohn, and Honglak Lee. 2016. Attribute2image: Conditional image generation from visual attributes. In ECCV. Springer, 776–791.Google Scholar

47. Taizan Yonetsuji. 2017. Paints Chainer. https://github.com/pfnet/PaintsChainer. (2017).Google Scholar

48. Dongfei Yu, Jianlong Fu, Tao Mei, and Yong Rui. 2017. Multi-level Attention Networks for Visual Question Answering. In CVPR.Google Scholar

49. Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, and Dimitris N Metaxas. 2017a. Stackgan: Text to photo-realistic image synthesis with stacked generative adversarial networks. In ICCV. 5907–5915.Google Scholar

50. Lvmin Zhang, Chengze Li, Tien-Tsin Wong, Yi Ji, and Chunping Liu. 2018. Two-stage sketch colorization. In SIGGRAPH Asia 2018 Technical Papers. ACM, 261.Google Scholar

51. Richard Zhang, Jun-Yan Zhu, Phillip Isola, Xinyang Geng, Angela S Lin, Tianhe Yu, and Alexei A Efros. 2017b. Real-Time User-Guided Image Colorization With Learned Deep Priors. ACM Transactions on Graphics (TOG) 9, 4 (2017).Google Scholar

52. Changqing Zou, Qian Yu, Ruofei Du, Haoran Mo, Yi-Zhe Song, Tao Xiang, Chengying Gao, Baoquan Chen, and Hao Zhang. 2018. SketchyScene: Richly-Annotated Scene Sketches. In ECCV. Springer, 438–454.Google Scholar