“Multi-theme generative adversarial terrain amplification” by Zhao, Liu, Borovikov, Beirami, Sanjabi, et al. …

Title:

- Multi-theme generative adversarial terrain amplification

Session/Category Title: Geometry with Style

Abstract:

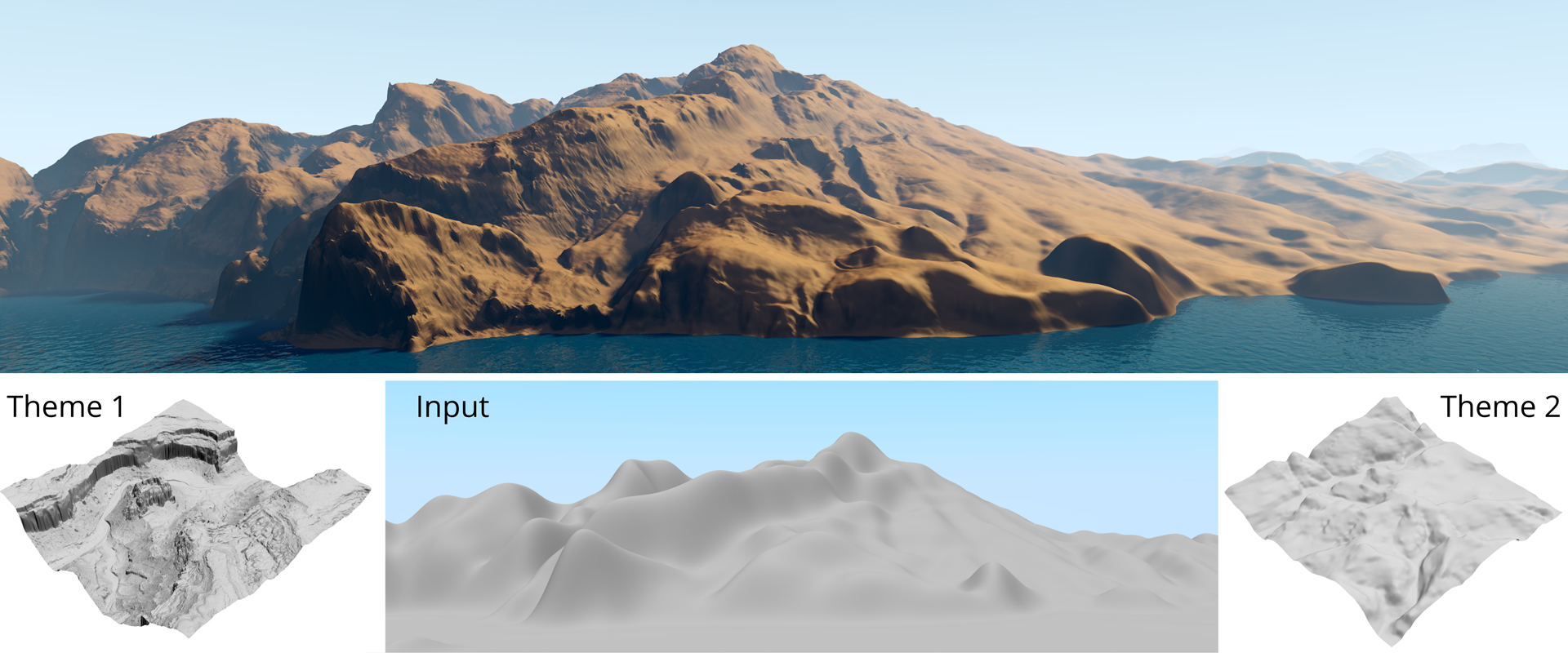

Achieving highly detailed terrain models spanning vast areas is crucial to modern computer graphics. The pipeline for obtaining such terrains is via amplification of a low-resolution terrain to refine the details given a desired theme, which is a time-consuming and labor-intensive process. Recently, data-driven methods, such as the sparse construction tree, have provided a promising direction to equip the artist with better control over the theme. These methods learn to amplify terrain details by using an exemplar of high-resolution detailed terrains to transfer the theme. In this paper, we propose Generative Adversarial Terrain Amplification (GATA) that achieves better local/global coherence compared to the existing data-driven methods while providing even more ways to control the theme. GATA is comprised of two key ingredients. The first one is a novel embedding of themes into vectors of real numbers to achieve a single tool for multi-theme amplification. The theme component can leverage existing LIDAR data to generate similar terrain features. It can also generate new fictional themes by tuning the embedding vector or even encoding a new example terrain into an embedding. The second one is an adversarially trained model that, conditioned on an embedding and a low-resolution terrain, generates a high-resolution terrain adhering to the desired theme. The proposed integral approach reduces the need for unnecessary manual adjustments, can speed up the development, and brings the model quality to a new level. Our implementation of the proposed method has proved successful in large-scale terrain authoring for an open-world game.
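To make the two ingredients concrete, the following is a minimal sketch of how a theme embedding might be tuned and attached to a low-resolution height map before it reaches a generator. It is not the paper's implementation: the theme names, the 4-dimensional vectors, the linear blend, and the channel-stacking scheme (`THEMES`, `blend_themes`, `build_generator_input`) are all assumptions chosen for illustration, and the adversarially trained generator itself is omitted.

```python
from typing import Dict, List

Vector = List[float]
Grid = List[List[float]]

# Hypothetical theme table: names and values are invented for illustration.
THEMES: Dict[str, Vector] = {
    "alpine": [1.0, 0.0, 0.5, -1.0],
    "desert": [0.0, 1.0, -0.5, 1.0],
}


def blend_themes(a: Vector, b: Vector, t: float) -> Vector:
    """Linearly interpolate two theme embeddings; t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def build_generator_input(low_res: Grid, theme: Vector) -> List[Grid]:
    """Stack the height map with one constant channel per embedding entry.

    This is one common way to condition a convolutional model on a vector;
    it is assumed here, not taken from the paper.
    """
    rows, cols = len(low_res), len(low_res[0])
    channels = [[row[:] for row in low_res]]  # channel 0: copy of the heights
    for value in theme:
        channels.append([[value] * cols for _ in range(rows)])
    return channels


# A 2x2 low-resolution height map and a "fictional" theme halfway
# between the two hypothetical themes.
low_res = [[0.0, 0.25], [0.5, 1.0]]
fictional = blend_themes(THEMES["alpine"], THEMES["desert"], 0.5)
conditioned = build_generator_input(low_res, fictional)
print(len(conditioned), fictional)
```

Under these assumptions, the generator would receive one height channel plus one constant channel per embedding dimension, so changing the blend weight `t` changes the theme without touching the input terrain.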
