“Comic-guided speech synthesis” by Wang, Wang, Liang and Yu

Conference:

Type(s):

Title:

- Comic-guided speech synthesis

Session/Category Title: Synthesis in the Arvo

Presenter(s)/Author(s):

Moderator(s):

Abstract:

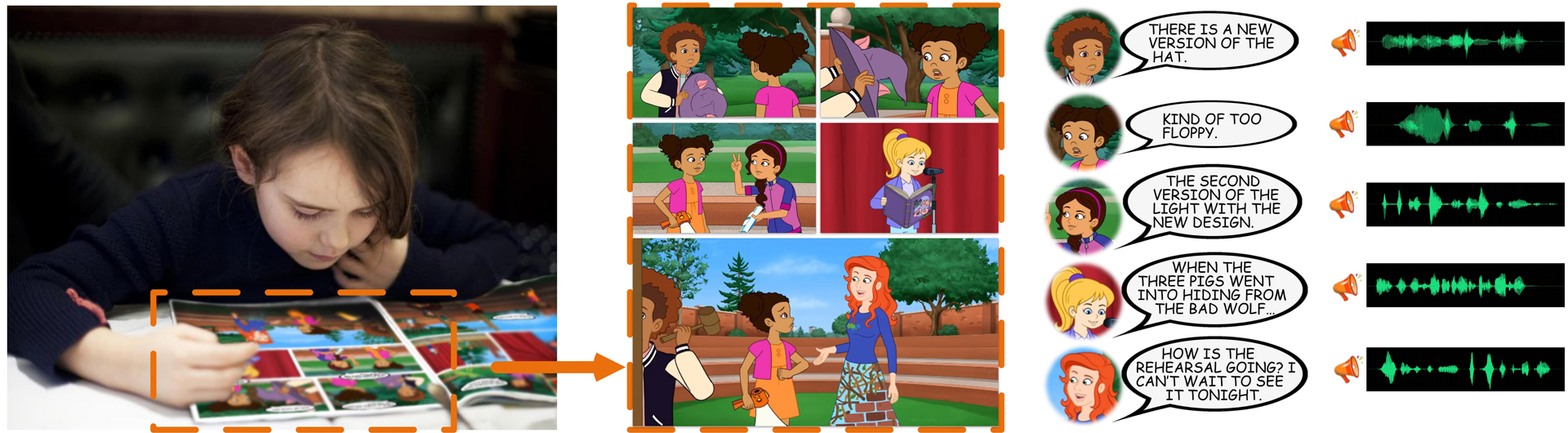

We introduce a novel approach for synthesizing realistic speeches for comics. Using a comic page as input, our approach synthesizes speeches for each comic character following the reading flow. It adopts a cascading strategy to synthesize speeches in two stages: Comic Visual Analysis and Comic Speech Synthesis. In the first stage, the input comic page is analyzed to identify the gender and age of the characters, as well as texts each character speaks and corresponding emotion. Guided by this analysis, in the second stage, our approach synthesizes realistic speeches for each character, which are consistent with the visual observations. Our experiments show that the proposed approach can synthesize realistic and lively speeches for different types of comics. Perceptual studies performed on the synthesis results of multiple sample comics validate the efficacy of our approach.

References:

1. Waleed Abdulla. 2017. Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow. https://github.com/matterport/Mask_RCNN.Google Scholar

2. Olivier Augereau, Motoi Iwata, and Koichi Kise. 2018. A survey of comics research in computer science. Journal of Imaging 4, 7 (2018), 87.Google ScholarCross Ref

3. Rainer Banse and Klaus R Scherer. 1996. Acoustic profiles in vocal emotion expression. Journal of personality and social psychology 70, 3 (1996), 614.Google ScholarCross Ref

4. Pascal Belin, Patricia EG Bestelmeyer, Marianne Latinus, and Rebecca Watson. 2011. Understanding voice perception. British Journal of Psychology 102, 4 (2011), 711–725.Google ScholarCross Ref

5. Pascal Belin, Shirley Fecteau, and Catherine Bedard. 2004. Thinking the voice: neural correlates of voice perception. Trends in cognitive sciences 8, 3 (2004), 129–135.Google Scholar

6. P. Praat Boersma. 2002. A System for Doing Phonetics by Computer. Glot International 5, 9/10 (2002), 341–345.Google Scholar

7. Vicki Bruce and Andy Young. 1986. Understanding face recognition. British Journal of Psychology 77, 3 (1986), 305–327.Google ScholarCross Ref

8. Salvatore Campanella and Pascal Belin. 2007. Integrating face and voice in person perception. Trends in cognitive sciences 11, 12 (2007), 535–543.Google Scholar

9. Chen Cao, Hongzhi Wu, Yanlin Weng, Tianjia Shao, and Kun Zhou. 2016. Real-time facial animation with image-based dynamic avatars. TOG 35, 4 (2016), 126.Google ScholarDigital Library

10. Ying Cao, Antoni B. Chan, and Rynson W. H. Lau. 2012. Automatic stylistic manga layout. TOG 31, 6 (2012), 1–10.Google ScholarDigital Library

11. Ying Cao, Rynson W. H. Lau, and Antoni B. Chan. 2014. Look over here:attention-directing composition of manga elements. TOG 33, 4 (2014), 1–11.Google Scholar

12. Wei-Ta Chu and Wei-Wei Li. 2017. Manga facenet: Face detection in manga based on deep neural network. In ICMR. ACM, 412–415.Google Scholar

13. Wei-Ta Chu and Wei-Wei Li. 2019. Manga face detection based on deep neural networks fusing global and local information. Pattern Recognition 86 (2019), 62–72.Google ScholarCross Ref

14. K Dimos, L Dick, and V Dellwo. 2015. Perception of levels of emotion in speech prosody. The Scottish Consortium for ICPhS (2015).Google Scholar

15. Alexander Dunst, Jochen Laubrock, and Janina Wildfeuer. 2018. Empirical Comics Research: Digital, Multimodal, and Cognitive Methods. Routledge.Google Scholar

16. Marek Dvorožnák, Wilmot Li, Vladimir G Kim, and Daniel Sỳkora. 2018. Toonsynth: example-based synthesis of hand-colored cartoon animations. TOG 37, 4 (2018), 167.Google ScholarDigital Library

17. Jesse Engel, Cinjon Resnick, Adam Roberts, Sander Dieleman, Mohammad Norouzi, Douglas Eck, and Karen Simonyan. 2017. Neural Audio Synthesis of Musical Notes with WaveNet Autoencoders. In ICML. 1068–1077.Google Scholar

18. Haytham M Fayek, Margaret Lech, and Lawrence Cavedon. 2017. Evaluating deep learning architectures for Speech Emotion Recognition. Neural Networks 92 (2017), 60–68.Google ScholarCross Ref

19. Bjarke Felbo, Alan Mislove, Anders Søgaard, Iyad Rahwan, and Sune Lehmann. 2017. Using millions of emoji occurrences to learn any-domain representations for detecting sentiment, emotion and sarcasm. In Conference on Emirical Methods in Natural Language Processing.Google ScholarCross Ref

20. Adam Finkelstein, Adam Finkelstein, Adam Finkelstein, Adam Finkelstein, and Adam Finkelstein. 2017. VoCo: text-based insertion and replacement in audio narration. TOG 36, 4 (2017), 96.Google Scholar

21. Brendan J Frey and Delbert Dueck. 2007. Clustering by passing messages between data points. science 315, 5814 (2007), 972–976.Google Scholar

22. Aviv Gabbay, Asaph Shamir, and Shmuel Peleg. 2018. Visual Speech Enhancement. In Interspeech. 1170–1174.Google Scholar

23. Asif A Ghazanfar and Drew Rendall. 2008. Evolution of human vocal production. Current Biology 18, 11 (2008), R457–R460.Google ScholarCross Ref

24. Ankush Gupta, Andrea Vedaldi, and Andrew Zisserman. 2016. Synthetic data for text localisation in natural images. In CVPR. 2315–2324.Google Scholar

25. Raia Hadsell, Sumit Chopra, and Yann LeCun. 2006. Dimensionality reduction by learning an invariant mapping. In CVPR, Vol. 2. IEEE, 1735–1742.Google ScholarDigital Library

26. W Keith Hastings. 1970. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 1 (1970), 97–109.Google ScholarCross Ref

27. Iben Have and Birgitte Stougaard Pedersen. 2013. Sonic mediatization of the book: affordances of the audiobook. MedieKultur: Journal of media and communication research 29, 54 (2013), 18-p.Google Scholar

28. Liwen Hu, Shunsuke Saito, Lingyu Wei, Koki Nagano, Jaewoo Seo, Jens Fursund, Iman Sadeghi, Carrie Sun, Yen-Chun Chen, and Hao Li. 2017. Avatar digitization from a single image for real-time rendering. TOG 36, 6 (2017), 195.Google ScholarDigital Library

29. Andrew J Hunt and Alan W Black. 1996. Unit selection in a concatenative speech synthesis system using a large speech database. In ICASSP, Vol. 1. 373–376.Google Scholar

30. Nal Kalchbrenner, Erich Elsen, Karen Simonyan, Seb Noury, Norman Casagrande, Edward Lockhart, Florian Stimberg, Aaron van den Oord, Sander Dieleman, and Koray Kavukcuoglu. 2018. Efficient Neural Audio Synthesis. In ICML, Vol. 80. 2410–2419.Google Scholar

31. Miyuki Kamachi, Harold Hill, Karen Lander, and Eric Vatikiotis-Bateson. 2003. Putting the face to the voice’: Matching identity across modality. Current Biology 13, 19 (2003), 1709–1714.Google ScholarCross Ref

32. Hideki Kawahara, Masanori Morise, Toru Takahashi, Ryuichi Nisimura, Toshio Irino, and Hideki Banno. 2008. TANDEM-STRAIGHT: A temporally stable power spectral representation for periodic signals and applications to interference-free spectrum, F0, and aperiodicity estimation. In ICASSP. 3933–3936.Google Scholar

33. Bernhard Kratzwald, Suzana Ilić, Mathias Kraus, Stefan Feuerriegel, and Helmut Prendinger. 2018. Deep learning for affective computing: Text-based emotion recognition in decision support. Decision Support Systems 115 (2018), 24–35.Google ScholarCross Ref

34. Andy SY Lai, Chris YK Wong, and Oscar CH Lo. 2015. Applying augmented reality technology to book publication business. In International Conference on e-Business Engineering. IEEE, 281–286.Google ScholarDigital Library

35. Yining Lang, Wei Liang, Yujia Wang, and Lap-Fai Yu. 2019. 3d face synthesis driven by personality impression. In AAAI, Vol. 33. 1707–1714.Google ScholarDigital Library

36. Norman J Lass, Karen R Hughes, Melanie D Bowyer, Lucille T Waters, and Victoria T Bourne. 1976. Speaker sex identification from voiced, whispered, and filtered isolated vowels. The Journal of the Acoustical Society of America 59, 3 (1976), 675–678.Google ScholarCross Ref

37. Younggun Lee, Azam Rabiee, and Soo-Young Lee. 2017. Emotional End-to-End Neural Speech Synthesizer. In NIPS Workshop.Google Scholar

38. Chengze Li, Xueting Liu, and Tien-Tsin Wong. 2017. Deep extraction of manga structural lines. TOG 36, 4 (2017), 117.Google ScholarDigital Library

39. Hao Li, Yongguo Kang, and Zhenyu Wang. 2018. EMPHASIS: An Emotional Phoneme-based Acoustic Model for Speech Synthesis System. arXiv preprint arXiv:1806.09276 (2018).Google Scholar

40. Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2015. Deep learning face attributes in the wild. In ICCV. 3730–3738.Google Scholar

41. Jianqi Ma, Weiyuan Shao, Hao Ye, Li Wang, Hong Wang, Yingbin Zheng, and Xiangyang Xue. 2018. Arbitrary-oriented scene text detection via rotation proposals. TMM 20, 11 (2018), 3111–3122.Google ScholarDigital Library

42. Yusuke Matsui, Kota Ito, Yuji Aramaki, Azuma Fujimoto, Toru Ogawa, Toshihiko Yamasaki, and Kiyoharu Aizawa. 2017. Sketch-based manga retrieval using manga109 dataset. Multimedia Tools and Applications 76, 20 (2017), 21811–21838.Google ScholarDigital Library

43. Phil McAleer, Alexander Todorov, and Pascal Belin. 2014. How do you say “Hello”? Personality impressions from brief novel voices. PLOS ONE 9, 3 (2014), e90779.Google Scholar

44. Rachel Mcdonnell, Cathy Ennis, Simon Dobbyn, and Carol O’Sullivan. 2009. Talking bodies:Sensitivity to desynchronization of conversations. TAP 6, 4 (2009), 1–8.Google Scholar

45. Soroush Mehri, Kundan Kumar, Ishaan Gulrajani, Rithesh Kumar, Shubham Jain, Jose Sotelo, Aaron Courville, and Yoshua Bengio. 2017. SampleRNN: An unconditional end-to-end neural audio generation model. In ICLR.Google Scholar

46. David S. Miall. 1989. Beyond the schema given: Affective comprehension of literary narratives. 3, 1 (1989), 55–78.Google Scholar

47. Seyed Hamidreza Mohammadi and Alexander Kain. 2017. An overview of voice conversion systems. Speech Communication 88, 88 (2017), 65–82.Google ScholarDigital Library

48. Masanori Morise, Fumiya Yokomori, and Kenji Ozawa. 2016. WORLD: A Vocoder-Based High-Quality Speech Synthesis System for Real-Time Applications. Ieice Transactions on Information and Systems 99, 7 (2016), 1877–1884.Google ScholarCross Ref

49. R. W. Morris and M. A. Clements. 2002. Reconstruction of speech from whispers. Medical Engineering & Physics 24, 7 (2002), 515–520.Google ScholarCross Ref

50. Nhu-Van Nguyen, Christophe Rigaud, and Jean-Christophe Burie. 2017. Comic characters detection using deep learning. In IAPR international conference on document analysis and recognition, Vol. 3. IEEE, 41–46.Google ScholarCross Ref

51. Toru Ogawa, Atsushi Otsubo, Rei Narita, Yusuke Matsui, Toshihiko Yamasaki, and Kiyoharu Aizawa. 2018. Object Detection for Comics using Manga109 Annotations. CoRR abs/1803.08670 (2018).Google Scholar

52. Jan Ondřej, Cathy Ennis, Niamh A Merriman, and Carol O’sullivan. 2016. Franken-Folk: Distinctiveness and attractiveness of voice and motion. TAP 13, 4 (2016), 20.Google ScholarDigital Library

53. Dayi Ou and Cheuk Ming Mak. 2017. Optimization of natural frequencies of a plate structure by modifying boundary conditions. The Journal of the Acoustical Society of America 142, 1 (2017), EL56–EL62.Google ScholarCross Ref

54. Xufang Pang, Ying Cao, Rynson WH Lau, and Antoni B Chan. 2014. A robust panel extraction method for manga. In International Conference on Multimedia. ACM, 1125–1128.Google ScholarDigital Library

55. Robert S Petersen. 2011. Comics, manga, and graphic novles: a history of graphic narratives. ABC-CLIO.Google Scholar

56. Siyuan Qi, Wenguan Wang, Baoxiong Jia, Jianbing Shen, and Song-Chun Zhu. 2018. Learning human-object interactions by graph parsing neural networks. In ECCV. 401–417.Google Scholar

57. Xiaoran Qin, Yafeng Zhou, Zheqi He, Yongtao Wang, and Zhi Tang. 2017. A faster R-CNN based method for comic characters face detection. In IAPR International Conference on Document Analysis and Recognition, Vol. 1. IEEE, 1074–1080.Google ScholarCross Ref

58. Yingge Qu, Wai Man Pang, Tien Tsin Wong, and Pheng Ann Heng. 2008. Richness-preserving manga screening. TOG 27, 5 (2008), 1–8.Google ScholarDigital Library

59. Yingge Qu, Tien Tsin Wong, and Pheng Ann Heng. 2006. Manga colorization. TOG 25, 3 (2006), 1214–1220.Google ScholarDigital Library

60. Richard Rieman. 2016. The Author’s Guide to Audiobook Creation. Breckenridge Press.Google Scholar

61. Christophe Rigaud, Clément Guérin, Dimosthenis Karatzas, Jean-Christophe Burie, and Jean-Marc Ogier. 2015. Knowledge-driven understanding of images in comic books. International Journal on Document Analysis and Recognition 18, 3 (2015), 199–221.Google ScholarDigital Library

62. Ethan M Rudd, Manuel Günther, and Terrance E Boult. 2016. Moon: A mixed objective optimization network for the recognition of facial attributes. In ECCV. 19–35.Google Scholar

63. Jonathan Shen, Ruoming Pang, Ron J Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, Rj Skerrv-Ryan, et al. 2018. Natural tts synthesis by conditioning wavenet on mel spectrogram predictions. In ICASSP. IEEE, 4779–4783.Google Scholar

64. Baoguang Shi, Mingkun Yang, Xinggang Wang, Pengyuan Lyu, Cong Yao, and Xiang Bai. 2018. Aster: An attentional scene text recognizer with flexible rectification. TPAMI (2018).Google Scholar

65. Edgar Simo-Serra, Satoshi Iizuka, Kazuma Sasaki, and Hiroshi Ishikawa. 2016. Learning to simplify: fully convolutional networks for rough sketch cleanup. TOG 35, 4 (2016), 121.Google ScholarDigital Library

66. RJ Skerry-Ryan, Eric Battenberg, Ying Xiao, Yuxuan Wang, Daisy Stanton, Joel Shor, Ron J Weiss, Rob Clark, and Rif A Saurous. 2018. Towards End-to-End Prosody Transfer for Expressive Speech Synthesis with Tacotron. In ICML. 4700–4709.Google Scholar

67. Jose Sotelo, Soroush Mehri, Kundan Kumar, Joao Felipe Santos, Kyle Kastner, Aaron Courville, and Yoshua Bengio. 2017. Char2wav: End-to-end speech synthesis. In International Conference on Learning Representations Workshop.Google Scholar

68. Marco Stricker, Olivier Augereau, Koichi Kise, and Motoi Iwata. 2018. Facial Landmark Detection for Manga Images. arXiv preprint arXiv:1811.03214 (2018).Google Scholar

69. Supasorn Suwajanakorn, Steven M. Seitz, Ira Kemelmacher-Shlizerman, Supasorn Suwajanakorn, Steven M. Seitz, Ira Kemelmacher-Shlizerman, Supasorn Suwajanakorn, Steven M. Seitz, Ira Kemelmacher-Shlizerman, and Supasorn Suwajanakorn. 2017. Synthesizing Obama: learning lip sync from audio. TOG 36, 4 (2017), 1–13.Google ScholarDigital Library

70. Debra Trampe, Jordi Quoidbach, and Maxime Taquet. 2015. Emotions in Everyday Life. PLOS ONE 10, 12 (2015).Google Scholar

71. Aaron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. 2016. WaveNet: A Generative Model for Raw Audio. In 9th ISCA Speech Synthesis Workshop. 125–125.Google Scholar

72. Robin Varnum and Christina T Gibbons. 2007. The language of comics: Word and image. Univ, Press of Mississippi.Google Scholar

73. Christophe Veaux, Junichi Yamagishi, Kirsten MacDonald, et al. 2016. SUPERSEDEDCSTR VCTK Corpus: English Multi-speaker Corpus for CSTR Voice Cloning Toolkit. (2016).Google Scholar

74. Wenguan Wang, Jianbing Shen, and Haibin Ling. 2018a. A deep network solution for attention and aesthetics aware photo cropping. TPAMI 41, 7 (2018), 1531–1544.Google ScholarCross Ref

75. Wenguan Wang, Yuanlu Xu, Jianbing Shen, and Song-Chun Zhu. 2018c. Attentive fashion grammar network for fashion landmark detection and clothing category classification. In CVPR. 4271–4280.Google Scholar

76. Yujia Wang, Wei Liang, Jianbing Shen, Yunde Jia, and Lap-Fai Yu. 2019. A deep Coarse-to-Fine network for head pose estimation from synthetic data. Pattern Recognition 94 (2019), 196–206.Google ScholarDigital Library

77. Yuxuan Wang, RJ Skerry-Ryan, Daisy Stanton, Yonghui Wu, Ron J Weiss, Navdeep Jaitly, Zongheng Yang, Ying Xiao, Zhifeng Chen, Samy Bengio, et al. 2017. Tacotron: Towards End-to-End Speech Synthesis. In Proceedings of Interspeech. 4006–4010.Google ScholarCross Ref

78. Yuxuan Wang, Daisy Stanton, Yu Zhang, RJ Skerry-Ryan, Eric Battenberg, Joel Shor, Ying Xiao, Fei Ren, Ye Jia, and Rif A Saurous. 2018b. Style Tokens: Unsupervised Style Modeling, Control and Transfer in End-to-End Speech Synthesis. In ICML.Google Scholar

79. Zhong-Qiu Wang and Ivan Tashev. 2017. Learning utterance-level representations for speech emotion and age/gender recognition using deep neural networks. In ICASSP. IEEE, 5150–5154.Google Scholar