“How to train your dragon: example-guided control of flapping flight” by Won, Park, Kim and Lee

Title:

- How to train your dragon: example-guided control of flapping flight

Session/Category Title: Fire, Flow and Flight

Abstract:

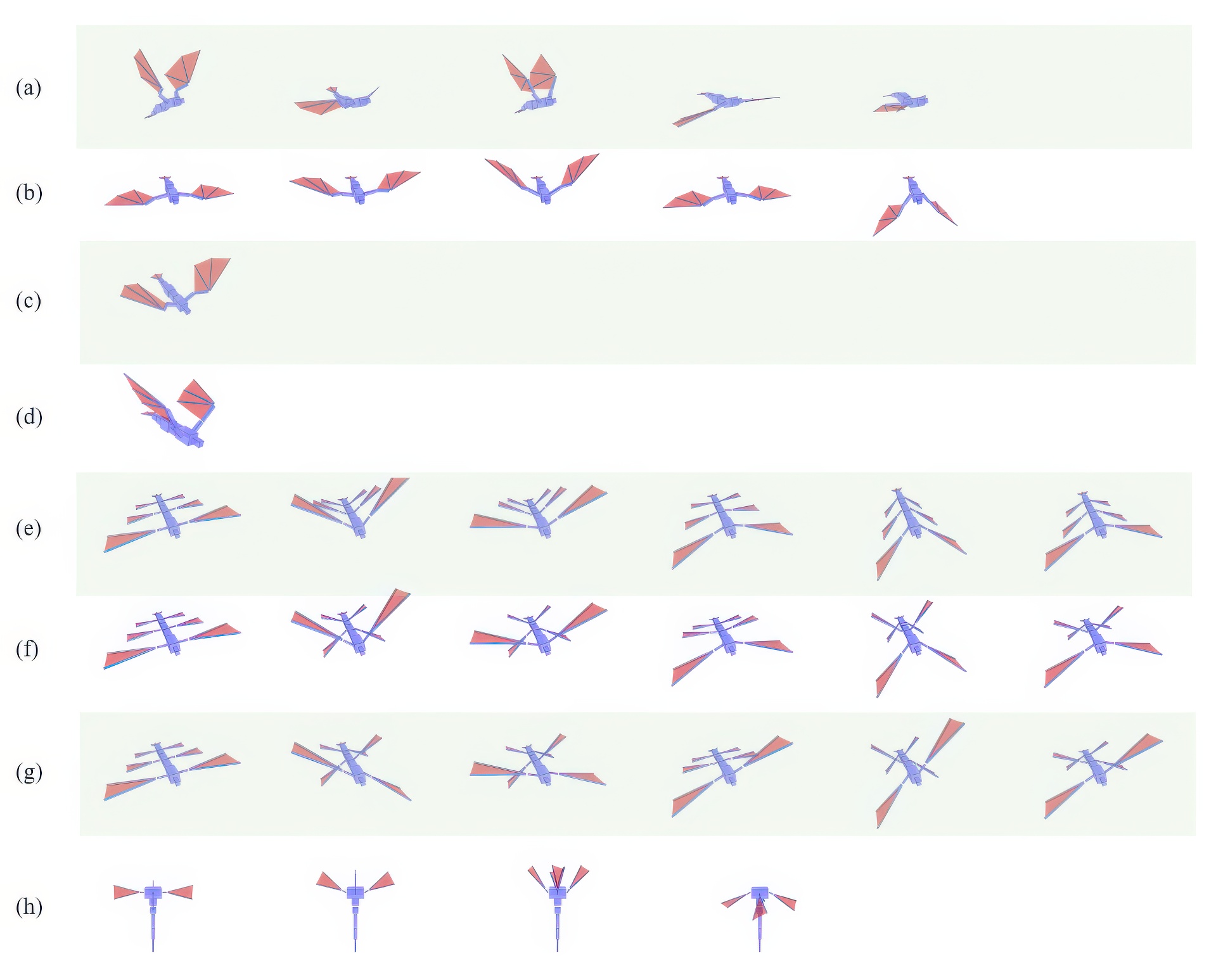

Imaginary winged creatures in computer animation applications are expected to perform a variety of motor skills in a physically realistic and controllable manner. Designing physics-based controllers for a flying creature is still very challenging, particularly when the dynamic model of the creature is high-dimensional, with many degrees of freedom. In this paper, we present a control method for flying creatures that are aerodynamically simulated, interactively controllable, and equipped with a variety of motor skills such as soaring, gliding, hovering, and diving. Each motor skill is represented as a deep neural network (DNN) and learned using Deep Q-Learning (DQL). Our control method is example-guided in the sense that it gives the user direct control over the learning process by allowing the user to specify keyframes of motor skills. Our novel learning algorithm is inspired by the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) and is designed to improve the convergence rate and the final quality of the control policy. The effectiveness of our Evolutionary DQL method is demonstrated with imaginary winged creatures flying in a physically simulated environment, with their motor skills learned automatically from user-provided keyframes.

References:

1. Mazen Al Borno, Martin de Lasa, and Aaron Hertzmann. 2013. Trajectory Optimization for Full-Body Movements with Complex Contacts. IEEE Transactions on Visualization and Computer Graphics 19, 8 (2013).

2. Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. 2016. OpenAI Gym. (2016).

3. Stelian Coros, Philippe Beaudoin, and Michiel van de Panne. 2009. Robust Task-based Control Policies for Physics-based Characters. ACM Trans. Graph. (SIGGRAPH 2009) 28, 5 (2009).

4. Stelian Coros, Andrej Karpathy, Ben Jones, Lionel Reveret, and Michiel van de Panne. 2011. Locomotion Skills for Simulated Quadrupeds. ACM Trans. Graph. (SIGGRAPH 2011) 30, 4 (2011).

5. Dart. 2012. Dart: Dynamic Animation and Robotics Toolkit. https://dartsim.github.io/. (2012).

6. Martin de Lasa, Igor Mordatch, and Aaron Hertzmann. 2010. Feature-based locomotion controllers. ACM Trans. Graph. (SIGGRAPH 2010) 29, 4 (2010).

7. Yan Duan, Xi Chen, Rein Houthooft, John Schulman, and Pieter Abbeel. 2016. Benchmarking Deep Reinforcement Learning for Continuous Control. CoRR abs/1604.06778 (2016).

8. Xavier Glorot and Yoshua Bengio. 2010. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS'10).

9. Radek Grzeszczuk, Demetri Terzopoulos, and Geoffrey E. Hinton. 1998. NeuroAnimator: Fast Neural Network Emulation and Control of Physics-based Models. In Proceedings of International Conference on Computer Graphics and Interactive Techniques (SIGGRAPH 1998). 9–20.

10. Sehoon Ha and C. Karen Liu. 2014. Iterative Training of Dynamic Skills Inspired by Human Coaching Techniques. ACM Trans. Graph. 34, 1 (2014).

11. Nicolas Heess, Gregory Wayne, David Silver, Timothy P. Lillicrap, Tom Erez, and Yuval Tassa. 2015. Learning Continuous Control Policies by Stochastic Value Gradients. In Annual Conference on Neural Information Processing Systems (NIPS 2015). 2944–2952.

12. Eunjung Ju, Jungdam Won, Jehee Lee, Byungkuk Choi, Junyong Noh, and Min Gyu Choi. 2013. Data-driven Control of Flapping Flight. ACM Trans. Graph. 32 (2013), 151:1–151:12.

13. Jehee Lee and Kang Hoon Lee. 2004. Precomputing Avatar Behavior from Human Motion Data. In Proceedings of the 2004 ACM SIGGRAPH/Eurographics Symposium on Computer Animation. 79–87.

14. Yoonsang Lee, Sungeun Kim, and Jehee Lee. 2010. Data-driven biped control. ACM Trans. Graph. (SIGGRAPH 2010) 29, 4 (2010).

15. Yoonsang Lee, Moon Seok Park, Taesoo Kwon, and Jehee Lee. 2014. Locomotion Control for Many-muscle Humanoids. ACM Trans. Graph. (SIGGRAPH Asia 2014) 33, 6 (2014).

16. Yongjoon Lee, Kevin Wampler, Gilbert Bernstein, Jovan Popović, and Zoran Popović. 2010. Motion Fields for Interactive Character Locomotion. ACM Trans. Graph. (SIGGRAPH Asia 2010) 29, 6 (2010).

17. Sergey Levine, Chelsea Finn, Trevor Darrell, and Pieter Abbeel. 2016. End-to-end Training of Deep Visuomotor Policies. J. Mach. Learn. Res. 17 (2016), 1334–1373.

18. Sergey Levine and Vladlen Koltun. 2014. Learning Complex Neural Network Policies with Trajectory Optimization. In Proceedings of the 31st International Conference on Machine Learning (ICML 2014).

19. Timothy P. Lillicrap, Jonathan J. Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. 2015. Continuous control with deep reinforcement learning. CoRR abs/1509.02971 (2015).

20. Libin Liu, Michiel Van De Panne, and Kangkang Yin. 2016. Guided Learning of Control Graphs for Physics-Based Characters. ACM Trans. Graph. 35, 3 (2016).

21. Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas K. Fidjeland, Georg Ostrovski, Stig Petersen, Charles Beattie, Amir Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan Wierstra, Shane Legg, and Demis Hassabis. 2015. Human-level control through deep reinforcement learning. Nature 518 (2015), 529–533.

22. Igor Mordatch and Emanuel Todorov. 2014. Combining the benefits of function approximation and trajectory optimization. In Robotics: Science and Systems (RSS 2014).

23. Igor Mordatch, Emanuel Todorov, and Zoran Popović. 2012. Discovery of complex behaviors through contact-invariant optimization. ACM Trans. Graph. (SIGGRAPH 2012) 29, 4 (2012).

24. Xue Bin Peng, Glen Berseth, and Michiel van de Panne. 2016. Terrain-adaptive Locomotion Skills Using Deep Reinforcement Learning. ACM Trans. Graph. (SIGGRAPH 2016) 35, 4 (2016).

25. Xue Bin Peng, Glen Berseth, KangKang Yin, and Michiel van de Panne. 2017. DeepLoco: Dynamic Locomotion Skills Using Hierarchical Deep Reinforcement Learning. ACM Trans. Graph. (SIGGRAPH 2017) 36, 4 (2017).

26. Xue Bin Peng and Michiel van de Panne. 2016. Learning Locomotion Skills Using DeepRL: Does the Choice of Action Space Matter? CoRR abs/1611.01055 (2016).

27. Tom Schaul, John Quan, Ioannis Antonoglou, and David Silver. 2015. Prioritized Experience Replay. CoRR abs/1511.05952 (2015).

28. John Schulman, Sergey Levine, Philipp Moritz, Michael I. Jordan, and Pieter Abbeel. 2015a. Trust Region Policy Optimization. CoRR abs/1502.05477 (2015).

29. John Schulman, Philipp Moritz, Sergey Levine, Michael I. Jordan, and Pieter Abbeel. 2015b. High-Dimensional Continuous Control Using Generalized Advantage Estimation. CoRR abs/1506.02438 (2015).

30. David Silver, Guy Lever, Nicolas Heess, Thomas Degris, Daan Wierstra, and Martin Riedmiller. 2014. Deterministic Policy Gradient Algorithms. In Proceedings of the 31st International Conference on Machine Learning (ICML 2014). 387–395.

31. Kwang Won Sok, Manmyung Kim, and Jehee Lee. 2007. Simulating biped behaviors from human motion data. ACM Trans. Graph. (SIGGRAPH 2007) 26, 3 (2007).

32. Jie Tan, Yuting Gu, C. Karen Liu, and Greg Turk. 2014. Learning Bicycle Stunts. ACM Trans. Graph. (SIGGRAPH 2014) 33 (2014), 50:1–50:12.

33. Jie Tan, Yuting Gu, Greg Turk, and C. Karen Liu. 2011. Articulated swimming creatures. ACM Trans. Graph. (SIGGRAPH 2011) 30, 4 (2011).

34. TensorFlow. 2015. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. (2015). http://tensorflow.org/ Software available from tensorflow.org.

35. Adrien Treuille, Yongjoon Lee, and Zoran Popović. 2007. Near-optimal Character Animation with Continuous Control. ACM Trans. Graph. (SIGGRAPH 2007) 26, 3 (2007).

36. Hado van Hasselt and Marco A. Wiering. 2007. Reinforcement Learning in Continuous Action Spaces. In Proceedings of the 2007 IEEE Symposium on Approximate Dynamic Programming and Reinforcement Learning (ADPRL 2007). 272–279.

37. Jack M. Wang, David J. Fleet, and Aaron Hertzmann. 2010. Optimizing Walking Controllers for Uncertain Inputs and Environments. ACM Trans. Graph. (SIGGRAPH 2010) 29, 4 (2010).

38. Jack M. Wang, Samuel R. Hamner, Scott L. Delp, and Vladlen Koltun. 2012. Optimizing Locomotion Controllers Using Biologically-Based Actuators and Objectives. ACM Trans. Graph. (SIGGRAPH 2012) 31, 4 (2012).

39. Jia-chi Wu and Zoran Popović. 2003. Realistic modeling of bird flight animations. ACM Trans. Graph. (SIGGRAPH 2003) 22, 3 (2003).

40. Kangkang Yin, Kevin Loken, and Michiel van de Panne. 2007. SIMBICON: Simple Biped Locomotion Control. ACM Trans. Graph. (SIGGRAPH 2007) 26, 3 (2007).