“Video-audio driven real-time facial animation”

Title:

- Video-audio driven real-time facial animation

Session/Category Title: Faces and Characters

Abstract:

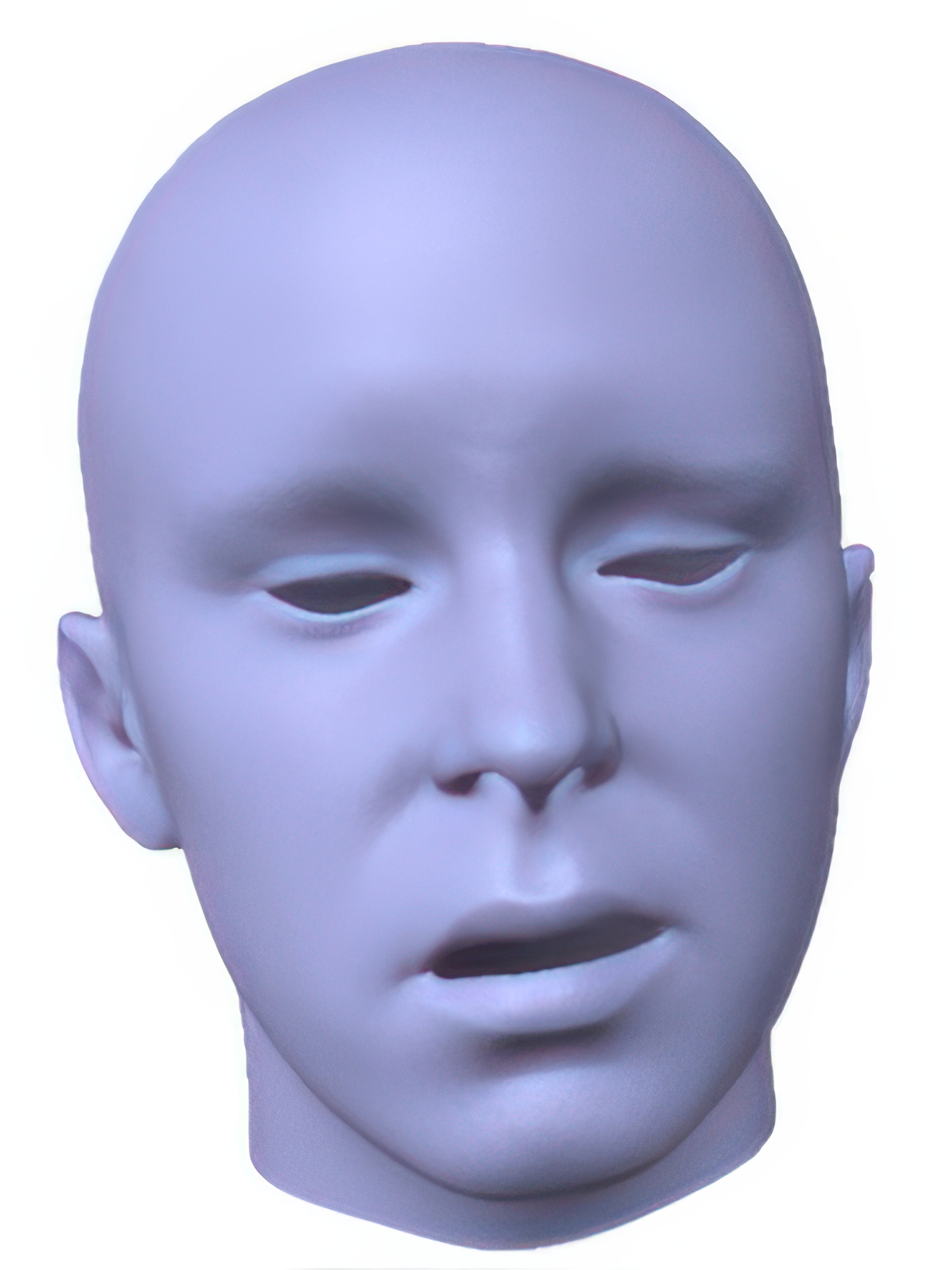

We present a real-time facial tracking and animation system based on a Kinect sensor with video and audio input. Our method requires no user-specific training and is robust to occlusions, large head rotations, and background noise. Given the color, depth, and speech audio frames captured from an actor, our system first reconstructs 3D facial expressions and 3D mouth shapes from the color and depth input with a multi-linear model. Concurrently, a speaker-independent DNN acoustic model extracts phoneme state posterior probabilities (PSPP) from the audio frames. A lip motion regressor then refines the 3D mouth shape based on both the PSPP and the expression weights of the 3D mouth shapes, as well as their confidences. Finally, the refined 3D mouth shape is combined with the other parts of the 3D face to generate the final result. The whole process is fully automatic and executes in real time.

The key component of our system is a data-driven regressor for modeling the correlation between speech data and mouth shapes. Based on a precaptured database of accurate 3D mouth shapes and associated speech audio from one speaker, the regressor jointly uses the input speech and visual features to refine the mouth shape of a new actor. We also present an improved DNN acoustic model that preserves accuracy while achieving real-time performance.

Our method efficiently fuses visual and acoustic information for 3D facial performance capture. It generates more accurate 3D mouth motions than approaches based on audio or video input alone, and it also supports video-only or audio-only input for real-time facial animation. We evaluate the performance of our system with speech and facial expressions captured from different actors. The results demonstrate the efficiency and robustness of our method.
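The fusion step described in the abstract can be illustrated with a small sketch. This is not the authors' learned lip motion regressor: it swaps in a plain confidence-weighted blend to show how posteriors from an acoustic model and expression weights from visual tracking might be combined, and how setting one confidence to zero degrades to video-only or audio-only input. All function names, the `state_to_weights` lookup table, and the numeric values are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw acoustic-model scores into posteriors that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def audio_mouth_weights(posteriors: Sequence[float],
                        state_to_weights: Sequence[Sequence[float]]) -> List[float]:
    """Expected mouth expression weights under the posteriors.

    state_to_weights is a hypothetical table with one row of mouth
    expression weights per phoneme state (an assumption, not from the paper).
    """
    n = len(state_to_weights[0])
    return [sum(p * row[i] for p, row in zip(posteriors, state_to_weights))
            for i in range(n)]


def fuse(visual: Sequence[float], visual_conf: float,
         audio: Sequence[float], audio_conf: float) -> List[float]:
    """Confidence-weighted blend; a stand-in for the learned regressor."""
    total = visual_conf + audio_conf
    if total <= 0.0:
        raise ValueError("at least one modality needs positive confidence")
    a = audio_conf / total  # share of the result taken from audio
    return [(1.0 - a) * v + a * s for v, s in zip(visual, audio)]


# Usage with made-up numbers: three phoneme states, two mouth weights.
posteriors = softmax([2.0, 0.0, 0.0])
table = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
audio = audio_mouth_weights(posteriors, table)
visual = [0.2, 0.6]

fused = fuse(visual, 0.5, audio, 0.5)       # both modalities trusted equally
video_only = fuse(visual, 1.0, audio, 0.0)  # audio unavailable or too noisy
audio_only = fuse(visual, 0.0, audio, 1.0)  # mouth occluded in the video
```

A fixed blend like this ignores the temporal context and the training database that the abstract attributes to the regressor; it only shows the role the confidences play in choosing between the two inputs.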
