“Automatic unpaired shape deformation transfer”

Title:

- Automatic unpaired shape deformation transfer

Session/Category Title: Geometry generation

Abstract:

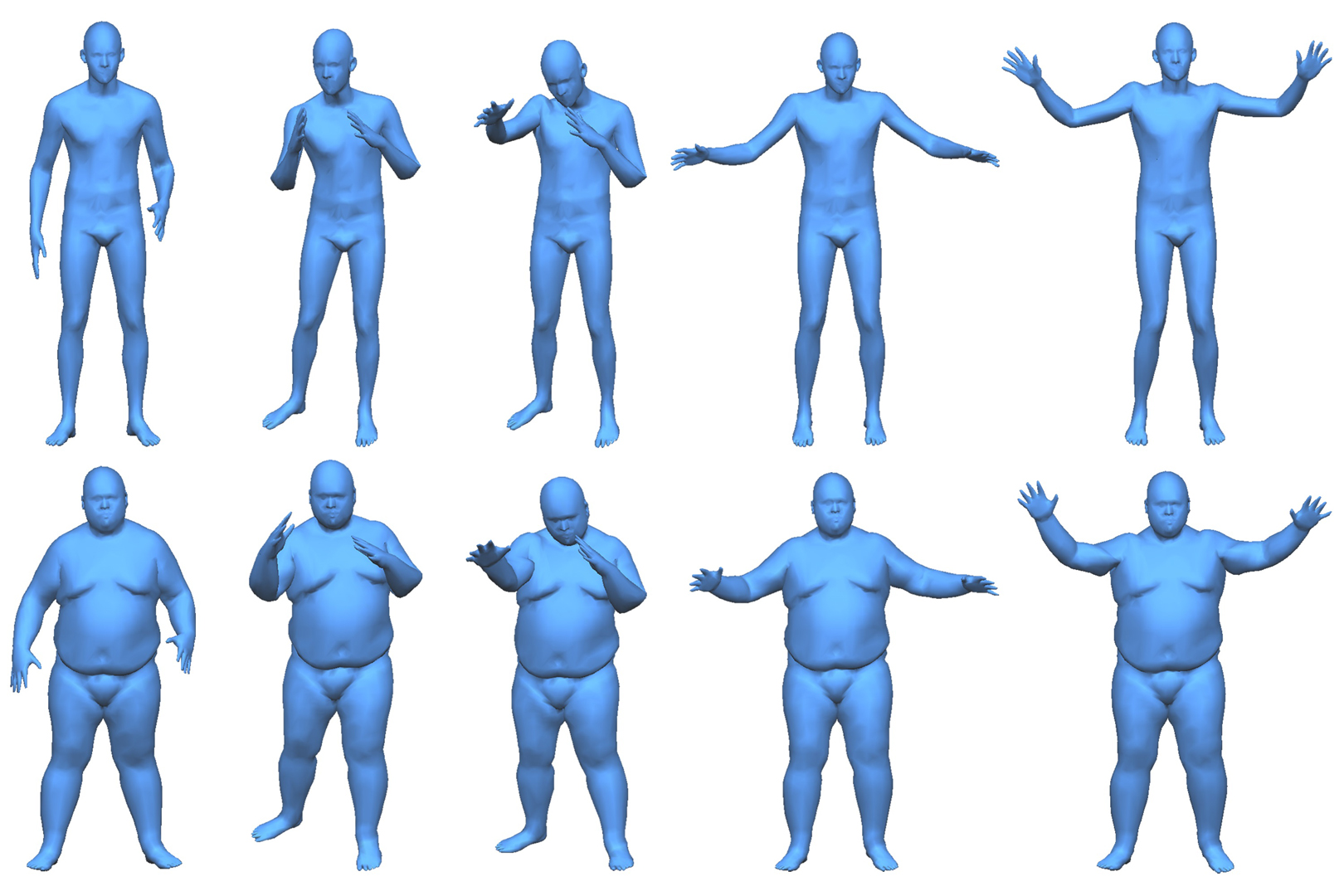

Transferring deformation from a source shape to a target shape is a useful technique in computer graphics. State-of-the-art deformation transfer methods require either point-wise correspondences between source and target shapes, or pairs of deformed source and target shapes with corresponding deformations. However, in most cases, such correspondences are not available and cannot be reliably established using an automatic algorithm. Therefore, substantial user effort is needed to label the correspondences or to obtain and specify such shape sets. In this work, we propose a novel approach to automatic deformation transfer between two unpaired shape sets without correspondences. 3D deformation is represented in a high-dimensional space. To obtain a more compact and effective representation, two convolutional variational autoencoders (VAEs) are learned to encode source and target shapes into their respective latent spaces. We exploit a Generative Adversarial Network (GAN) to map deformed source shapes to deformed target shapes, both in the latent spaces, which ensures that the shapes obtained from the mapping are indistinguishable from the target shapes. This is still an under-constrained problem, so we further utilize a reverse mapping from target shapes to source shapes and incorporate a cycle consistency loss, i.e., applying the two mappings in sequence should recover the input shape. This VAE-Cycle GAN (VC-GAN) architecture is used to build a reliable mapping between shape spaces. Finally, a similarity constraint is employed to ensure the mapping is consistent with visual similarity; it is achieved by learning a similarity neural network that takes the embedding vectors from the source and target latent spaces and predicts the light field distance between the corresponding shapes. Experimental results show that our fully automatic method is able to obtain high-quality deformation transfer results with unpaired data sets, comparable to or better than those of existing methods that require strict correspondences.
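The cycle consistency idea in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the toy mappings, and the choice of a mean absolute (L1) difference are assumptions made here for illustration, and latent codes are plain lists of floats rather than VAE outputs.

```python
from typing import Callable, List, Sequence

Vector = List[float]
LatentMap = Callable[[Sequence[float]], Vector]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two latent codes (assumed norm)."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    source_codes: Sequence[Sequence[float]],
    target_codes: Sequence[Sequence[float]],
    map_s2t: LatentMap,
    map_t2s: LatentMap,
) -> float:
    """Penalise round trips that fail to return to the starting code.

    source -> target -> source should recover the source code, and
    target -> source -> target should recover the target code.
    """
    forward = [l1_distance(map_t2s(map_s2t(z)), z) for z in source_codes]
    backward = [l1_distance(map_s2t(map_t2s(z)), z) for z in target_codes]
    return sum(forward) / len(forward) + sum(backward) / len(backward)


# Hypothetical toy mappings standing in for the two learned generators.
def scale_up(z: Sequence[float]) -> Vector:
    return [2.0 * v for v in z]


def scale_down(z: Sequence[float]) -> Vector:
    return [0.5 * v for v in z]


def identity(z: Sequence[float]) -> Vector:
    return list(z)
```

When the two mappings are exact inverses (`scale_up` with `scale_down`), every round trip returns to its starting code and the loss is zero. Pairing `scale_up` with `identity` gives a positive loss, which is the signal that would push the reverse mapping toward undoing the forward one during training.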
