“Visual-gestural Interface for Auslan Virtual Assistant” by Zelenskaya, Whittington, Lyons, Vogel and Korte

Description:

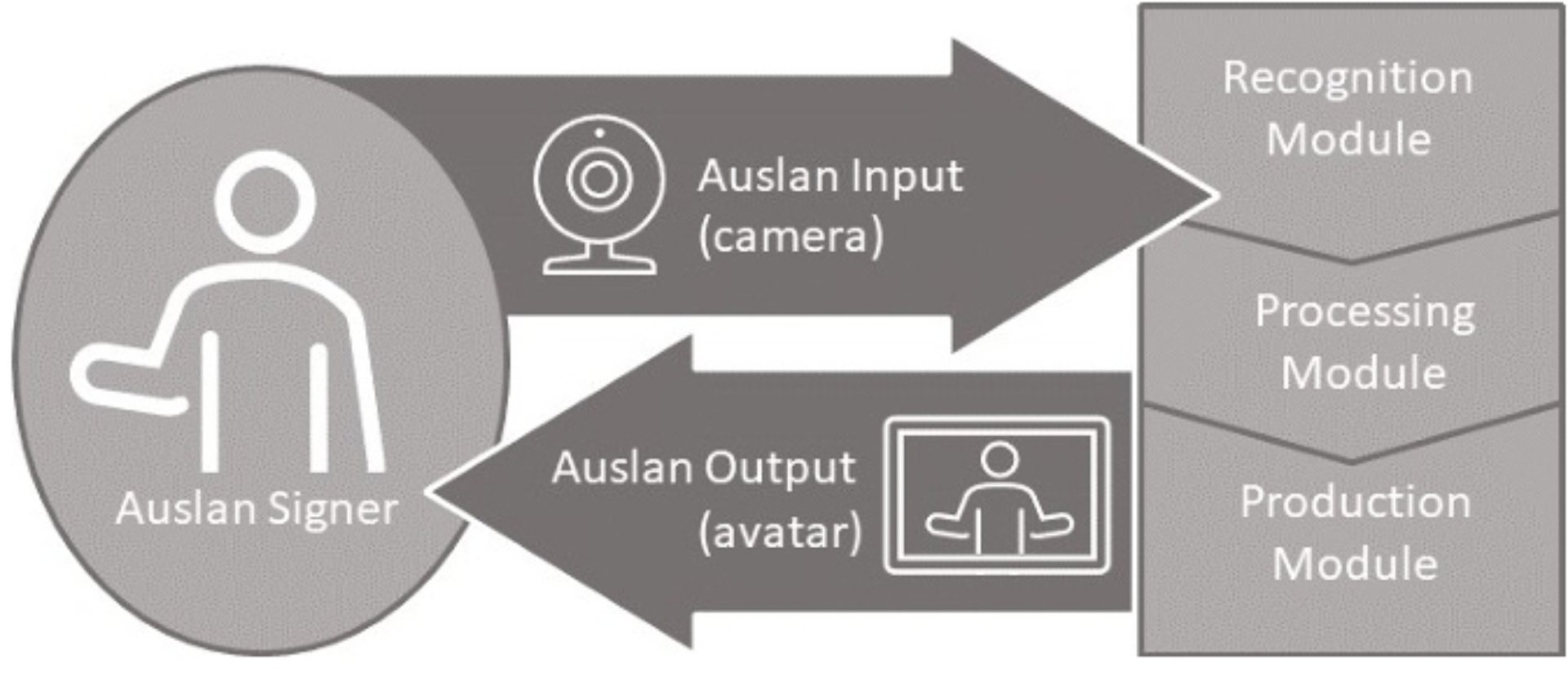

For Deaf people to use natural language interfaces, these technologies must understand sign language input and respond in the same language. We developed a prototype smart home assistant that utilises gesture-based controls to improve accessibility and convenience for Deaf users. The prototype features Zelda, an interactive signing avatar that responds in Auslan (Australian Sign Language), enabling effective two-way communication. Our live demonstration includes both gesture recognition and sign production.
