“EgoScanning: Quickly Scanning First-Person Videos with Egocentric Elastic Timelines” by Higuchi, Yonetani and Sato

Title:

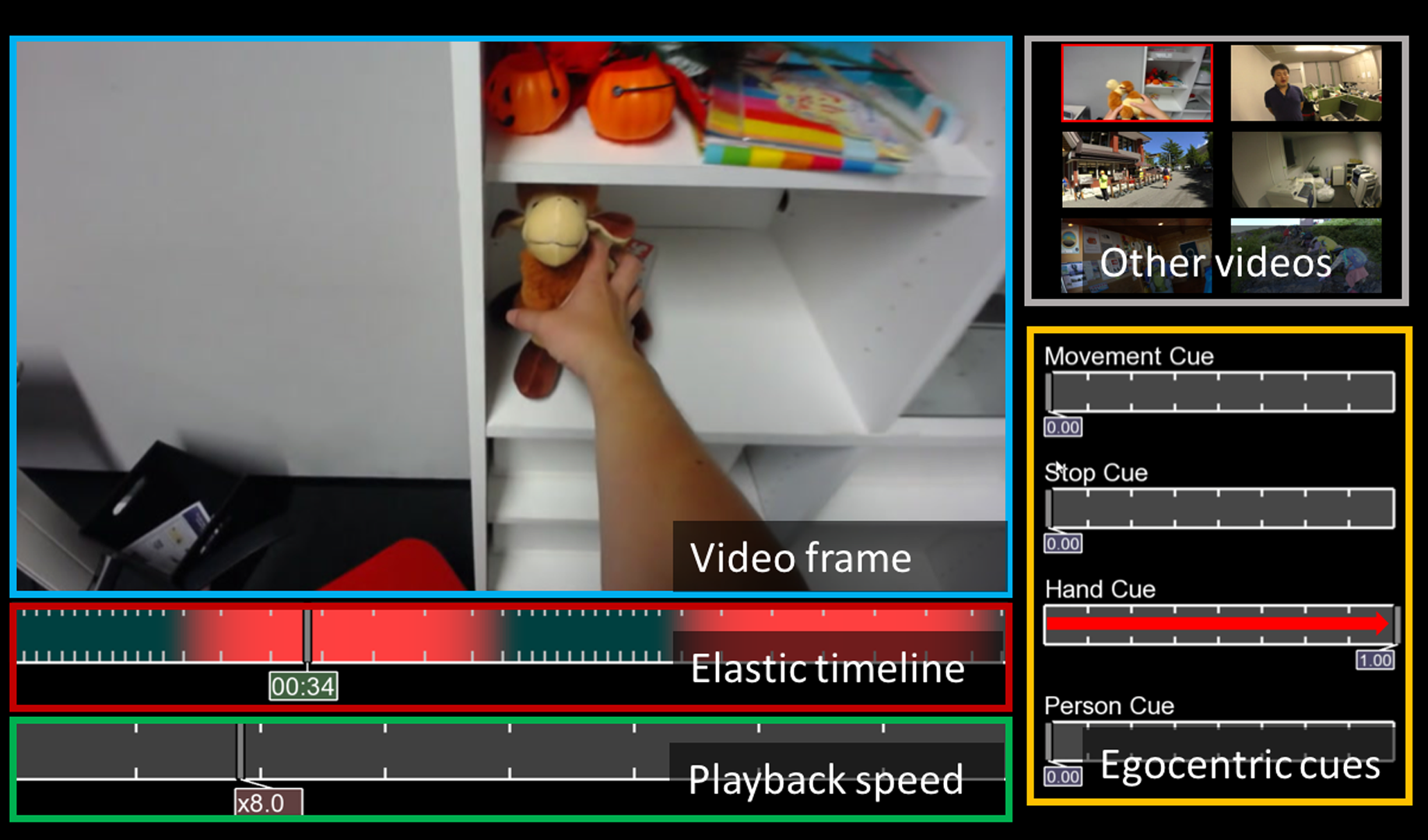

- EgoScanning: Quickly Scanning First-Person Videos with Egocentric Elastic Timelines

Description:

This work presents EgoScanning, a novel video fast-forwarding interface that helps users find important events in lengthy first-person videos recorded continuously with wearable cameras. Its key feature is an elastic timeline that adaptively changes the playback speed and, using computer-vision techniques, emphasizes egocentric cues specific to first-person videos, such as hand manipulations, moving, and conversations with people. The interface also lets users specify which of these cues are relevant to the events they are interested in.
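A minimal sketch of how an elastic timeline of this kind could map detected cues to playback speeds, assuming each stretch of video has already been labeled with cues by some upstream detector. The `Segment` type, the function names, the two-level speed policy (1× where a selected cue appears, 8× elsewhere) and the 600-pixel timeline bar are hypothetical choices for illustration, not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A span of the video with the egocentric cues detected in it.

    Hypothetical structure: cue labels such as "hands", "moving" or
    "conversation" are assumed to come from an upstream vision detector.
    """
    start: float      # seconds
    end: float        # seconds
    cues: frozenset   # cue labels present in this span


def playback_speeds(segments, selected, slow=1.0, fast=8.0):
    """Assumed two-level policy: play at `slow` where any user-selected cue
    appears, otherwise fast-forward at `fast`."""
    return [slow if seg.cues & selected else fast for seg in segments]


def viewing_time(segments, speeds):
    """Wall-clock seconds needed to watch every segment at its speed."""
    return sum((seg.end - seg.start) / s for seg, s in zip(segments, speeds))


def timeline_widths(segments, speeds, bar_px=600):
    """Give each segment a share of the timeline bar proportional to its
    viewing time, so slowed-down segments stretch and skipped ones shrink."""
    total = viewing_time(segments, speeds)
    return [bar_px * ((seg.end - seg.start) / s) / total
            for seg, s in zip(segments, speeds)]


segments = [
    Segment(0, 60, frozenset()),
    Segment(60, 90, frozenset({"hands"})),
    Segment(90, 210, frozenset({"moving"})),
]
speeds = playback_speeds(segments, frozenset({"hands"}))
print(speeds)                            # [8.0, 1.0, 8.0]
print(viewing_time(segments, speeds))    # 52.5
print([round(w, 1) for w in timeline_widths(segments, speeds)])  # [85.7, 342.9, 171.4]
```

In this toy example, selecting only the hand-manipulation cue cuts the viewing time from 210 s to 52.5 s, while the 30 s segment containing that cue stretches to cover more than half of the timeline bar. A real system would likely use continuous cue scores and smoother speed transitions rather than this hard two-level switch.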
