“Filter-Guided Diffusion for Controllable Image Generation”

Conference:

Type(s):

Title:

- Filter-Guided Diffusion for Controllable Image Generation

Presenter(s)/Author(s):

Abstract:

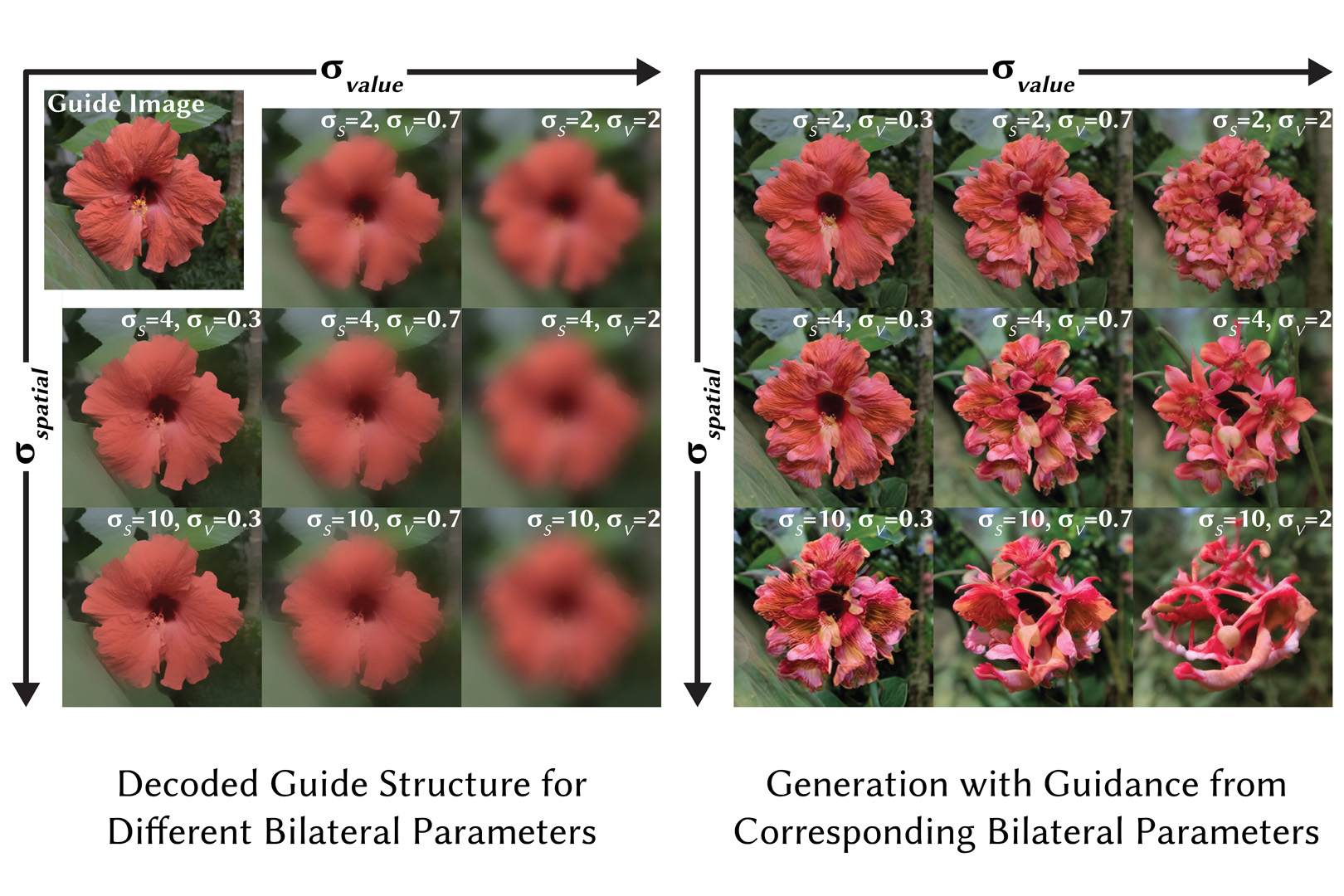

Filter-Guided Diffusion (FGD) is a controllable, tuning-free, image-to-image translation method for diffusion models. It combines fast filtering operations with non-deterministic samplers to generate high-quality and diverse images. With its efficiency, FGD can be sampled multiple times to outperform previous methods in less time on structural and semantic metrics.

References:

[1]

Mahmoud Afifi, Marcus A Brubaker, and Michael S Brown. 2021. Histogan: Controlling colors of gan-generated and real images via color histograms. In Proc. Computer Vision and Pattern Recognition (CVPR). 7941?7950.

[2]

Soonmin Bae, Sylvain Paris, and Fr?do Durand. 2006. Two-scale tone management for photographic look. ACM Trans. Graph. 25, 3 (jul 2006), 637?645. https://doi.org/10.1145/1141911.1141935

[3]

Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2023. Instructpix2pix: Learning to follow image editing instructions. In Proc. Computer Vision and Pattern Recognition (CVPR). 18392?18402.

[4]

Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, 2020. Language models are few-shot learners. Adv. Neural Inform. Process. Syst. 33 (2020), 1877?1901.

[5]

Peter J. Burt and Edward H. Adelson. 1987. The Laplacian Pyramid as a Compact Image Code. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 671?679.

[6]

Tao Chen, Ming-Ming Cheng, Ping Tan, Ariel Shamir, and Shi-Min Hu. 2009. Sketch2photo: Internet image montage. ACM Trans. Graph. 28, 5 (2009), 1?10.

[7]

Jooyoung Choi, Sungwon Kim, Yonghyun Jeong, Youngjune Gwon, and Sungroh Yoon. 2021. ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models. arxiv:2108.02938 [cs.CV]

[8]

Yunjey Choi, Youngjung Uh, Jaejun Yoo, and Jung-Woo Ha. 2020. Stargan v2: Diverse image synthesis for multiple domains. In Proc. Computer Vision and Pattern Recognition (CVPR). 8188?8197.

[9]

Elmar Eisemann and Fr?do Durand. 2004. Flash Photography Enhancement via Intrinsic Relighting. 23, 3 (aug 2004), 673?678. https://doi.org/10.1145/1015706.1015778

[10]

Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626 (2022).

[11]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Adv. Neural Inform. Process. Syst. 33 (2020), 6840?6851.

[12]

Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. Proc. Computer Vision and Pattern Recognition (CVPR) (2017).

[13]

Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2017. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196 (2017).

[14]

Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proc. Computer Vision and Pattern Recognition (CVPR). 4401?4410.

[15]

Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2023. Imagic: Text-based real image editing with diffusion models. In Proc. Computer Vision and Pattern Recognition (CVPR). 6007?6017.

[16]

Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. 2022. Diffusionclip: Text-guided diffusion models for robust image manipulation. In Proc. Computer Vision and Pattern Recognition (CVPR). 2426?2435.

[17]

Gihyun Kwon and Jong Chul Ye. 2022. Diffusion-based image translation using disentangled style and content representation. arXiv preprint arXiv:2209.15264 (2022).

[18]

Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2015. Deep Learning Face Attributes in the Wild. In Proc. Int. Conf. on Computer Vision (ICCV).

[19]

Chenlin Meng, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. 2021. Sdedit: Image synthesis and editing with stochastic differential equations. arXiv preprint arXiv:2108.01073 (2021).

[20]

Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Null-text inversion for editing real images using guided diffusion models. In Proc. Computer Vision and Pattern Recognition (CVPR). 6038?6047.

[21]

Aude Oliva, Antonio Torralba, and Philippe G. Schyns. 2006. Hybrid Images. ACM Trans. Graph. 25, 3 (jul 2006), 527?532. https://doi.org/10.1145/1141911.1141919

[22]

Sylvain Paris, Samuel W Hasinoff, and Jan Kautz. 2011. Local Laplacian filters: Edge-aware image processing with a Laplacian pyramid.ACM Trans. Graph. 30, 4 (2011), 68.

[23]

Taesung Park, Alexei A Efros, Richard Zhang, and Jun-Yan Zhu. 2020. Contrastive learning for unpaired image-to-image translation. In Proc. European Conference on Computer Vision (ECCV). Springer, 319?345.

[24]

Gaurav Parmar, Yijun Li, Jingwan Lu, Richard Zhang, Jun-Yan Zhu, and Krishna Kumar Singh. 2022. Spatially-adaptive multilayer selection for gan inversion and editing. In Proc. Computer Vision and Pattern Recognition (CVPR). 11399?11409.

[25]

Gaurav Parmar, Krishna Kumar Singh, Richard Zhang, Yijun Li, Jingwan Lu, and Jun-Yan Zhu. 2023. Zero-shot Image-to-Image Translation. arXiv preprint arXiv:2302.03027 (2023).

[26]

Georg Petschnigg, Richard Szeliski, Maneesh Agrawala, Michael Cohen, Hugues Hoppe, and Kentaro Toyama. 2004. Digital Photography with Flash and No-Flash Image Pairs. ACM Trans. Graph. 23, 3 (aug 2004), 664?672. https://doi.org/10.1145/1015706.1015777

[27]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In Int. Conf. Mach. Learn. PMLR, 8748?8763.

[28]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proc. Computer Vision and Pattern Recognition (CVPR). 10684?10695.

[29]

Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502 (2020).

[30]

C. Tomasi and R. Manduchi. 1998. Bilateral filtering for gray and color images. In Proc. Int. Conf. on Computer Vision (ICCV). 839?846. https://doi.org/10.1109/ICCV.1998.710815

[31]

Narek Tumanyan, Omer Bar-Tal, Shai Bagon, and Tali Dekel. 2022a. Splicing vit features for semantic appearance transfer. In Proc. Computer Vision and Pattern Recognition (CVPR). 10748?10757.

[32]

Narek Tumanyan, Michal Geyer, Shai Bagon, and Tali Dekel. 2022b. Plug-and-Play Diffusion Features for Text-Driven Image-to-Image Translation. arXiv preprint arXiv:2211.12572 (2022).

[33]

Tianyi Wei, Dongdong Chen, Wenbo Zhou, Jing Liao, Weiming Zhang, Lu Yuan, Gang Hua, and Nenghai Yu. 2021. A simple baseline for stylegan inversion. arXiv preprint arXiv:2104.07661 9 (2021), 10?12.

[34]

Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. In Proc. Int. Conf. on Computer Vision (ICCV).